[AI Study Notes 5] A Simple Fully Connected Neural Network, FCNN (MLP), in C


1. Recognizing Digits in Images [1]

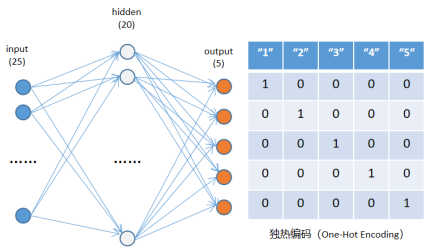

- Recognizing several different digits in images is a multi-class classification problem;

- Each image is a 5x5 pixel matrix showing one of the five digits 1 to 5;

- Network structure: an FCNN (fully connected neural network, MLP) with one hidden layer;

Each image has 5x5 pixels, so the network input has 25 nodes;

The output is a five-way classification, so it has 5 nodes;

The hidden layer can use 20 nodes, with sigmoid as the activation function.
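Written out as formulas, the structure above corresponds roughly to the computation below. This is a sketch: the symbols x (the 25 pixel values), W^{(0)} and W^{(1)} (the two weight matrices), h (hidden activations), o (sigmoid outputs) and p (normalized outputs) are notation introduced here for illustration. It assumes no bias terms and a plain sum-to-one normalization of the outputs, which is how the C code in the next section is written.

```latex
\begin{aligned}
\sigma(z) &= \frac{1}{1 + e^{-z}} \\
h_i &= \sigma\Big(\sum_{j=1}^{25} x_j \, W^{(0)}_{ji}\Big), \quad i = 1, \dots, 20 \\
o_k &= \sigma\Big(\sum_{i=1}^{20} h_i \, W^{(1)}_{ik}\Big), \quad k = 1, \dots, 5 \\
p_k &= \frac{o_k}{\sum_{m=1}^{5} o_m}
\end{aligned}
```

With these sizes the model has 25 x 20 + 20 x 5 = 600 trainable weights.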

2. Training the FCNN (MLP) Model in C [1]

#include "stdio.h"
#include "stdlib.h"
#include "math.h"

#define TRAIN_NUMS 5
#define TEST_NUMS 5
#define ROWS 5
#define COLS 5
#define INPUT_NUMS (ROWS * COLS)
#define HIDDEN_NUMS 20
#define OUTPUT_NUMS 5
#define LEARNING_RATE 0.01
#define EPOCHS 100000

// Training data: one flattened 5x5 image per row
float train_inputs[TRAIN_NUMS][ROWS * COLS] = {
    {0, 0, 1, 0, 0, 0, 1, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 1, 1, 1, 0}, // digit 1
    {1, 1, 1, 1, 1, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 1, 1, 1, 1, 1}, // digit 2
    {1, 1, 1, 1, 1, 0, 0, 0, 0, 1, 0, 1, 1, 1, 1, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1}, // digit 3
    {1, 0, 0, 1, 0, 1, 0, 0, 1, 0, 1, 1, 1, 1, 1, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0}, // digit 4
    {1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 1, 1, 1, 1, 1, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1}, // digit 5
};

float train_labels[5] = { 1, 2, 3, 4, 5 };

// Test data: the same five patterns in a different order
float test_inputs[TEST_NUMS][ROWS * COLS] = {
    {1, 1, 1, 1, 1, 0, 0, 0, 0, 1, 0, 1, 1, 1, 1, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1}, // digit 3
    {1, 1, 1, 1, 1, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 1, 1, 1, 1, 1}, // digit 2
    {1, 0, 0, 1, 0, 1, 0, 0, 1, 0, 1, 1, 1, 1, 1, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0}, // digit 4
    {0, 0, 1, 0, 0, 0, 1, 1, 0, 0, 0, 0, 1, 0, 0, 0, 0, 1, 0, 0, 0, 1, 1, 1, 0}, // digit 1
    {1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 1, 1, 1, 1, 1, 0, 0, 0, 0, 1, 1, 1, 1, 1, 1}, // digit 5
};

float test_labels[5] = { 3, 2, 4, 1, 5 };

float hiddens[HIDDEN_NUMS];               // hidden-layer activations
float outputs[OUTPUT_NUMS];               // normalized output values
float weights0[INPUT_NUMS][HIDDEN_NUMS];  // input --> hidden
float weights1[HIDDEN_NUMS][OUTPUT_NUMS]; // hidden --> output
float errs_output[OUTPUT_NUMS], errs_hidden[HIDDEN_NUMS];

// Sigmoid activation function
double sigmoid(double x) {
    return 1.0 / (1.0 + exp(-x));
}

// probnorm: normalize the outputs so that they sum to 1
void probnorm(float* outputs, int n) {
    float sum = 0.0;
    float maxprob = 0.0, probsum = 0.0;
    int maxid = 0;

    // Accumulate the total and remember the largest entry
    for (int i = 0; i < n; i++) {
        sum += outputs[i];
        if (outputs[i] > maxprob) {
            maxid = i;
            maxprob = outputs[i];
        }
    }

    for (int i = 0; i < n; i++) {
        outputs[i] = outputs[i] / sum;
    }

    // Recompute the largest entry as 1 minus the sum of the others,
    // so that rounding error does not break the sum-to-one property
    outputs[maxid] = 0.0;
    probsum = 0.0;
    for (int i = 0; i < n; i++) {
        probsum += outputs[i];
    }
    outputs[maxid] = 1.0 - probsum;
}

// Forward propagation: input --> hidden --> output, then normalization
void forward(float* inputs) {
    for (int i = 0; i < HIDDEN_NUMS; i++) {
        float sum = 0.0;
        for (int j = 0; j < INPUT_NUMS; j++) {
            sum += inputs[j] * weights0[j][i];
        }
        hiddens[i] = sigmoid(sum);
    }

    for (int i = 0; i < OUTPUT_NUMS; i++) {
        float sum = 0.0;
        for (int j = 0; j < HIDDEN_NUMS; j++) {
            sum += hiddens[j] * weights1[j][i];
        }
        outputs[i] = sigmoid(sum);
    }

    probnorm(outputs, OUTPUT_NUMS);
}

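// Backpropagation: compute the errors and update both weight matrices
void backward(float* inputs, float label) {
    // Output error: one-hot target (labels are 1-based) minus the output
    for (int i = 0; i < OUTPUT_NUMS; i++) {
        errs_output[i] = ((i == (int)(label-1)) ? 1.0 : 0.0) - outputs[i];
    }

    // Hidden error: output errors propagated back through weights1.
    // Note: this code does not multiply by the sigmoid derivative.
    for (int i = 0; i < HIDDEN_NUMS; i++) {
        errs_hidden[i] = 0.0f;
        for (int j = 0; j < OUTPUT_NUMS; j++) {
            errs_hidden[i] += errs_output[j]*weights1[i][j];
        }
    }

    // Update input --> hidden weights
    for (int i = 0; i < INPUT_NUMS; i++) {
        for (int j = 0; j < HIDDEN_NUMS; j++) {
            weights0[i][j] += LEARNING_RATE * errs_hidden[j] * inputs[i];
        }
    }

    // Update hidden --> output weights
    for (int i = 0; i < HIDDEN_NUMS; i++) {
        for (int j = 0; j < OUTPUT_NUMS; j++) {
            weights1[i][j] += LEARNING_RATE * errs_output[j] * hiddens[i];
        }
    }
}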

// Training function: one pass over all training samples
void train() {
    for (int i = 0; i < TRAIN_NUMS; i++) {
        forward(train_inputs[i]);
        backward(train_inputs[i], train_labels[i]);
    }
}

// Test function: print the outputs and prediction for each test sample
void test() {
    int num_correct = 0;

    for (int i = 0; i < TEST_NUMS; i++) {
        forward(test_inputs[i]);

        for (int k = 0; k < OUTPUT_NUMS; k++) {
            printf("o[%d]=%f ", k, outputs[k]);
        }
        printf("\n");

        // The prediction is the index of the largest output
        int prediction = 0;
        for (int j = 1; j < OUTPUT_NUMS; j++) {
            if (outputs[j] > outputs[prediction]) {
                prediction = j;
            }
        }

        if (prediction == (test_labels[i]-1)) {
            num_correct++;
        }
        printf("Prediction = %d\n", prediction+1);
    }

    double accuracy = (double)num_correct / TEST_NUMS;
    printf("Test Accuracy = %f\n", accuracy);
}

int main() {
    // Initialize the weights with random values in [-0.5, 0.5]
    for (int i = 0; i < INPUT_NUMS; i++) {
        for (int j = 0; j < HIDDEN_NUMS; j++) {
            weights0[i][j] = (double)rand() / RAND_MAX - 0.5;
        }
    }

    for (int i = 0; i < HIDDEN_NUMS; i++) {
        for (int j = 0; j < OUTPUT_NUMS; j++) {
            weights1[i][j] = (double)rand() / RAND_MAX - 0.5;
        }
    }

    // Train the model
    for (int epoch = 0; epoch < EPOCHS; epoch++) {
        train();
    }

    // Test the model
    test();

    return 0;
}
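The backward() function above passes the output errors back to the hidden layer without multiplying by the derivative of the sigmoid. Textbook backpropagation for a sigmoid hidden layer scales each hidden error by h * (1 - h). The sketch below shows that variant in isolation. The name hidden_deltas and the small array sizes are hypothetical, and this is not what the program above does; swapping it in would change the trained weights and therefore the printed outputs.

```c
#include <assert.h>

/* Hypothetical sizes for illustration; the program above uses 20 and 5. */
#define N_HIDDEN 2
#define N_OUTPUT 2

/*
 * Hidden-layer deltas for a sigmoid hidden layer.
 * errs_out[j] : error at output j (target minus output, as in backward())
 * w[i][j]     : weight from hidden unit i to output j
 * h[i]        : sigmoid activation of hidden unit i
 * deltas[i]   : back-propagated error times sigmoid'(z) = h * (1 - h)
 */
void hidden_deltas(const float *errs_out,
                   float w[N_HIDDEN][N_OUTPUT],
                   const float *h,
                   float *deltas) {
    assert(errs_out && w && h && deltas);
    for (int i = 0; i < N_HIDDEN; i++) {
        float back = 0.0f;
        for (int j = 0; j < N_OUTPUT; j++) {
            back += errs_out[j] * w[i][j];
        }
        deltas[i] = back * h[i] * (1.0f - h[i]);
    }
}
```

The factor h * (1 - h) is largest at h = 0.5 and shrinks toward 0 as a hidden unit saturates, so this variant makes smaller updates for saturated units than the simplified rule in the program.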

3. Inference Results

Compiling with gcc under Cygwin, then running training followed by inference, gives the following output:

o[0]=0.000215 o[1]=0.000933 o[2]=0.998017 o[3]=0.000001 o[4]=0.000834

Prediction = 3

o[0]=0.000177 o[1]=0.998267 o[2]=0.000925 o[3]=0.000219 o[4]=0.000412

Prediction = 2

o[0]=0.000034 o[1]=0.000301 o[2]=0.000000 o[3]=0.999175 o[4]=0.000489

Prediction = 4

o[0]=0.999439 o[1]=0.000209 o[2]=0.000182 o[3]=0.000005 o[4]=0.000164

Prediction = 1

o[0]=0.000077 o[1]=0.000362 o[2]=0.000774 o[3]=0.000398 o[4]=0.998389

Prediction = 5

Test Accuracy = 1.000000
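Each printed row sums to 1 (up to rounding in the printed digits) because forward() ends by calling probnorm, and each prediction is the index of the largest entry plus one. A minimal sketch of those two steps follows. The names normalize and argmax are hypothetical, and the sketch leaves out probnorm's extra step of recomputing the largest entry as 1 minus the sum of the others.

```c
#include <assert.h>

/* Divide every entry by the total so that the entries sum to 1.
 * Assumes the total is positive, which holds for sigmoid outputs. */
void normalize(float *v, int n) {
    float sum = 0.0f;
    for (int i = 0; i < n; i++) {
        sum += v[i];
    }
    assert(sum > 0.0f);
    for (int i = 0; i < n; i++) {
        v[i] /= sum;
    }
}

/* Return the index of the largest entry (the first one wins on ties). */
int argmax(const float *v, int n) {
    assert(n > 0);
    int best = 0;
    for (int i = 1; i < n; i++) {
        if (v[i] > v[best]) {
            best = i;
        }
    }
    return best;
}
```

For the input {1, 1, 2}, normalize produces {0.25, 0.25, 0.5} and argmax returns 2, which the program would report as Prediction = 3.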

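Accuracy is 100% because test_inputs contains exactly the same five patterns as train_inputs, only in a different order. If test_inputs were replaced with different 5x5 pixel matrices, accuracy would drop. Improving accuracy on unseen inputs requires more training data (train_inputs).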
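One way to try this is to flip a pixel or two of a training pattern and use the result as a test image. The helpers below are a hypothetical sketch (flip_pixel, count_diff and N_PIXELS are not part of the original program), assuming binary 0/1 pixels as in train_inputs.

```c
#include <assert.h>

#define N_PIXELS 25  /* 5x5 image, same size as INPUT_NUMS in the program */

/* Copy src into dst and invert the pixel at index idx (0 <-> 1). */
void flip_pixel(const float *src, float *dst, int idx) {
    assert(idx >= 0 && idx < N_PIXELS);
    for (int i = 0; i < N_PIXELS; i++) {
        dst[i] = src[i];
    }
    dst[idx] = (src[idx] > 0.5f) ? 0.0f : 1.0f;
}

/* Count how many pixels differ between two binary images. */
int count_diff(const float *a, const float *b) {
    int n = 0;
    for (int i = 0; i < N_PIXELS; i++) {
        if ((a[i] > 0.5f) != (b[i] > 0.5f)) {
            n++;
        }
    }
    return n;
}
```

For example, calling flip_pixel(train_inputs[0], test_inputs[3], 0) before test() would replace the fourth test image with a "1" that has one pixel changed, while test_labels[3] stays 1. Whether the network still classifies it correctly depends on the trained weights.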

References:

[1] septemc, "Digit Recognition with a Neural Network Implemented in C" (使用C語言實現神經網絡進行數字識別)

https://blog.csdn.net/Septem_ccn/article/details/132589351

Posted on 2025-01-05 14:16 by JasonQiuStar
