Implementing a Recurrent Neural Network (RNN) from Scratch

Code Overview

%matplotlib inline
import math
import torch
from torch import nn
from torch.nn import functional as F
from d2l import torch as d2l

batch_size, num_steps = 32, 35
train_iter, vocab = d2l.load_data_time_machine(batch_size, num_steps)

# One-hot encoding

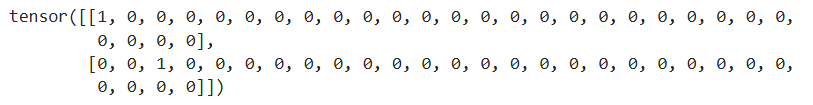

F.one_hot(torch.tensor([0, 2]), len(vocab))

# A minibatch is a 2-D tensor of shape (batch size, number of time steps)
X = torch.arange(10).reshape((2, 5))

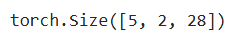

F.one_hot(X.T, 28).shape

# Initialize the model parameters
def get_params(vocab_size, num_hiddens, device):
    num_inputs = num_outputs = vocab_size

    def normal(shape):
        return torch.randn(size=shape, device=device) * 0.01

    # Hidden-layer parameters
    W_xh = normal((num_inputs, num_hiddens))
    # Without W_hh this would be a single-hidden-layer MLP
    W_hh = normal((num_hiddens, num_hiddens))
    b_h = torch.zeros(num_hiddens, device=device)
    # Output-layer parameters
    W_hq = normal((num_hiddens, num_outputs))
    b_q = torch.zeros(num_outputs, device=device)
    # Enable gradient tracking
    params = [W_xh, W_hh, b_h, W_hq, b_q]
    for param in params:
        param.requires_grad_(True)
    return params

# init_rnn_state returns the hidden state used at initialization
def init_rnn_state(batch_size, num_hiddens, device):
    return (torch.zeros((batch_size, num_hiddens), device=device), )

# rnn computes the hidden state and the output at every time step of a minibatch
def rnn(inputs, state, params):
    # Shape of inputs: (number of time steps, batch size, vocabulary size)
    W_xh, W_hh, b_h, W_hq, b_q = params
    H, = state
    outputs = []
    # Shape of X: (batch size, vocabulary size)
    for X in inputs:
        H = torch.tanh(torch.mm(X, W_xh) + torch.mm(H, W_hh) + b_h)
        Y = torch.mm(H, W_hq) + b_q
        outputs.append(Y)
    return torch.cat(outputs, dim=0), (H,)

# A class that wraps these functions and stores the parameters of the from-scratch RNN model
class RNNModelScratch:
    """A recurrent neural network model implemented from scratch."""
    def __init__(self, vocab_size, num_hiddens, device,
                 get_params, init_state, forward_fn):
        self.vocab_size, self.num_hiddens = vocab_size, num_hiddens
        self.params = get_params(vocab_size, num_hiddens, device)
        self.init_state, self.forward_fn = init_state, forward_fn

    def __call__(self, X, state):
        X = F.one_hot(X.T, self.vocab_size).type(torch.float32)
        return self.forward_fn(X, state, self.params)

    def begin_state(self, batch_size, device):
        return self.init_state(batch_size, self.num_hiddens, device)

# Check that the outputs have the expected shapes
num_hiddens = 512
net = RNNModelScratch(len(vocab), num_hiddens, d2l.try_gpu(), get_params,
                      init_rnn_state, rnn)
state = net.begin_state(X.shape[0], d2l.try_gpu())
Y, new_state = net(X.to(d2l.try_gpu()), state)

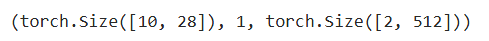

Y.shape, len(new_state), new_state[0].shape

# Define the prediction function that generates new characters after prefix
def predict_ch8(prefix, num_preds, net, vocab, device):
    """Generate new characters following prefix."""
    state = net.begin_state(batch_size=1, device=device)
    outputs = [vocab[prefix[0]]]
    get_input = lambda: torch.tensor([outputs[-1]], device=device).reshape((1, 1))
    for y in prefix[1:]:  # Warm-up period
        _, state = net(get_input(), state)
        outputs.append(vocab[y])
    for _ in range(num_preds):  # Predict num_preds steps
        y, state = net(get_input(), state)
        outputs.append(int(y.argmax(dim=1).reshape(1)))
    return ''.join([vocab.idx_to_token[i] for i in outputs])

predict_ch8('time traveller ', 10, net, vocab, d2l.try_gpu())

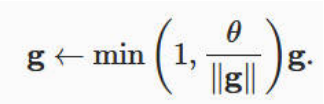

# Gradient clipping
def grad_clipping(net, theta):
    """Clip the gradients."""
    if isinstance(net, nn.Module):
        params = [p for p in net.parameters() if p.requires_grad]
    else:
        params = net.params
    norm = torch.sqrt(sum(torch.sum((p.grad ** 2)) for p in params))
    if norm > theta:
        for param in params:
            param.grad[:] *= theta / norm

# Train the model for one epoch
def train_epoch_ch8(net, train_iter, loss, updater, device, use_random_iter):
    """Train the network for one epoch (defined in Chapter 8)."""
    state, timer = None, d2l.Timer()
    metric = d2l.Accumulator(2)  # Sum of training loss, number of tokens
    for X, Y in train_iter:
        if state is None or use_random_iter:
            # Initialize state on the first iteration or when using random sampling
            state = net.begin_state(batch_size=X.shape[0], device=device)
        else:
            if isinstance(net, nn.Module) and not isinstance(state, tuple):
                # state is a tensor for nn.GRU
                state.detach_()
            else:
                # state is a tuple of tensors for nn.LSTM and for
                # our from-scratch model
                for s in state:
                    s.detach_()
        y = Y.T.reshape(-1)
        X, y = X.to(device), y.to(device)
        y_hat, state = net(X, state)
        l = loss(y_hat, y.long()).mean()
        if isinstance(updater, torch.optim.Optimizer):
            updater.zero_grad()
            l.backward()
            grad_clipping(net, 1)
            updater.step()
        else:
            l.backward()
            grad_clipping(net, 1)
            # The loss is already a mean, so pass batch_size=1
            updater(batch_size=1)
        metric.add(l * y.numel(), y.numel())
    return math.exp(metric[0] / metric[1]), metric[1] / timer.stop()

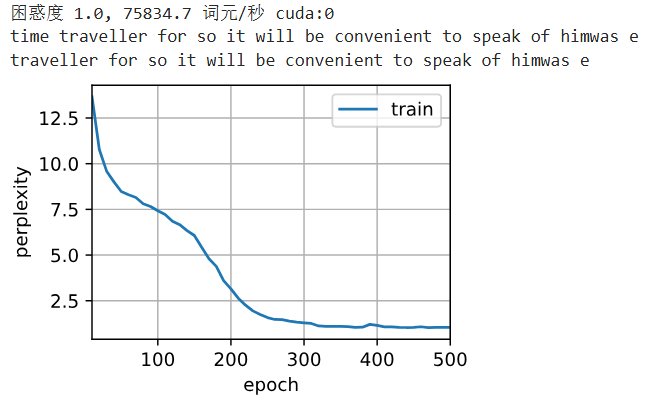

# The training function supports both the from-scratch model and models built with the high-level API
def train_ch8(net, train_iter, vocab, lr, num_epochs, device,
              use_random_iter=False):
    """Train the model (defined in Chapter 8)."""
    loss = nn.CrossEntropyLoss()
    animator = d2l.Animator(xlabel='epoch', ylabel='perplexity',
                            legend=['train'], xlim=[10, num_epochs])
    # Initialize the updater
    if isinstance(net, nn.Module):
        updater = torch.optim.SGD(net.parameters(), lr)
    else:
        updater = lambda batch_size: d2l.sgd(net.params, lr, batch_size)
    predict = lambda prefix: predict_ch8(prefix, 50, net, vocab, device)
    # Train and predict
    for epoch in range(num_epochs):
        ppl, speed = train_epoch_ch8(
            net, train_iter, loss, updater, device, use_random_iter)
        if (epoch + 1) % 10 == 0:
            print(predict('time traveller'))
            animator.add(epoch + 1, [ppl])
    print(f'perplexity {ppl:.1f}, {speed:.1f} tokens/sec on {str(device)}')
    print(predict('time traveller'))
    print(predict('traveller'))

# Now train the RNN model
num_epochs, lr = 500, 1
train_ch8(net, train_iter, vocab, lr, num_epochs, d2l.try_gpu())

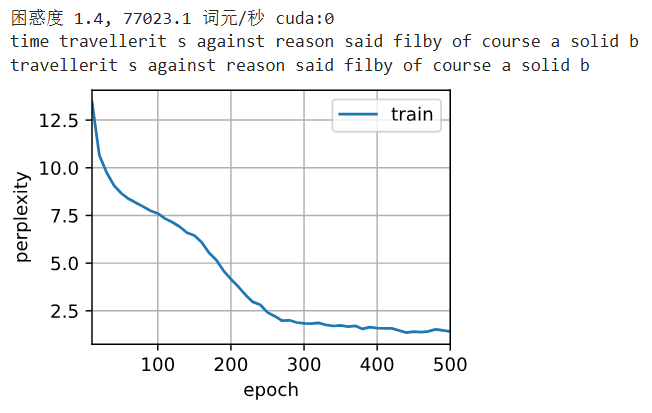

# Finally, check the results with random sampling
net = RNNModelScratch(len(vocab), num_hiddens, d2l.try_gpu(), get_params, init_rnn_state, rnn)
train_ch8(net, train_iter, vocab, lr, num_epochs, d2l.try_gpu(), use_random_iter=True)

Code Walkthrough

1. Initial setup and data preparation

%matplotlib inline
import math
import torch
from torch import nn
from torch.nn import functional as F
from d2l import torch as d2l

- What it does:
  - %matplotlib inline: renders matplotlib figures inline in a Jupyter notebook
  - import math: imports the standard math module
  - import torch: imports the PyTorch deep learning framework
  - from torch import nn: imports PyTorch's neural network module
  - from torch.nn import functional as F: imports PyTorch's functional API (stateless functions such as one_hot)
  - from d2l import torch as d2l: imports the companion utility library for Dive into Deep Learning

batch_size, num_steps = 32, 35
train_iter, vocab = d2l.load_data_time_machine(batch_size, num_steps)

- What it does:
  - Sets the batch size to 32 and the number of time steps to 35
  - Loads the Time Machine dataset:
    - d2l.load_data_time_machine() loads and preprocesses the data
    - It returns a data iterator (train_iter) and a vocabulary (vocab)
    - Vocabulary size: 28 tokens (26 lowercase letters, the space character, and the <unk> unknown token)

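2. Data preprocessing and representation

# One-hot encoding
F.one_hot(torch.tensor([0, 2]), len(vocab))

- What it does:
  - Demonstrates converting integer indices to one-hot vectors
  - Input: [0, 2] (the indices of two tokens)
  - Output: a tensor of shape (2, 28), one one-hot row per token
  - For example: index 0 → [1, 0, 0, ...], index 2 → [0, 0, 1, 0, ...]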

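# A minibatch is a 2-D tensor of shape (batch size, number of time steps)
X = torch.arange(10).reshape((2, 5))
F.one_hot(X.T, 28).shape

- What it does:
  - Creates example data: 2 sequences, each with 5 time steps
  - Transposes the data from (2, 5) to (5, 2)
  - Applies one-hot encoding, giving shape (5, 2, 28)
  - That is: 5 time steps, 2 sequences, and one 28-dimensional one-hot vector per token. Putting time on the first axis lets the forward loop iterate over time steps directly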
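
To make the transposed layout concrete, here is a small sketch using the same toy X. The vocabulary size of 28 is taken from the walkthrough above; the variable names are only illustrative.

```python
import torch
from torch.nn import functional as F

vocab_size = 28  # assumed, matching the vocabulary size quoted above
X = torch.arange(10).reshape((2, 5))    # (batch size, time steps)
X_onehot = F.one_hot(X.T, vocab_size)   # (time steps, batch size, vocab size)

# Iterating over the first axis yields one (batch size, vocab size) slice per time step.
step_shapes = [tuple(x_t.shape) for x_t in X_onehot]
# Recover the token indices fed to the model at time step 0.
first_step_tokens = X_onehot[0].argmax(dim=1).tolist()
print(tuple(X_onehot.shape), step_shapes[0], first_step_tokens)
```

The slice at time step 0 holds the first token of each sequence (0 and 5 here), which is the form the forward loop consumes at each step.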

3. Model parameter initialization

# Initialize the model parameters
def get_params(vocab_size, num_hiddens, device):
    num_inputs = num_outputs = vocab_size

    def normal(shape):
        return torch.randn(size=shape, device=device) * 0.01

    # Hidden-layer parameters
    W_xh = normal((num_inputs, num_hiddens))
    # Without W_hh this would be a single-hidden-layer MLP
    W_hh = normal((num_hiddens, num_hiddens))
    b_h = torch.zeros(num_hiddens, device=device)
    # Output-layer parameters
    W_hq = normal((num_hiddens, num_outputs))
    b_q = torch.zeros(num_outputs, device=device)
    # Enable gradient tracking
    params = [W_xh, W_hh, b_h, W_hq, b_q]
    for param in params:
        param.requires_grad_(True)
    return params

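- What it does:
  - Initializes the five parameters of the RNN:
    - W_xh: input-to-hidden weights (28×512)
    - W_hh: hidden-to-hidden weights (512×512), the term that makes the network recurrent
    - b_h: hidden-layer bias (512,)
    - W_hq: hidden-to-output weights (512×28)
    - b_q: output-layer bias (28,)
  - Weights are drawn from a normal distribution with standard deviation 0.01
  - Biases are initialized to 0
  - Gradient tracking is enabled on every parameter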
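
As a quick sanity check on these shapes, the sketch below counts the scalar parameters for a vocabulary of 28 and 512 hidden units. It uses plain Python only; the helper numel is a hypothetical name introduced for this illustration.

```python
vocab_size, num_hiddens = 28, 512  # values quoted in this walkthrough

shapes = {
    "W_xh": (vocab_size, num_hiddens),
    "W_hh": (num_hiddens, num_hiddens),
    "b_h": (num_hiddens,),
    "W_hq": (num_hiddens, vocab_size),
    "b_q": (vocab_size,),
}

def numel(shape):
    """Number of scalar entries in a tensor of the given shape."""
    count = 1
    for dim in shape:
        count *= dim
    return count

counts = {name: numel(shape) for name, shape in shapes.items()}
total = sum(counts.values())
print(counts, total)
```

Under these sizes W_hh accounts for most of the parameters, since it grows with the square of the number of hidden units.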

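4. Hidden state initialization

# init_rnn_state returns the hidden state used at initialization
def init_rnn_state(batch_size, num_hiddens, device):
    return (torch.zeros((batch_size, num_hiddens), device=device), )

- What it does:
  - Creates the initial hidden state (H0)
  - Shape: (batch_size, num_hiddens), for example (32, 512) for a training minibatch
  - All entries are initialized to 0
  - Returns a tuple, so the same interface also works for models such as LSTM whose state consists of several tensors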

5. RNN forward pass

# rnn computes the hidden state and the output at every time step of a minibatch
def rnn(inputs, state, params):
    # Shape of inputs: (number of time steps, batch size, vocabulary size)
    W_xh, W_hh, b_h, W_hq, b_q = params
    H, = state
    outputs = []
    # Shape of X: (batch size, vocabulary size)
    for X in inputs:
        H = torch.tanh(torch.mm(X, W_xh) + torch.mm(H, W_hh) + b_h)
        Y = torch.mm(H, W_hq) + b_q
        outputs.append(Y)
    return torch.cat(outputs, dim=0), (H,)

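- What it does:
  - Implements the core RNN computation
  - Iterates over the time steps:
    - Computes the new hidden state: H = tanh(X·W_xh + H·W_hh + b_h)
    - Computes the output at that step: Y = H·W_hq + b_q
  - Concatenates the outputs of all time steps along dimension 0
  - Returns the concatenated outputs and the final hidden state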
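
The recurrence can be traced on toy sizes. The sketch below is a standalone illustration with made-up dimensions (3 time steps, batch of 2, vocabulary of 4, 5 hidden units) and random stand-in inputs; it is not the training configuration used in this post.

```python
import torch

torch.manual_seed(0)
num_steps, batch_size, vocab_size, num_hiddens = 3, 2, 4, 5  # toy sizes (assumed)

W_xh = torch.randn(vocab_size, num_hiddens) * 0.01
W_hh = torch.randn(num_hiddens, num_hiddens) * 0.01
b_h = torch.zeros(num_hiddens)
W_hq = torch.randn(num_hiddens, vocab_size) * 0.01
b_q = torch.zeros(vocab_size)

inputs = torch.rand(num_steps, batch_size, vocab_size)  # stand-in for one-hot inputs
H = torch.zeros(batch_size, num_hiddens)

outputs = []
for X in inputs:                      # X: (batch_size, vocab_size)
    H = torch.tanh(X @ W_xh + H @ W_hh + b_h)
    outputs.append(H @ W_hq + b_q)    # (batch_size, vocab_size)
Y = torch.cat(outputs, dim=0)         # (num_steps * batch_size, vocab_size)

print(Y.shape, H.shape)
```

Rows of Y are ordered time step by time step (rows 0-1 belong to step 0, rows 2-3 to step 1, and so on), which is why the training code flattens the labels with Y.T.reshape(-1) before computing the loss.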

6. RNN model wrapper

# A class that wraps these functions and stores the parameters of the from-scratch RNN model
class RNNModelScratch:
    """A recurrent neural network model implemented from scratch."""
    def __init__(self, vocab_size, num_hiddens, device,
                 get_params, init_state, forward_fn):
        self.vocab_size, self.num_hiddens = vocab_size, num_hiddens
        self.params = get_params(vocab_size, num_hiddens, device)
        self.init_state, self.forward_fn = init_state, forward_fn

    def __call__(self, X, state):
        X = F.one_hot(X.T, self.vocab_size).type(torch.float32)
        return self.forward_fn(X, state, self.params)

    def begin_state(self, batch_size, device):
        return self.init_state(batch_size, self.num_hiddens, device)

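- What it does:
  - Wraps the RNN model in a callable class
  - __init__: stores the sizes, creates the parameters, and keeps references to the state-initialization and forward functions
  - __call__:
    - Converts the input to one-hot encoding
    - Calls the forward function
  - begin_state: creates the initial hidden state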

7. Model verification and text generation

# Check that the outputs have the expected shapes
num_hiddens = 512
net = RNNModelScratch(len(vocab), num_hiddens, d2l.try_gpu(), get_params,
                      init_rnn_state, rnn)
state = net.begin_state(X.shape[0], d2l.try_gpu())

- What it does:
  - Instantiates the RNN model
  - Creates the initial hidden state

Y, new_state = net(X.to(d2l.try_gpu()), state)
Y.shape, len(new_state), new_state[0].shape

- What it does:
  - Runs a forward pass
  - Checks the output shape: (time steps × batch size, vocabulary size) = (10, 28)
  - Checks the hidden state shape: (batch size, number of hidden units) = (2, 512)

# Define the prediction function that generates new characters after prefix
def predict_ch8(prefix, num_preds, net, vocab, device):
    """Generate new characters following prefix."""
    state = net.begin_state(batch_size=1, device=device)
    outputs = [vocab[prefix[0]]]
    get_input = lambda: torch.tensor([outputs[-1]], device=device).reshape((1, 1))
    for y in prefix[1:]:  # Warm-up period
        _, state = net(get_input(), state)
        outputs.append(vocab[y])
    for _ in range(num_preds):  # Predict num_preds steps
        y, state = net(get_input(), state)
        outputs.append(int(y.argmax(dim=1).reshape(1)))
    return ''.join([vocab.idx_to_token[i] for i in outputs])

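- What it does:
  - Initializes the hidden state
  - Warm-up: feeds the prefix characters through the model to build up the state, discarding the outputs
  - Prediction: at each step, takes the highest-scoring character and feeds it back as the next input
  - Converts the collected indices back into a string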
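
The warm-up and prediction phases can be illustrated without a trained network. In the sketch below, toy_net is a hypothetical stand-in that always favors the next letter in a fixed four-letter cycle; only the control flow mirrors predict_ch8.

```python
# Hypothetical stand-in for a trained model: the "state" is just the previous
# token index, and the scores favor the next letter in a fixed cycle.
idx_to_token = ['a', 'b', 'c', 'd']
token_to_idx = {tok: i for i, tok in enumerate(idx_to_token)}

def toy_net(token_idx, state):
    """Return (scores over the vocabulary, new state) for one input token."""
    scores = [0.0] * len(idx_to_token)
    scores[(token_idx + 1) % len(idx_to_token)] = 1.0
    return scores, token_idx

def toy_predict(prefix, num_preds):
    state = None
    outputs = [token_to_idx[prefix[0]]]
    for ch in prefix[1:]:                   # warm-up: outputs are discarded
        _, state = toy_net(outputs[-1], state)
        outputs.append(token_to_idx[ch])
    for _ in range(num_preds):              # prediction: greedy argmax
        scores, state = toy_net(outputs[-1], state)
        outputs.append(max(range(len(scores)), key=scores.__getitem__))
    return ''.join(idx_to_token[i] for i in outputs)

generated = toy_predict('ab', 3)
print(generated)
```

During warm-up the true prefix characters are appended regardless of what the model scores, so the prefix is always reproduced verbatim; only the characters after it come from the argmax.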

8. Training preparation: gradient clipping

# Gradient clipping
def grad_clipping(net, theta):
    """Clip the gradients."""
    if isinstance(net, nn.Module):
        params = [p for p in net.parameters() if p.requires_grad]
    else:
        params = net.params
    norm = torch.sqrt(sum(torch.sum((p.grad ** 2)) for p in params))
    if norm > theta:
        for param in params:
            param.grad[:] *= theta / norm

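- What it does:
  - Guards against exploding gradients
  - Computes the L2 norm over the gradients of all parameters taken together
  - If the norm exceeds the threshold theta (1 in this training loop), rescales every gradient by theta / norm so that the joint norm equals theta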
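
A worked example may help here. The sketch below applies the same rescaling rule to two toy parameters whose gradients are 3 and 4, so the joint norm is 5; clip_by_global_norm is an illustrative name, not a function from this post or from d2l.

```python
import torch

def clip_by_global_norm(params, theta):
    """Rescale gradients in place so their joint L2 norm is at most theta."""
    norm = torch.sqrt(sum(torch.sum(p.grad ** 2) for p in params))
    if norm > theta:
        for p in params:
            p.grad[:] *= theta / norm
    return norm

# Two toy parameters whose gradients are 3 and 4, so the joint norm is 5.
w1 = torch.tensor([1.0], requires_grad=True)
w2 = torch.tensor([1.0], requires_grad=True)
(3 * w1 + 4 * w2).sum().backward()

norm_before = clip_by_global_norm([w1, w2], theta=1.0)
norm_after = torch.sqrt(w1.grad ** 2 + w2.grad ** 2)
print(norm_before.item(), w1.grad.item(), w2.grad.item(), norm_after.item())
```

The direction of the gradient is preserved (3:4 becomes 0.6:0.8) and only its length changes. For nn.Module models, PyTorch also provides torch.nn.utils.clip_grad_norm_, which performs a comparable global-norm clip.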

9. Training loop implementation

# Train the model for one epoch
def train_epoch_ch8(net, train_iter, loss, updater, device, use_random_iter):
    """Train the network for one epoch (defined in Chapter 8)."""
    state, timer = None, d2l.Timer()
    metric = d2l.Accumulator(2)  # Sum of training loss, number of tokens
    for X, Y in train_iter:
        if state is None or use_random_iter:
            # Initialize state on the first iteration or when using random sampling
            state = net.begin_state(batch_size=X.shape[0], device=device)
        else:
            if isinstance(net, nn.Module) and not isinstance(state, tuple):
                # state is a tensor for nn.GRU
                state.detach_()
            else:
                # state is a tuple of tensors for nn.LSTM and for
                # our from-scratch model
                for s in state:
                    s.detach_()
        y = Y.T.reshape(-1)
        X, y = X.to(device), y.to(device)
        y_hat, state = net(X, state)
        l = loss(y_hat, y.long()).mean()
        if isinstance(updater, torch.optim.Optimizer):
            updater.zero_grad()
            l.backward()
            grad_clipping(net, 1)
            updater.step()
        else:
            l.backward()
            grad_clipping(net, 1)
            # The loss is already a mean, so pass batch_size=1
            updater(batch_size=1)
        metric.add(l * y.numel(), y.numel())
    return math.exp(metric[0] / metric[1]), metric[1] / timer.stop()

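- What it does:
  - Manages the hidden state (initializes it, or detaches it from the previous minibatch's computation graph)
  - Prepares the data (flattens the labels and moves the tensors to the device)
  - Forward pass
  - Computes the loss (cross-entropy)
  - Backward pass
  - Gradient clipping
  - Parameter update
  - Returns the perplexity and the training speed in tokens per second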
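
The returned perplexity is the exponential of the average cross-entropy per token, math.exp(metric[0] / metric[1]). A small standard-library sketch, with a hypothetical helper named perplexity, shows the two reference points that make the number interpretable:

```python
import math

def perplexity(target_probs):
    """exp of the mean negative log-probability assigned to the true tokens."""
    mean_nll = -sum(math.log(p) for p in target_probs) / len(target_probs)
    return math.exp(mean_nll)

vocab_size = 28
uniform = perplexity([1 / vocab_size] * 10)  # model that guesses uniformly
perfect = perplexity([1.0] * 10)             # model that is always certain and right
print(uniform, perfect)
```

A model that guesses uniformly over 28 tokens has a perplexity of about 28, and a perfect model has a perplexity of 1, so values during training should move from roughly the former toward the latter.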

# The training function supports both the from-scratch model and models built with the high-level API
def train_ch8(net, train_iter, vocab, lr, num_epochs, device,
              use_random_iter=False):
    """Train the model (defined in Chapter 8)."""
    loss = nn.CrossEntropyLoss()
    animator = d2l.Animator(xlabel='epoch', ylabel='perplexity',
                            legend=['train'], xlim=[10, num_epochs])
    # Initialize the updater
    if isinstance(net, nn.Module):
        updater = torch.optim.SGD(net.parameters(), lr)
    else:
        updater = lambda batch_size: d2l.sgd(net.params, lr, batch_size)
    predict = lambda prefix: predict_ch8(prefix, 50, net, vocab, device)
    # Train and predict
    for epoch in range(num_epochs):
        ppl, speed = train_epoch_ch8(
            net, train_iter, loss, updater, device, use_random_iter)
        if (epoch + 1) % 10 == 0:
            print(predict('time traveller'))
            animator.add(epoch + 1, [ppl])
    print(f'perplexity {ppl:.1f}, {speed:.1f} tokens/sec on {str(device)}')
    print(predict('time traveller'))
    print(predict('traveller'))

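- What it does:
  - Sets up the loss function and the plot
  - Creates the updater: torch.optim.SGD for an nn.Module, otherwise a wrapper around d2l.sgd
  - Generates sample text every 10 epochs
  - Plots the perplexity curve
  - Prints the final training results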
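
For the from-scratch model the updater wraps d2l.sgd and is called with batch_size=1. The sketch below shows why, under the assumption that d2l.sgd divides the gradient by batch_size before the step; sgd_sketch is an illustrative re-implementation, not the library code itself.

```python
import torch

def sgd_sketch(params, lr, batch_size):
    """Minibatch SGD step; assumed to mirror what d2l.sgd does."""
    with torch.no_grad():
        for p in params:
            p -= lr * p.grad / batch_size
            p.grad.zero_()

w = torch.tensor([1.0, 2.0], requires_grad=True)
loss = (w ** 2).mean()   # already averaged, like l in train_epoch_ch8
loss.backward()          # gradient equals w itself: [1.0, 2.0]
sgd_sketch([w], lr=0.1, batch_size=1)
print(w.tolist(), w.grad.tolist())
```

Because the loss is already a mean over tokens, dividing by the real batch size again would shrink the step; passing batch_size=1 keeps the effective learning rate equal to lr.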

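10. Running the training

# Train the RNN model
num_epochs, lr = 500, 1

- What it does: sets the number of epochs (500) and the learning rate (1)

train_ch8(net, train_iter, vocab, lr, num_epochs, d2l.try_gpu())

- What it does: runs training in sequential mode (the hidden state is carried over between minibatches)

# Finally, check the results with random sampling
net = RNNModelScratch(len(vocab), num_hiddens, d2l.try_gpu(), get_params, init_rnn_state, rnn)

- What it does: re-initializes the model so that both runs start from fresh parameters

train_ch8(net, train_iter, vocab, lr, num_epochs, d2l.try_gpu(), use_random_iter=True)

- What it does: runs training in random-sampling mode (the hidden state is re-initialized for every minibatch)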
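
The two modes differ in how the hidden state is handled between minibatches: random sampling starts from a fresh state, while sequential mode carries the state over and calls detach_() on it. The sketch below uses a toy scalar weight (an assumption for illustration) to show what detaching does to the computation graph.

```python
import torch

w = torch.tensor(0.5, requires_grad=True)  # toy recurrent weight (assumed)
state = torch.tensor(1.0)

# Minibatch 1: the new state depends on w through the graph.
state = torch.tanh(w * state)
carried_has_graph = state.grad_fn is not None

# Sequential mode: keep the value, drop the history before the next minibatch.
state.detach_()
detached_has_graph = state.grad_fn is not None

# Minibatch 2: backward now stops at the detached state.
loss = torch.tanh(w * state)
loss.backward()
print(carried_has_graph, detached_has_graph, w.grad is not None)
```

Detaching keeps the numerical value of the state, so context still flows forward, while the backward pass is limited to the current minibatch instead of reaching back through every earlier one.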

Summary of the Execution Flow

1. Data flow

- Text data → character indices → one-hot encoding
- Input shape: (batch size, number of time steps) → (number of time steps, batch size, vocabulary size)

2. Model flow

input X → one-hot encoding → RNN cell → hidden state H → output Y
                                ↑             ↓
                                └─────[H]─────┘

3. Training flow

for epoch in range(500):
    initialize the hidden state
    for batch in data iterator:
        forward pass → compute loss → backward pass → gradient clipping → parameter update
    every 10 epochs: generate text and plot the perplexity

4. Text generation flow

given a prefix → warm up the state → generate characters in a loop → join the result
