19. Cloud-Native Distributed Storage: CubeFS

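1. Introduction to Distributed Storage

1.1 Key Features of Distributed Storage

- Near-unlimited capacity scaling

- Fault tolerance and data redundancy

- Multi-datacenter and multi-region deployment

- Load balancing and parallel processing

- Access control and multi-user support

- Support for multiple file storage types

- Designed to run on commodity hardware

1.2 Why Run a Storage Platform on K8s

Everything can be containerized:

- Simplified deployment and management

- Automated operations

- One-click dynamic scaling

- Self-healing and high availability

- Integration with the cloud-native ecosystem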

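1.3 Introducing CubeFS, a Cloud-Native Storage Platform

CubeFS is a new-generation cloud-native storage product and a graduated open-source project hosted by the Cloud Native Computing Foundation (CNCF). It is compatible with access protocols such as S3, POSIX, and HDFS, and supports two storage engines: multi-replica and erasure coding. It offers multi-tenancy, multi-AZ deployment, and cross-region replication, and is widely used for big data, AI, container platforms, databases, storage-compute separation for middleware, data sharing, and data protection.

CubeFS features:

- Multi-protocol: S3, POSIX, and HDFS

- Dual-engine: multi-replica and erasure coding

- Multi-tenant: tenant isolation and permission assignment

- Scalable: every module scales horizontally, up to PB or EB scale

- High performance: multi-level caching and several high-performance replication protocols

- Cloud-native: ships with a CSI plugin for one-step Kubernetes integration

- Multi-scenario: big data analytics, machine learning, deep learning training, shared storage, object storage, database middleware, and more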

1.4 Deployment Architectures for a Distributed Storage Platform

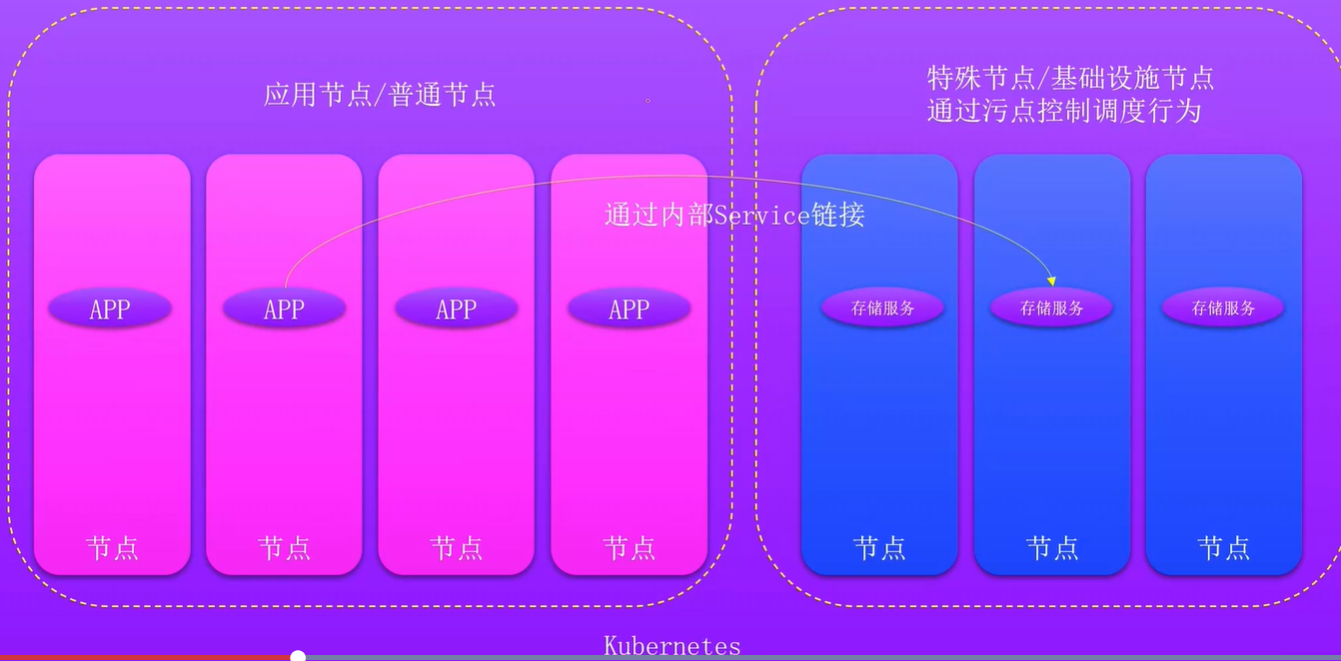

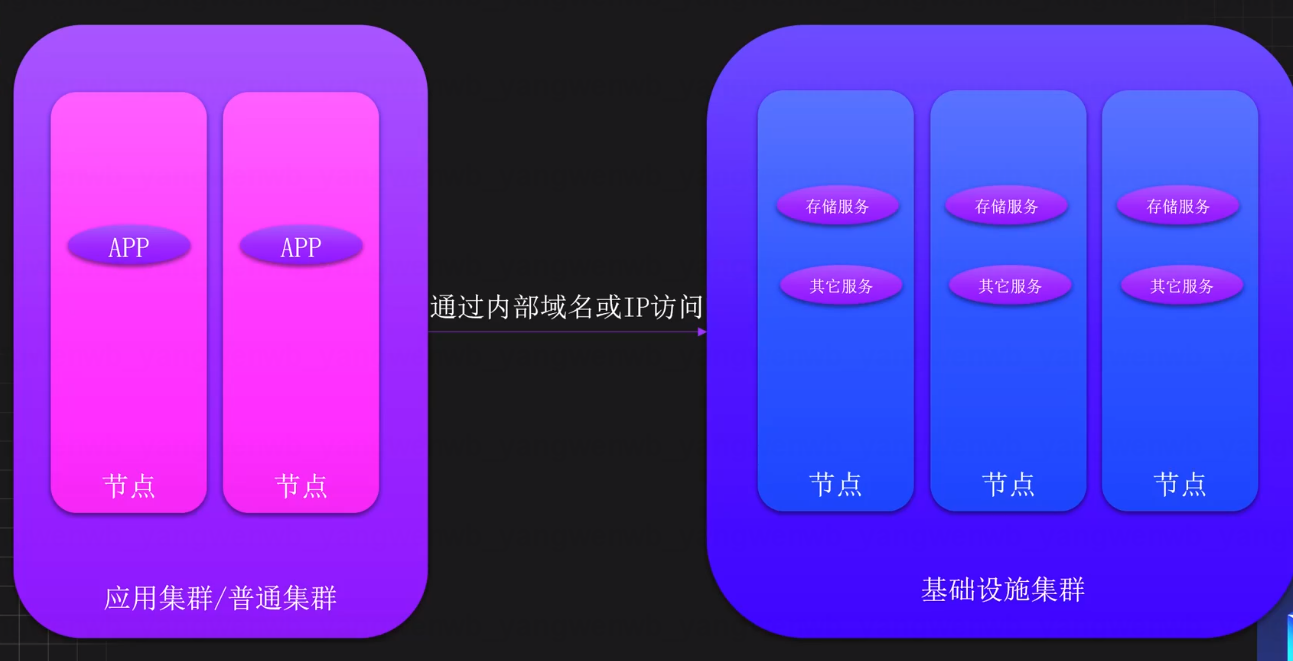

1.4.1 Mixed Deployment

1.4.2 Standalone Deployment: Infrastructure Cluster

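1.5 Resource Allocation Recommendations

Rule for sizing total MetaNode memory: the metadata of each file takes roughly 2 KB to 4 KB.

Estimating from the file count

Assume the expected number of files is 1 billion.

At 2 KB per file, the memory needed is 2 billion KB.

Converted to GB: 2000000000 / 1024 / 1024 ≈ 1907 GB (roughly 2 TB)

Estimating from the data volume

Assume the cluster holds 10 PB in total: 10 PB = 10240 TB = 10737418240 MB

With the default shard size of 8 MB, that is about 10737418240 / 8 = 1342177280 files.

At 2 KB per file, the memory needed is 2684354560 KB = 2560 GB.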
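The two estimates above can be reproduced with plain shell arithmetic. This is a minimal sketch: the function names are made up for illustration, and it assumes the lower bound of 2 KB of metadata per file and an 8 MB shard size, as in the text.

```shell
#!/usr/bin/env bash
# Estimate MetaNode memory in GB (1 GB = 1024 * 1024 KB) from a file count.
# kb_per_file is the assumed metadata footprint per file (2 to 4 KB in the text).
estimate_meta_mem_gb() {
  local files=$1 kb_per_file=${2:-2}
  # Integer division, so the result is rounded down.
  echo $(( files * kb_per_file / 1024 / 1024 ))
}

# Estimate how many files a data volume given in PB corresponds to,
# assuming every file is exactly one shard of shard_mb megabytes.
estimate_file_count() {
  local pb=$1 shard_mb=${2:-8}
  echo $(( pb * 1024 * 1024 * 1024 / shard_mb ))
}

estimate_meta_mem_gb 1000000000 2                      # by file count: 1 billion files
estimate_meta_mem_gb "$(estimate_file_count 10)" 2     # by data volume: 10 PB
```

Passing 4 as the second argument gives the upper bound of the range, which is the safer figure when sizing real hardware.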

1.6 Hardware Design

Server hardware design: 1 PB of storage paired with 256 GB of memory

2. Installing CubeFS

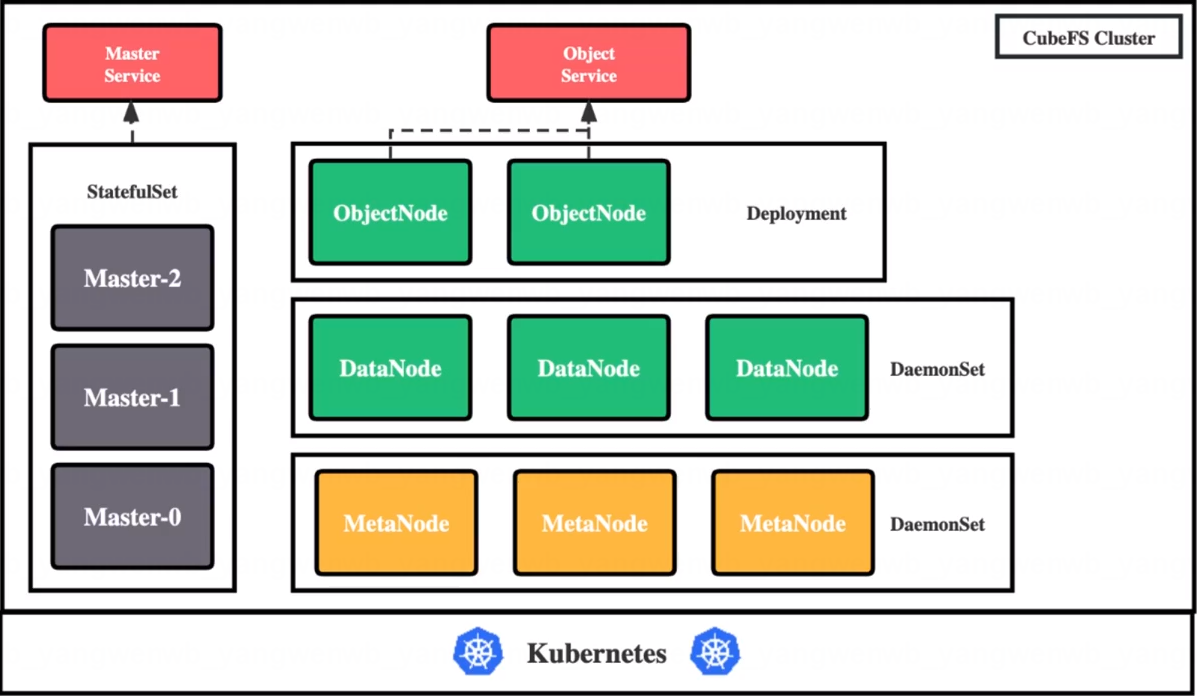

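2.1 CubeFS Deployment Architecture

CubeFS currently consists of these four components:

- Master: the resource management node, which maintains the metadata of the whole cluster; deployed as a StatefulSet

- DataNode: the data storage node, which needs many mounted disks and stores the actual file data; deployed as a DaemonSet

- MetaNode: the metadata node, which stores all file metadata; deployed as a DaemonSet

- ObjectNode: translates the S3 protocol to provide object storage; a stateless service deployed as a Deployment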

2.2 Cluster Planning

| Hostname | IP address | OS | Resources | Data disks |
|---|---|---|---|---|
| k8s-master01 | 192.168.200.50 | Rocky 9.4 | 4 cores, 8 GB | 2 × 40 GB |
| k8s-node01 | 192.168.200.51 | Rocky 9.4 | 4 cores, 8 GB | 2 × 40 GB |
| k8s-node02 | 192.168.200.52 | Rocky 9.4 | 4 cores, 8 GB | 2 × 40 GB |

2.3 Label the nodes to mark which services each one runs:

# Master nodes: at least three, preferably an odd number

kubectl label node <nodename> component.cubefs.io/master=enabled

# MetaNode metadata nodes: at least 3, odd or even

kubectl label node <nodename> component.cubefs.io/metanode=enabled

# DataNode data nodes: at least 3, odd or even

kubectl label node <nodename> component.cubefs.io/datanode=enabled

# ObjectNode object storage nodes: label as needed; skip this component if you do not need object storage

kubectl label node <nodename> component.cubefs.io/objectnode=enabled

The K8s master node is included here for demonstration only.

# In production, label specific nodes instead

[root@k8s-master01 ~]# kubectl label node component.cubefs.io/master=enabled --all

[root@k8s-master01 ~]# kubectl label node component.cubefs.io/metanode=enabled --all

[root@k8s-master01 ~]# kubectl label node component.cubefs.io/datanode=enabled --all

[root@k8s-master01 ~]# kubectl label node component.cubefs.io/objectnode=enabled --all
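For production, where only selected nodes should carry each label, the commands can be generated from an explicit node list and reviewed before running. The sketch below only prints the commands; `label_cmds` is a hypothetical helper name, and the node names are the ones from the cluster plan.

```shell
#!/usr/bin/env bash
# Print (rather than run) one label command per node for the given role.
# Review the output, then pipe it to `sh` to apply it.
label_cmds() {
  local role=$1; shift
  local node
  for node in "$@"; do
    echo "kubectl label node ${node} component.cubefs.io/${role}=enabled"
  done
}

label_cmds master k8s-master01 k8s-node01 k8s-node02
label_cmds datanode k8s-node01 k8s-node02
```

Printing first keeps the step reversible: nothing touches the cluster until the generated lines are executed.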

2.4 Data Disk Configuration

On every node labeled component.cubefs.io/datanode=enabled, initialize the data disks.

First attach a new disk, then check it with fdisk -l:

[root@k8s-master01 ~]# fdisk -l | grep /dev/nvme

Disk /dev/nvme0n1: 50 GiB, 53687091200 bytes, 104857600 sectors

/dev/nvme0n1p1 * 2048 2099199 2097152 1G 83 Linux

/dev/nvme0n1p2 2099200 104857599 102758400 49G 8e Linux LVM

Disk /dev/nvme0n2: 40 GiB, 42949672960 bytes, 83886080 sectors

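Format and mount each disk (on all three nodes):

# Format the disk

[root@k8s-master01 ~]# mkfs.xfs -f /dev/nvme0n2

# Create the mount directory. If a machine has several data disks, format and mount each one the same way, naming the mount directories data0, data1, ..., data999 in order

[root@k8s-master01 ~]# mkdir /data0

# Mount the disk

[root@k8s-master01 ~]# mount /dev/nvme0n2 /data0

# Mount automatically at boot (note that the disk UUID differs on each of the three nodes):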

[root@k8s-master01 ~]# blkid /dev/nvme0n2

/dev/nvme0n2: UUID="56b90331-8053-403b-9393-597811b81310" TYPE="xfs"

[root@k8s-master01 ~]# echo "UUID=56b90331-8053-403b-9393-597811b81310 /data0 xfs defaults 0 0" >>/etc/fstab

[root@k8s-master01 ~]# mount -a
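Because the `echo ... >> /etc/fstab` step is repeated on every node and for every disk, it is easy to append the same entry twice. A possible guard, sketched here with a hypothetical helper `add_fstab_entry`, appends the line only when the mount point is not listed yet. The demo writes to a temporary file so it can be tried without touching the real /etc/fstab.

```shell
#!/usr/bin/env bash
# Append an xfs fstab entry only if the mount point is not already present.
# The third argument defaults to /etc/fstab; pass another path to test safely.
add_fstab_entry() {
  local uuid=$1 mountpoint=$2 fstab_file=${3:-/etc/fstab}
  local entry="UUID=${uuid} ${mountpoint} xfs defaults 0 0"
  # Look for a non-comment line whose second field is the mount point.
  if awk -v mp="$mountpoint" '$1 !~ /^#/ && $2 == mp { found = 1 } END { exit !found }' "$fstab_file" 2>/dev/null; then
    echo "skip: ${mountpoint} already in ${fstab_file}"
  else
    echo "$entry" >> "$fstab_file"
    echo "added: ${entry}"
  fi
}

tmp=$(mktemp)
add_fstab_entry 56b90331-8053-403b-9393-597811b81310 /data0 "$tmp"
add_fstab_entry 56b90331-8053-403b-9393-597811b81310 /data0 "$tmp"   # second call is a no-op
rm -f "$tmp"
```

On a real node the UUID would come from `blkid`, as shown above, and `mount -a` would still be run afterwards to validate the entry.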

2.5 Deploying CubeFS

Download the installation files

[root@k8s-master01 ~]# git clone https://gitee.com/dukuan/cubefs-helm.git

Adjust the installation settings

[root@k8s-master01 ~]# cd cubefs-helm/cubefs/

[root@k8s-master01 cubefs]# vim values.yaml

[root@k8s-master01 cubefs]# sed -n "2,15p;21,24p;27,31p;33,35p;50p;52p;56,57p;70,77p;79p;87p;93,100p;107p;123,124p;127,134p;153p;156p;166p" values.yaml

component:

  master: true

  datanode: true

  metanode: true

  objectnode: true

  client: false

  csi: false

  monitor: false

  ingress: false

  blobstore_clustermgr: false

  blobstore_blobnode: false

  blobstore_proxy: false

  blobstore_scheduler: false

  blobstore_access: false

image:

  server: crpi-q1nb2n896zwtcdts.cn-beijing.personal.cr.aliyuncs.com/ywb01/cfs-server:v3.5.0

  client: crpi-q1nb2n896zwtcdts.cn-beijing.personal.cr.aliyuncs.com/ywb01/cfs-client:v3.5.0

  blobstore: crpi-q1nb2n896zwtcdts.cn-beijing.personal.cr.aliyuncs.com/ywb01/blobstore:v3.4.0

  csi_driver: crpi-q1nb2n896zwtcdts.cn-beijing.personal.cr.aliyuncs.com/ywb01/cfs-csi-driver:v3.5.0

  csi_provisioner: crpi-q1nb2n896zwtcdts.cn-beijing.personal.cr.aliyuncs.com/ywb01/csi-provisioner:v2.2.2

  csi_attacher: crpi-q1nb2n896zwtcdts.cn-beijing.personal.cr.aliyuncs.com/ywb01/sig-storage/csi-attacher:v3.4.0

  csi_resizer: crpi-q1nb2n896zwtcdts.cn-beijing.personal.cr.aliyuncs.com/ywb01/csi-resizer:v1.3.0

  driver_registrar: crpi-q1nb2n896zwtcdts.cn-beijing.personal.cr.aliyuncs.com/ywb01/csi-node-driver-registrar:v2.5.0

  grafana: crpi-q1nb2n896zwtcdts.cn-beijing.personal.cr.aliyuncs.com/ywb01/grafana:6.4.4

  prometheus: crpi-q1nb2n896zwtcdts.cn-beijing.personal.cr.aliyuncs.com/ywb01/prometheus:v2.13.1

  consul: crpi-q1nb2n896zwtcdts.cn-beijing.personal.cr.aliyuncs.com/ywb01/consul:1.6.1

# Master configuration:

master:

  replicas: 3

  nodeSelector:

    "component.cubefs.io/master": "enabled"

  resources:

    enabled: false

    requests:

      memory: "512Mi" # production recommendation: 8G

      cpu: "200m" # production recommendation: 2000m

    limits:

      memory: "2Gi" # production recommendation: 32G

      cpu: "2000m" # production recommendation: 8000m

# MetaNode configuration:

metanode:

  total_mem: "6442450944" # available memory; recommended: 80% of the host's memory

  resources:

    enabled: true

    requests:

      memory: "512Mi" # production recommendation: 8G

      cpu: "200m" # production recommendation: 2000m

    limits:

      memory: "2Gi" # production recommendation: 32G

      cpu: "2000m" # production recommendation: 8000m

# Data disk configuration:

datanode:

  disks:

    - /data0:2147483648

  resources:

    enabled: false

    requests:

      memory: "512Mi" # production recommendation: 32G

      cpu: "200m" # production recommendation: 2000m

    limits:

      memory: "2Gi" # production recommendation: 256G

      cpu: "2000m" # production recommendation: 8000m

# ObjectNode configuration:

objectnode:

  replicas: 3

  domains: "objectcfs.cubefs.io,objectnode.cubefs.io"
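The byte values in the excerpt above are easier to check when derived rather than typed by hand. The sketch below assumes that `total_mem` is given in bytes and that the number after the colon in a `disks` entry is the reserved space in bytes, which matches the values used here (6 GiB and 2 GiB); the helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Convert whole GiB to bytes.
gib_to_bytes() { echo $(( $1 * 1024 * 1024 * 1024 )); }

# 80% of a host's memory (given in GiB) expressed in bytes, rounded down.
mem_80_percent_bytes() { echo $(( $(gib_to_bytes "$1") * 80 / 100 )); }

gib_to_bytes 2            # value after "/data0:" in datanode.disks
gib_to_bytes 6            # metanode.total_mem used in this walkthrough
mem_80_percent_bytes 8    # the 80% guideline applied to an 8 GiB host
```

On the 8 GiB demo hosts the 80% guideline yields slightly more than the 6 GiB configured above, so the walkthrough is on the conservative side.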

# Deploy:

[root@k8s-master01 cubefs]# helm upgrade --install cubefs -n cubefs --create-namespace .

# Check the Pod status:

[root@k8s-master01 cubefs]# kubectl get pod -n cubefs

NAME READY STATUS RESTARTS AGE

datanode-22bh8 1/1 Running 0 4m13s

datanode-67fbm 1/1 Running 0 4m13s

datanode-9dh4q 1/1 Running 0 4m13s

master-0 1/1 Running 0 4m13s

master-1 1/1 Running 0 42s

master-2 1/1 Running 0 39s

metanode-n6tpz 1/1 Running 0 4m13s

metanode-nq5m4 1/1 Running 0 4m13s

metanode-tt8q8 1/1 Running 0 4m13s

objectnode-5ff648b685-6rrkt 1/1 Running 0 4m13s

objectnode-5ff648b685-p9cxd 1/1 Running 0 4m13s

objectnode-5ff648b685-r2kw8 1/1 Running 0 4m13s

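If a Pod fails to start, check the logs under /var/log/cubefs on the corresponding node.

3. Deploying and Using the CubeFS Client

3.1 Deploying the CubeFS Client

Download the release package and install the tools: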

[root@k8s-master01 ~]# tar xf cubefs-3.5.0-linux-amd64.tar.gz

[root@k8s-master01 ~]# mv cubefs/build/bin/cfs-cli /usr/local/bin/

[root@k8s-master01 ~]# mv cubefs/build/bin/cfs-client /usr/local/bin/

[root@k8s-master01 ~]# cfs-cli --version

CubeFS CLI

Version : v3.5.0

Branch : HEAD

Commit : 10353bf433fefd51c6eef564035c8a682515789c

Build : go1.20.4 linux amd64 2025-03-17 17:40

Client configuration:

[root@k8s-master01 ~]# kubectl get svc -n cubefs

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

master-service ClusterIP 10.105.1.29 <none> 17010/TCP 155m

# Set masterAddr to the ClusterIP of the master service

[root@k8s-master01 ~]# vim ~/.cfs-cli.json

[root@k8s-master01 ~]# cat ~/.cfs-cli.json

{

"masterAddr": [

"10.105.1.29:17010"

],

"timeout": 60

}
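The configuration file can also be rendered from the Service address instead of being edited by hand. This is a minimal sketch; `render_cfs_cli_config` is a hypothetical helper, and the port and timeout defaults simply mirror the file shown above.

```shell
#!/usr/bin/env bash
# Print the contents of a cfs-cli configuration file for one master address.
render_cfs_cli_config() {
  local master_ip=$1 port=${2:-17010} timeout=${3:-60}
  cat <<EOF
{
  "masterAddr": [
    "${master_ip}:${port}"
  ],
  "timeout": ${timeout}
}
EOF
}

render_cfs_cli_config 10.105.1.29
# Possible usage on the cluster (requires kubectl access):
#   ip=$(kubectl get svc master-service -n cubefs -o jsonpath='{.spec.clusterIP}')
#   render_cfs_cli_config "$ip" > ~/.cfs-cli.json
```

Reading the ClusterIP with `-o jsonpath` avoids copying the address from the table output by hand.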

3.2 Cluster Management

Get cluster information, including the cluster name, addresses, volume count, node counts, and usage:

[root@k8s-master01 ~]# cfs-cli cluster info

[Cluster]

Cluster name : my-cluster

Master leader : master-0.master-service:17010

Master-1 : master-0.master-service:17010

Master-2 : master-1.master-service:17010

Master-3 : master-2.master-service:17010

Auto allocate : Enabled

MetaNode count (active/total) : 3/3

MetaNode used : 0 GB

MetaNode available : 17 GB

MetaNode total : 18 GB

DataNode count (active/total) : 3/3

DataNode used : 0 GB

DataNode available : 103 GB

DataNode total : 104 GB

Volume count : 0

Allow Mp Decomm : Enabled

EbsAddr :

LoadFactor : 0

DpRepairTimeout : 2h0m0s

DataPartitionTimeout : 20m0s

volDeletionDelayTime : 48 h

EnableAutoDecommission : false

AutoDecommissionDiskInterval : 10s

EnableAutoDpMetaRepair : false

AutoDpMetaRepairParallelCnt : 100

MarkDiskBrokenThreshold : 0%

DecommissionDpLimit : 10

DecommissionDiskLimit : 1

DpBackupTimeout : 168h0m0s

ForbidWriteOpOfProtoVersion0 : false

LegacyDataMediaType : 0

BatchCount : 0

MarkDeleteRate : 0

DeleteWorkerSleepMs: 0

AutoRepairRate : 0

MaxDpCntLimit : 3000

MaxMpCntLimit : 300

Get the cluster status: usage and state of the metadata and data nodes, broken down by zone:

[root@k8s-master01 ~]# cfs-cli cluster stat

[Cluster Status]

DataNode Status:

TOTAL/GB USED/GB INCREASED/GB USED RATIO

104 0 0 0.009

MetaNode Status:

TOTAL/GB USED/GB INCREASED/GB USED RATIO

18 0 0 0.014

Zone List:

ZONE NAME ROLE TOTAL/GB USED/GB AVAILABLE/GB USED RATIO TOTAL NODES WRITEBLE NODES

default DATANODE 104.81 0.93 103.88 0.01 3 3

METANODE 18 0.25 17.75 0.01 3 3

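The MetaNode Total is the maximum available memory, calculated as the sum of MaxMemAvailWeight across all MetaNodes.

Set the volume deletion delay, that is, how long a deleted volume is kept before it is removed for good. The default is 48h, and the volume can be restored before the delay expires:

cfs-cli cluster volDeletionDelayTime 72

3.3 MetaNode Management

List all metadata nodes, including ID, address, read/write status, and liveness: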

[root@k8s-master01 ~]# cfs-cli metanode list

[Meta nodes]

ID ADDRESS WRITABLE ACTIVE MEDIA ForbidWriteOpOfProtoVer0

2 192.168.200.52:17210(master-0.master-service.cubefs.svc.cluster.local:17210) Yes Active N/A notForbid

6 192.168.200.51:17210(master-2.master-service.cubefs.svc.cluster.local:17210) Yes Active N/A notForbid

7 192.168.200.50:17210(192-168-200-50.kubernetes.default.svc.cluster.local:17210,master-1.master-service.cubefs.svc.cluster.local:17210) Yes Active N/A notForbid

Show the details of a single node:

[root@k8s-master01 ~]# cfs-cli metanode info 192.168.200.52:17210

[Meta node info]

ID : 2

Address : 192.168.200.52:17210(master-0.master-service.cubefs.svc.cluster.local:17210)

Threshold : 0.75

MaxMemAvailWeight : 5.91 GB

Allocated : 95.27 MB

Total : 6.00 GB

Zone : default

Status : Active

Rdonly : false

Report time : 2025-08-23 20:23:52

Partition count : 0

Persist partitions : []

Can alloc partition : true

Max partition count : 300

CpuUtil : 1.0%

3.4 DataNode Management

List all data nodes, including ID, address, read/write status, and liveness:

[root@k8s-master01 ~]# cfs-cli datanode list

[Data nodes]

ID ADDRESS WRITABLE ACTIVE MEDIA ForbidWriteOpOfProtoVer0

3 192.168.200.50:17310(master-1.master-service.cubefs.svc.cluster.local:17310,192-168-200-50.kubernetes.default.svc.cluster.local:17310) Yes Active N/A notForbid

4 192.168.200.51:17310(master-2.master-service.cubefs.svc.cluster.local:17310) Yes Active N/A notForbid

5 192.168.200.52:17310(master-0.master-service.cubefs.svc.cluster.local:17310) Yes Active N/A notForbid

Show the details of a single node:

[root@k8s-master01 ~]# cfs-cli datanode info 192.168.200.52:17310

[Data node info]

ID : 5

Address : 192.168.200.52:17310(master-0.master-service.cubefs.svc.cluster.local:17310)

Allocated ratio : 0.008872044107781753

Allocated : 317.41 MB

Available : 34.63 GB

Total : 34.94 GB

Zone : default

Rdonly : false

Status : Active

MediaType : Unspecified

ToBeOffline : False

Report time : 2025-08-23 20:25:53

Partition count : 0

Bad disks : []

Decommissioned disks: []

Persist partitions : []

Backup partitions : []

Can alloc partition : true

Max partition count : 3000

CpuUtil : 1.3%

IoUtils :

/dev/nvme0n2:0.0%

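Decommission a data node (do not do this lightly)

# Decommission the data node; its data is migrated to other nodes automatically:

[root@k8s-master01 ~]# cfs-cli datanode decommission 192.168.200.52:17310

# After decommissioning, the node's information can no longer be queried: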

[root@k8s-master01 ~]# cfs-cli datanode info 192.168.200.52:17310

Error: data node not exists

# The data capacity shrinks as well:

[root@k8s-master01 ~]# cfs-cli cluster stat

[Cluster Status]

DataNode Status:

TOTAL/GB USED/GB INCREASED/GB USED RATIO

69 0 0 0.009

MetaNode Status:

TOTAL/GB USED/GB INCREASED/GB USED RATIO

18 0 0 0.014

Zone List:

ZONE NAME ROLE TOTAL/GB USED/GB AVAILABLE/GB USED RATIO TOTAL NODES WRITEBLE NODES

default DATANODE 69.88 0.62 69.26 0.01 2 2

METANODE 18 0.25 17.75 0.01 3 3

After the Pod is recreated, the node rejoins:

# Find the Pod of the decommissioned node

[root@k8s-master01 ~]# kubectl get po -n cubefs -owide | grep data | grep 192.168.200.52

datanode-9dh4q 1/1 Running 0 3h42m 192.168.200.52 k8s-node02 <none> <none>

# Delete the Pod so that the node rejoins

[root@k8s-master01 ~]# kubectl delete po datanode-9dh4q -n cubefs

# The data capacity is restored

[root@k8s-master01 ~]# cfs-cli cluster stat

[Cluster Status]

DataNode Status:

TOTAL/GB USED/GB INCREASED/GB USED RATIO

104 0 0 0.009

MetaNode Status:

TOTAL/GB USED/GB INCREASED/GB USED RATIO

18 0 0 0.014

Zone List:

ZONE NAME ROLE TOTAL/GB USED/GB AVAILABLE/GB USED RATIO TOTAL NODES WRITEBLE NODES

default DATANODE 104.81 0.93 103.88 0.01 3 3

METANODE 18 0.26 17.74 0.01 3 3

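3.5 User Management

CubeFS supports multiple users. Each user can be granted different permissions on each volume, and users also provide authentication for object storage.

Create a user:

[root@k8s-master01 ~]# cfs-cli user create test --yes

Get user information: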

[root@k8s-master01 ~]# cfs-cli user info test

[Summary]

User ID : test

Access Key : XMnVAEysoGEFtZyV

Secret Key : 1w4TdSfHoY0cqSrHBUKvJ5Hx5AxmcuiP

Type : normal

Create Time: 2025-08-23 20:52:48

[Volumes]

VOLUME PERMISSION

List all users:

[root@k8s-master01 ~]# cfs-cli user list

ID TYPE ACCESS KEY SECRET KEY CREATE TIME

root Root mPsMgs0lqDZ5ebG4 1LIvar5UHEsKnxLb1BIuCKWO7fWMUZaE 2025-08-23 17:02:09

test Normal XMnVAEysoGEFtZyV 1w4TdSfHoY0cqSrHBUKvJ5Hx5AxmcuiP 2025-08-23 20:52:48

Delete a user:

[root@k8s-master01 ~]# cfs-cli user delete test --yes

[root@k8s-master01 ~]# cfs-cli user list

ID TYPE ACCESS KEY SECRET KEY CREATE TIME

root Root mPsMgs0lqDZ5ebG4 1LIvar5UHEsKnxLb1BIuCKWO7fWMUZaE 2025-08-23 17:02:09

3.6 Volume Management

Create a volume:

# Command format: cfs-cli volume create [VOLUME NAME] [USER ID] [flags]

[root@k8s-master01 ~]# cfs-cli volume create volume-test test --capacity 1 -y

# volume-test: the volume name

# test: the owner; the user is created automatically if it does not exist

# --capacity 1: the volume size in GB (10 GB by default if omitted)

List all volumes:

[root@k8s-master01 ~]# cfs-cli volume list

VOLUME OWNER USED TOTAL STATUS CREATE TIME

volume-test test 0.00 B 1.00 GB Normal Sat, 23 Aug 2025 20:58:43 CST

Show the details of a volume:

[root@k8s-master01 ~]# cfs-cli volume info volume-test

Summary:

ID : 9

Name : volume-test

Owner : test

Authenticate : Disabled

Capacity : 1 GB

Create time : 2025-08-23 20:58:43

DeleteLockTime : 0

Cross zone : Disabled

DefaultPriority : false

Dentry count : 0

Description :

DpCnt : 10

DpReplicaNum : 3

Follower read : Disabled

Meta Follower read : Disabled

Direct Read : Disabled

Inode count : 1

Max metaPartition ID : 3

MpCnt : 3

MpReplicaNum : 3

NeedToLowerReplica : Disabled

RwDpCnt : 10

Status : Normal

ZoneName : default

VolType : 0

DpReadOnlyWhenVolFull : false

Transaction Mask : rename

Transaction timeout : 1

Tx conflict retry num : 10

Tx conflict retry interval(ms) : 20

Tx limit interval(s) : 0

Forbidden : false

DisableAuditLog : false

TrashInterval : 0s

DpRepairBlockSize : 128KB

EnableAutoDpMetaRepair : false

Quota : Disabled

AccessTimeValidInterval : 24h0m0s

MetaLeaderRetryTimeout : 0s

EnablePersistAccessTime : false

ForbidWriteOpOfProtoVer0 : false

VolStorageClass : Unspecified

AllowedStorageClass : []

CacheDpStorageClass : Unspecified

Forbid (disable) a volume:

[root@k8s-master01 ~]# cfs-cli volume set-forbidden volume-test true

[root@k8s-master01 ~]# cfs-cli volume info volume-test | grep -i Forbidden

Forbidden : true

Lift the restriction:

[root@k8s-master01 ~]# cfs-cli volume set-forbidden volume-test false

[root@k8s-master01 ~]# cfs-cli volume info volume-test | grep -i Forbidden

Forbidden : false

Expand a volume or update its configuration:

[root@k8s-master01 ~]# cfs-cli volume update volume-test test --capacity 2 -y

[root@k8s-master01 ~]# cfs-cli volume list

VOLUME OWNER USED TOTAL STATUS CREATE TIME

volume-test test 0.00 B 2.00 GB Normal Sat, 23 Aug 2025 20:58:43 CST

Add a capacity limit:

# Once the volume is full, no more data can be written to it

[root@k8s-master01 ~]# cfs-cli volume update volume-test --readonly-when-full true -y

[root@k8s-master01 ~]# cfs-cli volume info volume-test | grep -i readonly

DpReadOnlyWhenVolFull : true

Delete a volume:

[root@k8s-master01 ~]# cfs-cli volume delete volume-test -y

3.7 CubeFS Mount Test

Create a volume:

[root@k8s-master01 ~]# cfs-cli volume create volume-test ltptest -y

Create the client configuration file:

[root@k8s-master01 ~]# vim volume-test-client.conf

[root@k8s-master01 ~]# cat volume-test-client.conf

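{

  "mountPoint": "/volume-test",

  "volName": "volume-test",

  "owner": "ltptest",

  "masterAddr": "10.105.1.29:17010",

  "logDir": "/cfs/client/log",

  "logLevel": "info",

  "profPort": "27510"

}

Here volName is the volume to mount, owner is the user that owns it, and masterAddr is the ClusterIP and port of master-service. JSON does not allow comments, so keep such notes out of the file itself.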

Install fuse:

[root@k8s-master01 ~]# yum install fuse -y

Mount:

[root@k8s-master01 ~]# cfs-client -c volume-test-client.conf

[root@k8s-master01 ~]# df -Th | grep volume-test

cubefs-volume-test fuse.cubefs 10G 0 10G 0% /volume-test

Write test:

[root@k8s-master01 ~]# cd /volume-test/

[root@k8s-master01 volume-test]# dd if=/dev/zero of=./cubefs bs=1M count=512

512+0 records in

512+0 records out

536870912 bytes (537 MB, 512 MiB) copied, 45.2422 s, 11.9 MB/s

[root@k8s-master01 volume-test]# dd if=/dev/zero of=./cubefs bs=128M count=4

4+0 records in

4+0 records out

536870912 bytes (537 MB, 512 MiB) copied, 36.2865 s, 14.8 MB/s
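The throughput figures reported by dd can be recomputed from the byte count and the elapsed time, which is handy when comparing runs with different block sizes. A small sketch follows; `throughput_mbs` is a hypothetical helper, and like dd it counts 1 MB as 10^6 bytes.

```shell
#!/usr/bin/env bash
# Throughput in MB/s (1 MB = 10^6 bytes) from bytes copied and seconds elapsed.
throughput_mbs() {
  awk -v b="$1" -v s="$2" 'BEGIN { printf "%.1f\n", b / s / 1000000 }'
}

throughput_mbs 536870912 45.2422   # bs=1M count=512 run
throughput_mbs 536870912 36.2865   # bs=128M count=4 run
```

Both results match the figures dd printed above (11.9 MB/s and 14.8 MB/s).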

# Check the volume usage:

[root@k8s-master01 volume-test]# cfs-cli volume list

VOLUME OWNER USED TOTAL STATUS CREATE TIME

volume-test ltptest 512.00 MB 10.00 GB Normal Sun, 24 Aug 2025 22:29:21 CST

3.8 Expanding CubeFS

3.8.1 Disk-Based Expansion

If CubeFS is deployed in K8s, a disk must be added to every host when expanding:

First attach a new disk, then check it with fdisk -l:

[root@k8s-master01 ~]# fdisk -l | grep /dev/nvme0n3

Disk /dev/nvme0n3: 50 GiB, 53687091200 bytes, 104857600 sectors

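Format and mount each disk (on all three nodes):

# Format the disk

[root@k8s-master01 ~]# mkfs.xfs -f /dev/nvme0n3

# Create the mount directory. If a machine has several data disks, format and mount each one the same way, naming the mount directories data0, data1, ..., data999 in order

[root@k8s-master01 ~]# mkdir /data1

# Mount the disk

[root@k8s-master01 ~]# mount /dev/nvme0n3 /data1

# Mount automatically at boot (note that the disk UUID differs on each of the three nodes):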

[root@k8s-master01 ~]# blkid /dev/nvme0n3

/dev/nvme0n3: UUID="6abaad00-bdf3-4e29-8fe0-9920da8ced6b" TYPE="xfs"

[root@k8s-master01 ~]# echo "UUID=6abaad00-bdf3-4e29-8fe0-9920da8ced6b /data1 xfs defaults 0 0" >>/etc/fstab

[root@k8s-master01 ~]# mount -a

Update the datanode configuration:

[root@k8s-master01 ~]# cd cubefs-helm/cubefs/

[root@k8s-master01 cubefs]# vim values.yaml

[root@k8s-master01 cubefs]# sed -n "107p;123,125p" values.yaml

datanode:

  disks:

    - /data0:2147483648

    - /data1:2147483648

Apply the configuration:

[root@k8s-master01 cubefs]# helm upgrade cubefs -n cubefs .

Trigger a restart of the datanodes:

[root@k8s-master01 cubefs]# kubectl delete po -n cubefs -l app.kubernetes.io/component=datanode

After the restart, check the cluster status:

[root@k8s-master01 cubefs]# cfs-cli cluster stat

[Cluster Status]

DataNode Status:

TOTAL/GB USED/GB INCREASED/GB USED RATIO

159 1 1 0.009

MetaNode Status:

TOTAL/GB USED/GB INCREASED/GB USED RATIO

18 0 0 0.020

Zone List:

ZONE NAME ROLE TOTAL/GB USED/GB AVAILABLE/GB USED RATIO TOTAL NODES WRITEBLE NODES

default DATANODE 159.75 1.38 158.37 0.01 3 0

METANODE 18 0.36 17.64 0.02 3
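Right after the restart the zone list can report fewer writable DataNodes than total DataNodes, as in the output above. One way to wait for recovery is to compare the last two columns of the DATANODE row. The sketch assumes the column layout shown in this walkthrough; `all_datanodes_writable` is a hypothetical helper that reads the `cfs-cli cluster stat` output from stdin.

```shell
#!/usr/bin/env bash
# Succeed only when every DATANODE row has as many writable nodes as total nodes
# (the last two columns of the zone list are equal).
all_datanodes_writable() {
  awk '$2 == "DATANODE" { seen = 1; if ($(NF-1) != $NF) bad = 1 }
       END { exit (seen && !bad) ? 0 : 1 }'
}

sample='default DATANODE 159.75 1.38 158.37 0.01 3 0'
if echo "$sample" | all_datanodes_writable; then
  echo "all datanodes writable"
else
  echo "datanodes still recovering"
fi
# Possible polling loop on the cluster:
#   until cfs-cli cluster stat | all_datanodes_writable; do sleep 5; done
```

The check fails when no DATANODE row is found at all, so an empty or failed `cfs-cli` call is not mistaken for a healthy cluster.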

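3.8.2 Host-Based Expansion

Host-based expansion is done by adding datanode nodes.

- Add a new node (skip this step if the node already exists)

- On the new node, attach and mount disks that match the current configuration

- Label the new node with component.cubefs.io/datanode=enabled

4. CubeFS Object Storage

Download the MinIO object storage client: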

[root@k8s-master01 ~]# curl https://dl.minio.org.cn/client/mc/release/linux-amd64/mc --create-dirs -o /usr/local/bin/mc

[root@k8s-master01 ~]# chmod +x /usr/local/bin/mc

Configure object storage:

# Create a user for each project:

[root@k8s-master01 ~]# cfs-cli user create projecta -y

[root@k8s-master01 ~]# cfs-cli user list

ID TYPE ACCESS KEY SECRET KEY CREATE TIME

root Root mPsMgs0lqDZ5ebG4 1LIvar5UHEsKnxLb1BIuCKWO7fWMUZaE 2025-08-23 17:02:09

projecta Normal vMAV6AOksC4cukBL NcYi7mLw39iz0jQbXE9K2XrgO672NN7P 2025-08-24 13:46:04

# Look up the IP of objectnode-service

[root@k8s-master01 ~]# kubectl get svc -n cubefs

NAME TYPE CLUSTER-IP EXTERNAL-IP PORT(S) AGE

objectnode-service ClusterIP 10.111.87.176 <none> 1601/TCP 20h

# Add the project's host alias:

[root@k8s-master01 ~]# mc alias set projecta http://10.111.87.176:1601 vMAV6AOksC4cukBL NcYi7mLw39iz0jQbXE9K2XrgO672NN7P

# projecta: the alias, named after the user

# http://10.111.87.176:1601: the address of objectnode-service

# vMAV6AOksC4cukBL: the Access Key of user projecta

# NcYi7mLw39iz0jQbXE9K2XrgO672NN7P: the Secret Key of user projecta

Basic object storage usage

# Create a bucket:

[root@k8s-master01 ~]# mc mb projecta/app

# List buckets:

[root@k8s-master01 ~]# mc ls projecta/

[2025-08-24 14:05:01 CST] 0B app/

# A volume with the same name is created automatically (every bucket exists as a volume)

[root@k8s-master01 ~]# cfs-cli volume list

VOLUME OWNER USED TOTAL STATUS CREATE TIME

app projecta 0 MB 10.00 GB Normal Sun, 24 Aug 2025 14:05:01 CST

# Upload a file:

[root@k8s-master01 ~]# mc cp volume-ceshi-client.conf projecta/app/

# List files

[root@k8s-master01 ~]# mc ls projecta/app/

[2025-08-24 14:07:28 CST] 192B STANDARD volume-ceshi-client.conf

# Delete a file

[root@k8s-master01 ~]# mc rm projecta/app/volume-ceshi-client.conf

# The file has been deleted

[root@k8s-master01 ~]# mc ls projecta/app/

[root@k8s-master01 ~]#

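# Upload a directory:

# The directory itself is not uploaded, only the files inside it, so give a directory of the same name in the bucket as the target (it is created automatically)

[root@k8s-master01 ~]# mc cp cubefs/ projecta/app/cubefs/ -r

# List the directory and its files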

[root@k8s-master01 ~]# mc ls projecta/app/

[2025-08-24 14:24:17 CST] 0B cubefs/

[root@k8s-master01 ~]# mc ls projecta/app/cubefs

[2025-08-24 14:40:35 CST] 0B build/

# Delete a directory

[root@k8s-master01 ~]# mc rm projecta/app/cubefs/ -r --force

5. Integrating CubeFS with K8s

5.1 Deploying CSI

First label the non-control-plane nodes for CSI (every node that will use the storage needs the label):

[root@k8s-master01 ~]# kubectl label node component.cubefs.io/csi=enabled -l '!node-role.kubernetes.io/control-plane'

Update the values configuration:

[root@k8s-master01 ~]# cd cubefs-helm/cubefs/

[root@k8s-master01 cubefs]# vim values.yaml

[root@k8s-master01 cubefs]# sed -n "2p;8p;193p;207,214p;217p;219p" values.yaml

component:

csi: true

csi:

resources:

enabled: false

requests:

memory: "1024Mi"

cpu: "200m"

limits:

memory: "2048Mi"

cpu: "2000m"

setToDefault: true

reclaimPolicy: "Delete"

# Install:

[root@k8s-master01 cubefs]# helm upgrade cubefs -n cubefs .

# Check the StorageClass that was created:

[root@k8s-master01 cubefs]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

cfs-sc (default) csi.cubefs.com Delete Immediate true 13s

# Check the Pods

[root@k8s-master01 cubefs]# kubectl get po -n cubefs

NAME READY STATUS RESTARTS AGE

cfs-csi-controller-7cd54dddff-t5npw 4/4 Running 0 11m

cfs-csi-node-4qn6g 2/2 Running 0 11m

cfs-csi-node-hbq99 2/2 Running 0 11m

....

5.2 Testing PVCs and PVs

Create a test PVC:

[root@k8s-master01 ~]# vim cfs-pvc.yaml

[root@k8s-master01 ~]# cat cfs-pvc.yaml

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

  name: cubefs-test

  namespace: default

spec:

  accessModes:

  - ReadWriteMany

  resources:

    requests:

      storage: 1Gi

  storageClassName: cfs-sc

  volumeMode: Filesystem

After creating it, check the PV and the binding status:

[root@k8s-master01 ~]# kubectl create -f cfs-pvc.yaml

[root@k8s-master01 cubefs]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS VOLUMEATTRIBUTESCLASS REASON AGE

pvc-e4f37ba6-ebe9-48f7-bd5e-5dbe8bda0a77 1Gi RWX Delete Bound default/cubefs-test cfs-sc <unset> 72s

[root@k8s-master01 cubefs]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS VOLUMEATTRIBUTESCLASS AGE

cubefs-test Bound pvc-e4f37ba6-ebe9-48f7-bd5e-5dbe8bda0a77 1Gi RWX cfs-sc <unset> 7m37s
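The PVC manifests in this chapter differ only in name, size, and access mode, so they can be rendered from a small template. This is a sketch under that assumption: `render_pvc` is a hypothetical helper, and the namespace and StorageClass are fixed to the values used in this walkthrough.

```shell
#!/usr/bin/env bash
# Print a PVC manifest for the cfs-sc StorageClass in the default namespace.
render_pvc() {
  local name=$1 size=$2 mode=${3:-ReadWriteMany}
  cat <<EOF
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: ${name}
  namespace: default
spec:
  accessModes:
  - ${mode}
  resources:
    requests:
      storage: ${size}
  storageClassName: cfs-sc
  volumeMode: Filesystem
EOF
}

render_pvc cubefs-test 1Gi
# Possible usage on the cluster:
#   render_pvc mysql 5Gi ReadWriteOnce | kubectl create -f -
```

Piping to `kubectl create -f -` avoids keeping several near-identical YAML files around.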

Create a workload that mounts the volume:

[root@k8s-master01 ~]# kubectl create deploy nginx --image=crpi-q1nb2n896zwtcdts.cn-beijing.personal.cr.aliyuncs.com/ywb01/nginx:1.15.12 --dry-run=client -oyaml > nginx-deploy.yaml

[root@k8s-master01 ~]# vim nginx-deploy.yaml

[root@k8s-master01 ~]# cat nginx-deploy.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

  creationTimestamp: null

  labels:

    app: nginx

  name: nginx

spec:

  replicas: 2

  selector:

    matchLabels:

      app: nginx

  strategy: {}

  template:

    metadata:

      creationTimestamp: null

      labels:

        app: nginx

    spec:

      nodeSelector: # only nodes with the CSI driver installed can mount the storage

        component.cubefs.io/csi: enabled

      volumes:

      - name: mypvc

        persistentVolumeClaim:

          claimName: cubefs-test

      containers:

      - image: crpi-q1nb2n896zwtcdts.cn-beijing.personal.cr.aliyuncs.com/ywb01/nginx:1.15.12

        name: nginx

        volumeMounts:

        - name: mypvc

          mountPath: "/mnt" # mount the shared directory

        resources: {}

status: {}

Data sharing test:

# Create the Deployment

[root@k8s-master01 ~]# kubectl create -f nginx-deploy.yaml

# Check the Pods

[root@k8s-master01 ~]# kubectl get po

NAME READY STATUS RESTARTS AGE

nginx-5c4f45cbc-8jr69 1/1 Running 0 80s

nginx-5c4f45cbc-cjhps 1/1 Running 0 80s

# Open a shell in one of the containers and write some data

[root@k8s-master01 ~]# kubectl exec -it nginx-5c4f45cbc-8jr69 -- bash

root@nginx-5c4f45cbc-8jr69:/# echo "ceshi" > /mnt/test

# Verify from the other Pod that the data is shared

[root@k8s-master01 ~]# kubectl exec -it nginx-5c4f45cbc-cjhps -- bash

root@nginx-5c4f45cbc-cjhps:/# ls /mnt/

test

5.3 Online Expansion

Most dynamically provisioned storage supports online expansion; simply edit the PVC:

[root@k8s-master01 ~]# vim cfs-pvc.yaml

[root@k8s-master01 ~]# cat cfs-pvc.yaml

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

  name: cubefs-test

  namespace: default

spec:

  accessModes:

  - ReadWriteMany

  resources:

    requests:

      storage: 2Gi

  storageClassName: cfs-sc

  volumeMode: Filesystem

# Apply the change

[root@k8s-master01 ~]# kubectl apply -f cfs-pvc.yaml

After a short wait the expansion completes:

[root@k8s-master01 ~]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS VOLUMEATTRIBUTESCLASS AGE

cubefs-test Bound pvc-e4f37ba6-ebe9-48f7-bd5e-5dbe8bda0a77 2Gi RWX cfs-sc <unset> 22m
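Instead of editing the manifest file, the new size can be sent as a patch. The sketch below only builds the patch document; `pvc_resize_patch` is a hypothetical helper, and the commented `kubectl patch` line shows one possible way to apply it to the PVC from this example.

```shell
#!/usr/bin/env bash
# Build a merge patch that sets spec.resources.requests.storage on a PVC.
pvc_resize_patch() {
  printf '{"spec":{"resources":{"requests":{"storage":"%s"}}}}\n' "$1"
}

pvc_resize_patch 2Gi
# Possible usage on the cluster:
#   kubectl patch pvc cubefs-test -n default -p "$(pvc_resize_patch 2Gi)"
```

Either way, the request only succeeds when the StorageClass reports ALLOWVOLUMEEXPANSION as true, as cfs-sc does above.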

6. Data Persistence in Practice

6.1 MySQL Data Persistence

Create the PVC:

[root@k8s-master01 ~]# vim mysql-pvc.yaml

[root@k8s-master01 ~]# cat mysql-pvc.yaml

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

  name: mysql

  namespace: default

spec:

  accessModes:

  - ReadWriteOnce

  resources:

    requests:

      storage: 5Gi

  storageClassName: cfs-sc

  volumeMode: Filesystem

# Create the PVC

[root@k8s-master01 ~]# kubectl create -f mysql-pvc.yaml

[root@k8s-master01 ~]# kubectl get pvc mysql

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS VOLUMEATTRIBUTESCLASS AGE

mysql Bound pvc-658e0ed8-6e9d-493d-b1f6-870df9c1b15c 5Gi RWO cfs-sc <unset> 22s

Create the Deployment:

[root@k8s-master01 ~]# vim mysql-deploy.yaml

[root@k8s-master01 ~]# cat mysql-deploy.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

  creationTimestamp: null

  labels:

    app: mysql

  name: mysql

spec:

  replicas: 1

  selector:

    matchLabels:

      app: mysql

  strategy:

    type: Recreate

  template:

    metadata:

      creationTimestamp: null

      labels:

        app: mysql

    spec:

      nodeSelector:

        component.cubefs.io/csi: enabled

      volumes:

      - name: data

        persistentVolumeClaim:

          claimName: mysql

      containers:

      - image: crpi-q1nb2n896zwtcdts.cn-beijing.personal.cr.aliyuncs.com/ywb01/mysql:8.0.20

        name: mysql

        env:

        - name: MYSQL_ROOT_PASSWORD

          value: mysql

        volumeMounts:

        - name: data

          mountPath: "/var/lib/mysql"

        resources: {}

status: {}

# Create MySQL

[root@k8s-master01 ~]# kubectl create -f mysql-deploy.yaml

# Check the Pod

[root@k8s-master01 ~]# kubectl get po

NAME READY STATUS RESTARTS AGE

mysql-7fc554db7f-b58b4 1/1 Running 0 4m30s

Write test data:

[root@k8s-master01 ~]# kubectl exec -it mysql-7fc554db7f-b58b4 -- bash

root@mysql-7fc554db7f-b58b4:/# mysql -uroot -pmysql

....

mysql> create database cubefs;

Query OK, 1 row affected (0.08 sec)

mysql> create database yunwei;

Query OK, 1 row affected (0.07 sec)

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| cubefs |

| information_schema |

| mysql |

| performance_schema |

| sys |

| yunwei |

+--------------------+

6 rows in set (0.01 sec)

Delete the Pod, then check in the replacement Pod whether the data is still there:

[root@k8s-master01 ~]# kubectl delete po mysql-7fc554db7f-b58b4

[root@k8s-master01 ~]# kubectl exec -it mysql-7fc554db7f-dk6rx -- bash

root@mysql-7fc554db7f-dk6rx:/# mysql -uroot -pmysql

....

mysql> show databases;

+--------------------+

| Database |

+--------------------+

| cubefs |

| information_schema |

| mysql |

| performance_schema |

| sys |

| yunwei |

+--------------------+

6 rows in set (0.01 sec)

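6.2 Persisting Large Model Files

CubeFS can support AI training, model storage and distribution, and I/O acceleration, so it can serve directly as the storage foundation for large models.

Create the PVC: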

[root@k8s-master01 ~]# vim ollama-pvc.yaml

[root@k8s-master01 ~]# cat ollama-pvc.yaml

apiVersion: v1

kind: PersistentVolumeClaim

metadata:

  name: ollama-data

  namespace: default

spec:

  accessModes:

  - ReadWriteMany

  resources:

    requests:

      storage: 10Gi

  storageClassName: cfs-sc

  volumeMode: Filesystem

# Create the PVC

[root@k8s-master01 ~]# kubectl create -f ollama-pvc.yaml

[root@k8s-master01 ~]# kubectl get pvc ollama-data

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS VOLUMEATTRIBUTESCLASS AGE

ollama-data Bound pvc-9bb055e3-10f7-4bf8-8e26-8d44c4b8fa28 10Gi RWX cfs-sc <unset> 53s

Create the Ollama service:

[root@k8s-master01 ~]# vim ollama-deploy.yaml

[root@k8s-master01 ~]# cat ollama-deploy.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

  creationTimestamp: null

  labels:

    app: ollama

  name: ollama

spec:

  replicas: 1

  selector:

    matchLabels:

      app: ollama

  template:

    metadata:

      creationTimestamp: null

      labels:

        app: ollama

    spec:

      nodeSelector:

        component.cubefs.io/csi: enabled

      volumes:

      - name: data

        persistentVolumeClaim:

          claimName: ollama-data

          readOnly: false

      containers:

      - image: crpi-q1nb2n896zwtcdts.cn-beijing.personal.cr.aliyuncs.com/ywb01/ollama

        name: ollama

        env:

        - name: OLLAMA_MODELS

          value: /data/models

        volumeMounts:

        - name: data

          mountPath: /data/models

          readOnly: false

        resources: {}

status: {}

# Create Ollama

[root@k8s-master01 ~]# kubectl create -f ollama-deploy.yaml

# Check the Pod

[root@k8s-master01 ~]# kubectl get po

NAME READY STATUS RESTARTS AGE

ollama-cf4978c7f-mjgv9 1/1 Running 0 30m

Download a model:

[root@k8s-master01 ~]# kubectl exec -it ollama-cf4978c7f-mjgv9 -- bash

root@ollama-cf4978c7f-mjgv9:/# ollama pull deepseek-r1:1.5b

Check the model files:

root@ollama-cf4978c7f-mjgv9:~# ls -l /data/models/

total 0

drwxr-xr-x 7 root root 0 Aug 25 00:34 blobs

drwxr-xr-x 3 root root 0 Aug 25 00:35 manifests

Run the model as a test:

root@ollama-cf4978c7f-mjgv9:~# ollama run deepseek-r1:1.5b

>>> 介紹一下自己

<think>

</think>

您好!我是由中國的深度求索(DeepSeek)公司開發的智能助手DeepSeek-R1。有關模型和產品的詳細內容請參考官方文檔。

>>>
