Python Study Notes: Commonly Used Modules (Day 5)

Reference blogs by the instructors:

Jinjiao: http://www.rzrgm.cn/alex3714/articles/5161349.html

Yinjiao: http://www.rzrgm.cn/wupeiqi/articles/4963027.html

I. Commonly used functions:

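★ lambda

In Python, lambda creates an anonymous function, whereas a function created with def has a name. Beyond that surface difference, how else does lambda differ from def?

1 lambda creates a function object but does not bind it to an identifier, whereas def binds the function object to a name.

2 lambda is only an expression, whereas def is a statement.

In a lambda expression, the parameters come before the colon (there may be several, separated by commas) and the expression to the right of the colon is the return value. What a lambda builds is simply a function object.

Example:

    m = lambda x,y,z: (x-y)*z
    print(m(234,122,5))

lambda is also often used to generate lists, for example:

    # named "powers" rather than "list" so the built-in list() is not shadowed
    powers = [i ** i for i in range(10)]
    print(powers)

    list_lambda = list(map(lambda x:x**x,range(10)))
    print(list_lambda)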

★ enumerate(iterable[, start]): iterable is any iterable object

enumerate(iterable[, start]) -> iterator for index, value of iterable. Return an enumerate object. iterable must be another object that supports iteration. The enumerate object yields pairs containing a count (from start, which defaults to zero) and a value yielded by the iterable argument. enumerate is useful for obtaining an indexed list: (0, seq[0]), (1, seq[1]), (2, seq[2]), ...

Example:

    for k,v in enumerate(['a','b','c',1,2,3],10):
        print(k,v)

★ S.format(*args, **kwargs) -> string: formats a string, similar to %s-style formatting

Return a formatted version of S, using substitutions from args and kwargs.

The substitutions are identified by braces ('{' and '}').

    s = 'i am {0},{1}'
    print(s.format('wang',1))

★ map(function, sequence): passes each item of sequence to function as its argument and returns the results

Example:

    def add(arg):
        return arg + 101

    print(list(map(add,[12,23,34,56])))

★ filter(function or None, sequence) -> list, tuple, or string: returns the items for which function is true

Return those items of sequence for which function(item) is true. If function is None, return the items that are true. If sequence is a tuple or string, return the same type, else return a list.

Example:

    def comp(arg):
        return arg < 8

    print(list(filter(comp,[1,19,21,8,5])))
    print(list(filter(lambda x:x % 2,[1,19,20,8,5])))
    print(list(filter(lambda x:x % 2,(1,19,20,8,5))))
    print(list(filter(lambda x:x > 'a','AbcdE')))

Note: the signature and docstring above are from Python 2. In Python 3, map() and filter() no longer return a list (or a tuple or string) but an iterator, so convert the iterator when a list is needed, e.g. list(map(...)).
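To make the note concrete, here is a small sketch of the Python 3 behaviour. The variable names are only for illustration; the point is that the iterator is lazy and can be consumed once.

```python
# Python 3: map() and filter() return lazy iterators, not lists.
numbers = [1, 19, 20, 8, 5]

mapped = map(lambda x: x + 101, numbers)
print(type(mapped).__name__)   # name of the iterator class, not "list"

mapped_list = list(mapped)     # materialize the results once
odd_list = list(filter(lambda x: x % 2, numbers))

# The iterator is exhausted after one pass, so a second list() is empty.
leftover = list(mapped)
print(mapped_list, odd_list, leftover)
```

If the results are needed more than once, keep the list rather than the iterator.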

★ reduce(function, sequence[, initial]) -> value: applies a two-argument function cumulatively

Apply a function of two arguments cumulatively to the items of a sequence, from left to right, so as to reduce the sequence to a single value. For example, reduce(lambda x, y: x+y, [1, 2, 3, 4, 5]) calculates ((((1+2)+3)+4)+5). If initial is present, it is placed before the items of the sequence in the calculation, and serves as a default when the sequence is empty.

Example:

    from functools import reduce  # needed in Python 3; reduce is a built-in in Python 2

    print(reduce(lambda x,y:x*y,[22,11,8]))
    print(reduce(lambda x,y:x*y,[3],10))
    print(reduce(lambda x,y:x*y,[],5))

★ zip(seq1 [, seq2 [...]]) -> [(seq1[0], seq2[0] ...), (...)]: combines several sequences into a new sequence of tuples

Return a list of tuples, where each tuple contains the i-th element from each of the argument sequences. The returned list is truncated in length to the length of the shortest argument sequence.

Example:

    a = [1,2,3,4,5,6]
    b = [11,22,33,44,55]
    c = [111,222,333,444]

    print(list(zip(a,b,c)))

★ eval(source[, globals[, locals]]) -> value: evaluates an expression string and returns its value. globals is the global namespace and locals the local namespace; the expression is evaluated in the namespaces given.

Evaluate the source in the context of globals and locals. The source may be a string representing a Python expression or a code object as returned by compile(). The globals must be a dictionary and locals can be any mapping, defaulting to the current globals and locals. If only globals is given, locals defaults to it.

Example:

    a = '8*(8+20-5%12*23)'  # closing parenthesis added; the unbalanced original raised SyntaxError
    print(eval(a))

    d = {'a':5,'b':4}
    print(eval('a*b',d))
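As a sketch of how the globals and locals arguments interact: names are looked up in locals first, then in globals. The `ast.literal_eval` call at the end is an addition of mine, not something the notes above use. It is a standard-library alternative that accepts only Python literals, which is usually safer for untrusted input.

```python
import ast

namespace = {'a': 5, 'b': 4}

# Only globals given: a and b both come from namespace.
product = eval('a*b', namespace)

# locals shadows globals: b is taken from the second mapping.
local_override = eval('a*b', namespace, {'b': 10})

# literal_eval parses literals only and never calls functions.
parsed = ast.literal_eval("{'x': [1, 2, 3]}")

print(product, local_override, parsed)
```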

★ exec(source[, globals[, locals]]): executes Python statements stored in a string or a file

Example:

    a = 'print("nihao")'
    b = 'for i in range(10): print(i)'

    exec(a)
    exec(b)

★ execfile(filename[, globals[, locals]]) (Python 2 only; removed in Python 3)

Read and execute a Python script from a file. The globals and locals are dictionaries, defaulting to the current globals and locals. If only globals is given, locals defaults to it.
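Since execfile no longer exists in Python 3, a common substitute is to read the file and pass it to exec. A minimal sketch follows; the script content and the file name `script.py` are made up for the demonstration.

```python
import os
import tempfile

# Write a tiny script to a temporary file (hypothetical content).
script_path = os.path.join(tempfile.mkdtemp(), 'script.py')
with open(script_path, 'w') as f:
    f.write('answer = 6 * 7\n')

# Python 3 stand-in for execfile(script_path, namespace).
namespace = {}
with open(script_path) as f:
    # compile() with the real path keeps tracebacks pointing at the file.
    exec(compile(f.read(), script_path, 'exec'), namespace)

print(namespace['answer'])
```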

II. The paramiko module

paramiko is a module for remote control: with it you can run commands on a remote server or transfer files to and from it. It is worth mentioning that the remote management inside fabric and ansible is implemented with paramiko.

1. Download and install (paramiko depends on pycrypto internally, so download and install pycrypto first)

2. Using the module

    #!/usr/bin/env python
    #coding:utf-8
    # Run a command, authenticating with a user name and password
    import paramiko

    ssh = paramiko.SSHClient()
    ssh.set_missing_host_key_policy(paramiko.AutoAddPolicy())
    ssh.connect('192.168.1.108', 22, 'alex', '123')
    stdin, stdout, stderr = ssh.exec_command('df')
    print(stdout.read())
    ssh.close()

    # Run a command, authenticating with a private key
    import paramiko

    private_key_path = '/home/auto/.ssh/id_rsa'
    key = paramiko.RSAKey.from_private_key_file(private_key_path)

    ssh = paramiko.SSHClient()
    ssh.set_missing_host_key_policy(paramiko.AutoAddPolicy())
    # substitute your own host name, port and user name;
    # the key must be passed as pkey (the fourth positional argument is the password)
    ssh.connect('hostname', 22, 'username', pkey=key)
    stdin, stdout, stderr = ssh.exec_command('df')
    print(stdout.read())
    ssh.close()

    # Upload a file over SFTP, authenticating with a password
    import os,sys
    import paramiko

    t = paramiko.Transport(('182.92.219.86',22))
    t.connect(username='wupeiqi',password='123')
    sftp = paramiko.SFTPClient.from_transport(t)
    sftp.put('/tmp/test.py','/tmp/test.py')
    t.close()

    # Download a file over SFTP, authenticating with a password
    import os,sys
    import paramiko

    t = paramiko.Transport(('182.92.219.86',22))
    t.connect(username='wupeiqi',password='123')
    sftp = paramiko.SFTPClient.from_transport(t)
    sftp.get('/tmp/test.py','/tmp/test2.py')
    t.close()

    # Upload a file over SFTP, authenticating with a private key
    import paramiko

    private_key_path = '/home/auto/.ssh/id_rsa'
    key = paramiko.RSAKey.from_private_key_file(private_key_path)

    t = paramiko.Transport(('182.92.219.86',22))
    t.connect(username='wupeiqi',pkey=key)
    sftp = paramiko.SFTPClient.from_transport(t)
    sftp.put('/tmp/test3.py','/tmp/test3.py')
    t.close()

    # Download a file over SFTP, authenticating with a private key
    import paramiko

    private_key_path = '/home/auto/.ssh/id_rsa'
    key = paramiko.RSAKey.from_private_key_file(private_key_path)

    t = paramiko.Transport(('182.92.219.86',22))
    t.connect(username='wupeiqi',pkey=key)
    sftp = paramiko.SFTPClient.from_transport(t)
    sftp.get('/tmp/test3.py','/tmp/test4.py')
    t.close()

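III. Other commonly used modules:

1. random module:

★ random: generates random numbers

    import random

    print(random.random())          # a float in the range [0, 1)
    print(random.randint(1,3))      # an integer, endpoint included
    print(random.randrange(1,3,2))  # an integer, endpoint excluded

randrange(self, start, stop=None, step=1)

Generating a 5-character random code, for example:

    import random

    a = []
    for i in range(5):
        if i == random.randint(1,5):
            a.append(str(i))
        else:
            a.append(chr(random.randint(65,90)))
    else:
        # the else branch of a for loop runs once the loop finishes without break
        print(''.join(a))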

2. md5, sha and hashlib modules

★ Generating an MD5 digest

Example:

Method 1: the md5 package (Python 2 only)

    import md5

    src = 'this is a md5 test.'
    m1 = md5.new()
    m1.update(src)
    print(m1.hexdigest())

Method 2: the sha package (Python 2 only)

    import sha

    hash = sha.new()
    hash.update('admin')
    print(hash.hexdigest())

Method 3: hashlib

hashlib is used for hashing operations and replaces the md5 and sha modules. It mainly provides the SHA1, SHA224, SHA256, SHA384, SHA512 and MD5 algorithms.

The error "Unicode-objects must be encoded before hashing" means that the data must be encoded to bytes before it is hashed, e.g. with encode("utf8").

    import hashlib

    hash = hashlib.md5()
    hash.update('this is a md5 test.'.encode("utf8"))
    hash.update('admin'.encode("utf8"))
    print(hash.digest())
    print(hash.hexdigest())

    import hashlib

    # ######## md5 ########
    hash = hashlib.md5()
    hash.update('admin'.encode("utf8"))
    print(hash.hexdigest())

    # ######## sha1 ########
    hash = hashlib.sha1()
    hash.update('admin'.encode("utf8"))
    print(hash.hexdigest())

    # ######## sha256 ########
    hash = hashlib.sha256()
    hash.update('admin'.encode("utf8"))
    print(hash.hexdigest())

    # ######## sha384 ########
    hash = hashlib.sha384()
    hash.update('admin'.encode("utf8"))
    print(hash.hexdigest())

    # ######## sha512 ########
    hash = hashlib.sha512()
    hash.update('admin'.encode("utf8"))
    print(hash.hexdigest())

The third method is recommended.

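Notes on the code above:

1. First import the hashlib module

2. Call hashlib.md5() to create an md5 hash object

3. Once the hash object exists, call update() to feed it the string to be hashed

4. Calling update() again continues from the previous state, so the digest covers everything fed in so far

5. digest() returns the result as raw bytes

6. hexdigest() returns the result in hexadecimal

If only a single string needs to be hashed, this can be done in one statement:

    print(hashlib.new("md5", b"Nobody inspects the spammish repetition").hexdigest())

Strong as the algorithms above are, they have a weakness: a digest can be reversed by looking it up in a table of precomputed hashes. It is therefore advisable to mix a custom key into the hash.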

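    import hashlib

    # ######## md5 ########
    hash = hashlib.md5(b'898oaFs09f')
    hash.update(b'admin')
    print(hash.hexdigest())

Still not enough? Python also has an hmac module, which further processes the key and the content we supply before hashing them.

    import hmac

    # digestmod must be given explicitly on Python 3.8+; md5 was the Python 2 default
    h = hmac.new(b'wueiqi', digestmod='md5')
    h.update(b'hellowo')
    print(h.hexdigest())

It doesn't get any better than that!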
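A short sketch of keyed hashing in Python 3 follows. The helper name `sign` and the choice of SHA-256 are my assumptions rather than anything from the notes above; `hmac.new` and `hmac.compare_digest` are the standard-library calls.

```python
import hashlib
import hmac

def sign(key: bytes, message: bytes) -> str:
    """Return the hex HMAC-SHA256 of message under key (hypothetical helper)."""
    return hmac.new(key, message, digestmod=hashlib.sha256).hexdigest()

key = b'898oaFs09f'   # example key reused from the notes
tag = sign(key, b'admin')

# Compare digests in constant time rather than with ==.
is_valid = hmac.compare_digest(tag, sign(key, b'admin'))
is_forged = hmac.compare_digest(tag, sign(b'wrong-key', b'admin'))
print(tag, is_valid, is_forged)
```

Without the key, an attacker cannot recompute the digest from a table of plain hashes, which is the weakness the custom key addresses.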

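3. pickle and json modules:

★ Serializing and deserializing Python objects to and from files (pickle and json)

Two modules are used for serialization:

- json converts between strings and Python data types

- pickle converts between a Python-specific format and Python data types

The json module provides four functions: dumps, dump, loads, load

The pickle module provides four functions: dumps, dump, loads, load

The pickle module implements serialization and deserialization of Python objects. It usually serializes a Python object into a binary stream or file.

To serialize and deserialize between Python objects and files:

pickle.dump()

pickle.load()

To serialize and deserialize between Python objects and byte strings, use:

pickle.dumps()

pickle.loads()

The types that can be pickled are:

* None, True and False;

* integers, floating point numbers, complex numbers;

* strings, bytes, bytearrays;

* tuples, lists, sets and dictionaries containing only picklable objects;

* functions defined at the top level of a module;

* built-in functions defined at the top level of a module;

* classes defined at the top level of a module;

* instances of classes whose __dict__, or the result of calling __getstate__(), is picklable;

Note: functions and classes are pickled by name, so the corresponding module must be importable when unpickling.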
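The in-memory pair dumps/loads can be sketched like this. The `record` dictionary is made-up sample data.

```python
import pickle

record = {'name': 'sky', 'scores': [1, 2.0, 3], 'tags': ('a', 'b')}

blob = pickle.dumps(record)     # Python object -> bytes
restored = pickle.loads(blob)   # bytes -> a new, equal Python object

print(type(blob).__name__, restored == record, restored is record)
```

Unlike JSON, the tuple comes back as a tuple. Only unpickle data from sources you trust, because loading a pickle can execute arbitrary code.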

Example:

    import pickle

    class test():
        def __init__(self,n):
            self.a = n

    t = test(123)
    t2 = test('abc')

    a_list = ['sky','mobi','mopo']
    a_dict = {'a':1,'b':2,'3':'c'}

    with open('test.pickle','wb') as f:
        pickle.dump(t,f)
        pickle.dump(a_list,f)
        pickle.dump(t2,f)

    with open('test.pickle','rb') as g:
        gg = pickle.load(g)
        print(gg.a)
        hh = pickle.load(g)
        print(hh[1])
        ii = pickle.load(g)
        print(ii.a)

Note: each dump is matched by one load, and the order is preserved.

★JSON(JavaScript Object Notation):一種輕量級數據交換格式,相對于XML而言更簡單,也易于閱讀和編寫,機器也方便解析和生成,Json是JavaScript中的一個子集。

Python的Json模塊序列化與反序列化的過程分別是 encoding和 decoding

encoding:把一個Python對象編碼轉換成Json字符串

decoding:把Json格式字符串解碼轉換成Python對象

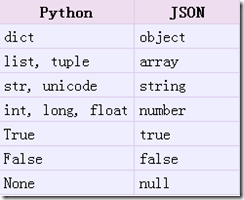

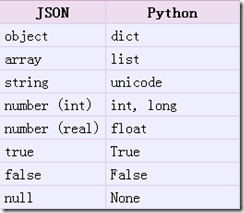

具體的轉化對照如下:

loads方法返回了原始的對象,但是仍然發生了一些數據類型的轉化。比如,上例中‘abc’轉化為了unicode類型。從json到python的類型轉化對照如下:

例:

1 import json 2 3 data = { 'a': [1, 2.0, 3, 4], 'b': ("character string", "byte string"), 'c': 'abc'} 4 5 du = json.dumps(data) 6 print(du) 7 print(json.loads(du,encoding='ASCII')) 8 9 with open('data.json','w') as f: 10 json.dump(data,f,indent=2,sort_keys=True,separators=(',',':'))

#f.write(json.dumps(data))

11

12 with open('data.json','r') as f:

13 data = json.load(f)

14 print(repr(data))

注:json并不像pickle一樣,將python對象序列化為二進制流或文件,所以寫或讀不能加b;

同時只能針對一個對象或類型進行dump和load,不像pickle可以多個。

sort_keys是告訴編碼器按照字典排序(a到z)輸出

indent參數根據數據格式縮進顯示,讀起來更加清晰:

separators參數的作用是去掉,,:后面的空格,從上面的輸出結果都能看到", :"后面都有個空格,這都是為了美化輸出結果的作用,但是在我們傳輸數據的過程中,越精簡越好,冗余的東西全部去掉

經測試,2.7版本導出的json文件,3.4版本導入會報錯:TypeError: the JSON object must be str, not 'bytes'
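The type conversions mentioned above can be seen in a small round trip. This is a sketch on sample data of my own, with one integer key added to show key conversion.

```python
import json

data = {'a': [1, 2.0, 3, 4], 'b': ('character string', 'byte string'), 'c': 'abc', 1: None}

compact = json.dumps(data, separators=(',', ':'))   # no spaces after , and :
pretty = json.dumps(data, indent=2)                 # human-readable layout

decoded = json.loads(compact)
print(compact)
print(decoded)
```

After the round trip the tuple has become a list, the integer key 1 has become the string '1', and None has passed through JSON null and back to None.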

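4. Regular expression module:

The re module provides regular expression operations in Python.

Characters:

. matches any character except a newline

\w matches a letter, digit, underscore or Chinese character

\s matches any whitespace character

\d matches a digit

\b matches the start or end of a word

^ matches the start of the string

$ matches the end of the string

Repetition:

* repeats zero or more times

+ repeats one or more times

? repeats zero times or once

{n} repeats n times

{n,} repeats n or more times

{n,m} repeats n to m times

IP address:

^(25[0-5]|2[0-4]\d|[0-1]?\d?\d)(\.(25[0-5]|2[0-4]\d|[0-1]?\d?\d)){3}$

Mobile phone number (inside a character class "|" is a literal character, so the alternatives are simply listed):

^1[3458][0-9]\d{8}$

★ re.match has the prototype re.match(pattern, string, flags)

The first argument is the regular expression, for example "(\w+)\s". If the match succeeds a Match object is returned, otherwise None;

The second argument is the string to match against;

The third argument is the flags, which control how matching is done, e.g. case sensitivity, multi-line matching and so on.

★ re.search has the prototype re.search(pattern, string, flags)

Each argument means the same as for re.match.

Difference between re.match and re.search: re.match matches only at the start of the string; if the start of the string does not fit the pattern, the match fails and the function returns None. re.search scans the whole string until it finds a match.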
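The difference is easy to see on a string whose first characters do not fit the pattern. This is a sketch with a made-up sample string.

```python
import re

text = 'order 66 shipped'

at_start = re.match(r'\d+', text)    # the digits are not at position 0
anywhere = re.search(r'\d+', text)   # scans forward until something matches
word = re.match(r'\w+', text)        # the string does start with a word

print(at_start, anywhere.group(), word.group())
```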

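★ re.findall returns all matching substrings of a string. For example, re.findall(r'\w*oo\w*', text) returns every word in the string that contains 'oo'.

★ re.sub has the prototype re.sub(pattern, repl, string, count)

The second argument is the replacement string, for example '-'

The fourth argument is the number of replacements. The default is 0, which means every match is replaced.

re.sub also accepts a function, so that replacements can be computed from each match. For example, re.sub(r'\s', lambda m: '[' + m.group(0) + ']', text, 0) replaces each space ' ' in the string with '[ ]'.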
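Both calls can be tried on short sample strings. The strings here are made up for the sketch.

```python
import re

sentence = 'a good book about zebras'
oo_words = re.findall(r'\w*oo\w*', sentence)   # every word containing "oo"

text = 'a b c'
# A function as repl receives the match object and returns the replacement.
bracketed = re.sub(r'\s', lambda m: '[' + m.group(0) + ']', text)
# count=1 limits the substitution to the first match only.
first_only = re.sub(r'\s', lambda m: '[' + m.group(0) + ']', text, count=1)

print(oo_words, bracketed, first_only)
```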

★ re.split splits a string. For example, re.split(r'\s+', text) splits the string on whitespace into a list of words.

Splitting on a specified pattern:

    import re

    content = "'1 - 2 * ((60-30+1*(9-2*5/3+7/3*99/4*2998+10*568/14))-(-4*3)/(16-3*2) )'"
    new_content = re.split(r'\*', content)
    # new_content = re.split(r'\*', content, 1)
    print(new_content)

    content = "'1 - 2 * ((60-30+1*(9-2*5/3+7/3*99/4*2998+10*568/14))-(-4*3)/(16-3*2) )'"
    new_content = re.split(r'[\+\-\*\/]+', content)
    # new_content = re.split(r'\*', content, 1)
    print(new_content)

    inpp = '1-2*((60-30 +(-40-5)*(9-2*5/3 + 7 /3*99/4*2998 +10 * 568/14 )) - (-4*3)/ (16-3*2))'
    inpp = re.sub(r'\s+','',inpp)  # strip all whitespace first
    new_content = re.split(r'\(([\+\-\*\/]?\d+[\+\-\*\/]?\d+){1}\)', inpp, 1)
    print(new_content)

★ re.compile compiles a regular expression into a regular expression object. Frequently used patterns can be compiled once into such objects, which improves efficiency somewhat. Here is an example of a regular expression object:

Example:

    import re

    r = re.compile(r'\d+')

    r1 = r.match('adfaf123asdf1asf1123aa')
    if r1:
        print(r1.group())
    else:
        print('no match')

    r2 = r.search('adfaf123asdf1asf1123aa')
    if r2:
        print(r2.group())
        print(r2.groups())
    else:
        print('no match')

    r3 = r.findall('adfaf123asdf1asf1123aa')
    if r3:
        print(r3)
    else:
        print('no match')

    r4 = r.sub('###','adfaf123asdf1asf1123aa')
    print(r4)

    r5 = r.subn('###','adfaf123asdf1asf1123aa')
    print(r5)

    r6 = r.split('adfaf123asdf1asf1123aa',maxsplit=2)
    print(r6)

Note: re works in two steps: first it compiles the pattern, then it executes it. Compiling the pattern once with re.compile means later operations do not need to compile it again, which improves efficiency.

匹配IP具體實例:

ip = '12aa13.12.15aasdfa12.32aasdf192.168.12.13asdfafasf12abadaf12.13'

res = re.findall('(\d{1,3}\.\d{1,3}\.\d{1,3}\.\d{1,3})',ip)

print(res)

res1 = re.findall('(?:\d{1,3}\.){3}\d{1,3}',ip)

print(res1)

而group,groups 主要是針對查詢的字符串是否分組,一般只是針對search和match,即'\d+' 和('\d+') 輸出結果為:

123 和('123',)。

import re a = 'Oldboy School,Beijing Changping shahe:010-8343245' match = re.search(r'(\D+),(\D+):(\S+)',a) print(match.group(1)) print(match.group(2)) print(match.group(3)) print("##########################") match2 = re.search(r'(?P<name>\D+),(?P<address>\D+):(?P<phone>\S+)',a) print(match2.group('name')) print(match2.group('address')) print(match2.group('phone'))

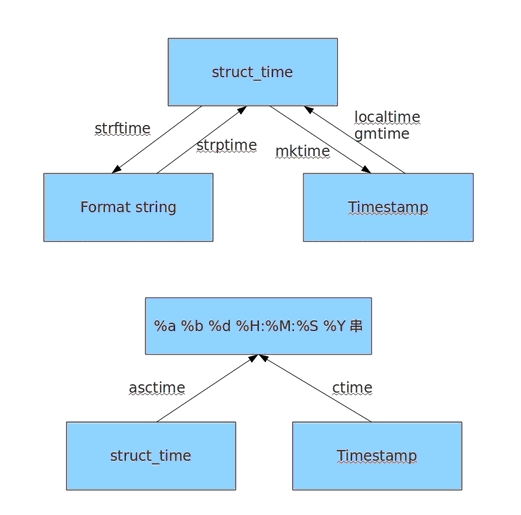

5. time module

The time module provides functions for working with time. Time is represented in three ways:

#1. Timestamp: seconds elapsed since 1 January 1970

#2. Tuple containing year, day, weekday and so on: time.struct_time

#3. Formatted string: 2014-11-11 11:11

    import time
    import datetime

    # print(time.clock())  # processor time; deprecated since 3.3 and removed in 3.8
    print(time.process_time())  # processor time; added in 3.3 to replace time.clock()
    print(time.time())  # current system timestamp
    print(time.ctime())  # e.g. Tue Jan 26 18:23:48 2016, the current system time
    print(time.ctime(time.time()-86640))  # convert a timestamp to a string
    print(time.gmtime(time.time()-86640))  # convert a timestamp to struct_time (UTC)
    print(time.localtime(time.time()-86640))  # convert a timestamp to struct_time, in local time
    print(time.mktime(time.localtime()))  # inverse of time.localtime(): struct_time back to a timestamp
    #time.sleep(4)  # sleep
    print(time.strftime("%Y-%m-%d %H:%M:%S",time.gmtime()))  # format a struct_time as a string
    print(time.strptime("2016-01-28","%Y-%m-%d"))  # parse a string into struct_time

    #datetime module
    print(datetime.date.today())  # prints e.g. 2016-01-26
    print(datetime.date.fromtimestamp(time.time()-864400))  # e.g. 2016-01-16; convert a timestamp to a date
    current_time = datetime.datetime.now()
    print(current_time)  # prints e.g. 2016-01-26 19:04:30.335935
    print(current_time.timetuple())  # returns struct_time
    #datetime.replace([year[, month[, day[, hour[, minute[, second[, microsecond[, tzinfo]]]]]]]])
    print(current_time.replace(2014,9,12))  # e.g. 2014-09-12 19:06:24.074900; the current time with the given fields replaced
    str_to_date = datetime.datetime.strptime("21/11/06 16:30", "%d/%m/%y %H:%M")  # parse a string into a datetime
    new_date = datetime.datetime.now() + datetime.timedelta(days=10)  # 10 days from now
    new_date = datetime.datetime.now() + datetime.timedelta(days=-10)  # 10 days ago
    new_date = datetime.datetime.now() + datetime.timedelta(hours=-10)  # 10 hours ago
    new_date = datetime.datetime.now() + datetime.timedelta(seconds=120)  # 120 seconds from now
    print(new_date)

6. shutil module

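A high-level module for handling files, directories and archives

shutil.copyfileobj(fsrc, fdst[, length])

Copies the contents of one file object into another; the optional length is the buffer size, so the data is copied in chunks

shutil.copyfile(src, dst): copies a file

shutil.copymode(src, dst)

Copies the permission bits only. Content, group and owner are unchanged

shutil.copystat(src, dst)

Copies the status information: mode bits, atime, mtime, flags

shutil.copy(src, dst)

Copies the file and its permissions

shutil.copy2(src, dst)

Copies the file and its status information

shutil.ignore_patterns(*patterns)

shutil.copytree(src, dst, symlinks=False, ignore=None)

Recursively copies a directory tree

For example: copytree(source, destination, ignore=ignore_patterns('*.pyc', 'tmp*'))

shutil.rmtree(path[, ignore_errors[, onerror]])

Recursively deletes a directory tree

shutil.move(src, dst)

Recursively moves a file or directory

shutil.make_archive(base_name, format,...)

Creates an archive (e.g. zip or tar) and returns its path

- base_name: file name of the archive, which may also include a path. With a bare file name the archive is saved in the current directory, otherwise in the given path,

for example: www => saved in the current directory

for example: /Users/wupeiqi/www => saved in /Users/wupeiqi/

- format: archive type, "zip", "tar", "bztar" or "gztar"

- root_dir: path of the directory to archive (defaults to the current directory)

- owner: user, defaults to the current user

- group: group, defaults to the current group

- logger: used for logging, usually a logging.Logger object

    # Archive the files under /Users/wupeiqi/Downloads/test into the program's current directory
    import shutil
    ret = shutil.make_archive("wwwwwwwwww", 'gztar', root_dir='/Users/wupeiqi/Downloads/test')

    # Archive the files under /Users/wupeiqi/Downloads/test into /Users/wupeiqi/
    import shutil
    ret = shutil.make_archive("/Users/wupeiqi/wwwwwwwwww", 'gztar', root_dir='/Users/wupeiqi/Downloads/test')

shutil handles archives by calling the zipfile and tarfile modules.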
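For a version that can be run anywhere, here is a sketch that archives a throwaway directory and unpacks it again. The directory and file names are invented for the demo, and `shutil.unpack_archive` is the standard-library counterpart of `make_archive`.

```python
import os
import shutil
import tempfile

work = tempfile.mkdtemp()               # scratch area for the demo
src = os.path.join(work, 'test')
os.makedirs(src)
with open(os.path.join(src, 'a.txt'), 'w') as f:
    f.write('hello')

# base_name has no extension; make_archive appends it and returns the full path.
archive = shutil.make_archive(os.path.join(work, 'backup'), 'zip', root_dir=src)

out = os.path.join(work, 'restored')
shutil.unpack_archive(archive, out)
restored = sorted(os.listdir(out))
print(os.path.basename(archive), restored)

shutil.rmtree(work)                     # clean up the scratch area
```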

7. ConfigParser

Used to work with configuration files in INI format. The module was renamed configparser in Python 3.x.

1. Basic reading of a configuration file

-read(filename): reads the contents of an ini file

-sections(): returns all sections as a list

-options(section): returns all options of the section

-items(section): returns all key-value pairs of the section

-get(section,option): returns the value of option in section as a string

-getint(section,option): returns the value of option in section as an int; there are matching getboolean() and getfloat() functions.

2. Basic writing of a configuration file

-add_section(section): adds a new section

-set(section, option, value): sets option in section; write must be called to save the changes to the configuration file.

3. Python's ConfigParser module defines 3 classes for working with INI files.

They are RawConfigParser, ConfigParser and SafeConfigParser.

RawConfigParser is the most basic class for reading INI files;

ConfigParser and SafeConfigParser support interpolation of %(value)s variables.

Given the configuration file test.conf

    [portal]
    url = http://%(host)s:%(port)s/Portal
    host = localhost
    port = 8080

Using RawConfigParser:

    import ConfigParser

    file1 = ConfigParser.RawConfigParser()
    file1.read('test.conf')
    print(file1.get('portal','url'))

Terminal output:

    http://%(host)s:%(port)s/Portal

Using ConfigParser:

    import ConfigParser

    file2 = ConfigParser.ConfigParser()
    file2.read('test.conf')
    print(file2.get('portal','url'))

Terminal output:

    http://localhost:8080/Portal

Using SafeConfigParser:

    import ConfigParser

    file3 = ConfigParser.SafeConfigParser()
    file3.read('test.conf')
    print(file3.get('portal','url'))

Terminal output (same result as ConfigParser):

    http://localhost:8080/Portal
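On Python 3 the same comparison can be sketched with the renamed configparser module. Here the configuration is read from a string with `read_string` so that no file is needed; that is a convenience for this sketch, and `read('test.conf')` works the same way.

```python
import configparser

CONF = """
[portal]
url = http://%(host)s:%(port)s/Portal
host = localhost
port = 8080
"""

raw = configparser.RawConfigParser()   # no interpolation
raw.read_string(CONF)

cp = configparser.ConfigParser()       # %(name)s interpolation enabled by default
cp.read_string(CONF)

raw_url = raw.get('portal', 'url')
url = cp.get('portal', 'url')
port = cp.getint('portal', 'port')
print(raw_url, url, port)
```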

An example:

Configuration file i.cfg:

    # comment 1
    ; comment 2

    [section1]
    k1 = v1
    k2:v2

    [section2]
    k1 = v1

Code (section and option names adjusted to match the file above):

    import ConfigParser

    config = ConfigParser.ConfigParser()
    config.read('i.cfg')

    # ########## read ##########
    #secs = config.sections()
    #print(secs)
    #options = config.options('section2')
    #print(options)

    #item_list = config.items('section2')
    #print(item_list)

    #val = config.get('section1','k1')
    #val = config.getint('section1','k1')  # only valid when the value is an integer

    # ########## modify ##########
    #sec = config.remove_section('section1')
    #config.write(open('i.cfg', "w"))

    #sec = config.has_section('wupeiqi')
    #sec = config.add_section('wupeiqi')
    #config.write(open('i.cfg', "w"))

    #config.set('section2','k1',11111)
    #config.write(open('i.cfg', "w"))

    #config.remove_option('section2','k1')
    #config.write(open('i.cfg', "w"))

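8. logging module:

A module for convenient, thread-safe logging

1. Simply printing logs to the screen

By default, logging prints logs to the screen at level WARNING;

The log levels are ordered CRITICAL > ERROR > WARNING > INFO > DEBUG > NOTSET, and you can also define your own levels.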
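A minimal sketch of the level threshold follows. It assumes a throwaway logger named `demo.levels` that writes to an in-memory stream, so the result can be inspected instead of appearing on the screen. Both choices are mine, made only for the demonstration.

```python
import io
import logging

stream = io.StringIO()                        # stands in for the screen
logger = logging.getLogger('demo.levels')     # hypothetical logger name
logger.propagate = False                      # keep the demo away from the root logger
logger.addHandler(logging.StreamHandler(stream))
logger.setLevel(logging.WARNING)              # same threshold as the default

logger.debug('this is a debug message')       # below WARNING: dropped
logger.info('this is an info message')        # below WARNING: dropped
logger.warning('this is a warning message')   # emitted
logger.error('this is an error message')      # emitted

output = stream.getvalue()
print(output)
```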

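2. Configuring the log format and destination with logging.basicConfig

Parameters of logging.basicConfig:

filename: name of the log file

filemode: same meaning as the mode argument of open(); the mode in which the log file is opened, 'w' or 'a'

format: format and content of the output; format can carry a lot of useful information:

%(levelno)s: numeric value of the log level

%(levelname)s: name of the log level

%(pathname)s: full path of the source file that issued the logging call

%(filename)s: file name of the source file that issued the logging call

%(funcName)s: function that issued the logging call

%(lineno)d: line number of the logging call

%(asctime)s: time of the log record

%(thread)d: thread ID

%(threadName)s: thread name

%(process)d: process ID

%(message)s: the log message

datefmt: time format, same as for time.strftime()

level: log level, defaults to logging.WARNING

stream: output stream for the log; it can be sys.stderr, sys.stdout or a file, and defaults to sys.stderr. When stream and filename are both given, stream is ignored in Python 2, while Python 3 raises ValueError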
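A sketch of a basicConfig call that uses several of these parameters follows. The log path is a temporary file invented for the demo, and `force=True` (Python 3.8+) is an addition of mine so that the call takes effect even if the root logger was configured earlier.

```python
import logging
import os
import tempfile

log_path = os.path.join(tempfile.mkdtemp(), 'myapp.log')   # hypothetical log file

logging.basicConfig(
    level=logging.DEBUG,
    format='%(asctime)s %(filename)s[line:%(lineno)d] %(levelname)s %(message)s',
    datefmt='%a, %d %b %Y %H:%M:%S',
    filename=log_path,
    filemode='w',
    force=True,   # Python 3.8+: replace any handlers already on the root logger
)

logging.debug('This is debug message')
logging.warning('This is warning message')
logging.shutdown()                                          # flush and close the file

with open(log_path) as f:
    lines = f.read().splitlines()
print(lines)
```

With level=logging.DEBUG, both records reach the file, each prefixed by the timestamp, source file and line number from the format string.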

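3. Logging to a file and to the screen at the same time

4. Log rotation with logging

In both cases logging has one main log-handling object, and every other output is attached to it through addHandler.

The main handlers in logging are:

logging.StreamHandler: logs to a stream, which can be sys.stderr, sys.stdout or a file

Log rotation: in practice use RotatingFileHandler and TimedRotatingFileHandler

logging.handlers.SocketHandler: sends logs to remote TCP/IP sockets

Because StreamHandler and FileHandler are the most commonly used handlers, they are included directly in the logging module, while the others live in the logging.handlers module.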
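Here is a sketch of one logger with two handlers attached through addHandler, one of them rotating. The logger name, file name and the small maxBytes value are assumptions chosen so that a rotation happens within a few messages. An in-memory stream stands in for the screen.

```python
import io
import logging
import logging.handlers
import os
import tempfile

log_dir = tempfile.mkdtemp()
log_path = os.path.join(log_dir, 'app.log')       # hypothetical log file

logger = logging.getLogger('demo.handlers')       # hypothetical logger name
logger.setLevel(logging.INFO)
logger.propagate = False

# Roll over when the file would reach about 200 bytes; keep at most 2 old files.
rotating = logging.handlers.RotatingFileHandler(log_path, maxBytes=200, backupCount=2)
console_buf = io.StringIO()
console = logging.StreamHandler(console_buf)      # use sys.stdout for a real screen

formatter = logging.Formatter('%(levelname)s %(message)s')
for handler in (rotating, console):
    handler.setFormatter(formatter)
    logger.addHandler(handler)

for i in range(20):
    logger.info('message number %d', i)           # each record goes to both handlers

rotating.close()
files = sorted(os.listdir(log_dir))
print(files)
```

Old files are renamed with numeric suffixes (app.log.1 and so on), and the oldest is discarded once backupCount is exceeded.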

For how to use the other handlers mentioned above, see the Python 2.5 manual!

5. Configuring logging with the logging.config module

Example 3 above:

Example 4 above:

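9. shelve module

shelve is a simple key-value module that persists in-memory data to a file; it can persist any Python data that pickle supports

shelve is a simple data storage solution. It has a single function, open(), which takes a file name and returns a shelf object. You can store things in it and treat it simply as a dictionary; when you have finished storing, call close() to close it

    import shelve

    d = shelve.open('shelve_file')  # open a file

    class Test(object):
        def __init__(self,n):
            self.a = n

    t = Test(123)
    t2 = Test(123334)

    name = ["alex","rain","test"]
    d["test"] = name  # persist a list
    d["t1"] = t       # persist a class instance
    d["t2"] = t2

    d.close()

    f = shelve.open('shelve_file')
    print(f['t1'].a)
    print(f['test'])
    f.close()  # the original "f.close" without parentheses never closed the shelf

This creates three files, shelve_file.dat, shelve_file.dir and shelve_file.bak, to hold the data (the exact files depend on the dbm backend in use)

Pay particular attention to the writeback=True parameter

For details see the source of the shelve module: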

    """Manage shelves of pickled objects.

    A "shelf" is a persistent, dictionary-like object.  The difference
    with dbm databases is that the values (not the keys!) in a shelf can
    be essentially arbitrary Python objects -- anything that the "pickle"
    module can handle.  This includes most class instances, recursive data
    types, and objects containing lots of shared sub-objects.  The keys
    are ordinary strings.

    To summarize the interface (key is a string, data is an arbitrary
    object):

            import shelve
            d = shelve.open(filename) # open, with (g)dbm filename -- no suffix

            d[key] = data   # store data at key (overwrites old data if
                            # using an existing key)
            data = d[key]   # retrieve a COPY of the data at key (raise
                            # KeyError if no such key) -- NOTE that this
                            # access returns a *copy* of the entry!
            del d[key]      # delete data stored at key (raises KeyError
                            # if no such key)
            flag = key in d # true if the key exists
            list = d.keys() # a list of all existing keys (slow!)

            d.close()       # close it

    Dependent on the implementation, closing a persistent dictionary may
    or may not be necessary to flush changes to disk.

    Normally, d[key] returns a COPY of the entry.  This needs care when
    mutable entries are mutated: for example, if d[key] is a list,
            d[key].append(anitem)
    does NOT modify the entry d[key] itself, as stored in the persistent
    mapping -- it only modifies the copy, which is then immediately
    discarded, so that the append has NO effect whatsoever.  To append an
    item to d[key] in a way that will affect the persistent mapping, use:
            data = d[key]
            data.append(anitem)
            d[key] = data

    To avoid the problem with mutable entries, you may pass the keyword
    argument writeback=True in the call to shelve.open.  When you use:
            d = shelve.open(filename, writeback=True)
    then d keeps a cache of all entries you access, and writes them all back
    to the persistent mapping when you call d.close().  This ensures that
    such usage as d[key].append(anitem) works as intended.

    However, using keyword argument writeback=True may consume vast amount
    of memory for the cache, and it may make d.close() very slow, if you
    access many of d's entries after opening it in this way: d has no way to
    check which of the entries you access are mutable and/or which ones you
    actually mutate, so it must cache, and write back at close, all of the
    entries that you access.  You can call d.sync() to write back all the
    entries in the cache, and empty the cache (d.sync() also synchronizes
    the persistent dictionary on disk, if feasible).
    """

    from pickle import Pickler, Unpickler
    from io import BytesIO

    import collections

    __all__ = ["Shelf", "BsdDbShelf", "DbfilenameShelf", "open"]

    class _ClosedDict(collections.MutableMapping):
        'Marker for a closed dict.  Access attempts raise a ValueError.'

        def closed(self, *args):
            raise ValueError('invalid operation on closed shelf')
        __iter__ = __len__ = __getitem__ = __setitem__ = __delitem__ = keys = closed

        def __repr__(self):
            return '<Closed Dictionary>'


    class Shelf(collections.MutableMapping):
        """Base class for shelf implementations.

        This is initialized with a dictionary-like object.
        See the module's __doc__ string for an overview of the interface.
        """

        def __init__(self, dict, protocol=None, writeback=False,
                     keyencoding="utf-8"):
            self.dict = dict
            if protocol is None:
                protocol = 3
            self._protocol = protocol
            self.writeback = writeback
            self.cache = {}
            self.keyencoding = keyencoding

        def __iter__(self):
            for k in self.dict.keys():
                yield k.decode(self.keyencoding)

        def __len__(self):
            return len(self.dict)

        def __contains__(self, key):
            return key.encode(self.keyencoding) in self.dict

        def get(self, key, default=None):
            if key.encode(self.keyencoding) in self.dict:
                return self[key]
            return default

        def __getitem__(self, key):
            try:
                value = self.cache[key]
            except KeyError:
                f = BytesIO(self.dict[key.encode(self.keyencoding)])
                value = Unpickler(f).load()
                if self.writeback:
                    self.cache[key] = value
            return value

        def __setitem__(self, key, value):
            if self.writeback:
                self.cache[key] = value
            f = BytesIO()
            p = Pickler(f, self._protocol)
            p.dump(value)
            self.dict[key.encode(self.keyencoding)] = f.getvalue()

        def __delitem__(self, key):
            del self.dict[key.encode(self.keyencoding)]
            try:
                del self.cache[key]
            except KeyError:
                pass

        def __enter__(self):
            return self

        def __exit__(self, type, value, traceback):
            self.close()

        def close(self):
            self.sync()
            try:
                self.dict.close()
            except AttributeError:
                pass
            # Catch errors that may happen when close is called from __del__
            # because CPython is in interpreter shutdown.
            try:
                self.dict = _ClosedDict()
            except (NameError, TypeError):
                self.dict = None

        def __del__(self):
            if not hasattr(self, 'writeback'):
                # __init__ didn't succeed, so don't bother closing
                # see http://bugs.python.org/issue1339007 for details
                return
            self.close()

        def sync(self):
            if self.writeback and self.cache:
                self.writeback = False
                for key, entry in self.cache.items():
                    self[key] = entry
                self.writeback = True
                self.cache = {}
            if hasattr(self.dict, 'sync'):
                self.dict.sync()


    class BsdDbShelf(Shelf):
        """Shelf implementation using the "BSD" db interface.

        This adds methods first(), next(), previous(), last() and
        set_location() that have no counterpart in [g]dbm databases.

        The actual database must be opened using one of the "bsddb"
        modules "open" routines (i.e. bsddb.hashopen, bsddb.btopen or
        bsddb.rnopen) and passed to the constructor.

        See the module's __doc__ string for an overview of the interface.
        """

        def __init__(self, dict, protocol=None, writeback=False,
                     keyencoding="utf-8"):
            Shelf.__init__(self, dict, protocol, writeback, keyencoding)

        def set_location(self, key):
            (key, value) = self.dict.set_location(key)
            f = BytesIO(value)
            return (key.decode(self.keyencoding), Unpickler(f).load())

        def next(self):
            (key, value) = next(self.dict)
            f = BytesIO(value)
            return (key.decode(self.keyencoding), Unpickler(f).load())

        def previous(self):
            (key, value) = self.dict.previous()
            f = BytesIO(value)
            return (key.decode(self.keyencoding), Unpickler(f).load())

        def first(self):
            (key, value) = self.dict.first()
            f = BytesIO(value)
            return (key.decode(self.keyencoding), Unpickler(f).load())

        def last(self):
            (key, value) = self.dict.last()
            f = BytesIO(value)
            return (key.decode(self.keyencoding), Unpickler(f).load())


    class DbfilenameShelf(Shelf):
        """Shelf implementation using the "dbm" generic dbm interface.

        This is initialized with the filename for the dbm database.
        See the module's __doc__ string for an overview of the interface.
        """

        def __init__(self, filename, flag='c', protocol=None, writeback=False):
            import dbm
            Shelf.__init__(self, dbm.open(filename, flag), protocol, writeback)


    def open(filename, flag='c', protocol=None, writeback=False):
        """Open a persistent dictionary for reading and writing.

        The filename parameter is the base filename for the underlying
        database.  As a side-effect, an extension may be added to the
        filename and more than one file may be created.  The optional flag
        parameter has the same interpretation as the flag parameter of
        dbm.open(). The optional protocol parameter specifies the
        version of the pickle protocol (0, 1, or 2).

        See the module's __doc__ string for an overview of the interface.
        """

        return DbfilenameShelf(filename, flag, protocol, writeback)

shelve module source

    >>> import shelve
    >>> s = shelve.open('test.dat')
    >>> s['x'] = ['a', 'b', 'c']
    >>> s['x'].append('d')
    >>> s['x']
    ['a', 'b', 'c']
    >>> temp = s['x']
    >>> temp.append('d')
    >>> s['x'] = temp
    >>> s['x']
    ['a', 'b', 'c', 'd']
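The writeback=True alternative to the temp-variable pattern can be sketched as follows. The shelf lives in a temporary directory made up for the demo, and the `with` form of shelve.open requires Python 3.4 or later.

```python
import os
import shelve
import tempfile

path = os.path.join(tempfile.mkdtemp(), 'shelve_demo')   # hypothetical shelf file

with shelve.open(path) as d:
    d['x'] = ['a', 'b', 'c']
    d['x'].append('d')            # mutates a temporary copy: the change is lost
    without_writeback = d['x']

with shelve.open(path, writeback=True) as d:
    d['x'].append('d')            # cached entry is written back when the shelf closes

with shelve.open(path) as d:
    with_writeback = d['x']

print(without_writeback, with_writeback)
```

As the module docstring warns, writeback=True caches every entry that is accessed, so it costs memory and can make close() slow on large shelves.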

    #database.py
    # Python 3 version: raw_input() became input() and print became a function
    import sys, shelve

    def store_person(db):
        """
        Query user for data and store it in the shelf object
        """
        pid = input('Enter unique ID number: ')
        person = {}
        person['name'] = input('Enter name: ')
        person['age'] = input('Enter age: ')
        person['phone'] = input('Enter phone number: ')
        db[pid] = person

    def lookup_person(db):
        """
        Query user for ID and desired field, and fetch the corresponding data from
        the shelf object
        """
        pid = input('Enter ID number: ')
        field = input('What would you like to know? (name, age, phone) ')
        field = field.strip().lower()
        print(field.capitalize() + ':', db[pid][field])

    def print_help():
        print('The available commands are: ')
        print('store  :Stores information about a person')
        print('lookup :Looks up a person from ID number')
        print('quit   :Save changes and exit')
        print('?      :Print this message')

    def enter_command():
        cmd = input('Enter command (? for help): ')
        cmd = cmd.strip().lower()
        return cmd

    def main():
        database = shelve.open('database.dat')
        try:
            while True:
                cmd = enter_command()
                if cmd == 'store':
                    store_person(database)
                elif cmd == 'lookup':
                    lookup_person(database)
                elif cmd == '?':
                    print_help()
                elif cmd == 'quit':
                    return
        finally:
            database.close()

    if __name__ == '__main__': main()

shelve database example

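10. xml module

XML is a protocol for exchanging data between different languages or programs. It is much like JSON, though JSON is simpler to use. In the dark ages before JSON was born, however, everyone had no choice but to use XML, and to this day the interfaces of many systems at traditional companies, for example in finance, are still mainly XML.

The XML format looks like this; data structure is expressed through <> nodes:

    <?xml version="1.0"?>
    <data>
        <country name="Liechtenstein">
            <rank updated="yes">2</rank>
            <year>2008</year>
            <gdppc>141100</gdppc>
            <neighbor name="Austria" direction="E"/>
            <neighbor name="Switzerland" direction="W"/>
        </country>
        <country name="Singapore">
            <rank updated="yes">5</rank>
            <year>2011</year>
            <gdppc>59900</gdppc>
            <neighbor name="Malaysia" direction="N"/>
        </country>
        <country name="Panama">
            <rank updated="yes">69</rank>
            <year>2011</year>
            <gdppc>13600</gdppc>
            <neighbor name="Costa Rica" direction="W"/>
            <neighbor name="Colombia" direction="E"/>
        </country>
    </data>

xml file format

XML is supported in every language; in Python the xml module can be used to work with it.

In practice, XML configuration files often need to be modified on the fly:

1. adding or deleting certain nodes

2. adding, deleting or modifying certain attributes of a node

3. adding, deleting or modifying the text of certain nodes

Approach:

Using ElementTree, first read the file and parse it into a tree; each node of the tree can then be located by its path and modified; finally the tree is written out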
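The approach can be condensed into a few lines. This sketch parses a shortened copy of the sample document from a string with `ET.fromstring`, a shortcut I chose so the example needs no file, where a real script would parse a file and write the tree back out.

```python
import xml.etree.ElementTree as ET

XML = """<data>
    <country name="Singapore">
        <rank updated="yes">5</rank>
        <neighbor name="Malaysia" direction="N"/>
    </country>
</data>"""

root = ET.fromstring(XML)                 # parse into a tree

# Modify text and attributes of the nodes found by tag name.
for rank in root.iter('rank'):
    rank.text = str(int(rank.text) + 1)
    rank.set('updated', 'no')

# Delete child nodes selected by an attribute value.
country = root.find('country')
for neighbor in country.findall('neighbor'):
    if neighbor.get('direction') == 'N':
        country.remove(neighbor)

result = ET.tostring(root, encoding='unicode')   # serialize the modified tree
print(result)
```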

The code is as follows:

    from xml.etree.ElementTree import ElementTree, Element

    def read_xml(in_path):
        '''Read and parse an xml file
        in_path: path of the xml file
        return: (ElementTree, root element)'''
        tree = ElementTree()
        tree.parse(in_path)
        root = tree.getroot()
        return (tree, root)

    def write_xml(tree, out_path):
        '''Write the xml tree to a file
        tree: xml tree
        out_path: output path'''
        tree.write(out_path, encoding="utf-8", xml_declaration=True)

    def if_match(node, kv_map):
        '''Check whether a node has all the given attributes
        node: node
        kv_map: map of attribute names to values'''
        for key in kv_map:
            #if node.get(key) != kv_map.get(key):
            if node.get(key) != kv_map[key]:
                return False
        return True

    def if_match_text(node, text, mode='eq'):
        '''Compare the numeric text of a node with a value
        node: node
        text: value to compare against
        mode: comparison to apply (eq, gt or lt)'''
        if mode == 'eq':
            express = "{0} == {1}"
        elif mode == 'gt':
            express = "{0} > {1}"
        elif mode == 'lt':
            express = "{0} < {1}"
        else:
            print('the mode is error')
            return False
        flag = eval(express.format(int(node.text), text))
        if flag:
            return True
        return False

    #---------------search -----
    def find_nodes(tree, path):
        '''Find all nodes matching a path
        tree: xml tree
        path: node path'''
        return tree.findall(path)

    def get_node_by_keyvalue(nodelist, kv_map):
        '''Select the nodes that match the given attributes and return them
        nodelist: list of nodes
        kv_map: map of attribute names to values to match'''
        result_nodes = []
        for node in nodelist:
            if if_match(node, kv_map):
                result_nodes.append(node)
        return result_nodes

    #---------------change -----
    def change_node_properties(nodelist, kv_map, is_delete=False):
        '''Modify, add or delete attributes of nodes
        nodelist: list of nodes
        kv_map: map of attribute names to values'''
        for node in nodelist:
            for key in kv_map:
                if is_delete:
                    if key in node.attrib:
                        del node.attrib[key]
                else:
                    node.set(key, kv_map.get(key))

    def change_node_text(nodelist, text, is_add=False, is_delete=False):
        '''Change, add or delete the text of nodes
        nodelist: list of nodes
        text: the new text'''
        for node in nodelist:
            if is_add:
                node.text = text * 3
            elif is_delete:
                node.text = ""
            else:
                node.text = text

    def create_node(tag, property_map, content):
        '''Create a new node
        tag: node tag
        property_map: map of attribute names to values
        content: text inside the node
        return: the new node'''
        element = Element(tag, property_map)
        element.text = content
        return element

    def add_child_node(nodelist, element):
        '''Add a child node to each node
        nodelist: list of nodes
        element: child node'''
        for node in nodelist:
            node.append(element)

    def del_node_by_tagkeyvalue(nodelist, tag, kv_map):
        '''Locate a child node by tag and attributes, and delete it
        nodelist: list of parent nodes
        tag: tag of the child node
        kv_map: map of attribute names to values'''
        for parent_node in nodelist:
            # list(parent_node) replaces getchildren(), which was removed in Python 3.9
            children = list(parent_node)
            for child in children:
                if child.tag == tag and if_match(child, kv_map):
                    parent_node.remove(child)

    def del_node_by_tagtext(nodelist, tag, text, mode='eq', flag=1):
        '''Locate a child node by tag and text value, and delete it
        (or delete its parent from the tree when flag is not 1)
        nodelist: list of parent nodes
        tag: tag of the child node
        text: value to compare the child text against'''
        for parent_node in nodelist:
            children = list(parent_node)
            for child in children:
                if child.tag == tag and if_match_text(child, text, mode):
                    if flag == 1:
                        parent_node.remove(child)
                    else:
                        # relies on the module-level root set in the main block below
                        root.remove(parent_node)

    if __name__ == "__main__":
        #1. Read the xml file
        (tree, root) = read_xml("server.xml")
        print(root)

        #2. Modify attributes
        #A. Find the parent nodes
        nodes = find_nodes(tree, "country/neighbor")
        #B. Select the exact child nodes by attribute
        result_nodes = get_node_by_keyvalue(nodes, {"direction":"E"})
        #C. Modify node attributes
        change_node_properties(result_nodes, {"age": "10","position":'asiaasia'})
        #D. Delete node attributes
        change_node_properties(result_nodes, {"age": "10"}, is_delete=True)

        #3. Modify nodes
        #A. Create a new node
        a = create_node("person", {"age":"15","money":"200000"}, "this is the firest content")
        #B. Insert it under the parent nodes
        add_child_node(result_nodes, a)

        #4. Delete nodes
        # Locate the parent nodes
        del_parent_nodes = find_nodes(tree, "country")
        # Select the child node by attrib and delete it
        target_del_node = del_node_by_tagkeyvalue(del_parent_nodes, "neighbor", {"direction":"N"})
        # Select the child node by text and delete it
        target_del_node = del_node_by_tagtext(del_parent_nodes, "gdppc", 60000, 'gt', 1)
        # Select the country by text and delete it
        target_del_element = del_node_by_tagtext(del_parent_nodes, "rank", 20, 'gt', 0)

        #5. Modify node text
        # Locate the nodes
        text_nodes = get_node_by_keyvalue(find_nodes(tree, "country/neighbor"), {"direction":"E"})
        change_node_text(text_nodes, "east", is_add=True)

        #6. Write the result file
        write_xml(tree, "test.xml")

xml: add, delete, modify, search

Creating an XML document yourself:

    import xml.etree.ElementTree as ET

    new_xml = ET.Element("namelist")

    name = ET.SubElement(new_xml,"name",attrib={"enrolled":"yes"})
    age = ET.SubElement(name,"age",attrib={"checked":"no"})
    sex = ET.SubElement(name,"sex")
    sex.text = '33'

    name2 = ET.SubElement(new_xml,"name",attrib={"enrolled":"no"})
    age = ET.SubElement(name2,"age")
    age.text = '19'

    et = ET.ElementTree(new_xml)  # build the document object
    et.write("test.xml", encoding="utf-8",xml_declaration=True)

    ET.dump(new_xml)  # print the generated structure

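11. getopt module:

| getopt.getopt(args, shortopts, longopts=[]) | Description |
|---|---|
| args | the arguments received by the script, available through sys.argv |
| shortopts | short options |
| longopts | long options |

Example: opts, args = getopt.getopt(sys.argv[1:], "ho:", ["help", "output="])

- argv[0] in sys.argv is the file name of the current script, so matching starts from sys.argv[1:]

- The short option string is "ho:". When an option is only a switch, i.e. takes no argument, write just the option character in the string. When an option takes an argument, write the option character followed by ":". So "ho:" means that "h" is a switch and "o:" must be followed by an argument.

- The long option list is ["help", "output="]. Long options can also be switches, i.e. not followed by "=". A trailing equals sign means the option must be followed by an argument. Here "help" is a switch and "output=" must be followed by an argument

- Call getopt. The function returns two lists: opts and args. opts holds the parsed options. args holds the remaining command-line arguments that are not options, i.e. anything other than the long or short options defined in getopt() and their arguments. opts is a list of two-element tuples, each of the form (option, argument). If an option has no argument, the argument is the empty string ''.

In the example above, the value of opts is:

[('-h', ''), ('-o', 'file'), ('--help', ''), ('--output', 'out')]

and args is ['file1', 'file2'], the remaining command-line arguments that are not options.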
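The notes do not show the command line that produced this output, so the argument string in the sketch below is my reconstruction of one that would. The getopt call itself is the one quoted above.

```python
import getopt

# Assumed command line (not given in the notes), split the way sys.argv[1:] would be.
argv = '-h -o file --help --output=out file1 file2'.split()

opts, args = getopt.getopt(argv, 'ho:', ['help', 'output='])
print(opts)
print(args)
```

Parsing stops at the first non-option argument, so file1 and file2 end up in args.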

The following code can be added to the example above. It checks which options were parsed and processes them further. The usual pattern is:

    for o, a in opts:
        if o in ("-h", "--help"):
            usage()
            sys.exit()
        if o in ("-o", "--output"):
            output = a

The loop takes one two-element tuple from opts at a time and assigns it to two variables: o holds the option and a holds its argument. The option is then processed.

An example:

    import sys
    import getopt

    # the argument list only uses options declared in the getopt call below;
    # an undeclared option such as -a would raise getopt.GetoptError
    arg = '-f foo -o bar --filename=a1 --option a2 file1 file2'.split()
    print(arg)

    opts,args = getopt.getopt(arg,'hf:o:v',['help','filename=','option=','version'])
    print('## opts: ',opts)
    print('## args: ',args)
    for opt_name,opt_value in opts:
        print('## opt_name,opt_value: ', opt_name,opt_value)
        if opt_name in ('-h','--help'):
            print("[*] Help info")
            sys.exit()
        if opt_name in ('-v','--version'):
            print("[*] Version is 0.01 ")
            sys.exit()
        if opt_name in ('-f','--filename'):
            fileName = opt_value
            print("[*] Filename is ",fileName)
        if opt_name in ('-o','--option'):
            Option = opt_value
            print("[*] Option is ",Option)
    sys.exit()
