Deploying a TiDB v8.5.1 Cluster with TiUP

Cluster overview

Components

| Component | Nodes | Instances (minimum required) |
|---|---|---|
| TiDB | 192.168.174.145-147 | 2 |
| PD | 192.168.174.145-147 | 3 |
| TiKV | 192.168.174.145-147 | 3 |
| TiFlash | 192.168.174.145-146 | 2 |
| TiCDC | 192.168.174.145-147 | 3 |
| TiProxy | 192.168.174.145-146 | 2 |
| Monitoring (Prometheus, Grafana, Alertmanager) | 192.168.174.145 | 1 |
| TiUP (control machine) | 192.168.174.144 | - |

Resource requirements

| Component | CPU | Memory | Disk type | Network | Instances (minimum required) |
|---|---|---|---|---|---|
| TiDB | 16+ cores | 48 GB+ | SSD | 10 GbE NIC (2 recommended) | 2 |
| PD | 8+ cores | 16 GB+ | SSD | 10 GbE NIC (2 recommended) | 3 |
| TiKV | 16+ cores | 64 GB+ | SSD | 10 GbE NIC (2 recommended) | 3 |
| TiFlash | 48+ cores | 128 GB+ | 1 or more SSDs | 10 GbE NIC (2 recommended) | 2 |
| TiCDC | 16+ cores | 64 GB+ | SSD | 10 GbE NIC (2 recommended) | 2 |
| Monitoring | 8+ cores | 16 GB+ | SAS | 1 GbE NIC | 1 |
| TiFlash Write Node | 32+ cores | 64 GB+ | SSD, 200 GB+ | 10 GbE NIC (2 recommended) | 2 |
| TiFlash Compute Node | 32+ cores | 64 GB+ | SSD, 100 GB+ | 10 GbE NIC (2 recommended) | 0 |
| TiProxy | 4+ cores | 8 GB | - | - | 2 |

The TiFlash Write Node and Compute Node rows apply only to TiFlash's disaggregated storage and compute architecture; the cluster deployed below uses regular TiFlash instances.

Data disk configuration
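The fstab entry below mounts the data disk by filesystem UUID at /data1 with the `nodelalloc` and `noatime` options. It assumes the disk is already formatted as ext4 and that the UUID (specific to the author's disk) was read with `blkid`. Appending with `>>` adds a duplicate line every time the step is re-run, so here is a minimal sketch, with hypothetical helper names, that builds the same entry and appends it only when /data1 has no entry yet:

```shell
#!/usr/bin/env bash
# Sketch: build and append the /etc/fstab entry for a TiDB data disk.
# Assumptions (not from the original guide): the disk is already formatted as
# ext4, e.g. with `mkfs.ext4 /dev/sdb`, and its UUID comes from
# `blkid -s UUID -o value /dev/sdb`. Function names are hypothetical.

# Print the fstab line for a filesystem UUID and mount point,
# using the mount options from this guide.
fstab_entry() {
  local uuid="$1" mount_point="$2"
  printf 'UUID=%s %s ext4 defaults,nodelalloc,noatime 0 2\n' "$uuid" "$mount_point"
}

# Append the entry only if no uncommented line already mounts on $mount_point.
add_fstab_entry() {
  local uuid="$1" mount_point="$2" fstab="${3:-/etc/fstab}"
  if awk -v mp="$mount_point" '$1 !~ /^#/ && $2 == mp { found = 1 } END { exit !found }' "$fstab"; then
    echo "fstab already has an entry for $mount_point" >&2
    return 0
  fi
  fstab_entry "$uuid" "$mount_point" >> "$fstab"
}

# Example (as root on each TiKV/TiFlash host; /dev/sdb is a placeholder):
#   uuid=$(blkid -s UUID -o value /dev/sdb)
#   mkdir -p /data1
#   add_fstab_entry "$uuid" /data1
#   mount -a && mount -t ext4   # nodelalloc should appear in the /data1 options
```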

```shell
echo "UUID=c51eb23b-195c-4061-92a9-3fad812cc12f /data1 ext4 defaults,nodelalloc,noatime 0 2" >> /etc/fstab
```

Disable system swap
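The commands below persist `vm.swappiness = 0`, load it with `sysctl -p`, and run `swapoff -a && swapon -a`, which moves swapped-out pages back into memory and then re-enables swap. Swap therefore still exists afterwards; with swappiness 0 the kernel should avoid using it except under heavy memory pressure. To check the effect before and after, a small sketch (hypothetical helper names) reads the standard `SwapTotal` and `SwapFree` fields of /proc/meminfo:

```shell
#!/usr/bin/env bash
# Sketch: report swap in use from a meminfo-format file (default /proc/meminfo).
# SwapTotal and SwapFree are standard /proc/meminfo fields, in kB.
# Function names are hypothetical.
swap_used_kb() {
  local meminfo="${1:-/proc/meminfo}"
  awk '/^SwapTotal:/ { total = $2 } /^SwapFree:/ { free = $2 } END { print total - free }' "$meminfo"
}

# Print the current vm.swappiness value (expected to be 0 after this step).
swappiness() {
  cat "${1:-/proc/sys/vm/swappiness}"
}

# Example:
#   echo "swap in use: $(swap_used_kb) kB, vm.swappiness=$(swappiness)"
```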

```shell
echo "vm.swappiness = 0" >> /etc/sysctl.conf
sysctl -p
swapoff -a && swapon -a
```

Configure system optimization parameters
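The `[disk]` section of the tuned.conf below selects the data disks by their udev `ID_SERIAL` values, which differ on every machine. One way to collect them is sketched here, assuming `udevadm` is available and the data disks are /dev/sdb and /dev/sdc (placeholders); helper names are hypothetical. On kernels that use the multi-queue block layer, the `noop` elevator is typically named `none`, and `/sys/block/<dev>/queue/scheduler` lists the names a disk accepts.

```shell
#!/usr/bin/env bash
# Sketch: build the devices_udev_regex line for tuned.conf from disk serials.
# Assumption: `udevadm info --name=<dev>` prints a line like
# "E: ID_SERIAL=36d09...". Function and device names are hypothetical.

# Print the ID_SERIAL value found in `udevadm info` output on stdin
# (ID_SERIAL_SHORT lines do not match).
id_serial() {
  sed -n 's/^E: ID_SERIAL=//p'
}

# Join serials into "devices_udev_regex=(ID_SERIAL=a)|(ID_SERIAL=b)".
udev_regex() {
  local out="" s
  for s in "$@"; do
    out="${out:+$out|}(ID_SERIAL=$s)"
  done
  printf 'devices_udev_regex=%s\n' "$out"
}

# Example (on each host):
#   s1=$(udevadm info --name=/dev/sdb | id_serial)
#   s2=$(udevadm info --name=/dev/sdc | id_serial)
#   udev_regex "$s1" "$s2"
#   cat /sys/block/sdb/queue/scheduler   # schedulers this disk offers
```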

```shell
mkdir /etc/tuned/balanced-tidb-optimal/
vi /etc/tuned/balanced-tidb-optimal/tuned.conf
```

Contents of tuned.conf (the ID_SERIAL values identify the author's data disks; replace them with your own):

```ini
[main]
include=balanced

[cpu]
governor=performance

[vm]
transparent_hugepages=never

[disk]
devices_udev_regex=(ID_SERIAL=36d0946606d79f90025f3e09a0c1fc035)|(ID_SERIAL=36d0946606d79f90025f3e09a0c1f9e81)
elevator=noop
```

Apply the profile:

```shell
tuned-adm profile balanced-tidb-optimal
```

Modify sysctl parameters
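Each `echo ... >> /etc/sysctl.conf` below adds another line on every run, and repeated keys are easy to miss. Here is a minimal sketch of an idempotent alternative, using a hypothetical `set_sysctl` helper that rewrites the file so each key appears once:

```shell
#!/usr/bin/env bash
# Sketch: set "key = value" in a sysctl config file without duplicating keys.
# The function name is hypothetical; comments and other keys are preserved.
set_sysctl() {
  local key="$1" value="$2" file="${3:-/etc/sysctl.conf}"
  local tmp
  [ -e "$file" ] || : > "$file" || return 1
  tmp=$(mktemp) || return 1
  # Keep every line except an existing uncommented assignment of $key,
  # then append the new "key = value" line.
  awk -v k="$key" '{
    line = $0
    sub(/^[ \t]+/, "", line)
    split(line, kv, "=")
    name = kv[1]
    sub(/[ \t]+$/, "", name)
    if (name != k) print $0
  }' "$file" > "$tmp" &&
    printf '%s = %s\n' "$key" "$value" >> "$tmp" &&
    cat "$tmp" > "$file"   # cat keeps the original file's owner and mode
  local rc=$?
  rm -f "$tmp"
  return "$rc"
}

# Example (as root), then load the file:
#   set_sysctl fs.file-max 1000000
#   set_sysctl net.core.somaxconn 32768
#   set_sysctl net.ipv4.tcp_syncookies 0
#   set_sysctl vm.overcommit_memory 1
#   set_sysctl vm.min_free_kbytes 1048576
#   sysctl -p
```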

```shell
echo "fs.file-max = 1000000" >> /etc/sysctl.conf
echo "net.core.somaxconn = 32768" >> /etc/sysctl.conf
echo "net.ipv4.tcp_syncookies = 0" >> /etc/sysctl.conf
echo "vm.overcommit_memory = 1" >> /etc/sysctl.conf
echo "vm.min_free_kbytes = 1048576" >> /etc/sysctl.conf
sysctl -p
```

Configure limits.conf
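The heredoc below appends all six lines unconditionally. If this step may be repeated, a sketch like the following (hypothetical `ensure_lines` helper) appends only the lines that are not already present verbatim; entries written with different spacing are not detected.

```shell
#!/usr/bin/env bash
# Sketch: append each line to a file only if an identical line is missing.
# The function name is hypothetical; the entries are the ones from this guide.
ensure_lines() {
  local file="$1" line
  shift
  touch "$file" || return 1
  for line in "$@"; do
    grep -qxF -- "$line" "$file" || printf '%s\n' "$line" >> "$file"
  done
}

tidb_limits=(
  "tidb soft nofile 1000000"
  "tidb hard nofile 1000000"
  "tidb soft stack 32768"
  "tidb hard stack 32768"
  "tidb soft core unlimited"
  "tidb hard core unlimited"
)

# Example (as root):
#   ensure_lines /etc/security/limits.conf "${tidb_limits[@]}"
#   su - tidb -c 'ulimit -n'   # a new login session should report 1000000
```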

```shell
cat << EOF >> /etc/security/limits.conf
tidb soft nofile 1000000
tidb hard nofile 1000000
tidb soft stack 32768
tidb hard stack 32768
tidb soft core unlimited
tidb hard core unlimited
EOF
```

Set up SSH mutual trust and passwordless sudo
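The command for this step only creates the `tidb` user; the passwordless sudo rule and the SSH key distribution named in the heading are not shown. Here is a sketch of both, following the common pattern of a `tidb ALL=(ALL) NOPASSWD: ALL` sudo rule plus `ssh-copy-id`. The drop-in path and helper names are assumptions. Because the deployment later runs with `--user root`, TiUP generates its own SSH key for the cluster (the `Generate SSH keys` line in the deploy log), so these manual steps mainly matter when deploying as a non-root user.

```shell
#!/usr/bin/env bash
# Sketch of the sudo and SSH-trust parts of this step (not in the original).
# Assumptions: the sudo rule goes into a drop-in under /etc/sudoers.d, and keys
# are pushed from the TiUP host as tidb. Function names are hypothetical.

# Write a passwordless-sudo rule for $user to $dest (validate before installing).
write_sudoers_rule() {
  local user="$1" dest="$2"
  printf '%s ALL=(ALL) NOPASSWD: ALL\n' "$user" > "$dest" && chmod 0440 "$dest"
}

# Create a key pair if needed, then copy the public key to each target host.
distribute_key() {
  local user="$1" host
  shift
  if [ ! -f ~/.ssh/id_rsa.pub ]; then
    mkdir -p ~/.ssh && chmod 700 ~/.ssh
    ssh-keygen -t rsa -N '' -f ~/.ssh/id_rsa || return 1
  fi
  for host in "$@"; do
    ssh-copy-id -i ~/.ssh/id_rsa.pub "$user@$host" || return 1
  done
}

# Example:
#   On each target host, as root:
#     write_sudoers_rule tidb /tmp/tidb.sudoers &&
#       visudo -cf /tmp/tidb.sudoers &&
#       install -m 0440 -o root -g root /tmp/tidb.sudoers /etc/sudoers.d/tidb
#   On 192.168.174.144, as tidb:
#     distribute_key tidb 192.168.174.145 192.168.174.146 192.168.174.147
```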

Create the `tidb` user on each target host:

```shell
useradd tidb && passwd tidb
```

Download the offline packages

On the TiUP control machine (192.168.174.144):

```shell
wget https://download.pingcap.org/tidb-community-server-v8.5.1-linux-amd64.tar.gz
wget https://download.pingcap.org/tidb-community-toolkit-v8.5.1-linux-amd64.tar.gz
```

Deploy the TiUP component
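Before extracting, it may be worth checking that the downloads are intact. Here is a minimal sketch with a hypothetical `verify_sha256` helper, assuming you obtain the expected SHA-256 digests from PingCAP's download page (this guide does not show them):

```shell
#!/usr/bin/env bash
# Sketch: compare a file's SHA-256 with an expected digest before extracting.
# The function name is hypothetical; the expected digest must come from the
# publisher, it is not included in this guide.
verify_sha256() {
  local file="$1" expected="$2" actual
  actual=$(sha256sum "$file" | awk '{ print $1 }')
  if [ "$actual" = "$expected" ]; then
    echo "OK: $file"
  else
    echo "MISMATCH: $file (got $actual)" >&2
    return 1
  fi
}

# Example (the digest variable is a placeholder):
#   verify_sha256 tidb-community-server-v8.5.1-linux-amd64.tar.gz "$SERVER_SHA256"
```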

Extract the server package

```shell
tar xf tidb-community-server-v8.5.1-linux-amd64.tar.gz
```

Install TiUP

```shell
sh tidb-community-server-v8.5.1-linux-amd64/local_install.sh
```

```
Disable telemetry success
Successfully set mirror to /root/tidb/tidb-community-server-v8.5.1-linux-amd64
Detected shell: bash
Shell profile: /root/.bash_profile
/root/.bash_profile has been modified to to add tiup to PATH
open a new terminal or source /root/.bash_profile to use it
Installed path: /root/.tiup/bin/tiup
===============================================
1. source /root/.bash_profile
2. Have a try: tiup playground
===============================================
```

Reload bash_profile

```shell
source /root/.bash_profile
```

Merge the offline packages

```shell
tar xf tidb-community-toolkit-v8.5.1-linux-amd64.tar.gz
ls -ld tidb-community-server-v8.5.1-linux-amd64 tidb-community-toolkit-v8.5.1-linux-amd64
cd tidb-community-server-v8.5.1-linux-amd64/
cp -rp keys ~/.tiup/
tiup mirror merge ../tidb-community-toolkit-v8.5.1-linux-amd64
```

Generate a topology template, then edit topology.yaml to match the cluster layout:

```shell
tiup cluster template > topology.yaml
```

```
A new version of cluster is available: -> v1.16.1
To update this component: tiup update cluster
To update all components: tiup update --all
The component `cluster` version is not installed; downloading from repository.
```
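`tiup cluster template` prints a generic template that has to be edited for this cluster. Here is a topology.yaml that could produce the layout confirmed in the deploy step below. It is reconstructed from that log, not the author's actual file, and uses TiUP's default ports and directories except for TiProxy, whose deploy directory the log shows as /tiproxy-deploy. Writing it through a heredoc keeps the example a single shell step.

```shell
#!/usr/bin/env bash
# Sketch: write a topology.yaml for the layout in this guide. Reconstructed from
# the deploy log's topology confirmation, not copied from the author's file.
# TOPOLOGY_FILE is a hypothetical override for the output path.
topology_file="${TOPOLOGY_FILE:-topology.yaml}"

cat > "$topology_file" <<'EOF'
# Defaults shared by all instances (TiUP's default user and directories).
global:
  user: "tidb"
  ssh_port: 22
  deploy_dir: "/tidb-deploy"
  data_dir: "/tidb-data"
  arch: "amd64"

pd_servers:
  - host: 192.168.174.145
  - host: 192.168.174.146
  - host: 192.168.174.147

tidb_servers:
  - host: 192.168.174.145
  - host: 192.168.174.146
  - host: 192.168.174.147

tikv_servers:
  - host: 192.168.174.145
  - host: 192.168.174.146
  - host: 192.168.174.147

tiflash_servers:
  - host: 192.168.174.145
  - host: 192.168.174.146

cdc_servers:
  - host: 192.168.174.145
  - host: 192.168.174.146
  - host: 192.168.174.147

# The deploy log shows TiProxy under /tiproxy-deploy rather than /tidb-deploy.
tiproxy_servers:
  - host: 192.168.174.145
    deploy_dir: "/tiproxy-deploy"
  - host: 192.168.174.146
    deploy_dir: "/tiproxy-deploy"

monitoring_servers:
  - host: 192.168.174.145

grafana_servers:
  - host: 192.168.174.145

alertmanager_servers:
  - host: 192.168.174.145
EOF
echo "wrote $topology_file"
```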

Deploy the TiDB cluster

Check the cluster for potential risks:

```shell
tiup cluster check ./topology.yaml --user root
```

Automatically fix the potential risks:

```shell
tiup cluster check ./topology.yaml --apply --user root
```

Run the deployment

```shell
tiup cluster deploy tidb-test v8.5.1 ./topology.yaml --user root
```

```
+ Detect CPU Arch Name
- Detecting node 192.168.174.145 Arch info ... Done
- Detecting node 192.168.174.146 Arch info ... Done
- Detecting node 192.168.174.147 Arch info ... Done
+ Detect CPU OS Name
- Detecting node 192.168.174.145 OS info ... Done
- Detecting node 192.168.174.146 OS info ... Done
- Detecting node 192.168.174.147 OS info ... Done
Please confirm your topology:
Cluster type: tidb
Cluster name: tidb-test
Cluster version: v8.5.1
Role Host Ports OS/Arch Directories
---- ---- ----- ------- -----------
pd 192.168.174.145 2379/2380 linux/x86_64 /tidb-deploy/pd-2379,/tidb-data/pd-2379
pd 192.168.174.146 2379/2380 linux/x86_64 /tidb-deploy/pd-2379,/tidb-data/pd-2379
pd 192.168.174.147 2379/2380 linux/x86_64 /tidb-deploy/pd-2379,/tidb-data/pd-2379
tiproxy 192.168.174.145 6000/3080 linux/x86_64 /tiproxy-deploy
tiproxy 192.168.174.146 6000/3080 linux/x86_64 /tiproxy-deploy
tikv 192.168.174.145 20160/20180 linux/x86_64 /tidb-deploy/tikv-20160,/tidb-data/tikv-20160
tikv 192.168.174.146 20160/20180 linux/x86_64 /tidb-deploy/tikv-20160,/tidb-data/tikv-20160
tikv 192.168.174.147 20160/20180 linux/x86_64 /tidb-deploy/tikv-20160,/tidb-data/tikv-20160
tidb 192.168.174.145 4000/10080 linux/x86_64 /tidb-deploy/tidb-4000
tidb 192.168.174.146 4000/10080 linux/x86_64 /tidb-deploy/tidb-4000
tidb 192.168.174.147 4000/10080 linux/x86_64 /tidb-deploy/tidb-4000
tiflash 192.168.174.145 9000/3930/20170/20292/8234/8123 linux/x86_64 /tidb-deploy/tiflash-9000,/tidb-data/tiflash-9000
tiflash 192.168.174.146 9000/3930/20170/20292/8234/8123 linux/x86_64 /tidb-deploy/tiflash-9000,/tidb-data/tiflash-9000
cdc 192.168.174.145 8300 linux/x86_64 /tidb-deploy/cdc-8300,/tidb-data/cdc-8300
cdc 192.168.174.146 8300 linux/x86_64 /tidb-deploy/cdc-8300,/tidb-data/cdc-8300
cdc 192.168.174.147 8300 linux/x86_64 /tidb-deploy/cdc-8300,/tidb-data/cdc-8300
prometheus 192.168.174.145 9090/12020 linux/x86_64 /tidb-deploy/prometheus-9090,/tidb-data/prometheus-9090
grafana 192.168.174.145 3000 linux/x86_64 /tidb-deploy/grafana-3000
alertmanager 192.168.174.145 9093/9094 linux/x86_64 /tidb-deploy/alertmanager-9093,/tidb-data/alertmanager-9093
Attention:
1. If the topology is not what you expected, check your yaml file.
2. Please confirm there is no port/directory conflicts in same host.
Do you want to continue? [y/N]: (default=N) y
+ Generate SSH keys ... Done
+ Download TiDB components
- Download pd:v8.5.1 (linux/amd64) ... Done
- Download tiproxy:v1.3.0 (linux/amd64) ... Done
- Download tikv:v8.5.1 (linux/amd64) ... Done
- Download tidb:v8.5.1 (linux/amd64) ... Done
- Download tiflash:v8.5.1 (linux/amd64) ... Done
- Download cdc:v8.5.1 (linux/amd64) ... Done
- Download prometheus:v8.5.1 (linux/amd64) ... Done
- Download grafana:v8.5.1 (linux/amd64) ... Done
- Download alertmanager: (linux/amd64) ... Done
- Download node_exporter: (linux/amd64) ... Done
- Download blackbox_exporter: (linux/amd64) ... Done
+ Initialize target host environments
- Prepare 192.168.174.145:22 ... Done
- Prepare 192.168.174.146:22 ... Done
- Prepare 192.168.174.147:22 ... Done
+ Deploy TiDB instance
- Copy pd -> 192.168.174.145 ... Done
- Copy pd -> 192.168.174.146 ... Done
- Copy pd -> 192.168.174.147 ... Done
- Copy tiproxy -> 192.168.174.145 ... Done
- Copy tiproxy -> 192.168.174.146 ... Done
- Copy tikv -> 192.168.174.145 ... Done
- Copy tikv -> 192.168.174.146 ... Done
- Copy tikv -> 192.168.174.147 ... Done
- Copy tidb -> 192.168.174.145 ... Done
- Copy tidb -> 192.168.174.146 ... Done
- Copy tidb -> 192.168.174.147 ... Done
- Copy tiflash -> 192.168.174.145 ... Done
- Copy tiflash -> 192.168.174.146 ... Done
- Copy cdc -> 192.168.174.145 ... Done
- Copy cdc -> 192.168.174.146 ... Done
- Copy cdc -> 192.168.174.147 ... Done
- Copy prometheus -> 192.168.174.145 ... Done
- Copy grafana -> 192.168.174.145 ... Done
- Copy alertmanager -> 192.168.174.145 ... Done
- Deploy node_exporter -> 192.168.174.145 ... Done
- Deploy node_exporter -> 192.168.174.146 ... Done
- Deploy node_exporter -> 192.168.174.147 ... Done
- Deploy blackbox_exporter -> 192.168.174.145 ... Done
- Deploy blackbox_exporter -> 192.168.174.146 ... Done
- Deploy blackbox_exporter -> 192.168.174.147 ... Done
+ Copy certificate to remote host
- Copy session certificate tidb -> 192.168.174.145:4000 ... Done
- Copy session certificate tidb -> 192.168.174.146:4000 ... Done
- Copy session certificate tidb -> 192.168.174.147:4000 ... Done
+ Init instance configs
- Generate config pd -> 192.168.174.145:2379 ... Done
- Generate config pd -> 192.168.174.146:2379 ... Done
- Generate config pd -> 192.168.174.147:2379 ... Done
- Generate config tiproxy -> 192.168.174.145:6000 ... Done
- Generate config tiproxy -> 192.168.174.146:6000 ... Done
- Generate config tikv -> 192.168.174.145:20160 ... Done
- Generate config tikv -> 192.168.174.146:20160 ... Done
- Generate config tikv -> 192.168.174.147:20160 ... Done
- Generate config tidb -> 192.168.174.145:4000 ... Done
- Generate config tidb -> 192.168.174.146:4000 ... Done
- Generate config tidb -> 192.168.174.147:4000 ... Done
- Generate config tiflash -> 192.168.174.145:9000 ... Done
- Generate config tiflash -> 192.168.174.146:9000 ... Done
- Generate config cdc -> 192.168.174.145:8300 ... Done
- Generate config cdc -> 192.168.174.146:8300 ... Done
- Generate config cdc -> 192.168.174.147:8300 ... Done
- Generate config prometheus -> 192.168.174.145:9090 ... Done
- Generate config grafana -> 192.168.174.145:3000 ... Done
- Generate config alertmanager -> 192.168.174.145:9093 ... Done
+ Init monitor configs
- Generate config node_exporter -> 192.168.174.145 ... Done
- Generate config node_exporter -> 192.168.174.146 ... Done
- Generate config node_exporter -> 192.168.174.147 ... Done
- Generate config blackbox_exporter -> 192.168.174.145 ... Done
- Generate config blackbox_exporter -> 192.168.174.146 ... Done
- Generate config blackbox_exporter -> 192.168.174.147 ... Done
Enabling component pd
Enabling instance 192.168.174.147:2379
Enabling instance 192.168.174.145:2379
Enabling instance 192.168.174.146:2379
Enable instance 192.168.174.145:2379 success
Enable instance 192.168.174.147:2379 success
Enable instance 192.168.174.146:2379 success
Enabling component tiproxy
Enabling instance 192.168.174.146:6000
Enabling instance 192.168.174.145:6000
Enable instance 192.168.174.145:6000 success
Enable instance 192.168.174.146:6000 success
Enabling component tikv
Enabling instance 192.168.174.147:20160
Enabling instance 192.168.174.145:20160
Enabling instance 192.168.174.146:20160
Enable instance 192.168.174.145:20160 success
Enable instance 192.168.174.147:20160 success
Enable instance 192.168.174.146:20160 success
Enabling component tidb
Enabling instance 192.168.174.147:4000
Enabling instance 192.168.174.145:4000
Enabling instance 192.168.174.146:4000
Enable instance 192.168.174.145:4000 success
Enable instance 192.168.174.146:4000 success
Enable instance 192.168.174.147:4000 success
Enabling component tiflash
Enabling instance 192.168.174.146:9000
Enabling instance 192.168.174.145:9000
Enable instance 192.168.174.145:9000 success
Enable instance 192.168.174.146:9000 success
Enabling component cdc
Enabling instance 192.168.174.147:8300
Enabling instance 192.168.174.146:8300
Enabling instance 192.168.174.145:8300
Enable instance 192.168.174.145:8300 success
Enable instance 192.168.174.147:8300 success
Enable instance 192.168.174.146:8300 success
Enabling component prometheus
Enabling instance 192.168.174.145:9090
Enable instance 192.168.174.145:9090 success
Enabling component grafana
Enabling instance 192.168.174.145:3000
Enable instance 192.168.174.145:3000 success
Enabling component alertmanager
Enabling instance 192.168.174.145:9093
Enable instance 192.168.174.145:9093 success
Enabling component node_exporter
Enabling instance 192.168.174.147
Enabling instance 192.168.174.145
Enabling instance 192.168.174.146
Enable 192.168.174.145 success
Enable 192.168.174.146 success
Enable 192.168.174.147 success
Enabling component blackbox_exporter
Enabling instance 192.168.174.147
Enabling instance 192.168.174.145
Enabling instance 192.168.174.146
Enable 192.168.174.145 success
Enable 192.168.174.146 success
Enable 192.168.174.147 success
Cluster `tidb-test` deployed successfully, you can start it with command: `tiup cluster start tidb-test --init`
```

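`tidb-test` is the name of the deployed cluster.

`v8.5.1` is the version of the deployed cluster. Run `tiup list tidb` to see the latest versions that TiUP supports.

`topology.yaml` is the initial configuration file.

`--user root` means TiUP logs in to the target hosts as root to perform the deployment. This user needs SSH access to the target machines and sudo privileges on them. Any other user with SSH and sudo privileges can be used instead.

`-i` and `-p` are optional and can be omitted if passwordless login to the target machines is already configured. Otherwise, use one of them: `-i` specifies a private key that can log in to the target machines as root (or as the user given by `--user`), and `-p` prompts interactively for that user's password.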
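A small sketch that assembles the deploy command for each of the login options described above. `DRY_RUN` and the function name are hypothetical conveniences for printing the command instead of running it; `-i` and `-p` are the options the text refers to.

```shell
#!/usr/bin/env bash
# Sketch: build the deploy command for one login method and run or print it.
# DRY_RUN and deploy_cmd are hypothetical; -i/-p are the documented options.
deploy_cmd() {
  local auth="$1" key="${2:-}"
  local cmd=(tiup cluster deploy tidb-test v8.5.1 ./topology.yaml --user root)
  case "$auth" in
    key)      cmd+=(-i "$key") ;;   # log in with a private key
    password) cmd+=(-p) ;;          # prompt for the SSH password
    trusted)  ;;                    # passwordless SSH already configured
    *) echo "usage: deploy_cmd key <file> | password | trusted" >&2; return 2 ;;
  esac
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}

# Examples:
#   DRY_RUN=1 deploy_cmd key /root/.ssh/id_rsa   # print the command only
#   deploy_cmd password                          # run it, prompting for the password
```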

List the clusters managed by TiUP

```shell
tiup cluster list
```

```
Name User Version Path PrivateKey
---- ---- ------- ---- ----------
tidb-test tidb v8.5.1 /root/.tiup/storage/cluster/clusters/tidb-test /root/.tiup/storage/cluster/clusters/tidb-test/ssh/id_rsa
```

Check the deployed TiDB cluster

```shell
tiup cluster display tidb-test
```

```
Cluster type: tidb
Cluster name: tidb-test
Cluster version: v8.5.1
Deploy user: tidb
SSH type: builtin
Grafana URL: http://192.168.174.145:3000
ID Role Host Ports OS/Arch Status Data Dir Deploy Dir
-- ---- ---- ----- ------- ------ -------- ----------
192.168.174.145:9093 alertmanager 192.168.174.145 9093/9094 linux/x86_64 Down /tidb-data/alertmanager-9093 /tidb-deploy/alertmanager-9093
192.168.174.145:8300 cdc 192.168.174.145 8300 linux/x86_64 Down /tidb-data/cdc-8300 /tidb-deploy/cdc-8300
192.168.174.146:8300 cdc 192.168.174.146 8300 linux/x86_64 Down /tidb-data/cdc-8300 /tidb-deploy/cdc-8300
192.168.174.147:8300 cdc 192.168.174.147 8300 linux/x86_64 Down /tidb-data/cdc-8300 /tidb-deploy/cdc-8300
192.168.174.145:3000 grafana 192.168.174.145 3000 linux/x86_64 Down - /tidb-deploy/grafana-3000
192.168.174.145:2379 pd 192.168.174.145 2379/2380 linux/x86_64 Down /tidb-data/pd-2379 /tidb-deploy/pd-2379
192.168.174.146:2379 pd 192.168.174.146 2379/2380 linux/x86_64 Down /tidb-data/pd-2379 /tidb-deploy/pd-2379
192.168.174.147:2379 pd 192.168.174.147 2379/2380 linux/x86_64 Down /tidb-data/pd-2379 /tidb-deploy/pd-2379
192.168.174.145:9090 prometheus 192.168.174.145 9090/12020 linux/x86_64 Down /tidb-data/prometheus-9090 /tidb-deploy/prometheus-9090
192.168.174.145:4000 tidb 192.168.174.145 4000/10080 linux/x86_64 Down - /tidb-deploy/tidb-4000
192.168.174.146:4000 tidb 192.168.174.146 4000/10080 linux/x86_64 Down - /tidb-deploy/tidb-4000
192.168.174.147:4000 tidb 192.168.174.147 4000/10080 linux/x86_64 Down - /tidb-deploy/tidb-4000
192.168.174.145:9000 tiflash 192.168.174.145 9000/3930/20170/20292/8234/8123 linux/x86_64 N/A /tidb-data/tiflash-9000 /tidb-deploy/tiflash-9000
192.168.174.146:9000 tiflash 192.168.174.146 9000/3930/20170/20292/8234/8123 linux/x86_64 N/A /tidb-data/tiflash-9000 /tidb-deploy/tiflash-9000
192.168.174.145:20160 tikv 192.168.174.145 20160/20180 linux/x86_64 N/A /tidb-data/tikv-20160 /tidb-deploy/tikv-20160
192.168.174.146:20160 tikv 192.168.174.146 20160/20180 linux/x86_64 N/A /tidb-data/tikv-20160 /tidb-deploy/tikv-20160
192.168.174.147:20160 tikv 192.168.174.147 20160/20180 linux/x86_64 N/A /tidb-data/tikv-20160 /tidb-deploy/tikv-20160
192.168.174.145:6000 tiproxy 192.168.174.145 6000/3080 linux/x86_64 Down - /tiproxy-deploy
192.168.174.146:6000 tiproxy 192.168.174.146 6000/3080 linux/x86_64 Down - /tiproxy-deploy
Total nodes: 19
```

The instances report Down (TiKV and TiFlash show N/A) because the cluster has not been started yet.

Start the cluster

With `--init`, TiUP also generates a random password for the database root user and prints it once at the end of the output:

```shell
tiup cluster start tidb-test --init
```

```
Starting cluster tidb-test...
+ [ Serial ] - SSHKeySet: privateKey=/root/.tiup/storage/cluster/clusters/tidb-test/ssh/id_rsa, publicKey=/root/.tiup/storage/cluster/clusters/tidb-test/ssh/id_rsa.pub
+ [Parallel] - UserSSH: user=tidb, host=192.168.174.146
+ [Parallel] - UserSSH: user=tidb, host=192.168.174.147
+ [Parallel] - UserSSH: user=tidb, host=192.168.174.145
+ [Parallel] - UserSSH: user=tidb, host=192.168.174.147
+ [Parallel] - UserSSH: user=tidb, host=192.168.174.146
+ [Parallel] - UserSSH: user=tidb, host=192.168.174.145
+ [Parallel] - UserSSH: user=tidb, host=192.168.174.147
+ [Parallel] - UserSSH: user=tidb, host=192.168.174.145
+ [Parallel] - UserSSH: user=tidb, host=192.168.174.146
+ [Parallel] - UserSSH: user=tidb, host=192.168.174.145
+ [Parallel] - UserSSH: user=tidb, host=192.168.174.146
+ [Parallel] - UserSSH: user=tidb, host=192.168.174.147
+ [Parallel] - UserSSH: user=tidb, host=192.168.174.145
+ [Parallel] - UserSSH: user=tidb, host=192.168.174.145
+ [Parallel] - UserSSH: user=tidb, host=192.168.174.145
+ [Parallel] - UserSSH: user=tidb, host=192.168.174.146
+ [Parallel] - UserSSH: user=tidb, host=192.168.174.145
+ [Parallel] - UserSSH: user=tidb, host=192.168.174.146
+ [Parallel] - UserSSH: user=tidb, host=192.168.174.145
+ [ Serial ] - StartCluster
Starting component pd
Starting instance 192.168.174.147:2379
Starting instance 192.168.174.145:2379
Starting instance 192.168.174.146:2379
Start instance 192.168.174.145:2379 success
Start instance 192.168.174.147:2379 success
Start instance 192.168.174.146:2379 success
Starting component tiproxy
Starting instance 192.168.174.146:6000
Starting instance 192.168.174.145:6000
Start instance 192.168.174.145:6000 success
Start instance 192.168.174.146:6000 success
Starting component tikv
Starting instance 192.168.174.147:20160
Starting instance 192.168.174.145:20160
Starting instance 192.168.174.146:20160
Start instance 192.168.174.145:20160 success
Start instance 192.168.174.146:20160 success
Start instance 192.168.174.147:20160 success
Starting component tidb
Starting instance 192.168.174.147:4000
Starting instance 192.168.174.145:4000
Starting instance 192.168.174.146:4000
Start instance 192.168.174.145:4000 success
Start instance 192.168.174.146:4000 success
Start instance 192.168.174.147:4000 success
Starting component tiflash
Starting instance 192.168.174.145:9000
Starting instance 192.168.174.146:9000
Start instance 192.168.174.145:9000 success
Start instance 192.168.174.146:9000 success
Starting component cdc
Starting instance 192.168.174.147:8300
Starting instance 192.168.174.146:8300
Starting instance 192.168.174.145:8300
Start instance 192.168.174.145:8300 success
Start instance 192.168.174.147:8300 success
Start instance 192.168.174.146:8300 success
Starting component prometheus
Starting instance 192.168.174.145:9090
Start instance 192.168.174.145:9090 success
Starting component grafana
Starting instance 192.168.174.145:3000
Start instance 192.168.174.145:3000 success
Starting component alertmanager
Starting instance 192.168.174.145:9093
Start instance 192.168.174.145:9093 success
Starting component node_exporter
Starting instance 192.168.174.146
Starting instance 192.168.174.147
Starting instance 192.168.174.145
Start 192.168.174.145 success
Start 192.168.174.147 success
Start 192.168.174.146 success
Starting component blackbox_exporter
Starting instance 192.168.174.146
Starting instance 192.168.174.147
Starting instance 192.168.174.145
Start 192.168.174.145 success
Start 192.168.174.146 success
Start 192.168.174.147 success
+ [ Serial ] - UpdateTopology: cluster=tidb-test
Started cluster `tidb-test` successfully
The root password of TiDB database has been changed.
The new password is: 'Y3+V^*6@v0z5RJ4f7Z'.
Copy and record it to somewhere safe, it is only displayed once, and will not be stored.
The generated password can NOT be get and shown again.
```
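With the generated password, clients can connect through TiProxy (port 6000) or directly to a TiDB server (port 4000), as the deploy log shows. Here is a sketch that assumes a `mysql` client is installed where it runs; `-p` prompts for the password so it stays out of the shell history. `tidb_connect` and `DRY_RUN` are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: open a MySQL-protocol session to the new cluster as root.
# Assumptions: a mysql client is installed; -p prompts for the password printed
# by `tiup cluster start --init`. tidb_connect and DRY_RUN are hypothetical.
tidb_connect() {
  local host="${1:-192.168.174.145}" port="${2:-6000}" sql="${3:-}"
  local cmd=(mysql -h "$host" -P "$port" -u root -p)
  if [ -n "$sql" ]; then
    cmd+=(-e "$sql")   # run one statement and exit
  fi
  if [ -n "${DRY_RUN:-}" ]; then
    printf '%s\n' "${cmd[*]}"
  else
    "${cmd[@]}"
  fi
}

# Examples:
#   tidb_connect                                   # via TiProxy on 192.168.174.145:6000
#   tidb_connect 192.168.174.146 4000              # directly to one TiDB server
#   tidb_connect 192.168.174.145 6000 'SELECT tidb_version();'
```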

Verify the cluster status

```shell
tiup cluster display tidb-test
```

```
Cluster type: tidb
Cluster name: tidb-test
Cluster version: v8.5.1
Deploy user: tidb
SSH type: builtin
Dashboard URL: http://192.168.174.147:2379/dashboard
Grafana URL: http://192.168.174.145:3000
ID Role Host Ports OS/Arch Status Data Dir Deploy Dir
-- ---- ---- ----- ------- ------ -------- ----------
192.168.174.145:9093 alertmanager 192.168.174.145 9093/9094 linux/x86_64 Up /tidb-data/alertmanager-9093 /tidb-deploy/alertmanager-9093
192.168.174.145:8300 cdc 192.168.174.145 8300 linux/x86_64 Up /tidb-data/cdc-8300 /tidb-deploy/cdc-8300
192.168.174.146:8300 cdc 192.168.174.146 8300 linux/x86_64 Up /tidb-data/cdc-8300 /tidb-deploy/cdc-8300
192.168.174.147:8300 cdc 192.168.174.147 8300 linux/x86_64 Up /tidb-data/cdc-8300 /tidb-deploy/cdc-8300
192.168.174.145:3000 grafana 192.168.174.145 3000 linux/x86_64 Up - /tidb-deploy/grafana-3000
192.168.174.145:2379 pd 192.168.174.145 2379/2380 linux/x86_64 Up|L /tidb-data/pd-2379 /tidb-deploy/pd-2379
192.168.174.146:2379 pd 192.168.174.146 2379/2380 linux/x86_64 Up /tidb-data/pd-2379 /tidb-deploy/pd-2379
192.168.174.147:2379 pd 192.168.174.147 2379/2380 linux/x86_64 Up|UI /tidb-data/pd-2379 /tidb-deploy/pd-2379
192.168.174.145:9090 prometheus 192.168.174.145 9090/12020 linux/x86_64 Up /tidb-data/prometheus-9090 /tidb-deploy/prometheus-9090
192.168.174.145:4000 tidb 192.168.174.145 4000/10080 linux/x86_64 Up - /tidb-deploy/tidb-4000
192.168.174.146:4000 tidb 192.168.174.146 4000/10080 linux/x86_64 Up - /tidb-deploy/tidb-4000
192.168.174.147:4000 tidb 192.168.174.147 4000/10080 linux/x86_64 Up - /tidb-deploy/tidb-4000
192.168.174.145:9000 tiflash 192.168.174.145 9000/3930/20170/20292/8234/8123 linux/x86_64 Up /tidb-data/tiflash-9000 /tidb-deploy/tiflash-9000
192.168.174.146:9000 tiflash 192.168.174.146 9000/3930/20170/20292/8234/8123 linux/x86_64 Up /tidb-data/tiflash-9000 /tidb-deploy/tiflash-9000
192.168.174.145:20160 tikv 192.168.174.145 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160
192.168.174.146:20160 tikv 192.168.174.146 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160
192.168.174.147:20160 tikv 192.168.174.147 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160
192.168.174.145:6000 tiproxy 192.168.174.145 6000/3080 linux/x86_64 Up - /tiproxy-deploy
192.168.174.146:6000 tiproxy 192.168.174.146 6000/3080 linux/x86_64 Up - /tiproxy-deploy
Total nodes: 19
```

All 19 instances are now Up. In the Status column, `Up|L` marks the PD leader and `Up|UI` marks the PD instance that serves TiDB Dashboard.
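Checking 19 rows by eye is error-prone. Here is a minimal sketch that lists any instance whose Status is not Up. It assumes the column layout shown above (Status is the sixth field and instance IDs are IP:port); helper names are hypothetical.

```shell
#!/usr/bin/env bash
# Sketch: report instances that are not Up in `tiup cluster display` output.
# Assumption: rows look like the ones above, so Status is field 6; PD values
# such as "Up|L" and "Up|UI" count as Up. Function names are hypothetical.
not_up() {
  awk '$1 ~ /^[0-9.]+:[0-9]+$/ && $6 !~ /^Up/ { print $1, $2, $6 }'
}

# Succeed only if no instance row on stdin is in a non-Up state;
# otherwise print the offending rows to stderr.
all_up() {
  local bad
  bad=$(not_up)
  [ -z "$bad" ] || { printf '%s\n' "$bad" >&2; return 1; }
}

# Example:
#   tiup cluster display tidb-test | all_up && echo "all instances are Up"
```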

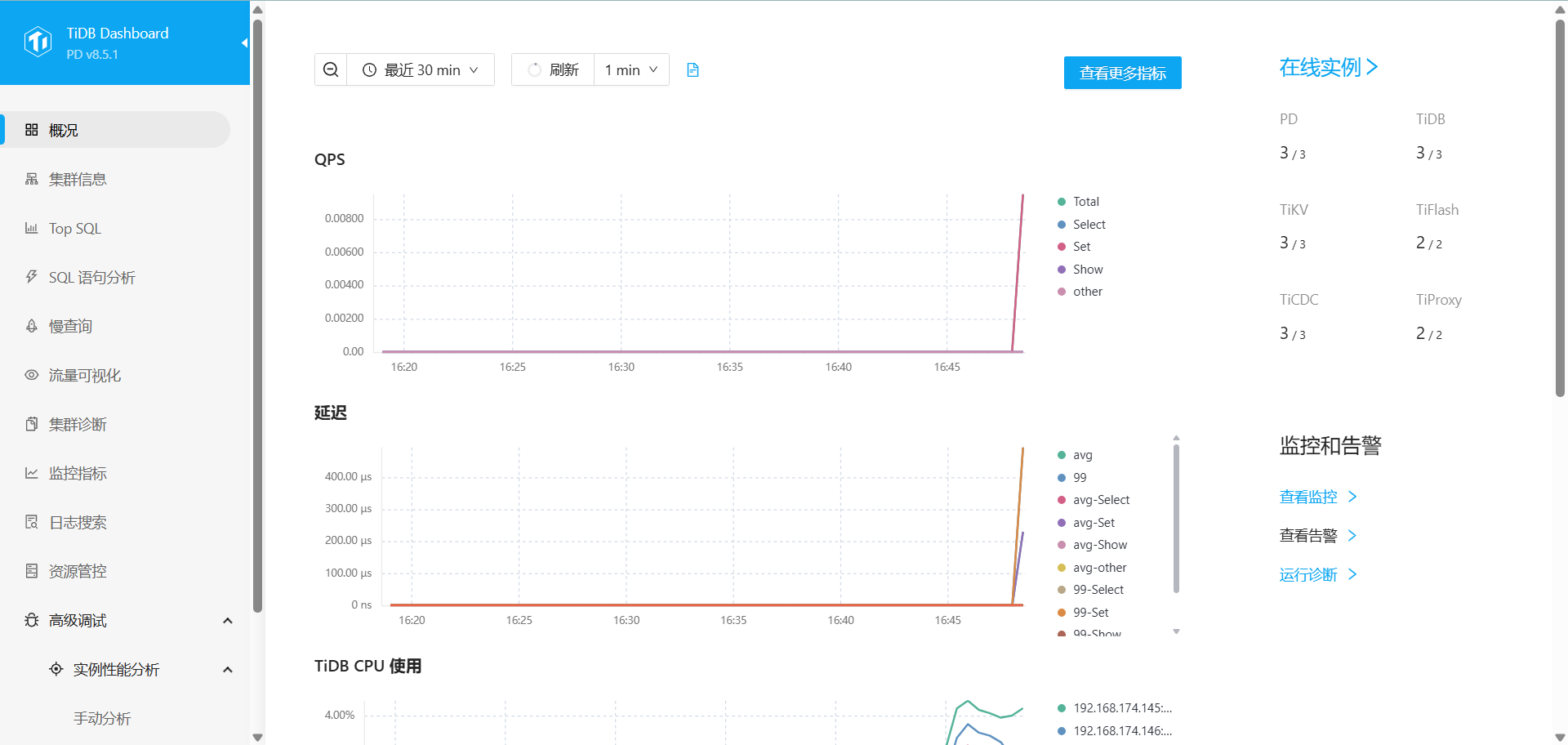

Access TiDB Dashboard

Dashboard URL: http://192.168.174.147:2379/dashboard

Log in as `root` with the password generated by `tiup cluster start --init`:

The new password is: 'Y3+V^*6@v0z5RJ4f7Z'.


192.168.174.145:6000 tiproxy 192.168.174.145 6000/3080 linux/x86_64 Down - /tiproxy-deploy

192.168.174.146:6000 tiproxy 192.168.174.146 6000/3080 linux/x86_64 Down - /tiproxy-deploy

Total nodes: 19

tiup cluster start tidb-test --init

Starting cluster tidb-test...

+ [ Serial ] - SSHKeySet: privateKey=/root/.tiup/storage/cluster/clusters/tidb-test/ssh/id_rsa, publicKey=/root/.tiup/storage/cluster/clusters/tidb-test/ssh/id_rsa.pub

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.146

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.147

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.145

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.147

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.146

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.145

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.147

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.145

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.146

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.145

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.146

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.147

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.145

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.145

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.145

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.146

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.145

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.146

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.145

+ [ Serial ] - StartCluster

Starting component pd

Starting instance 192.168.174.147:2379

Starting instance 192.168.174.145:2379

Starting instance 192.168.174.146:2379

Start instance 192.168.174.145:2379 success

Start instance 192.168.174.147:2379 success

Start instance 192.168.174.146:2379 success

Starting component tiproxy

Starting instance 192.168.174.146:6000

Starting instance 192.168.174.145:6000

Start instance 192.168.174.145:6000 success

Start instance 192.168.174.146:6000 success

Starting component tikv

Starting instance 192.168.174.147:20160

Starting instance 192.168.174.145:20160

Starting instance 192.168.174.146:20160

Start instance 192.168.174.145:20160 success

Start instance 192.168.174.146:20160 success

Start instance 192.168.174.147:20160 success

Starting component tidb

Starting instance 192.168.174.147:4000

Starting instance 192.168.174.145:4000

Starting instance 192.168.174.146:4000

Start instance 192.168.174.145:4000 success

Start instance 192.168.174.146:4000 success

Start instance 192.168.174.147:4000 success

Starting component tiflash

Starting instance 192.168.174.145:9000

Starting instance 192.168.174.146:9000

Start instance 192.168.174.145:9000 success

Start instance 192.168.174.146:9000 success

Starting component cdc

Starting instance 192.168.174.147:8300

Starting instance 192.168.174.146:8300

Starting instance 192.168.174.145:8300

Start instance 192.168.174.145:8300 success

Start instance 192.168.174.147:8300 success

Start instance 192.168.174.146:8300 success

Starting component prometheus

Starting instance 192.168.174.145:9090

Start instance 192.168.174.145:9090 success

Starting component grafana

Starting instance 192.168.174.145:3000

Start instance 192.168.174.145:3000 success

Starting component alertmanager

Starting instance 192.168.174.145:9093

Start instance 192.168.174.145:9093 success

Starting component node_exporter

Starting instance 192.168.174.146

Starting instance 192.168.174.147

Starting instance 192.168.174.145

Start 192.168.174.145 success

Start 192.168.174.147 success

Start 192.168.174.146 success

Starting component blackbox_exporter

Starting instance 192.168.174.146

Starting instance 192.168.174.147

Starting instance 192.168.174.145

Start 192.168.174.145 success

Start 192.168.174.146 success

Start 192.168.174.147 success

+ [ Serial ] - UpdateTopology: cluster=tidb-test

Started cluster `tidb-test` successfully

The root password of TiDB database has been changed.

The new password is: 'Y3+V^*6@v0z5RJ4f7Z'.

Copy and record it to somewhere safe, it is only displayed once, and will not be stored.

The generated password can NOT be get and shown again.

tiup cluster display tidb-test

Cluster type: tidb

Cluster name: tidb-test

Cluster version: v8.5.1

Deploy user: tidb

SSH type: builtin

Dashboard URL: http://192.168.174.147:2379/dashboard

Grafana URL: http://192.168.174.145:3000

ID Role Host Ports OS/Arch Status Data Dir Deploy Dir

-- ---- ---- ----- ------- ------ -------- ----------

192.168.174.145:9093 alertmanager 192.168.174.145 9093/9094 linux/x86_64 Up /tidb-data/alertmanager-9093 /tidb-deploy/alertmanager-9093

192.168.174.145:8300 cdc 192.168.174.145 8300 linux/x86_64 Up /tidb-data/cdc-8300 /tidb-deploy/cdc-8300

192.168.174.146:8300 cdc 192.168.174.146 8300 linux/x86_64 Up /tidb-data/cdc-8300 /tidb-deploy/cdc-8300

192.168.174.147:8300 cdc 192.168.174.147 8300 linux/x86_64 Up /tidb-data/cdc-8300 /tidb-deploy/cdc-8300

192.168.174.145:3000 grafana 192.168.174.145 3000 linux/x86_64 Up - /tidb-deploy/grafana-3000

192.168.174.145:2379 pd 192.168.174.145 2379/2380 linux/x86_64 Up|L /tidb-data/pd-2379 /tidb-deploy/pd-2379

192.168.174.146:2379 pd 192.168.174.146 2379/2380 linux/x86_64 Up /tidb-data/pd-2379 /tidb-deploy/pd-2379

192.168.174.147:2379 pd 192.168.174.147 2379/2380 linux/x86_64 Up|UI /tidb-data/pd-2379 /tidb-deploy/pd-2379

192.168.174.145:9090 prometheus 192.168.174.145 9090/12020 linux/x86_64 Up /tidb-data/prometheus-9090 /tidb-deploy/prometheus-9090

192.168.174.145:4000 tidb 192.168.174.145 4000/10080 linux/x86_64 Up - /tidb-deploy/tidb-4000

192.168.174.146:4000 tidb 192.168.174.146 4000/10080 linux/x86_64 Up - /tidb-deploy/tidb-4000

192.168.174.147:4000 tidb 192.168.174.147 4000/10080 linux/x86_64 Up - /tidb-deploy/tidb-4000

192.168.174.145:9000 tiflash 192.168.174.145 9000/3930/20170/20292/8234/8123 linux/x86_64 Up /tidb-data/tiflash-9000 /tidb-deploy/tiflash-9000

192.168.174.146:9000 tiflash 192.168.174.146 9000/3930/20170/20292/8234/8123 linux/x86_64 Up /tidb-data/tiflash-9000 /tidb-deploy/tiflash-9000

192.168.174.145:20160 tikv 192.168.174.145 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160

192.168.174.146:20160 tikv 192.168.174.146 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160

192.168.174.147:20160 tikv 192.168.174.147 20160/20180 linux/x86_64 Up /tidb-data/tikv-20160 /tidb-deploy/tikv-20160

192.168.174.145:6000 tiproxy 192.168.174.145 6000/3080 linux/x86_64 Up - /tiproxy-deploy

192.168.174.146:6000 tiproxy 192.168.174.146 6000/3080 linux/x86_64 Up - /tiproxy-deploy

Total nodes: 19

Open TiDB Dashboard at http://192.168.174.147:2379/dashboard and log in as `root` with the password generated by `tiup cluster start --init`: 'Y3+V^*6@v0z5RJ4f7Z'.

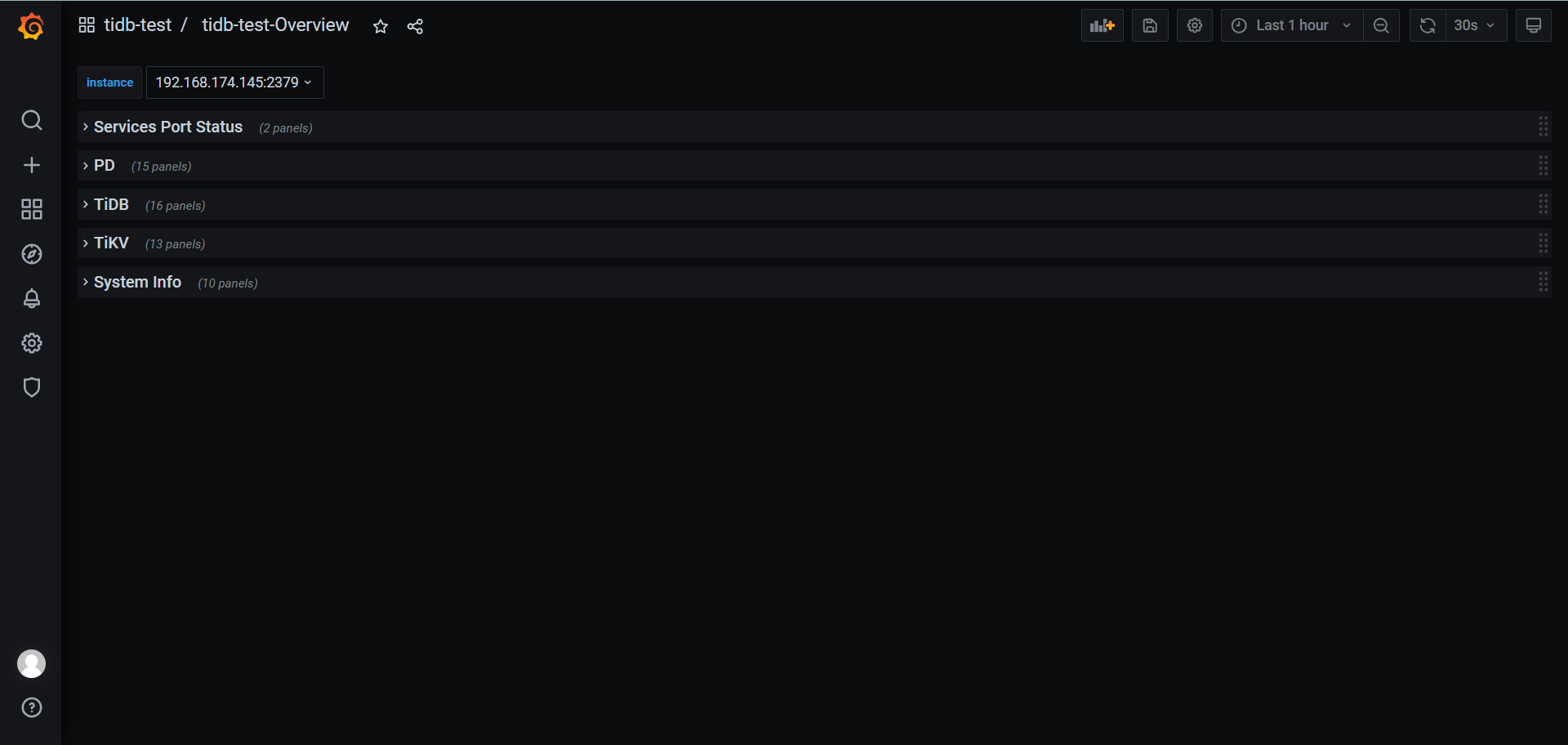

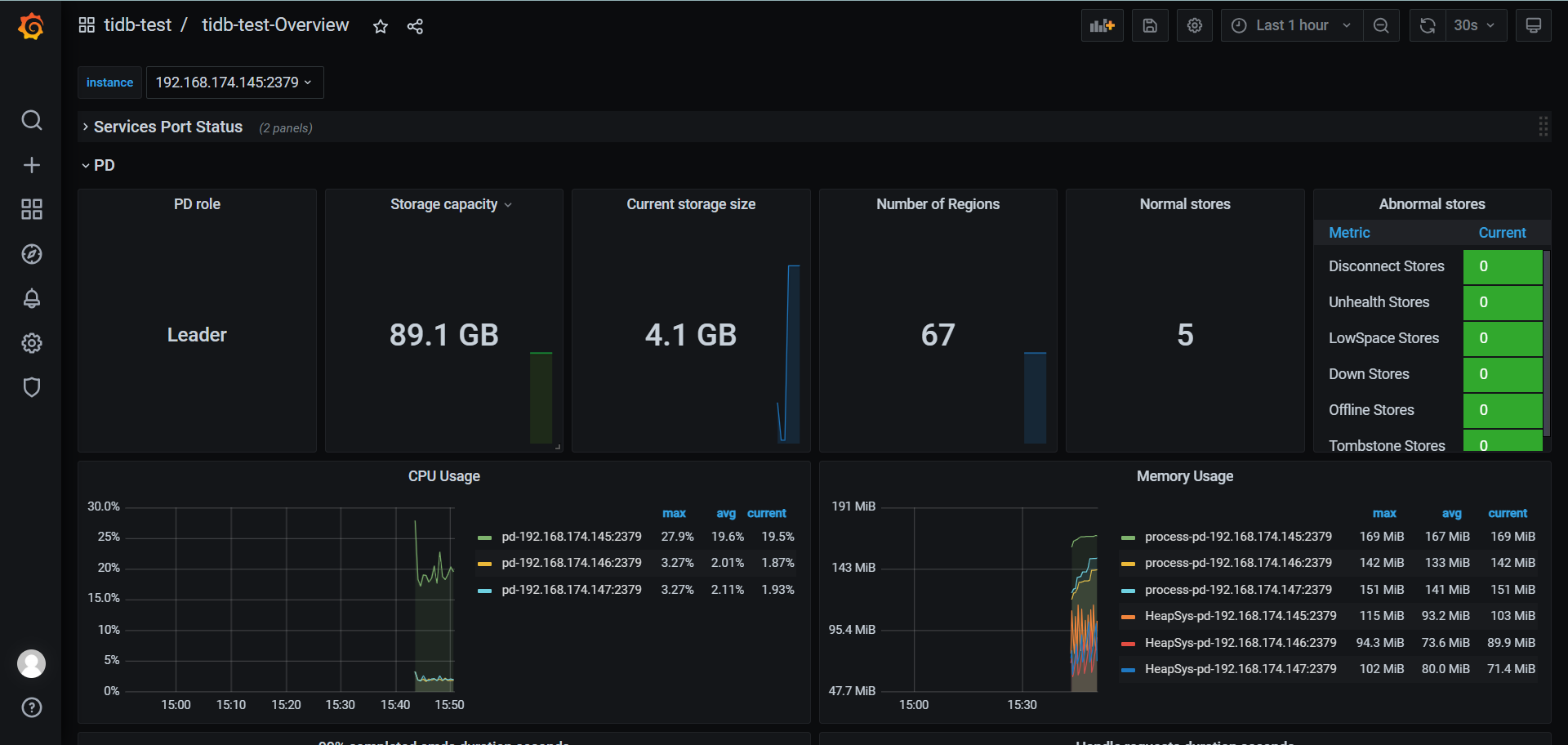
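The Status column of `tiup cluster display` is the quickest health signal after startup: every instance should show `Up`, with PD additionally flagging its leader (`Up|L`) and the node serving TiDB Dashboard (`Up|UI`). As a minimal sketch, assuming the column layout shown in the display output above, the hypothetical shell function `not_up` (not part of TiUP) lists any instance that is not `Up` and returns a non-zero exit status in that case.

```shell
#!/usr/bin/env bash
# Hypothetical helper (not a TiUP command): read `tiup cluster display` output
# on stdin and print "ID Role Status" for each instance whose Status is not Up.
# Assumes the columns ID Role Host Ports OS/Arch Status ... shown above.
not_up() {
  awk '
    # Instance rows begin with an ID such as 192.168.174.145:2379
    $1 ~ /^[0-9.]+:[0-9]+$/ {
      total++
      # "Up", "Up|L" (PD leader) and "Up|UI" (Dashboard) all count as healthy
      if ($6 !~ /^Up/) { bad++; print $1, $2, $6 }
    }
    # Exit status 1 if no instance rows were seen or any instance is not Up
    END { exit (total == 0 || bad > 0) }
  '
}
```

Usage: `tiup cluster display tidb-test | not_up && echo "all instances are Up"`. Run against the display taken before `tiup cluster start`, it would print every instance instead, since they are all `Down` or `N/A` at that point.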

View Grafana monitoring

Log in to Grafana at {Grafana-ip}:3000. The default username and password are admin/admin.

Verify the database

Log in to the database

Log in as `root` with the password generated at startup ('Y3+V^*6@v0z5RJ4f7Z'), either directly to a TiDB server:

mysql -u root -h 192.168.174.145 -P 4000 -p

or, as recommended, through TiProxy on the virtual IP configured by `ha.virtual-ip`:

mysql -u root -h 192.168.174.200 -P 6000 -p

Welcome to the MySQL monitor. Commands end with ; or \g.

Your MySQL connection id is 2237661196

Server version: 8.0.11-TiDB-v8.5.1 TiDB Server (Apache License 2.0) Community Edition, MySQL 8.0 compatible

Copyright (c) 2000, 2022, Oracle and/or its affiliates.

Oracle is a registered trademark of Oracle Corporation and/or its

affiliates. Other names may be trademarks of their respective

owners.

Type 'help;' or '\h' for help. Type '\c' to clear the current input statement.

mysql>

Database operations

select tidb_version()\G

*************************** 1. row ***************************

tidb_version(): Release Version: v8.5.1

Edition: Community

Git Commit Hash: fea86c8e35ad4a86a5e1160701f99493c2ee547c

Git Branch: HEAD

UTC Build Time: 2025-01-16 07:38:34

GoVersion: go1.23.4

Race Enabled: false

Check Table Before Drop: false

Store: tikv

1 row in set (0.00 sec)

Uninstall the TiDB cluster

tiup cluster destroy tidb-test

This operation will destroy tidb v8.5.1 cluster tidb-test and its data.

Are you sure to continue?

(Type "Yes, I know my cluster and data will be deleted." to continue)

: Yes, I know my cluster and data will be deleted.

Destroying cluster...

+ [ Serial ] - SSHKeySet: privateKey=/root/.tiup/storage/cluster/clusters/tidb-test/ssh/id_rsa, publicKey=/root/.tiup/storage/cluster/clusters/tidb-test/ssh/id_rsa.pub

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.146

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.146

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.147

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.146

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.147

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.145

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.145

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.145

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.147

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.145

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.146

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.146

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.147

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.145

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.145

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.145

+ [Parallel] - UserSSH: user=tidb, host=192.168.174.145

+ [ Serial ] - StopCluster

Stopping component alertmanager

Stopping instance 192.168.174.145

Stop alertmanager 192.168.174.145:9093 success

Stopping component grafana

Stopping instance 192.168.174.145

Stop grafana 192.168.174.145:3000 success

Stopping component prometheus

Stopping instance 192.168.174.145

Stop prometheus 192.168.174.145:9090 success

Stopping component cdc

Stopping instance 192.168.174.145

Stop cdc 192.168.174.145:8300 success

Stopping instance 192.168.174.146

Stop cdc 192.168.174.146:8300 success

Stopping instance 192.168.174.147

Stop cdc 192.168.174.147:8300 success

Stopping component tiflash

Stopping instance 192.168.174.146

Stopping instance 192.168.174.145

Stop tiflash 192.168.174.145:9000 success

Stop tiflash 192.168.174.146:9000 success

Stopping component tidb

Stopping instance 192.168.174.147

Stopping instance 192.168.174.145

Stopping instance 192.168.174.146

Stop tidb 192.168.174.145:4000 success

Stop tidb 192.168.174.147:4000 success

Stop tidb 192.168.174.146:4000 success

Stopping component tikv

Stopping instance 192.168.174.147

Stopping instance 192.168.174.146

Stopping instance 192.168.174.145

Stop tikv 192.168.174.145:20160 success

Stop tikv 192.168.174.147:20160 success

Stop tikv 192.168.174.146:20160 success

Stopping component pd

Stopping instance 192.168.174.147

Stopping instance 192.168.174.145

Stopping instance 192.168.174.146

Stop pd 192.168.174.145:2379 success

Stop pd 192.168.174.147:2379 success

Stop pd 192.168.174.146:2379 success

Stopping component node_exporter

Stopping instance 192.168.174.147

Stopping instance 192.168.174.145

Stopping instance 192.168.174.146

Stop 192.168.174.146 success

Stop 192.168.174.147 success

Stop 192.168.174.145 success

Stopping component blackbox_exporter

Stopping instance 192.168.174.147

Stopping instance 192.168.174.145

Stopping instance 192.168.174.146

Stop 192.168.174.145 success

Stop 192.168.174.146 success

Stop 192.168.174.147 success

+ [ Serial ] - DestroyCluster

Destroying component alertmanager

Destroying instance 192.168.174.145

Destroy 192.168.174.145 finished

- Destroy alertmanager paths: [/tidb-data/alertmanager-9093 /tidb-deploy/alertmanager-9093/log /tidb-deploy/alertmanager-9093 /etc/systemd/system/alertmanager-9093.service]

Destroying component grafana

Destroying instance 192.168.174.145

Destroy 192.168.174.145 finished

- Destroy grafana paths: [/tidb-deploy/grafana-3000 /etc/systemd/system/grafana-3000.service]

Destroying component prometheus

Destroying instance 192.168.174.145

Destroy 192.168.174.145 finished

- Destroy prometheus paths: [/tidb-deploy/prometheus-9090 /etc/systemd/system/prometheus-9090.service /tidb-data/prometheus-9090 /tidb-deploy/prometheus-9090/log]

Destroying component cdc

Destroying instance 192.168.174.145

Destroy 192.168.174.145 finished

- Destroy cdc paths: [/tidb-data/cdc-8300 /tidb-deploy/cdc-8300/log /tidb-deploy/cdc-8300 /etc/systemd/system/cdc-8300.service]

Destroying instance 192.168.174.146

Destroy 192.168.174.146 finished

- Destroy cdc paths: [/tidb-deploy/cdc-8300/log /tidb-deploy/cdc-8300 /etc/systemd/system/cdc-8300.service /tidb-data/cdc-8300]

Destroying instance 192.168.174.147

Destroy 192.168.174.147 finished

- Destroy cdc paths: [/tidb-data/cdc-8300 /tidb-deploy/cdc-8300/log /tidb-deploy/cdc-8300 /etc/systemd/system/cdc-8300.service]

Destroying component tiflash

Destroying instance 192.168.174.145

Destroy 192.168.174.145 finished

- Destroy tiflash paths: [/tidb-data/tiflash-9000 /tidb-deploy/tiflash-9000/log /tidb-deploy/tiflash-9000 /etc/systemd/system/tiflash-9000.service]

Destroying instance 192.168.174.146

Destroy 192.168.174.146 finished

- Destroy tiflash paths: [/tidb-data/tiflash-9000 /tidb-deploy/tiflash-9000/log /tidb-deploy/tiflash-9000 /etc/systemd/system/tiflash-9000.service]

Destroying component tidb

Destroying instance 192.168.174.145

Destroy 192.168.174.145 finished

- Destroy tidb paths: [/tidb-deploy/tidb-4000 /etc/systemd/system/tidb-4000.service /tidb-deploy/tidb-4000/log]

Destroying instance 192.168.174.146

Destroy 192.168.174.146 finished

- Destroy tidb paths: [/tidb-deploy/tidb-4000/log /tidb-deploy/tidb-4000 /etc/systemd/system/tidb-4000.service]

Destroying instance 192.168.174.147

Destroy 192.168.174.147 finished

- Destroy tidb paths: [/tidb-deploy/tidb-4000/log /tidb-deploy/tidb-4000 /etc/systemd/system/tidb-4000.service]

Destroying component tikv

Destroying instance 192.168.174.145

Destroy 192.168.174.145 finished

- Destroy tikv paths: [/tidb-data/tikv-20160 /tidb-deploy/tikv-20160/log /tidb-deploy/tikv-20160 /etc/systemd/system/tikv-20160.service]

Destroying instance 192.168.174.146

Destroy 192.168.174.146 finished

- Destroy tikv paths: [/tidb-data/tikv-20160 /tidb-deploy/tikv-20160/log /tidb-deploy/tikv-20160 /etc/systemd/system/tikv-20160.service]

Destroying instance 192.168.174.147

Destroy 192.168.174.147 finished

- Destroy tikv paths: [/tidb-data/tikv-20160 /tidb-deploy/tikv-20160/log /tidb-deploy/tikv-20160 /etc/systemd/system/tikv-20160.service]

Destroying component pd

Destroying instance 192.168.174.145

Destroy 192.168.174.145 finished

- Destroy pd paths: [/tidb-data/pd-2379 /tidb-deploy/pd-2379/log /tidb-deploy/pd-2379 /etc/systemd/system/pd-2379.service]

Destroying instance 192.168.174.146

Destroy 192.168.174.146 finished

- Destroy pd paths: [/tidb-data/pd-2379 /tidb-deploy/pd-2379/log /tidb-deploy/pd-2379 /etc/systemd/system/pd-2379.service]

Destroying instance 192.168.174.147

Destroy 192.168.174.147 finished

- Destroy pd paths: [/tidb-data/pd-2379 /tidb-deploy/pd-2379/log /tidb-deploy/pd-2379 /etc/systemd/system/pd-2379.service]

Destroying monitored 192.168.174.145

Destroying instance 192.168.174.145

Destroy monitored on 192.168.174.145 success

Destroying monitored 192.168.174.146

Destroying instance 192.168.174.146

Destroy monitored on 192.168.174.146 success

Destroying monitored 192.168.174.147

Destroying instance 192.168.174.147

Destroy monitored on 192.168.174.147 success

Clean global directories 192.168.174.145

Clean directory /tidb-deploy on instance 192.168.174.145

Clean directory /tidb-data on instance 192.168.174.145

Clean global directories 192.168.174.145 success

Clean global directories 192.168.174.146

Clean directory /tidb-deploy on instance 192.168.174.146

Clean directory /tidb-data on instance 192.168.174.146

Clean global directories 192.168.174.146 success

Clean global directories 192.168.174.147

Clean directory /tidb-deploy on instance 192.168.174.147

Clean directory /tidb-data on instance 192.168.174.147

Clean global directories 192.168.174.147 success

Delete public key 192.168.174.147

Delete public key 192.168.174.147 success

Delete public key 192.168.174.145

Delete public key 192.168.174.145 success

Delete public key 192.168.174.146

Delete public key 192.168.174.146 success

Destroyed cluster `tidb-test` successfully

topology.yaml
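TiUP's own pre-flight check for a topology file is `tiup cluster check ./topology.yaml --user root -p`, which inspects the target hosts. A quick count of instances per role can complement it: PD relies on a Raft majority, so an odd number of PD nodes (three here) is the usual choice, and the default of three replicas needs at least three TiKV stores. The shell functions `count_hosts` and `check_topology` are hypothetical helpers, a rough text-based sketch that assumes top-level sections start in column 0 as in the file below; they are not a YAML parser.

```shell
#!/usr/bin/env bash
# Hypothetical helpers (not part of TiUP). Rough text parsing of a topology file:
# assumes top-level keys such as pd_servers: start in column 0 and that each
# instance is a "- host:" list item underneath its section.
count_hosts() {
  local section=$1 file=$2
  awk -v section="$section" '
    # Any unindented "key:" line starts a new top-level section
    /^[A-Za-z_]+:/ { in_section = ($1 == (section ":")); next }
    # Count list items of the form "- host: ..." inside the wanted section
    in_section && /^[[:space:]]*-[[:space:]]*host:/ { n++ }
    END { print n + 0 }
  ' "$file"
}

check_topology() {
  local file=$1 pd tikv
  pd=$(count_hosts pd_servers "$file")
  tikv=$(count_hosts tikv_servers "$file")
  # PD elects its leader by Raft majority, so an odd member count is used
  if (( pd % 2 == 0 )); then
    echo "pd_servers: expected an odd count, got $pd"
    return 1
  fi
  # The default 3 replicas must sit on 3 different TiKV stores
  if (( tikv < 3 )); then
    echo "tikv_servers: expected at least 3, got $tikv"
    return 1
  fi
  echo "ok: pd=$pd tikv=$tikv"
}
```

Usage: `check_topology ./topology.yaml` should print `ok: pd=3 tikv=3` for the file below.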


```yaml
# # Global variables are applied to all deployments and used as the default value of
# # the deployments if a specific deployment value is missing.
global:
  # # The user who runs the tidb cluster.
  user: "tidb"
  # # group is used to specify the group name the user belongs to if it's not the same as user.
  # group: "tidb"
  # # systemd_mode is used to select whether to use sudo permissions. When its value is set to user, there is no need to add global.user to sudoers. The default value is system.
  # systemd_mode: "system"
  # # SSH port of servers in the managed cluster.
  ssh_port: 22
  # # Storage directory for cluster deployment files, startup scripts, and configuration files.
  deploy_dir: "/tidb-deploy"
  # # TiDB Cluster data storage directory
  data_dir: "/tidb-data"
  # # default listen_host for all components
  listen_host: 0.0.0.0
  # # Supported values: "amd64", "arm64" (default: "amd64")
  arch: "amd64"
  # # Resource Control is used to limit the resource of an instance.
  # # See: https://www.freedesktop.org/software/systemd/man/systemd.resource-control.html
  # # Supports using instance-level `resource_control` to override global `resource_control`.
  # resource_control:
  #   # See: https://www.freedesktop.org/software/systemd/man/systemd.resource-control.html#MemoryLimit=bytes
  #   memory_limit: "2G"
  #   # See: https://www.freedesktop.org/software/systemd/man/systemd.resource-control.html#CPUQuota=
  #   # The percentage specifies how much CPU time the unit shall get at maximum, relative to the total CPU time available on one CPU. Use values > 100% for allotting CPU time on more than one CPU.
  #   # Example: CPUQuota=200% ensures that the executed processes will never get more than two CPU time.
  #   cpu_quota: "200%"
  #   # See: https://www.freedesktop.org/software/systemd/man/systemd.resource-control.html#IOReadBandwidthMax=device%20bytes
  #   io_read_bandwidth_max: "/dev/disk/by-path/pci-0000:00:1f.2-scsi-0:0:0:0 100M"
  #   io_write_bandwidth_max: "/dev/disk/by-path/pci-0000:00:1f.2-scsi-0:0:0:0 100M"
component_versions:
  tiproxy: "v1.3.0"
# # Monitored variables are applied to all the machines.
monitored:
  # # The communication port for reporting system information of each node in the TiDB cluster.
  node_exporter_port: 9100
  # # Blackbox_exporter communication port, used for TiDB cluster port monitoring.
  blackbox_exporter_port: 9115
  # # Storage directory for deployment files, startup scripts, and configuration files of monitoring components.
  # deploy_dir: "/tidb-deploy/monitored-9100"
  # # Data storage directory of monitoring components.
  # data_dir: "/tidb-data/monitored-9100"
  # # Log storage directory of the monitoring component.
  # log_dir: "/tidb-deploy/monitored-9100/log"
# # Server configs are used to specify the runtime configuration of TiDB components.
# # All configuration items can be found in TiDB docs:
# # - TiDB: https://docs.pingcap.com/tidb/stable/tidb-configuration-file
# # - TiKV: https://docs.pingcap.com/tidb/stable/tikv-configuration-file
# # - PD: https://docs.pingcap.com/tidb/stable/pd-configuration-file
# # - TiFlash: https://docs.pingcap.com/tidb/stable/tiflash-configuration
# #
# # All configuration items use points to represent the hierarchy, e.g:
# #   readpool.storage.use-unified-pool
# #           ^       ^
# # - example: https://github.com/pingcap/tiup/blob/master/examples/topology.example.yaml.
# # You can overwrite this configuration via the instance-level `config` field.
server_configs:
  tidb:
    graceful-wait-before-shutdown: 15
    log.slow-threshold: 300
  tiproxy:
    ha.virtual-ip: "192.168.174.200/24"
    ha.interface: "ens160"
    graceful-wait-before-shutdown: 15
  tikv:
    # server.grpc-concurrency: 4
    # raftstore.apply-pool-size: 2
    # raftstore.store-pool-size: 2
    # rocksdb.max-sub-compactions: 1
    # storage.block-cache.capacity: "16GB"
    # readpool.unified.max-thread-count: 12
    readpool.storage.use-unified-pool: false
    readpool.coprocessor.use-unified-pool: true
  pd:
    schedule.leader-schedule-limit: 4
    schedule.region-schedule-limit: 2048
    schedule.replica-schedule-limit: 64
    replication.enable-placement-rules: true
  tiflash:
    # Maximum memory usage for processing a single query. Zero means unlimited.
    profiles.default.max_memory_usage: 0
    # Maximum memory usage for processing all concurrently running queries on the server. Zero means unlimited.
    profiles.default.max_memory_usage_for_all_queries: 0
  tiflash-learner:
    # The allowable number of threads in the pool that flushes Raft data to storage.
    raftstore.apply-pool-size: 4
    # The allowable number of threads that process Raft, which is the size of the Raftstore thread pool.
    raftstore.store-pool-size: 4
# # Server configs are used to specify the configuration of PD Servers.
pd_servers:
  # # The ip address of the PD Server.
  - host: 192.168.174.145
    # # SSH port of the server.
    # ssh_port: 22
    # # PD Server name
    # name: "pd-1"
    # # communication port for TiDB Servers to connect.
    # client_port: 2379
    # # Communication port among PD Server nodes.
    # peer_port: 2380
    # # PD Server deployment file, startup script, configuration file storage directory.
    # deploy_dir: "/tidb-deploy/pd-2379"
    # # PD Server data storage directory.
    # data_dir: "/tidb-data/pd-2379"
    # # PD Server log file storage directory.
    # log_dir: "/tidb-deploy/pd-2379/log"
    # # numa node bindings.
    # numa_node: "0,1"
    # # The following configs are used to overwrite the `server_configs.pd` values.
    # config:
    #   schedule.max-merge-region-size: 20
    #   schedule.max-merge-region-keys: 200000
  - host: 192.168.174.146
    # ssh_port: 22
    # name: "pd-2"
    # client_port: 2379
    # peer_port: 2380
    # deploy_dir: "/tidb-deploy/pd-2379"
    # data_dir: "/tidb-data/pd-2379"
    # log_dir: "/tidb-deploy/pd-2379/log"
    # numa_node: "0,1"
    # config:
    #   schedule.max-merge-region-size: 20
    #   schedule.max-merge-region-keys: 200000
  - host: 192.168.174.147
    # ssh_port: 22
    # name: "pd-3"
    # client_port: 2379
    # peer_port: 2380
    # deploy_dir: "/tidb-deploy/pd-2379"
    # data_dir: "/tidb-data/pd-2379"
    # log_dir: "/tidb-deploy/pd-2379/log"
    # numa_node: "0,1"
    # config:
    #   schedule.max-merge-region-size: 20
    #   schedule.max-merge-region-keys: 200000
# # Server configs are used to specify the configuration of TiDB Servers.
tidb_servers:
  # # The ip address of the TiDB Server.
  - host: 192.168.174.145
    # # SSH port of the server.
    # ssh_port: 22
    # # The port for clients to access the TiDB cluster.
    # port: 4000
    # # TiDB Server status API port.
    # status_port: 10080
    # # TiDB Server deployment file, startup script, configuration file storage directory.
    # deploy_dir: "/tidb-deploy/tidb-4000"
    # # TiDB Server log file storage directory.
    # log_dir: "/tidb-deploy/tidb-4000/log"
  # # The ip address of the TiDB Server.
  - host: 192.168.174.146
    # ssh_port: 22
    # port: 4000
    # status_port: 10080
    # deploy_dir: "/tidb-deploy/tidb-4000"
    # log_dir: "/tidb-deploy/tidb-4000/log"
  - host: 192.168.174.147
    # ssh_port: 22
    # port: 4000
    # status_port: 10080
    # deploy_dir: "/tidb-deploy/tidb-4000"
    # log_dir: "/tidb-deploy/tidb-4000/log"
# # Server configs are used to specify the configuration of TiKV Servers.
tikv_servers:
  # # The ip address of the TiKV Server.
  - host: 192.168.174.145
    # # SSH port of the server.
    # ssh_port: 22
    # # TiKV Server communication port.
    # port: 20160
    # # TiKV Server status API port.
    # status_port: 20180
    # # TiKV Server deployment file, startup script, configuration file storage directory.
    # deploy_dir: "/tidb-deploy/tikv-20160"
    # # TiKV Server data storage directory.
    # data_dir: "/tidb-data/tikv-20160"
    # # TiKV Server log file storage directory.
    # log_dir: "/tidb-deploy/tikv-20160/log"
    # # The following configs are used to overwrite the `server_configs.tikv` values.
    # config:
    #   log.level: warn
  # # The ip address of the TiKV Server.
  - host: 192.168.174.146
    # ssh_port: 22
    # port: 20160
    # status_port: 20180
    # deploy_dir: "/tidb-deploy/tikv-20160"
    # data_dir: "/tidb-data/tikv-20160"
    # log_dir: "/tidb-deploy/tikv-20160/log"
    # config:
    #   log.level: warn
  - host: 192.168.174.147
    # ssh_port: 22
    # port: 20160
    # status_port: 20180
    # deploy_dir: "/tidb-deploy/tikv-20160"
    # data_dir: "/tidb-data/tikv-20160"
    # log_dir: "/tidb-deploy/tikv-20160/log"
    # config:
    #   log.level: warn
# # Server configs are used to specify the configuration of TiFlash Servers.
tiflash_servers:
  # # The ip address of the TiFlash Server.
  - host: 192.168.174.145
    # ssh_port: 22
    # tcp_port: 9000
    # flash_service_port: 3930
    # flash_proxy_port: 20170
    # flash_proxy_status_port: 20292
    # metrics_port: 8234
    # deploy_dir: "/tidb-deploy/tiflash-9000"
    ## The `data_dir` will be overwritten if you define `storage.main.dir` configurations in the `config` section.
    # data_dir: "/tidb-data/tiflash-9000"
    # log_dir: "/tidb-deploy/tiflash-9000/log"
    # numa_node: "0,1"
    # # The following configs are used to overwrite the `server_configs.tiflash` values.
    # config:
    #   logger.level: "info"
    #   ## Multi-disk deployment introduced in v4.0.9
    #   ## Check https://docs.pingcap.com/tidb/stable/tiflash-configuration#multi-disk-deployment for more details.
    #   ## Example1:
    #   # storage.main.dir: [ "/nvme_ssd0_512/tiflash", "/nvme_ssd1_512/tiflash" ]
    #   # storage.main.capacity: [ 536870912000, 536870912000 ]
    #   ## Example2:
    #   # storage.main.dir: [ "/sata_ssd0_512/tiflash", "/sata_ssd1_512/tiflash", "/sata_ssd2_512/tiflash" ]
    #   # storage.latest.dir: [ "/nvme_ssd0_150/tiflash" ]
    #   # storage.main.capacity: [ 536870912000, 536870912000, 536870912000 ]
    #   # storage.latest.capacity: [ 161061273600 ]
    # learner_config:
    #   log-level: "info"
    #   server.labels:
    #     zone: "zone2"
    #     dc: "dc2"
    #     host: "host2"
  # # The ip address of the TiFlash Server.
  - host: 192.168.174.146
    # ssh_port: 22
    # tcp_port: 9000
    # flash_service_port: 3930
    # flash_proxy_port: 20170
    # flash_proxy_status_port: 20292
    # metrics_port: 8234
    # deploy_dir: /tidb-deploy/tiflash-9000
    # data_dir: /tidb-data/tiflash-9000
    # log_dir: /tidb-deploy/tiflash-9000/log
# # Server configs are used to specify the configuration of Prometheus Server.
monitoring_servers:
  # # The ip address of the Monitoring Server.
  - host: 192.168.174.145
    # # SSH port of the server.
    # ssh_port: 22
    # # Prometheus Service communication port.
    # port: 9090
    # # ng-monitoring service communication port
    # ng_port: 12020
    # # Prometheus deployment file, startup script, configuration file storage directory.
    # deploy_dir: "/tidb-deploy/prometheus-8249"
    # # Prometheus data storage directory.
    # data_dir: "/tidb-data/prometheus-8249"
    # # Prometheus log file storage directory.
    # log_dir: "/tidb-deploy/prometheus-8249/log"
# # Server configs are used to specify the configuration of Grafana Servers.
grafana_servers:
  # # The ip address of the Grafana Server.
  - host: 192.168.174.145
    # # Grafana web port (browser access)
    # port: 3000
    # # Grafana deployment file, startup script, configuration file storage directory.
    # deploy_dir: /tidb-deploy/grafana-3000
# # Server configs are used to specify the configuration of Alertmanager Servers.
alertmanager_servers:
  # # The ip address of the Alertmanager Server.
  - host: 192.168.174.145
    # # SSH port of the server.
    # ssh_port: 22
    # # Alertmanager web service port.
    # web_port: 9093
    # # Alertmanager communication port.
    # cluster_port: 9094
    # # Alertmanager deployment file, startup script, configuration file storage directory.
    # deploy_dir: "/tidb-deploy/alertmanager-9093"
    # # Alertmanager data storage directory.
    # data_dir: "/tidb-data/alertmanager-9093"
    # # Alertmanager log file storage directory.
    # log_dir: "/tidb-deploy/alertmanager-9093/log"
cdc_servers:
  - host: 192.168.174.145
    port: 8300
    deploy_dir: "/tidb-deploy/cdc-8300"
    data_dir: "/tidb-data/cdc-8300"
    log_dir: "/tidb-deploy/cdc-8300/log"
    # gc-ttl: 86400
    # ticdc_cluster_id: "cluster1"
  - host: 192.168.174.146
    port: 8300
    deploy_dir: "/tidb-deploy/cdc-8300"
    data_dir: "/tidb-data/cdc-8300"
    log_dir: "/tidb-deploy/cdc-8300/log"
    # gc-ttl: 86400
    # ticdc_cluster_id: "cluster1"
  - host: 192.168.174.147
    port: 8300
    deploy_dir: "/tidb-deploy/cdc-8300"
    data_dir: "/tidb-data/cdc-8300"
    log_dir: "/tidb-deploy/cdc-8300/log"
    # gc-ttl: 86400
    # ticdc_cluster_id: "cluster2"
tiproxy_servers:
  - host: 192.168.174.145
    deploy_dir: "/tiproxy-deploy"
    port: 6000
    status_port: 3080
    config:
      labels: { zone: "east" }
  - host: 192.168.174.146
    deploy_dir: "/tiproxy-deploy"
    port: 6000
    status_port: 3080
    config:
      labels: { zone: "west" }
```

References

https://docs.pingcap.com/zh/tidb/stable/production-deployment-using-tiup/

浙公網(wǎng)安備 33010602011771號(hào)
