Hands-on: deploying a Go service on k8s with rolling update, recreate, blue-green deployment, and canary release (repost)

1 Introduction

This article walks through deploying a Go service on k8s and demonstrates four release strategies: rolling update, recreate, blue-green deployment, and canary release.

2 Preparing the Go service image

2.1 Initialize the project

```bash
cd /Users/flying/Dev/Go/go-lesson/src/
mkdir goPublish
cd goPublish
go mod init goPublish
go get github.com/gin-gonic/gin   # add the gin dependency to go.mod/go.sum
```

2.2 Write main.go

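```go
package main

import (
	"flag"
	"net/http"
	"os"

	"github.com/gin-gonic/gin"
)

// version is reported in every response; set it at startup, e.g. ./main -v v1.3
var version = flag.String("v", "v1", "version string reported by the service")

func main() {
	// Parse the command-line flags once at startup, not on every request.
	flag.Parse()

	router := gin.Default()
	router.GET("/", func(c *gin.Context) {
		// Inside a pod the hostname is the pod name, which shows which replica answered.
		hostname, _ := os.Hostname()
		c.String(http.StatusOK, "This is version:%s running in pod %s", *version, hostname)
	})

	// Listen on port 8080, the containerPort used in the k8s manifests below.
	router.Run(":8080")
}
```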

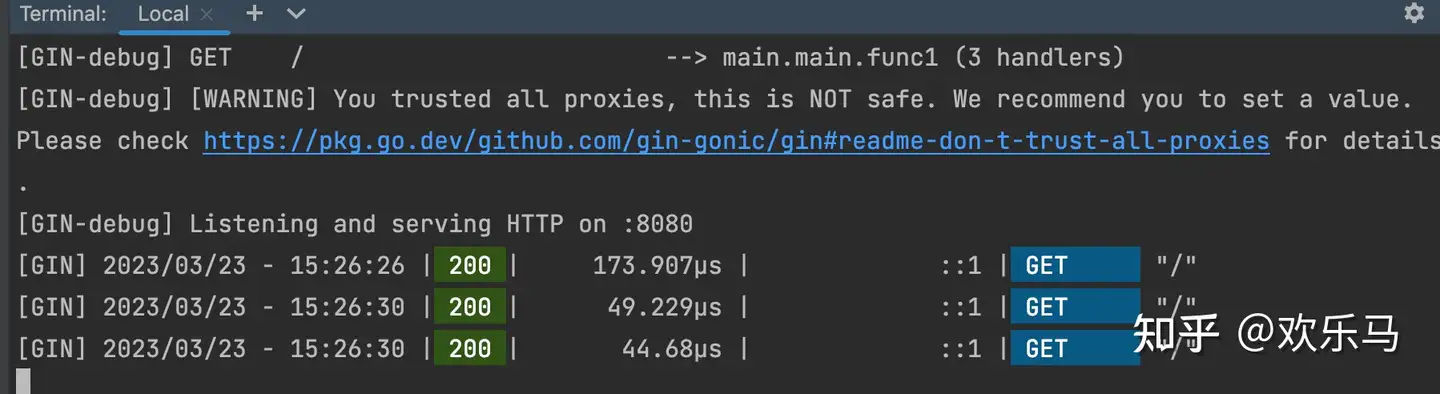

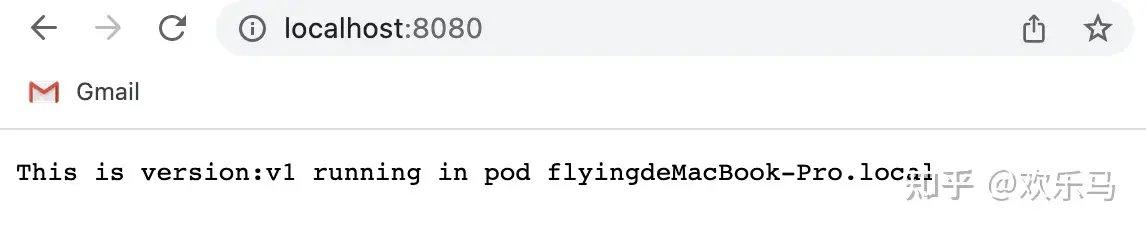

2.3 Run and test locally

2.4 Write the Dockerfile

Dockerfile for version v1.3:

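```dockerfile
# Build stage: compile the Go binary
FROM golang:latest AS build

WORKDIR /go/src/test
COPY . /go/src/test
# Use the goproxy.cn module mirror (helpful where proxy.golang.org is hard to reach)
RUN go env -w GOPROXY=https://goproxy.cn,direct
# Disable CGO so the binary is statically linked and runs on alpine
RUN CGO_ENABLED=0 go build -v -o main .

# Runtime stage: ship only the binary in a small alpine image
FROM alpine AS api
RUN mkdir /app
COPY --from=build /go/src/test/main /app
WORKDIR /app
ENTRYPOINT ["./main", "-v", "v1.3"]
```

2.5 Build the image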

```bash
docker build -t go-publish:v1.3 .
```

2.6 Push the image to Docker Hub

```bash
docker tag go-publish:v1.3 joycode123/go-publish:v1.3
# Requires a Docker Hub account and a prior docker login; replace joycode123 with your own account
docker push joycode123/go-publish:v1.3
```

2.7 Repeat the steps above to build the v2.0 image and push it to Docker Hub

Modify the Dockerfile (only the version passed with -v changes):

```dockerfile
FROM golang:latest AS build

WORKDIR /go/src/test
COPY . /go/src/test
RUN go env -w GOPROXY=https://goproxy.cn,direct
RUN CGO_ENABLED=0 go build -v -o main .

FROM alpine AS api
RUN mkdir /app
COPY --from=build /go/src/test/main /app
WORKDIR /app
# Only this version argument differs from the v1.3 Dockerfile
ENTRYPOINT ["./main", "-v", "v2.0"]
```

Build and push:

```bash
docker build -t go-publish:v2.0 .
docker tag go-publish:v2.0 joycode123/go-publish:v2.0
docker push joycode123/go-publish:v2.0
```

3 Rolling update

The remaining sections require a running k8s cluster; this demo uses a cluster with one master node and two worker nodes.

3.1 Write the k8s manifest rollingUpdate.yaml

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: rolling-update
  namespace: test
spec:
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  selector:
    matchLabels:
      app: rolling-update
  replicas: 4
  template:
    metadata:
      labels:
        app: rolling-update
    spec:
      containers:
        - name: rolling-update
          command: ["./main","-v","v1.3"]
          image: joycode123/go-publish:v1.3
          ports:
            - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: rolling-update
  namespace: test
spec:
  ports:
    - port: 8080
      protocol: TCP
      targetPort: 8080
  selector:
    app: rolling-update
  type: ClusterIP
```

3.2 Deploy v1.3 to k8s

```bash
kubectl create namespace test   # create the test namespace
kubectl apply -f rollingUpdate.yaml
```

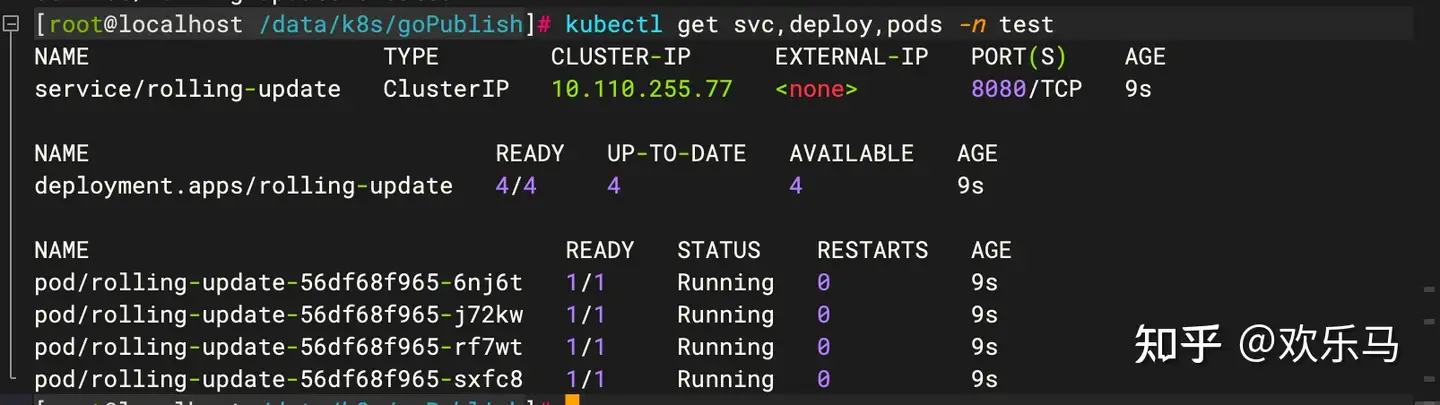

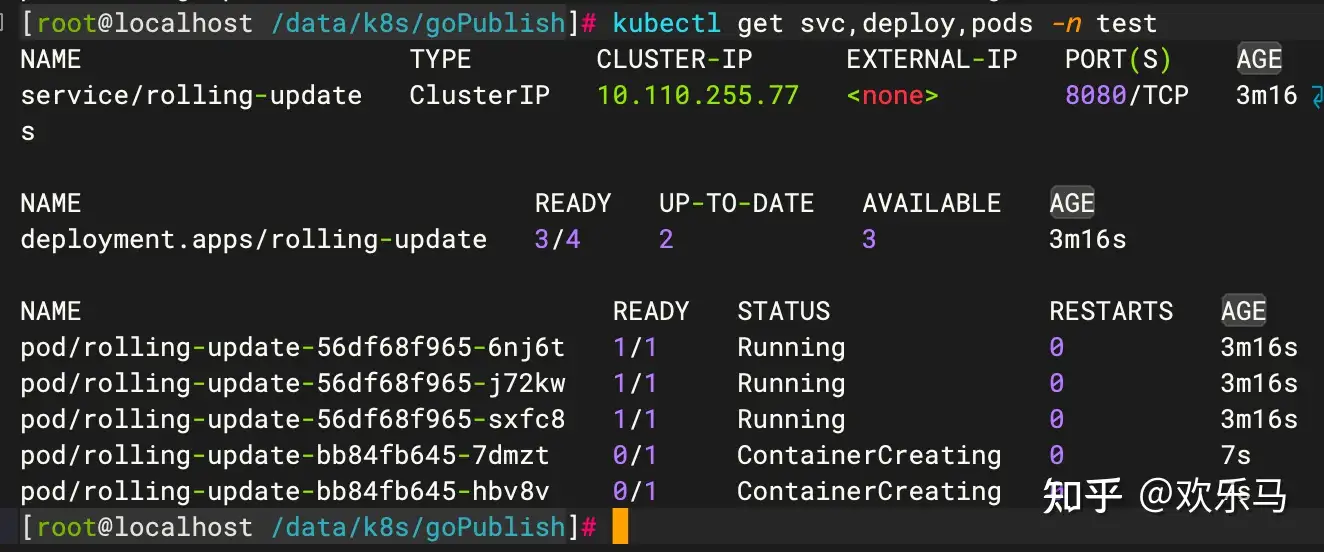

```bash
kubectl get svc,deploy,pods -n test
```

3.3 Test

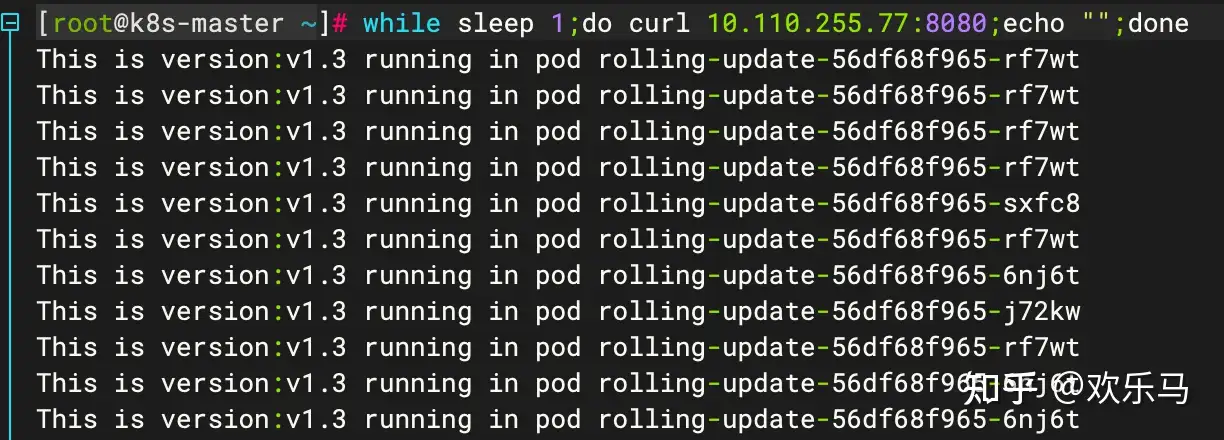

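To make the effect easy to see, open a new terminal window and run the command below. 10.110.255.77 is the ClusterIP of the rolling-update Service in this demo (check yours with kubectl get svc -n test); a ClusterIP is reachable only from inside the cluster, so run the loop on a cluster node.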

```bash
while sleep 1; do curl 10.110.255.77:8080; echo ""; done
```

3.4 Deploy v2.0 to k8s

Modify rollingUpdate.yaml; only the command and image change to v2.0:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: rolling-update
  namespace: test
spec:
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  selector:
    matchLabels:
      app: rolling-update
  replicas: 4
  template:
    metadata:
      labels:
        app: rolling-update
    spec:
      containers:
        - name: rolling-update
          command: ["./main","-v","v2.0"]
          image: joycode123/go-publish:v2.0
          ports:
            - containerPort: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: rolling-update
  namespace: test
spec:
  ports:
    - port: 8080
      protocol: TCP
      targetPort: 8080
  selector:
    app: rolling-update
  type: ClusterIP
```

```bash
kubectl apply -f rollingUpdate.yaml
```

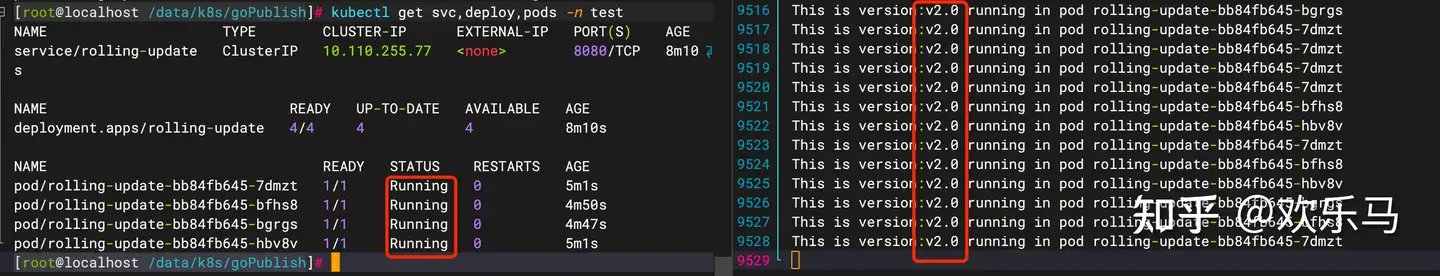

```bash
kubectl get svc,deploy,pods -n test
```

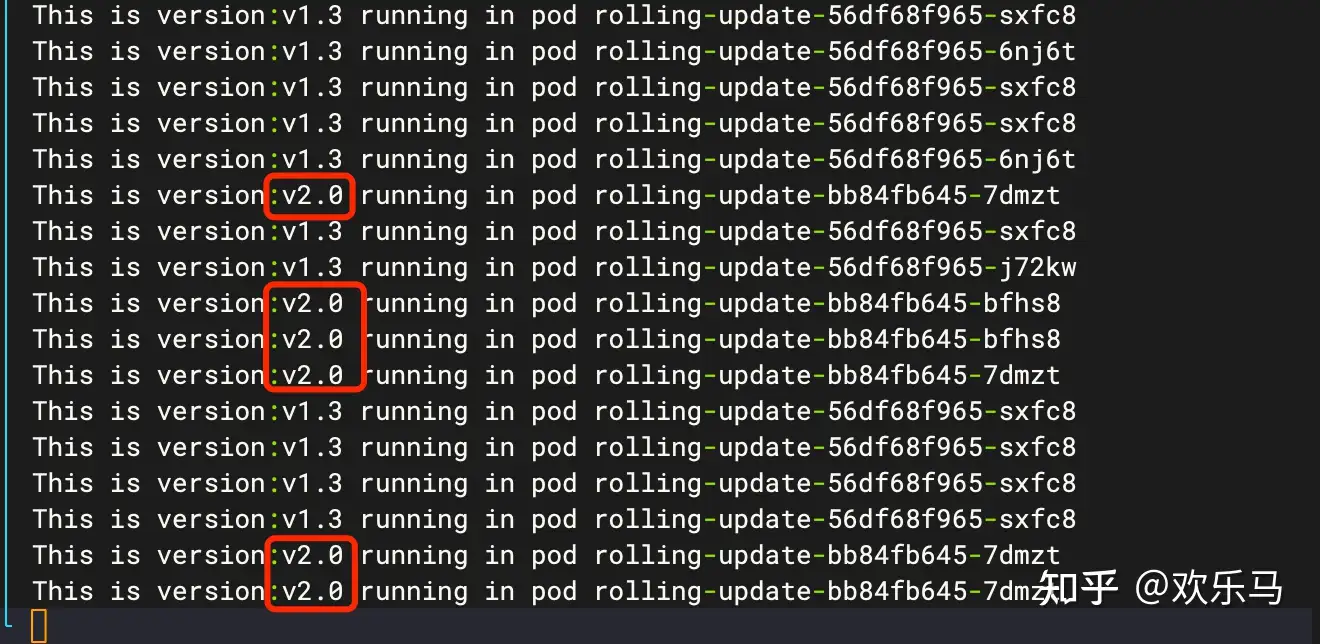

Watch the test window: until v2.0 is fully rolled out, v1.3 and v2.0 serve requests side by side; once the rollout completes, only v2.0 responds.
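How long and how widely the two versions overlap is bounded by `maxSurge` and `maxUnavailable`. Per the Deployment documentation, a percentage `maxSurge` is rounded up and a percentage `maxUnavailable` is rounded down, so with `replicas: 4` and 25% each, both resolve to 1 pod: during the rollout there are at most 5 pods and at least 3 available. The sketch below does that arithmetic; `rollingBounds` is a hypothetical helper that handles only percentage values.

```go
package main

import (
	"fmt"
	"math"
)

// rollingBounds resolves percentage maxSurge/maxUnavailable against a replica count:
// maxSurge rounds up, maxUnavailable rounds down. It returns the largest total pod
// count and the smallest available pod count allowed during a rollout.
// Absolute (non-percentage) values are not handled in this sketch.
func rollingBounds(replicas int, surgePct, unavailPct float64) (maxTotal, minAvailable int) {
	surge := int(math.Ceil(float64(replicas) * surgePct / 100))
	unavail := int(math.Floor(float64(replicas) * unavailPct / 100))
	// If both round to zero the rollout could never make progress,
	// so Kubernetes falls back to allowing one unavailable pod.
	if surge == 0 && unavail == 0 {
		unavail = 1
	}
	return replicas + surge, replicas - unavail
}

func main() {
	maxTotal, minAvail := rollingBounds(4, 25, 25)
	fmt.Printf("at most %d pods, at least %d available\n", maxTotal, minAvail)
}
```

For example, with 10 replicas at 25% the bounds become 13 and 8, because 2.5 rounds up to 3 for the surge and down to 2 for unavailability.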

4 Recreate deployment

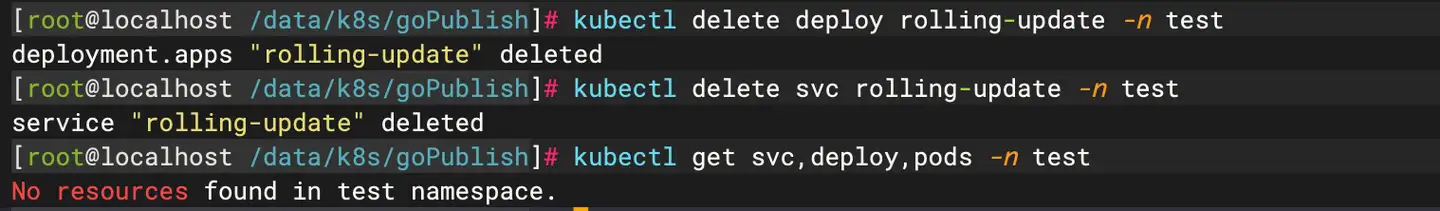

4.1 Delete the rolling-update Deployment and Service created earlier (deleting the Deployment also deletes its pods)

```bash
kubectl delete deploy rolling-update -n test
kubectl delete svc rolling-update -n test
```

4.2 Write recreate.yaml

apiVersion: apps/v1

kind: Deployment

metadata:

name: recreate

namespace: test

spec:

strategy:

type: Recreate

selector:

matchLabels:

app: recreate

replicas: 4

template:

metadata:

labels:

app: recreate

spec:

containers:

- name: recreate

image: joycode123/go-publish:v1.3

ports:

- containerPort: 8080

livenessProbe:

tcpSocket:

port: 8080

---

apiVersion: v1

kind: Service

metadata:

name: recreate

namespace: test

spec:

ports:

- port: 80

protocol: TCP

targetPort: 8080

selector:

app: recreate

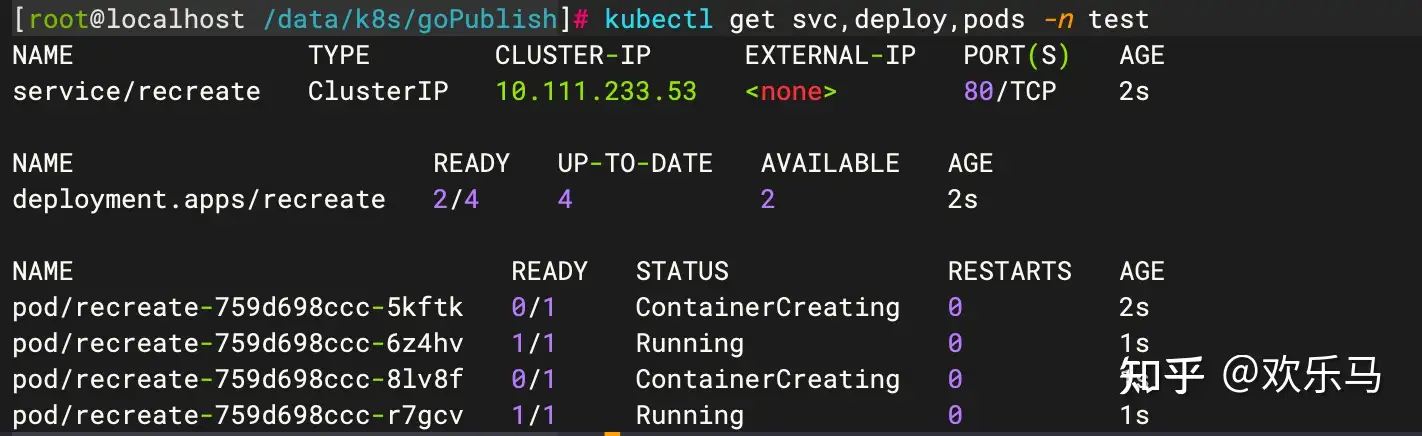

type: ClusterIP4.3 部署v1.3到k8s中

```bash
kubectl apply -f recreate.yaml
kubectl get svc,deploy,pods -n test
```

4.4 Test

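As before, open a new terminal window and run the loop below. 10.111.233.53 is the recreate Service's ClusterIP in this demo; because the Service listens on port 80 and forwards to container port 8080, the URL needs no port.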

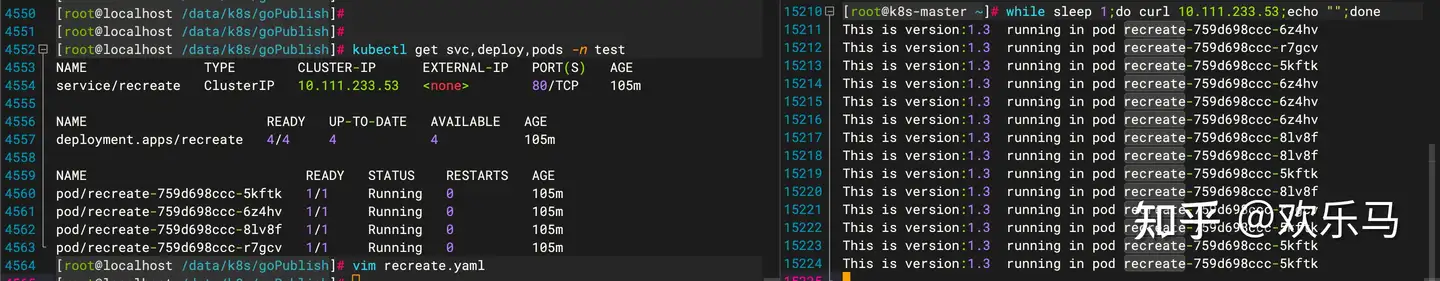

```bash
while sleep 1; do curl 10.111.233.53; echo ""; done
```

4.5 Deploy v2.0

Modify recreate.yaml; only the image changes to v2.0:

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: recreate
  namespace: test
spec:
  strategy:
    type: Recreate
  selector:
    matchLabels:
      app: recreate
  replicas: 4
  template:
    metadata:
      labels:
        app: recreate
    spec:
      containers:
        - name: recreate
          image: joycode123/go-publish:v2.0
          ports:
            - containerPort: 8080
          livenessProbe:
            tcpSocket:
              port: 8080
---
apiVersion: v1
kind: Service
metadata:
  name: recreate
  namespace: test
spec:
  ports:
    - port: 80
      protocol: TCP
      targetPort: 8080
  selector:
    app: recreate
  type: ClusterIP
```

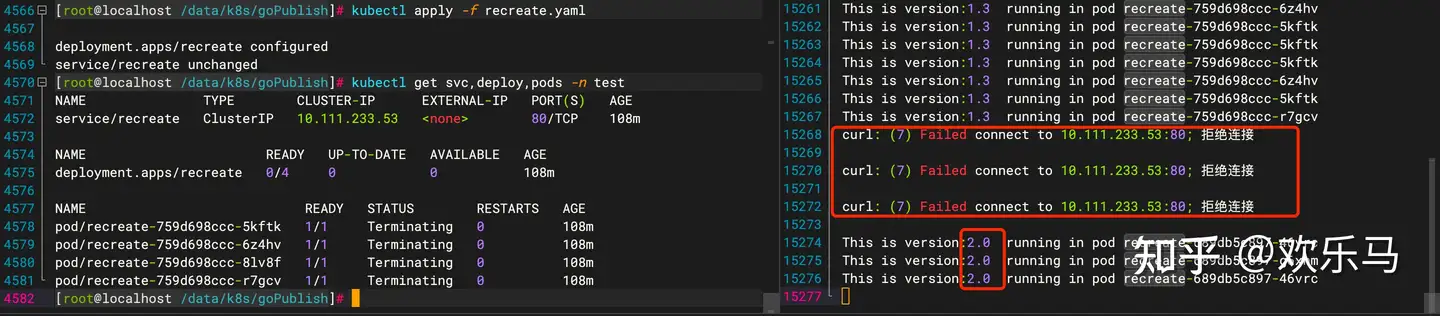

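```bash
kubectl apply -f recreate.yaml
kubectl get svc,deploy,pods -n test
```

Watch the test window: during the recreate, the service is briefly unavailable, because the Recreate strategy terminates all v1.3 pods before it creates any v2.0 pods.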
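To put a number on the interruption, each probe of the test loop can be recorded as success or failure; the longest run of consecutive failures, multiplied by the probe interval, approximates the outage. A minimal sketch, assuming the probe results have already been collected (for example by a loop like the curl one above, one probe per second); `longestOutage` and the sample probe log are hypothetical.

```go
package main

import "fmt"

// longestOutage returns the length of the longest run of consecutive failed probes
// and the index where that run starts (-1 if no probe failed).
// ok[i] is true when probe i received an HTTP response from the Service.
func longestOutage(ok []bool) (length, start int) {
	start = -1
	run := 0
	for i, success := range ok {
		if success {
			run = 0
			continue
		}
		run++
		if run > length {
			length = run
			start = i - run + 1
		}
	}
	return length, start
}

func main() {
	// Hypothetical probe log taken once per second during a Recreate rollout:
	// old pods answer, nothing answers while new pods start, then new pods answer.
	probes := []bool{true, true, false, false, false, false, true, true}
	n, at := longestOutage(probes)
	fmt.Printf("longest outage: %d probes starting at probe %d\n", n, at)
}
```

Running the same measurement against the rolling-update loop from section 3 should normally report no failed run at all, which is the practical difference between the two strategies.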

5 Blue-green deployment

5.1 Delete the previous deployment

```bash
kubectl delete deploy recreate -n test
kubectl delete svc recreate -n test
```

5.2 Write blueGreen.yaml (green version)

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: green
  namespace: test
spec:
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  selector:
    matchLabels:
      app: bluegreen
      version: v1.3   # keeps this selector from overlapping with the blue Deployment's
  replicas: 4
  template:
    metadata:
      labels:
        app: bluegreen
        version: v1.3
    spec:
      containers:
        - name: bluegreen
          image: joycode123/go-publish:v1.3
          ports:
            - containerPort: 8080
```

5.3 Deploy v1.3 (green version)

```bash
kubectl apply -f blueGreen.yaml
kubectl get deploy,pods -n test
```

5.4 Modify blueGreen.yaml (blue version)

```yaml
apiVersion: apps/v1
kind: Deployment
metadata:
  name: blue
  namespace: test
spec:
  strategy:
    rollingUpdate:
      maxSurge: 25%
      maxUnavailable: 25%
    type: RollingUpdate
  selector:
    matchLabels:
      app: bluegreen
      version: v2.0   # keeps this selector from overlapping with the green Deployment's
  replicas: 4
  template:
    metadata:
      labels:
        app: bluegreen
        version: v2.0
    spec:
      containers:
        - name: bluegreen
          image: joycode123/go-publish:v2.0
          ports:
            - containerPort: 8080
```

5.5 Deploy v2.0 (blue version)

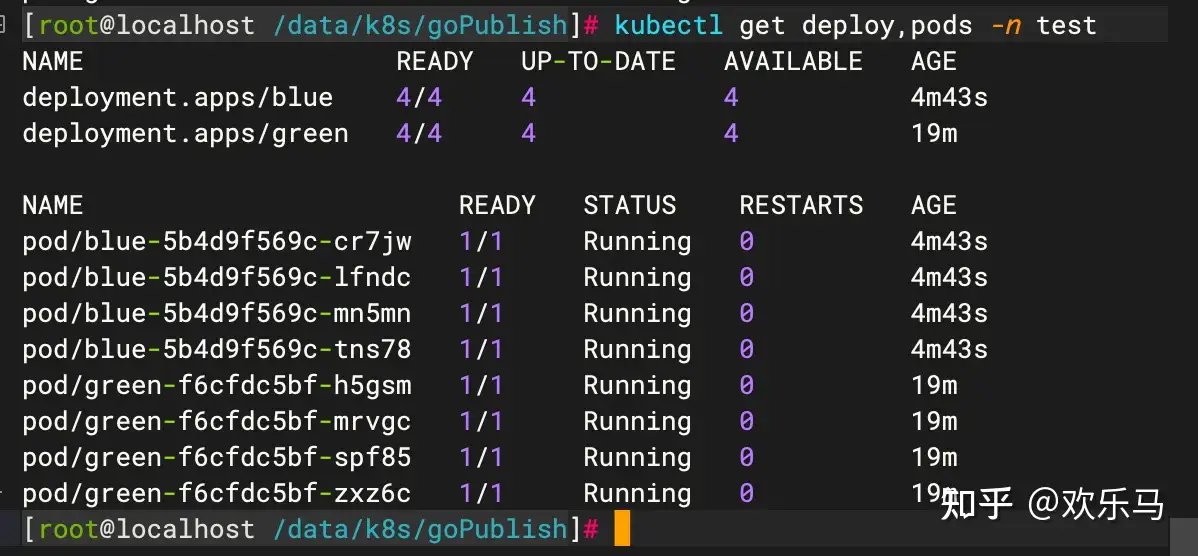

```bash
kubectl apply -f blueGreen.yaml
kubectl get deploy,pods -n test
```

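At this point both the blue and the green version are running in k8s: the blue Deployment has a different name, so applying it creates a second Deployment instead of replacing green.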

5.6 Write blueGreenService.yaml

```yaml
apiVersion: v1
kind: Service
metadata:
  name: bluegreen
  namespace: test
spec:
  ports:
    - port: 80
      protocol: TCP
      targetPort: 8080
  selector:
    app: bluegreen   # note: app must match the app label in blueGreen.yaml
    version: v1.3
  type: ClusterIP
```

kubectl apply -f blueGreenService.yaml

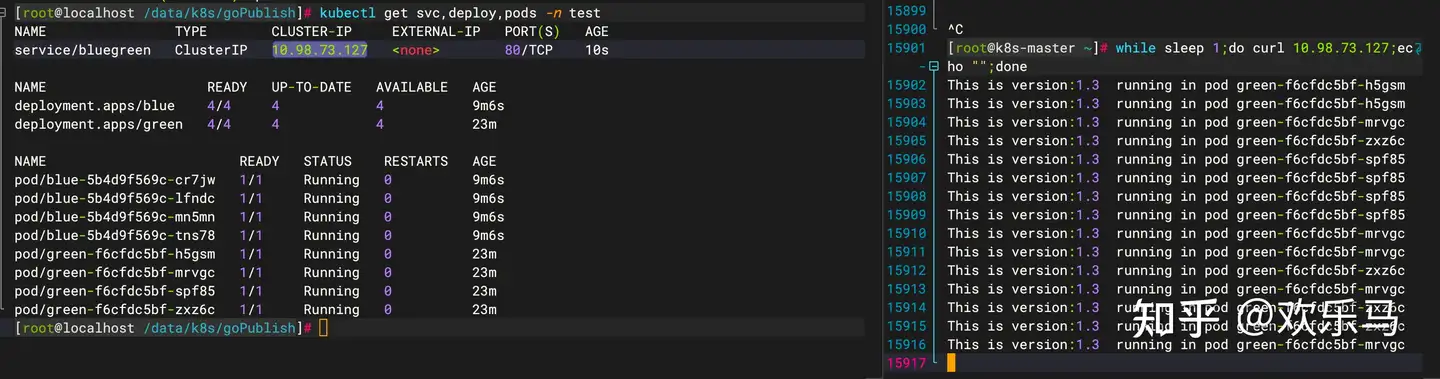

kubectl get svc,deploy,pods -n test5.7 測試訪問

```bash
while sleep 1; do curl 10.98.73.127; echo ""; done
```

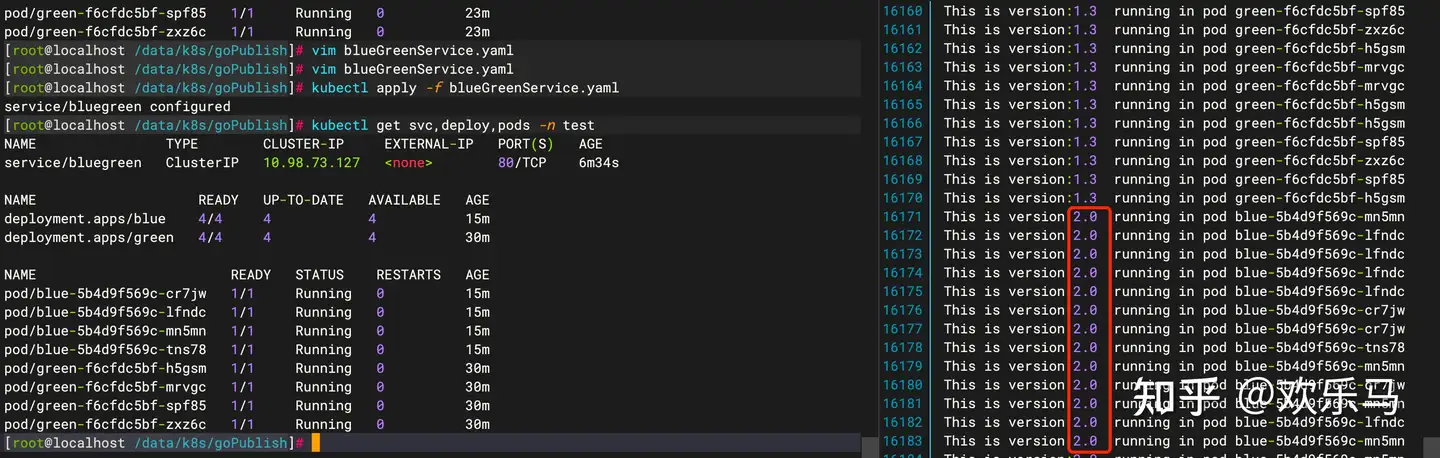

5.8 Switch to the blue version (v2.0)

Modify blueGreenService.yaml:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: bluegreen
  namespace: test
spec:
  ports:
    - port: 80
      protocol: TCP
      targetPort: 8080
  selector:
    app: bluegreen
    version: v2.0
  type: ClusterIP
```

```bash
kubectl apply -f blueGreenService.yaml
kubectl get svc,deploy,pods -n test
```

As this shows, switching between the blue and green versions only requires changing the version in the selector of blueGreenService.yaml and re-applying it.
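The switch works because a Service sends traffic to every pod whose labels contain all of the key/value pairs in its selector. The sketch below models that equality-based matching with a hypothetical `selects` function and the pod labels from blueGreen.yaml; it ignores pod readiness and only shows which pods each selector picks, including the version-free selector used for the canary release.

```go
package main

import "fmt"

// selects reports whether a Service selector matches a pod's labels:
// every selector key must be present on the pod with the same value.
// Extra pod labels (such as pod-template-hash) are ignored.
func selects(selector, podLabels map[string]string) bool {
	for k, v := range selector {
		if got, ok := podLabels[k]; !ok || got != v {
			return false
		}
	}
	return true
}

func main() {
	// Pod template labels of the green (v1.3) and blue (v2.0) Deployments.
	pods := map[string]map[string]string{
		"green": {"app": "bluegreen", "version": "v1.3"},
		"blue":  {"app": "bluegreen", "version": "v2.0"},
	}
	selectors := []map[string]string{
		{"app": "bluegreen", "version": "v1.3"}, // 5.6: green only
		{"app": "bluegreen", "version": "v2.0"}, // 5.8: blue only
		{"app": "bluegreen"},                    // 6: both versions
	}
	for _, sel := range selectors {
		for _, name := range []string{"green", "blue"} {
			fmt.Printf("%v selects %s: %v\n", sel, name, selects(sel, pods[name]))
		}
	}
}
```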

6 Canary release

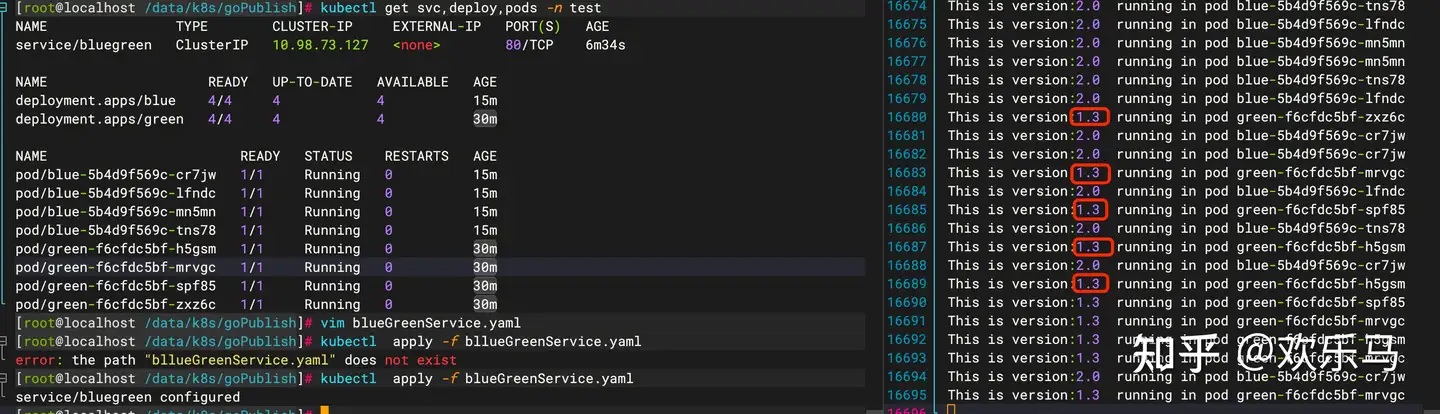

Building on the blue-green setup, modify blueGreenService.yaml:

```yaml
apiVersion: v1
kind: Service
metadata:
  name: bluegreen
  namespace: test
spec:
  ports:
    - port: 80
      protocol: TCP
      targetPort: 8080
  selector:
    app: bluegreen
  type: ClusterIP
```

Compared with the previous YAML, the only change is that the version key/value pair under selector has been removed entirely.

```bash
kubectl apply -f blueGreenService.yaml
```

Then test again:

Now both the blue and the green version serve requests. To weight the traffic between them, adjust the replica counts of the blue and green Deployments.
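With the version key removed, every ready pod of either Deployment is an endpoint of the Service, and kube-proxy spreads connections across endpoints roughly evenly, so a version's expected share of traffic is about its fraction of the pods. The sketch turns a target canary percentage into replica counts under that assumption; `canarySplit` and `share` are hypothetical helpers, and rounding to the nearest pod (keeping at least one canary and one stable pod) is an illustrative choice.

```go
package main

import (
	"fmt"
	"math"
)

// canarySplit returns how many of total pods (total >= 2) should run the canary
// version to receive roughly pct percent of the traffic, keeping at least one
// canary pod and one stable pod. It assumes traffic is spread evenly per ready pod.
func canarySplit(total int, pct float64) (canary, stable int) {
	canary = int(math.Round(float64(total) * pct / 100))
	if canary < 1 {
		canary = 1
	}
	if canary > total-1 {
		canary = total - 1
	}
	return canary, total - canary
}

// share returns the expected fraction of requests served by n of total pods.
func share(n, total int) float64 {
	return float64(n) / float64(total)
}

func main() {
	c, s := canarySplit(10, 10)
	fmt.Printf("canary=%d stable=%d expected canary share=%.0f%%\n", c, s, 100*share(c, c+s))
}
```

With the article's 4 blue and 4 green pods the expected split is 50/50; for a 10% canary among 10 pods you would run 1 canary and 9 stable replicas, for example by changing `replicas` in each Deployment or with `kubectl scale`. The split is only statistical, so short test runs will fluctuate around it.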

Reposted from: https://zhuanlan.zhihu.com/p/617025075

Zhejiang Public Network Security Filing No. 33010602011771
