Several Ways to Run the HPCG Benchmark

0 Introduction to HPCG

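HPCG (High Performance Conjugate Gradients) is a benchmark for evaluating high-performance computing systems. It measures how a supercomputer actually performs on sparse-matrix, memory-access-intensive workloads. That makes it a closer match than the traditional HPL (LINPACK) benchmark for many scientific and engineering applications.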

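HPL (LINPACK) solves a dense linear system and focuses on floating-point throughput (FLOPS). It reflects a processor's near-peak compute capability well, but it is skewed toward compute-bound workloads.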

HPCG, by contrast, mainly stresses:

- Sparse matrix access

- Memory bandwidth

- Cache efficiency

- Communication latency

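As a result, a system's HPCG score is typically only about 0.3% to 4% of its HPL score. This is much closer to the performance that real HPC applications achieve.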
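The memory-bandwidth point can be made concrete with a back-of-the-envelope roofline estimate. This is a minimal sketch under stated assumptions, not a model of HPCG's actual kernels:

- The sparse matrix is stored in CSR form with 8-byte double values and 4-byte column indices.
- Each nonzero therefore costs 2 flops (multiply and add) for about 12 bytes of matrix traffic.
- Vector traffic and cache reuse are ignored.
- The memory configuration is a hypothetical 16 channels of DDR5-4800, loosely based on the 16 DIMMs of the Kunpeng machine in section 2.

All helper names are illustrative.

```python
# Rough roofline bound for sparse matrix-vector multiply (SpMV).
# Assumptions (not taken from HPCG): CSR storage, 8-byte values,
# 4-byte column indices, vector and row-pointer traffic ignored.

FLOPS_PER_NONZERO = 2          # one multiply + one add
BYTES_PER_NONZERO = 8 + 4      # double value + int32 column index


def spmv_intensity():
    """Arithmetic intensity in flop/byte for the assumed CSR layout."""
    return FLOPS_PER_NONZERO / BYTES_PER_NONZERO


def peak_bandwidth_gbs(channels, mega_transfers, bytes_per_transfer=8):
    """Theoretical DRAM bandwidth in GB/s (assumes 64-bit = 8-byte channels)."""
    return channels * mega_transfers * 1e6 * bytes_per_transfer / 1e9


def spmv_gflops_bound(bandwidth_gbs):
    """Upper bound on SpMV GFLOP/s if memory bandwidth were the only limit."""
    return bandwidth_gbs * spmv_intensity()


if __name__ == "__main__":
    # Hypothetical configuration: 16 channels of DDR5-4800.
    bw = peak_bandwidth_gbs(channels=16, mega_transfers=4800)
    print(f"peak bandwidth ~ {bw:.1f} GB/s")
    print(f"SpMV bound     ~ {spmv_gflops_bound(bw):.1f} GFLOP/s")
```

Even with several hundred GB/s of bandwidth, the bound lands around a hundred GFLOP/s, far below the machine's floating-point peak. This is why HPCG results sit at a small fraction of HPL.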

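HPCG runs the preconditioned conjugate gradient (PCG) iteration for solving a three-dimensional Poisson equation. The iteration involves:

- Sparse matrix-vector multiplication (SpMV)

- Vector updates (AXPY; called WAXPBY in the HPCG output, w = alpha*x + beta*y)

- Dot products (DDOT)

- Global communication (MPI_Allreduce)

- A multigrid preconditioner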
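The loop structure above can be sketched in serial Python. This is a minimal illustration, not HPCG's implementation. The matrix mirrors HPCG's 27-point stencil problem: diagonal 26, neighbours -1, and a right-hand side chosen so the exact solution is all ones.

The sketch deliberately simplifies two things:

- It swaps the multigrid V-cycle for a single symmetric Gauss-Seidel sweep.
- It runs serially, so the dot product is only where an MPI_Allreduce would sum per-rank partial results.

The function names (build_problem, symgs, pcg, etc.) are hypothetical.

```python
from itertools import product


def build_problem(n):
    """27-point stencil on an n x n x n grid: diagonal 26, neighbours -1.
    b is each row's sum, so the exact solution is a vector of ones."""
    rows, b = [], []
    for iz, iy, ix in product(range(n), repeat=3):
        row = {}
        for dz, dy, dx in product((-1, 0, 1), repeat=3):
            jz, jy, jx = iz + dz, iy + dy, ix + dx
            if 0 <= jz < n and 0 <= jy < n and 0 <= jx < n:
                j = (jz * n + jy) * n + jx
                row[j] = 26.0 if (dz, dy, dx) == (0, 0, 0) else -1.0
        rows.append(row)
        b.append(26.0 - (len(row) - 1))
    return rows, b


def spmv(rows, x):                      # SpMV: y = A x
    return [sum(v * x[j] for j, v in row.items()) for row in rows]


def dot(x, y):                          # DDOT; across ranks this would need MPI_Allreduce
    return sum(a * c for a, c in zip(x, y))


def waxpby(alpha, x, beta, y):          # WAXPBY: w = alpha*x + beta*y
    return [alpha * a + beta * c for a, c in zip(x, y)]


def symgs(rows, r):
    """One forward + one backward Gauss-Seidel sweep on A z = r, starting at z = 0.
    Stands in for the multigrid preconditioner in this sketch."""
    z = [0.0] * len(rows)
    for order in (range(len(rows)), reversed(range(len(rows)))):
        for i in order:
            s = r[i] - sum(v * z[j] for j, v in rows[i].items() if j != i)
            z[i] = s / rows[i][i]
    return z


def pcg(rows, b, max_iter=50, tol=1e-10):
    x = [0.0] * len(b)
    r = list(b)                         # r = b - A x with x = 0
    z = symgs(rows, r)
    p = list(z)
    rz = dot(r, z)
    norm0 = dot(r, r) ** 0.5
    for k in range(1, max_iter + 1):
        ap = spmv(rows, p)
        alpha = rz / dot(p, ap)
        x = waxpby(1.0, x, alpha, p)
        r = waxpby(1.0, r, -alpha, ap)
        if dot(r, r) ** 0.5 / norm0 < tol:  # relative residual check
            return x, k
        z = symgs(rows, r)
        rz_new = dot(r, z)
        p = waxpby(1.0, z, rz_new / rz, p)
        rz = rz_new
    return x, max_iter


if __name__ == "__main__":
    rows, b = build_problem(4)
    x, iters = pcg(rows, b)
    print(iters, max(abs(v - 1.0) for v in x))
```

Each iteration performs one SpMV, one preconditioner application, three dot products and three vector updates. These are the same kernels that the DDOT, WAXPBY, SpMV and MG timings in the HPCG reports below break down.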

1 Running the Reference Source Code

- Install dependencies, clone the code, and copy a build configuration file

# dnf install -y gcc gcc-c++ make cmake openmpi openmpi-devel

# git clone https://github.com/hpcg-benchmark/hpcg.git

# cd hpcg/setup

# cp Make.Linux_MPI Make.kunpeng

- Edit the build configuration file

# vim Make.kunpeng

#HEADER

# -- High Performance Conjugate Gradient Benchmark (HPCG)

# HPCG - 3.1 - March 28, 2019

# Michael A. Heroux

# Scalable Algorithms Group, Computing Research Division

# Sandia National Laboratories, Albuquerque, NM

#

# Piotr Luszczek

# Jack Dongarra

# University of Tennessee, Knoxville

# Innovative Computing Laboratory

#

# (C) Copyright 2013-2019 All Rights Reserved

#

#

# -- Copyright notice and Licensing terms:

#

# Redistribution and use in source and binary forms, with or without

# modification, are permitted provided that the following conditions

# are met:

#

# 1. Redistributions of source code must retain the above copyright

# notice, this list of conditions and the following disclaimer.

#

# 2. Redistributions in binary form must reproduce the above copyright

# notice, this list of conditions, and the following disclaimer in the

# documentation and/or other materials provided with the distribution.

#

# 3. All advertising materials mentioning features or use of this

# software must display the following acknowledgement:

# This product includes software developed at Sandia National

# Laboratories, Albuquerque, NM and the University of

# Tennessee, Knoxville, Innovative Computing Laboratory.

#

# 4. The name of the University, the name of the Laboratory, or the

# names of its contributors may not be used to endorse or promote

# products derived from this software without specific written

# permission.

#

# -- Disclaimer:

#

# THIS SOFTWARE IS PROVIDED BY THE COPYRIGHT HOLDERS AND CONTRIBUTORS

# ``AS IS'' AND ANY EXPRESS OR IMPLIED WARRANTIES, INCLUDING, BUT NOT

# LIMITED TO, THE IMPLIED WARRANTIES OF MERCHANTABILITY AND FITNESS FOR

# A PARTICULAR PURPOSE ARE DISCLAIMED. IN NO EVENT SHALL THE UNIVERSITY

# OR CONTRIBUTORS BE LIABLE FOR ANY DIRECT, INDIRECT, INCIDENTAL,

# SPECIAL, EXEMPLARY, OR CONSEQUENTIAL DAMAGES (INCLUDING, BUT NOT

# LIMITED TO, PROCUREMENT OF SUBSTITUTE GOODS OR SERVICES; LOSS OF USE,

# DATA OR PROFITS; OR BUSINESS INTERRUPTION) HOWEVER CAUSED AND ON ANY

# THEORY OF LIABILITY, WHETHER IN CONTRACT, STRICT LIABILITY, OR TORT

# (INCLUDING NEGLIGENCE OR OTHERWISE) ARISING IN ANY WAY OUT OF THE USE

# OF THIS SOFTWARE, EVEN IF ADVISED OF THE POSSIBILITY OF SUCH DAMAGE.

# ######################################################################

#@HEADER

# ----------------------------------------------------------------------

# - shell --------------------------------------------------------------

# ----------------------------------------------------------------------

#

SHELL = /bin/sh

#

CD = cd

CP = cp

LN_S = ln -s -f

MKDIR = mkdir -p

RM = /bin/rm -f

TOUCH = touch

#

# ----------------------------------------------------------------------

# - HPCG Directory Structure / HPCG library ------------------------------

# ----------------------------------------------------------------------

#

TOPdir = .

SRCdir = $(TOPdir)/src

INCdir = $(TOPdir)/src

BINdir = $(TOPdir)/bin

#

# ----------------------------------------------------------------------

# - Message Passing library (MPI) --------------------------------------

# ----------------------------------------------------------------------

# MPinc tells the C compiler where to find the Message Passing library

# header files, MPlib is defined to be the name of the library to be

# used. The variable MPdir is only used for defining MPinc and MPlib.

#

MPdir = /usr/lib64/openmpi

MPinc = -I$(MPdir)/include

MPlib = -L$(MPdir)/lib -lmpi

#

#

# ----------------------------------------------------------------------

# - HPCG includes / libraries / specifics -------------------------------

# ----------------------------------------------------------------------

#

HPCG_INCLUDES = -I$(INCdir) -I$(INCdir)/$(arch) $(MPinc)

HPCG_LIBS =

#

# - Compile time options -----------------------------------------------

#

# -DHPCG_NO_MPI Define to disable MPI

# -DHPCG_NO_OPENMP Define to disable OPENMP

# -DHPCG_CONTIGUOUS_ARRAYS Define to have sparse matrix arrays long and contiguous

# -DHPCG_DEBUG Define to enable debugging output

# -DHPCG_DETAILED_DEBUG Define to enable very detailed debugging output

#

# By default HPCG will:

# *) Build with MPI enabled.

# *) Build with OpenMP enabled.

# *) Not generate debugging output.

#

HPCG_OPTS = -DHPCG_NO_OPENMP

#

# ----------------------------------------------------------------------

#

HPCG_DEFS = $(HPCG_OPTS) $(HPCG_INCLUDES)

#

# ----------------------------------------------------------------------

# - Compilers / linkers - Optimization flags ---------------------------

# ----------------------------------------------------------------------

#

CXX = mpicxx

#CXXFLAGS = $(HPCG_DEFS) -fomit-frame-pointer -O3 -funroll-loops -W -Wall

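# Keep $(HPCG_DEFS) so the include paths and HPCG_OPTS (-DHPCG_NO_OPENMP) reach the compiler

CXXFLAGS = $(HPCG_DEFS) -O3 -march=armv8-a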

#

LINKER = $(CXX)

LINKFLAGS = $(CXXFLAGS)

#

ARCHIVER = ar

ARFLAGS = r

RANLIB = echo

USE_CUDA = 0

#

# ----------------------------------------------------------------------

#

Note that CUDA is disabled here.

- Build

cd ..

make arch=kunpeng

cd bin

- Prepare the input file

Default input file (hpcg.dat):

HPCG benchmark input file

Sandia National Laboratories; University of Tennessee, Knoxville

104 104 104

60

Our input file (hpcg.dat):

HPCG benchmark input file

Sandia National Laboratories; University of Tennessee, Knoxville

128 128 128

300

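The third line gives the per-process (local) grid dimensions nx ny nz. The last line is the requested run time in seconds.

At least 1800 s is recommended, since official results require it (see the Final Summary below). Runs shorter than 300 s may produce inaccurate results.

- Run: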
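To avoid typos when trying several sizes, the input file can be generated by a small script. This is a minimal sketch; make_hpcg_dat and is_official_length are hypothetical helpers.

It assumes each dimension must halve cleanly three times, because the HPCG report in section 3 lists 3 coarse grid levels, so the sketch requires multiples of 8. All sizes used in this article (104, 128, 144, 160, 192) satisfy that.

```python
COARSE_LEVELS = 3            # assumption: 3 coarse grids, as reported in section 3
OFFICIAL_MIN_SECONDS = 1800  # "Official results execution time (sec) must be at least=1800"


def make_hpcg_dat(nx, ny, nz, run_seconds):
    """Build hpcg.dat text; nx/ny/nz are per-process (local) dimensions."""
    step = 2 ** COARSE_LEVELS
    for name, value in (("nx", nx), ("ny", ny), ("nz", nz)):
        if value % step:
            raise ValueError(f"{name}={value} must be a multiple of {step}")
    return (
        "HPCG benchmark input file\n"
        "Sandia National Laboratories; University of Tennessee, Knoxville\n"
        f"{nx} {ny} {nz}\n"
        f"{run_seconds}\n"
    )


def is_official_length(run_seconds):
    return run_seconds >= OFFICIAL_MIN_SECONDS


if __name__ == "__main__":
    print(make_hpcg_dat(128, 128, 128, 300), end="")
    print("long enough for an official result:", is_official_length(300))
```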

# mpirun --allow-run-as-root --mca pml ob1 -np 64 ./xhpcg

# tail HPCG-Benchmark_3.1_2025-07-08_10-21-09.txt

DDOT Timing Variations::Avg DDOT MPI_Allreduce time=2.58093

Final Summary=

Final Summary::HPCG result is VALID with a GFLOP/s rating of=16.1137

Final Summary::HPCG 2.4 rating for historical reasons is=16.1678

Final Summary::Reference version of ComputeDotProduct used=Performance results are most likely suboptimal

Final Summary::Reference version of ComputeSPMV used=Performance results are most likely suboptimal

Final Summary::Reference version of ComputeMG used=Performance results are most likely suboptimal

Final Summary::Reference version of ComputeWAXPBY used=Performance results are most likely suboptimal

Final Summary::Results are valid but execution time (sec) is=310.259

Final Summary::Official results execution time (sec) must be at least=1800
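When comparing many runs, the headline numbers can be pulled out of the HPCG-Benchmark_*.txt reports with a regular expression. This is a minimal sketch and parse_final_summary is a hypothetical helper. It only matches the Final Summary line formats visible in this article's output; other report variants may be worded differently and would need extra patterns.

```python
import glob
import re

NUM = r"([0-9.eE+-]+)"


def parse_final_summary(text):
    """Extract validity, GFLOP/s rating and run times from an HPCG report."""
    summary = {"valid": False}
    m = re.search(r"HPCG result is VALID with a GFLOP/s rating of=" + NUM, text)
    if m:
        summary["valid"] = True
        summary["gflops"] = float(m.group(1))
    m = re.search(r"execution time \(sec\) is=" + NUM, text)
    if m:
        summary["run_time"] = float(m.group(1))
    m = re.search(r"must be at least=" + NUM, text)
    if m:
        summary["official_min"] = float(m.group(1))
    return summary


if __name__ == "__main__":
    for path in sorted(glob.glob("HPCG-Benchmark_*.txt")):
        with open(path) as f:
            print(path, parse_final_summary(f.read()))
```

On the run above, it reports a valid 16.1137 GFLOP/s result whose 310.259 s run time is below the 1800 s official minimum.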

# cat hpcg20250708T100940.txt

WARNING: PERFORMING UNPRECONDITIONED ITERATIONS

Call [0] Number of Iterations [11] Scaled Residual [1.12102e-13]

WARNING: PERFORMING UNPRECONDITIONED ITERATIONS

Call [1] Number of Iterations [11] Scaled Residual [1.12102e-13]

Call [0] Number of Iterations [2] Scaled Residual [2.79999e-17]

Call [1] Number of Iterations [2] Scaled Residual [2.79999e-17]

Departure from symmetry (scaled) for SpMV abs(x'*A*y - y'*A*x) = 7.31869e-10

Departure from symmetry (scaled) for MG abs(x'*Minv*y - y'*Minv*x) = 5.92074e-11

SpMV call [0] Residual [0]

SpMV call [1] Residual [0]

Call [0] Scaled Residual [0.00454823]

Call [1] Scaled Residual [0.00454823]

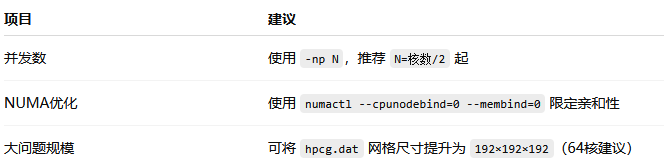

The HPCG result on this machine is 16.1137 GFLOP/s.

The key mpirun parameters are explained below:

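--allow-run-as-root: explicitly tells mpirun to allow the program to run as the root user.

By default, many MPI implementations (Open MPI in particular) refuse to launch parallel applications as root for security reasons. Doing so can be risky, especially on shared clusters. If you run mpirun as root (or your program needs root privileges) and the MPI library forbids it, the launch fails with a permission-related error. Adding this option bypasses that check.

Use it only in non-production or test environments where root is genuinely required. On production systems or shared multi-user clusters, run compute jobs under a regular user account instead.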

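--mca pml ob1: an Open MPI MCA (Modular Component Architecture) option that selects the implementation of the point-to-point messaging layer (PML). ob1 is one of Open MPI's commonly used PML components.

Open MPI has a highly modular architecture that lets users choose different components for different functions, such as point-to-point messaging, collective operations and process management. ob1 is Open MPI's long-standing general-purpose PML. It carries messages over BTL (Byte Transfer Layer) components such as shared memory and TCP; older releases also offered an openib BTL for InfiniBand.

Specifying ob1 explicitly forces a particular communication path. This is usually done to work around compatibility or performance problems with the default PML. If no PML is given, Open MPI chooses one automatically:

- With UCX support and InfiniBand/RoCE hardware, it generally prefers ucx. The Kunpeng run in section 4 selects this explicitly with -mca pml ucx.
- Otherwise it typically falls back to ob1.

Naming the PML explicitly keeps the behavior consistent across machines.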

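-np 64: the number of MPI processes (ranks) to launch.

An MPI program achieves parallelism by distributing work across multiple processes. Each process has a unique ID (rank) from 0 to np-1.

Here, 64 means xhpcg runs as 64 parallel processes. They can be spread across several compute nodes or all run on a single node, depending on your hostfile (--hostfile) and other mpirun resource-mapping options. Because the dimensions in hpcg.dat are per process, the global problem size grows with the number of ranks.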
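HPCG arranges the ranks as a 3D process grid (npx x npy x npz, as printed under "Processor Dimensions" in its report). The global grid is the local grid multiplied by that process grid.

The sketch below picks the most cube-like factorization of the rank count and derives the global dimensions. It is an illustration under that assumption, not HPCG's own decomposition routine, which may choose a different factorization or axis order. process_grid and global_dims are hypothetical names.

```python
def process_grid(nprocs):
    """Split nprocs into (a, b, c) with a <= b <= c and a*b*c == nprocs,
    as close to a cube as possible."""
    best_key, best = None, None
    for a in range(1, nprocs + 1):
        if nprocs % a:
            continue
        rest = nprocs // a
        for b in range(a, rest + 1):
            if rest % b:
                continue
            c = rest // b
            if c < b:
                continue
            key = (c - a, c)  # smallest spread first, then smallest long side
            if best_key is None or key < best_key:
                best_key, best = key, (a, b, c)
    return best


def global_dims(local, grid):
    """Global grid = per-process dimensions times process count along each axis."""
    return tuple(n * p for n, p in zip(local, grid))


if __name__ == "__main__":
    grid = process_grid(64)
    dims = global_dims((128, 128, 128), grid)
    print(grid, dims, dims[0] * dims[1] * dims[2])
```

With -np 64 and a 128 128 128 input file, this gives a 4 x 4 x 4 process grid and a 512 x 512 x 512 global problem.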

References


- https://mirrors.huaweicloud.com/kunpeng/archive/HPC/benchmark/

- https://developer.nvidia.com/nvidia-hpc-benchmarks-downloads?target_os=Linux&target_arch=x86_64

- https://github.com/davidrohr/hpl-gpu

- https://catalog.ngc.nvidia.com/orgs/nvidia/containers/hpc-benchmarks

- https://github.com/NVIDIA/nvidia-hpcg

- https://www.amd.com/en/developer/zen-software-studio/applications/pre-built-applications/zen-hpl.html

- https://www.netlib.org/benchmark/hpl/

2 Running via the Phoronix Test Suite

For installing the Phoronix Test Suite, see: http://www.rzrgm.cn/testing-/p/18303322

- Install hpcg

# phoronix-test-suite install hpcg

# cd /var/lib/phoronix-test-suite/test-profiles/pts/hpcg-1.3.0

- Modify the test profile

Contents of test-definition.xml:

<?xml version="1.0"?>

<!--Phoronix Test Suite v10.8.4-->

<PhoronixTestSuite>

<TestInformation>

<Title>High Performance Conjugate Gradient</Title>

<AppVersion>3.1</AppVersion>

<Description>HPCG is the High Performance Conjugate Gradient and is a new scientific benchmark from Sandia National Lans focused for super-computer testing with modern real-world workloads compared to HPCC.</Description>

<ResultScale>GFLOP/s</ResultScale>

<Proportion>HIB</Proportion>

<TimesToRun>1</TimesToRun>

</TestInformation>

<TestProfile>

<Version>1.3.0</Version>

<SupportedPlatforms>Linux</SupportedPlatforms>

<SoftwareType>Benchmark</SoftwareType>

<TestType>Processor</TestType>

<License>Free</License>

<Status>Verified</Status>

<ExternalDependencies>build-utilities, fortran-compiler, openmpi-development</ExternalDependencies>

<EnvironmentSize>2.4</EnvironmentSize>

<ProjectURL>http://www.hpcg-benchmark.org/</ProjectURL>

<RepositoryURL>https://github.com/hpcg-benchmark/hpcg</RepositoryURL>

<InternalTags>SMP, MPI</InternalTags>

<Maintainer>Michael Larabel</Maintainer>

</TestProfile>

<TestSettings>

<Option>

<DisplayName>X Y Z</DisplayName>

<Identifier>xyz</Identifier>

<Menu>

<Entry>

<Name>104 104 104</Name>

<Value>--nx=104 --ny=104 --nz=104</Value>

</Entry>

<Entry>

<Name>144 144 144</Name>

<Value>--nx=144 --ny=144 --nz=144</Value>

</Entry>

<Entry>

<Name>160 160 160</Name>

<Value>--nx=160 --ny=160 --nz=160</Value>

</Entry>

<Entry>

<Name>192 192 192</Name>

<Value>--nx=192 --ny=192 --nz=192</Value>

</Entry>

</Menu>

</Option>

<Option>

<DisplayName>RT</DisplayName>

<Identifier>time</Identifier>

<ArgumentPrefix>--rt=</ArgumentPrefix>

<Menu>

<Entry>

<Name>300</Name>

<Value>300</Value>

<Message>Shorter run-time</Message>

</Entry>

<Entry>

<Name>1800</Name>

<Value>1800</Value>

<Message>Official run-time</Message>

</Entry>

</Menu>

</Option>

</TestSettings>

</PhoronixTestSuite>
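The profile's menus map each choice to xhpcg arguments. The sketch below lists the argument string each menu entry would produce, assuming the Phoronix Test Suite prepends an option's ArgumentPrefix to the chosen Value and joins the options in order. That assumption is consistent with the Identifier/ArgumentPrefix/Value fields above but is not verified against the PTS source. option_arguments is a hypothetical helper built on Python's standard xml.etree.ElementTree.

```python
import os
import xml.etree.ElementTree as ET


def option_arguments(xml_text):
    """Map each option Identifier to the argument strings its menu entries produce."""
    root = ET.fromstring(xml_text)
    out = {}
    for opt in root.iter("Option"):
        ident = opt.findtext("Identifier")
        prefix = opt.findtext("ArgumentPrefix", default="")
        out[ident] = [prefix + e.findtext("Value", default="") for e in opt.iter("Entry")]
    return out


if __name__ == "__main__":
    path = "test-definition.xml"  # run from the pts/hpcg-1.3.0 profile directory
    if os.path.exists(path):
        with open(path, encoding="utf-8") as f:
            opts = option_arguments(f.read())
        # First menu entry of each option, e.g. the 104^3 / 300 s choice made below
        print(" ".join([opts["xyz"][0], opts["time"][0]]))
```

Under that assumption, choosing "104 104 104" and "300" corresponds to running xhpcg with --nx=104 --ny=104 --nz=104 --rt=300.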

- Run the test

# phoronix-test-suite benchmark hpcg

Evaluating External Test Dependencies .......................................................................................................................

Phoronix Test Suite v10.8.4

Installed: pts/hpcg-1.3.0

High Performance Conjugate Gradient 3.1:

pts/hpcg-1.3.0

Processor Test Configuration

1: 104 104 104

2: 144 144 144

3: 160 160 160

4: 192 192 192

5: Test All Options

** Multiple items can be selected, delimit by a comma. **

X Y Z: 1

1: 300 [Shorter run-time]

2: 1800 [Official run-time]

3: Test All Options

** Multiple items can be selected, delimit by a comma. **

RT: 1

System Information

PROCESSOR: ARMv8 @ 2.90GHz

Core Count: 128

Cache Size: 224 MB

Scaling Driver: cppc_cpufreq performance

GRAPHICS: Huawei Hi171x [iBMC Intelligent Management chip w/VGA support]

Screen: 1024x768

MOTHERBOARD: WUZHOU BC83AMDAA01-7270Z

BIOS Version: 11.62

Chipset: Huawei HiSilicon

Network: 6 x Huawei HNS GE/10GE/25GE/50GE + 2 x Mellanox MT2892

MEMORY: 16 x 32 GB 4800MT/s Samsung M321R4GA3BB6-CQKET

DISK: 2 x 480GB HWE62ST3480L003N + 3 x 1920GB HWE62ST31T9L005N

File-System: xfs

Mount Options: attr2 inode64 noquota relatime rw

Disk Scheduler: MQ-DEADLINE

Disk Details: Block Size: 4096

OPERATING SYSTEM: Kylin Linux Advanced Server V10

Kernel: 4.19.90-52.22.v2207.ky10.aarch64 (aarch64) 20230314

Display Server: X Server 1.20.8

Compiler: GCC 7.3.0 + CUDA 12.8

Security: itlb_multihit: Not affected

+ l1tf: Not affected

+ mds: Not affected

+ meltdown: Not affected

+ mmio_stale_data: Not affected

+ spec_store_bypass: Mitigation of SSB disabled via prctl

+ spectre_v1: Mitigation of __user pointer sanitization

+ spectre_v2: Not affected

+ srbds: Not affected

+ tsx_async_abort: Not affected

Would you like to save these test results (Y/n): y

Enter a name for the result file: hpcg_45_31

Enter a unique name to describe this test run / configuration:

If desired, enter a new description below to better describe this result set / system configuration under test.

Press ENTER to proceed without changes.

Current Description: ARMv8 testing with a WUZHOU BC83AMDAA01-7270Z (11.62 BIOS) and Huawei Hi171x [iBMC Intelligent Management chip w/VGA support] on Kylin Linux Advanced Server V10 via the Phoronix Test Suite.

New Description:

High Performance Conjugate Gradient 3.1:

pts/hpcg-1.3.0 [X Y Z: 104 104 104 - RT: 300]

Test 1 of 1

Estimated Trial Run Count: 1

Estimated Time To Completion: 38 Minutes [03:16 CDT]

Started Run 1 @ 02:39:00

X Y Z: 104 104 104 - RT: 300:

69.8633

Average: 69.8633 GFLOP/s

Do you want to view the text results of the testing (Y/n): Y

hpcg_45_31

ARMv8 testing with a WUZHOU BC83AMDAA01-7270Z (11.62 BIOS) and Huawei Hi171x [iBMC Intelligent Management chip w/VGA support] on Kylin Linux Advanced Server V10 via the Phoronix Test Suite.

ARMv8:

Processor: ARMv8 @ 2.90GHz (128 Cores), Motherboard: WUZHOU BC83AMDAA01-7270Z (11.62 BIOS), Chipset: Huawei HiSilicon, Memory: 16 x 32 GB 4800MT/s Samsung M321R4GA3BB6-CQKET, Disk: 2 x 480GB HWE62ST3480L003N + 3 x 1920GB HWE62ST31T9L005N, Graphics: Huawei Hi171x [iBMC Intelligent Management chip w/VGA support], Network: 6 x Huawei HNS GE/10GE/25GE/50GE + 2 x Mellanox MT2892

OS: Kylin Linux Advanced Server V10, Kernel: 4.19.90-52.22.v2207.ky10.aarch64 (aarch64) 20230314, Display Server: X Server 1.20.8, Compiler: GCC 7.3.0 + CUDA 12.8, File-System: xfs, Screen Resolution: 1024x768

High Performance Conjugate Gradient 3.1

X Y Z: 104 104 104 - RT: 300

GFLOP/s > Higher Is Better

ARMv8 . 69.86 |==================================================================================================================================================

Would you like to upload the results to OpenBenchmarking.org (y/n): y

Would you like to attach the system logs (lspci, dmesg, lsusb, etc) to the test result (y/n): y

Results Uploaded To: https://openbenchmarking.org/result/2507083-NE-HPCG4531360

The uploaded results can be viewed in a browser at the URL above.

3 NVIDIA HPC Benchmarks

# docker pull nvcr.io/nvidia/hpc-benchmarks:25.04

# vi HPCG.dat

HPCG benchmark input file

Sandia National Laboratories; University of Tennessee, Knoxville

128 128 128

300

# docker run --rm --gpus all --ipc=host --ulimit memlock=-1:-1 \
    -v $(pwd):/host_data \
    nvcr.io/nvidia/hpc-benchmarks:25.04 \
    mpirun -np 1 \
    /workspace/hpcg.sh \
    --dat /host_data/HPCG.dat \
    --cpu-affinity 0 \
    --gpu-affinity 0

=========================================================

================= NVIDIA HPC Benchmarks =================

=========================================================

NVIDIA Release 25.04

Copyright (c) 2023, NVIDIA CORPORATION & AFFILIATES. All rights reserved.

Various files include modifications (c) NVIDIA CORPORATION & AFFILIATES. All rights reserved.

This container image and its contents are governed by the NVIDIA Deep Learning Container License.

By pulling and using the container, you accept the terms and conditions of this license:

https://developer.nvidia.com/ngc/nvidia-deep-learning-container-license

WARNING: No InfiniBand devices detected.

Multi-node communication performance may be reduced.

Ensure /dev/infiniband is mounted to this container.

HPCG-NVIDIA 25.4.0 -- NVIDIA accelerated HPCG benchmark -- NVIDIA

Build v0.5.6

Start of application (GPU-Only) ...

Initial Residual = 2838.81

Iteration = 1 Scaled Residual = 0.185703

Iteration = 2 Scaled Residual = 0.101681

...

Iteration = 50 Scaled Residual = 3.94531e-07

GPU Rank Info:

| cuSPARSE version 12.5

| Reference CPU memory = 935.79 MB

| GPU Name: 'NVIDIA GeForce RTX 4090'

| GPU Memory Use: 2223 MB / 24082 MB

| Process Grid: 1x1x1

| Local Domain: 128x128x128

| Number of CPU Threads: 1

| Slice Size: 2048

WARNING: PERFORMING UNPRECONDITIONED ITERATIONS

Call [0] Number of Iterations [11] Scaled Residual [1.19242e-14]

WARNING: PERFORMING UNPRECONDITIONED ITERATIONS

Call [1] Number of Iterations [11] Scaled Residual [1.19242e-14]

Call [0] Number of Iterations [1] Scaled Residual [2.94233e-16]

Call [1] Number of Iterations [1] Scaled Residual [2.94233e-16]

Departure from symmetry (scaled) for SpMV abs(x'*A*y - y'*A*x) = 8.42084e-10

Departure from symmetry (scaled) for MG abs(x'*Minv*y - y'*Minv*x) = 4.21042e-10

SpMV call [0] Residual [0]

SpMV call [1] Residual [0]

Initial Residual = 2838.81

Iteration = 1 Scaled Residual = 0.220178

Iteration = 2 Scaled Residual = 0.118926

...

Iteration = 49 Scaled Residual = 4.98548e-07

Iteration = 50 Scaled Residual = 3.08635e-07

Call [0] Scaled Residual [3.08635e-07]

Call [1] Scaled Residual [3.08635e-07]

Call [2] Scaled Residual [3.08635e-07]

...

Call [1501] Scaled Residual [3.08635e-07]

Call [1502] Scaled Residual [3.08635e-07]

HPCG-Benchmark

version=3.1

Release date=March 28, 2019

Machine Summary=

Machine Summary::Distributed Processes=1

Machine Summary::Threads per processes=1

Global Problem Dimensions=

Global Problem Dimensions::Global nx=128

Global Problem Dimensions::Global ny=128

Global Problem Dimensions::Global nz=128

Processor Dimensions=

Processor Dimensions::npx=1

Processor Dimensions::npy=1

Processor Dimensions::npz=1

Local Domain Dimensions=

Local Domain Dimensions::nx=128

Local Domain Dimensions::ny=128

########## Problem Summary ##########=

Setup Information=

Setup Information::Setup Time=0.00910214

Linear System Information=

Linear System Information::Number of Equations=2097152

Linear System Information::Number of Nonzero Terms=55742968

Multigrid Information=

Multigrid Information::Number of coarse grid levels=3

Multigrid Information::Coarse Grids=

Multigrid Information::Coarse Grids::Grid Level=1

Multigrid Information::Coarse Grids::Number of Equations=262144

Multigrid Information::Coarse Grids::Number of Nonzero Terms=6859000

Multigrid Information::Coarse Grids::Number of Presmoother Steps=1

Multigrid Information::Coarse Grids::Number of Postsmoother Steps=1

Multigrid Information::Coarse Grids::Grid Level=2

Multigrid Information::Coarse Grids::Number of Equations=32768

Multigrid Information::Coarse Grids::Number of Nonzero Terms=830584

Multigrid Information::Coarse Grids::Number of Presmoother Steps=1

Multigrid Information::Coarse Grids::Number of Postsmoother Steps=1

Multigrid Information::Coarse Grids::Grid Level=3

Multigrid Information::Coarse Grids::Number of Equations=4096

Multigrid Information::Coarse Grids::Number of Nonzero Terms=97336

Multigrid Information::Coarse Grids::Number of Presmoother Steps=1

Multigrid Information::Coarse Grids::Number of Postsmoother Steps=1

########## Memory Use Summary ##########=

Memory Use Information=

Memory Use Information::Total memory used for data (Gbytes)=1.49883

Memory Use Information::Memory used for OptimizeProblem data (Gbytes)=0

Memory Use Information::Bytes per equation (Total memory / Number of Equations)=714.697

Memory Use Information::Memory used for linear system and CG (Gbytes)=1.31912

Memory Use Information::Coarse Grids=

Memory Use Information::Coarse Grids::Grid Level=1

Memory Use Information::Coarse Grids::Memory used=0.15755

Memory Use Information::Coarse Grids::Grid Level=2

Memory Use Information::Coarse Grids::Memory used=0.0196946

Memory Use Information::Coarse Grids::Grid Level=3

Memory Use Information::Coarse Grids::Memory used=0.00246271

########## V&V Testing Summary ##########=

Spectral Convergence Tests=

Spectral Convergence Tests::Result=PASSED

Spectral Convergence Tests::Unpreconditioned=

Spectral Convergence Tests::Unpreconditioned::Maximum iteration count=11

Spectral Convergence Tests::Unpreconditioned::Expected iteration count=12

Spectral Convergence Tests::Preconditioned=

Spectral Convergence Tests::Preconditioned::Maximum iteration count=1

Spectral Convergence Tests::Preconditioned::Expected iteration count=2

Departure from Symmetry |x'Ay-y'Ax|/(2*||x||*||A||*||y||)/epsilon=

Departure from Symmetry |x'Ay-y'Ax|/(2*||x||*||A||*||y||)/epsilon::Result=PASSED

Departure from Symmetry |x'Ay-y'Ax|/(2*||x||*||A||*||y||)/epsilon::Departure for SpMV=8.42084e-10

Departure from Symmetry |x'Ay-y'Ax|/(2*||x||*||A||*||y||)/epsilon::Departure for MG=4.21042e-10

########## Iterations Summary ##########=

Iteration Count Information=

Iteration Count Information::Result=PASSED

Iteration Count Information::Reference CG iterations per set=50

Iteration Count Information::Optimized CG iterations per set=50

Iteration Count Information::Total number of reference iterations=75150

Iteration Count Information::Total number of optimized iterations=75150

########## Reproducibility Summary ##########=

Reproducibility Information=

Reproducibility Information::Result=PASSED

Reproducibility Information::Scaled residual mean=3.08635e-07

Reproducibility Information::Scaled residual variance=0

########## Performance Summary (times in sec) ##########=

Benchmark Time Summary=

Benchmark Time Summary::Optimization phase=0.017375

Benchmark Time Summary::DDOT=6.03317

Benchmark Time Summary::WAXPBY=6.80771

Benchmark Time Summary::SpMV=58.5598

Benchmark Time Summary::MG=227.166

Benchmark Time Summary::Total=298.585

Floating Point Operations Summary=

Floating Point Operations Summary::Raw DDOT=9.5191e+11

Floating Point Operations Summary::Raw WAXPBY=9.5191e+11

Floating Point Operations Summary::Raw SpMV=8.54573e+12

Floating Point Operations Summary::Raw MG=4.76988e+13

Floating Point Operations Summary::Total=5.81484e+13

Floating Point Operations Summary::Total with convergence overhead=5.81484e+13

GB/s Summary=

GB/s Summary::Raw Read B/W=1200

GB/s Summary::Raw Write B/W=277.327

GB/s Summary::Raw Total B/W=1477.32

GB/s Summary::Total with convergence and optimization phase overhead=1457.89

GFLOP/s Summary=

GFLOP/s Summary::Raw DDOT=157.779

GFLOP/s Summary::Raw WAXPBY=139.828

GFLOP/s Summary::Raw SpMV=145.932

GFLOP/s Summary::Raw MG=209.974

GFLOP/s Summary::Raw Total=194.747

GFLOP/s Summary::Total with convergence overhead=194.747

GFLOP/s Summary::Total with convergence and optimization phase overhead=192.185

User Optimization Overheads=

User Optimization Overheads::Optimization phase time (sec)=0.017375

User Optimization Overheads::Optimization phase time vs reference SpMV+MG time=0.0396317

DDOT Timing Variations=

DDOT Timing Variations::Min DDOT MPI_Allreduce time=0.220609

DDOT Timing Variations::Max DDOT MPI_Allreduce time=0.220609

DDOT Timing Variations::Avg DDOT MPI_Allreduce time=0.220609

Final Summary=

Final Summary::HPCG result is VALID with a GFLOP/s rating of=192.185

Final Summary::HPCG 2.4 rating for historical reasons is=193.058

Final Summary::Results are valid but execution time (sec) is=298.585

Final Summary::Official results execution time (sec) must be at least=1800

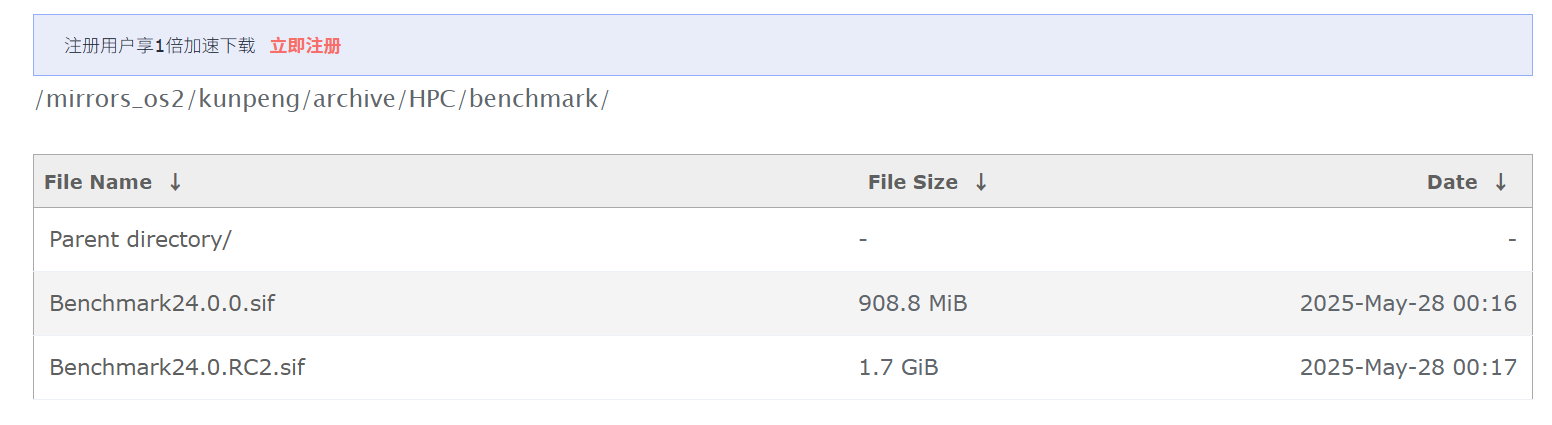
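Several numbers in this report can be checked by hand.

- Problem size: a 128^3 local grid has 128^3 equations. A 27-point stencil couples each point to its neighbours within distance 1 on every axis. Along one axis of n points that gives 3n-2 (point, neighbour) pairs, so the matrix has (3n-2)^3 nonzeros. The three coarse levels halve n each time.
- GFLOP/s rating: the raw rate is total flops divided by total time.
- Overhead-adjusted rating: here 75150 / 50 = 1503 CG sets were run. The "with convergence and optimization phase overhead" figure is consistent with adding one tenth of the optimization and setup times per CG set.

That last formula is inferred from this log's numbers, not taken from the HPCG source. The helper names are hypothetical.

```python
def stencil_nonzeros(nx, ny, nz):
    """Nonzeros of a 27-point stencil matrix on an nx*ny*nz grid:
    (3n - 2) neighbour pairs per axis, multiplied across the three axes."""
    return (3 * nx - 2) * (3 * ny - 2) * (3 * nz - 2)


def grid_levels(n, coarse_levels=3):
    """Edge length of the fine grid followed by each halved coarse grid."""
    return [n // 2 ** k for k in range(coarse_levels + 1)]


def gflops(total_flops, seconds):
    return total_flops / seconds / 1e9


if __name__ == "__main__":
    for n in grid_levels(128):
        print(n, n ** 3, stencil_nonzeros(n, n, n))

    flops, t_total = 5.81484e13, 298.585      # from the report above
    t_opt, t_setup = 0.017375, 0.00910214     # optimization phase / setup time
    cg_sets = 75150 // 50                     # total iterations / iterations per set
    # Inferred from this log, not from HPCG's source: 1/10 of (opt + setup) per CG set
    t_with_overhead = t_total + cg_sets * (t_opt + t_setup) / 10
    print(round(gflops(flops, t_total), 3), round(gflops(flops, t_with_overhead), 3))
```

The printed equation and nonzero counts match the Linear System and Multigrid Information lines. The two rates agree with the reported 194.747 and 192.185 GFLOP/s to within rounding.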

4 Huawei Kunpeng 920 Benchmark Suite

https://mirrors.huaweicloud.com/kunpeng/archive/HPC/benchmark/

- Install Singularity:

# dnf install golang -y

# export VERSION=3.8.7    # adjust this as necessary

# wget https://github.com/hpcng/singularity/releases/download/v${VERSION}/singularity-${VERSION}.tar.gz

# tar -xzf singularity-${VERSION}.tar.gz && cd singularity

# ./mconfig && make -C builddir && sudo make -C builddir install

- Run:

sudo singularity exec /home/qatest/benchmark/Benchmark-24.0.RC2-OpenEuler22.03-aarch64.sif mpirun --allow-run-as-root -mca pml ucx -np 128 xhpcg --nx 128 --ny 128 --nz 128 --nt 1800 | tee hpcg.log

5 MetaX (沐曦)

docker run -it -e HPCC_PERF_DIR=/tmp -u 0 -e HPCC_SMALL_PAGESIZE_ENABLE=1 -e FORCE_ACTIVE_WAIT=2 --device=/dev/dri --device=/dev/htcd --group-add video image.marsgpu.com/hpc-release/hpcg-x201:0.9.0-2.32.0.3-ubuntu20.04-amd64 sh -c '/opt/HPCG/autotest.sh'

浙公網安備 33010602011771號
