Kubernetes Storage

Storage

- A Pod's own storage is ephemeral: when the Pod is deleted, the data written inside it is gone.

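I. Local storage

1. emptyDir

- A directory with a generated path, created on the node where the Pod runs; in other words, a temporary directory.

- When the Pod is deleted, the temporary directory is deleted with it (it survives container restarts within the same Pod).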

[root@k-master volume]# cat pod1.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: pod1
  name: pod1
spec:
  containers:
  - image: nginx
    imagePullPolicy: IfNotPresent
    name: pod1
    resources: {}
    volumeMounts:
    - name: v1            # mount the volume named v1
      mountPath: /data1   # mounted at /data1 in the container; created automatically if missing
  volumes:
  - name: v1              # the temporary volume is named v1
    emptyDir: {}          # create a temporary directory
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}

# After creating the Pod, check which node it was scheduled onto

[root@k-master volume]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod1 1/1 Running 0 4m50s 10.244.82.181 k-node1 <none> <none>

# Then, on node1, inspect the details of pod1's container

[root@k-node1 v1]# crictl inspect 1a04aed59dd91 |grep -i mount -C3

"io.kubernetes.container.terminationMessagePolicy": "File",

"io.kubernetes.pod.terminationGracePeriod": "30"

},

"mounts": [

{

"containerPath": "/data1",

"hostPath": "/var/lib/kubelet/pods/99036af5-21a9-4af9-ac1c-f04e9c23dbed/volumes/kubernetes.io~empty-dir/v1", # temporary directory created on node1

--

"value": "443"

}

],

"mounts": [

{

"container_path": "/data1",

"host_path": "/var/lib/kubelet/pods/99036af5-21a9-4af9-ac1c-f04e9c23dbed/volumes/kubernetes.io~empty-dir/v1"

--

"root": {

"path": "rootfs"

},

"mounts": [

{

"destination": "/proc",

"type": "proc",

--

"path": "/proc/13150/ns/uts"

},

{

"type": "mount"

},

{

"type": "network",

# Change into this temporary directory

[root@k-node1 v1]# pwd

/var/lib/kubelet/pods/99036af5-21a9-4af9-ac1c-f04e9c23dbed/volumes/kubernetes.io~empty-dir/v1

[root@k-node1 v1]# ls

test

# After the Pod is deleted, the temporary directory is removed as well
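As a hedged variation on the manifest above: emptyDir also accepts a medium and a sizeLimit. The sketch below backs the volume with memory (tmpfs) and caps its size. The Pod name, volume name and the 64Mi limit are illustrative assumptions, not values from the original lab.

```yaml
# Sketch only: names and sizes are illustrative assumptions
apiVersion: v1
kind: Pod
metadata:
  name: pod-emptydir-mem     # hypothetical name
spec:
  containers:
  - name: app
    image: nginx
    imagePullPolicy: IfNotPresent
    volumeMounts:
    - name: scratch
      mountPath: /data1
  volumes:
  - name: scratch
    emptyDir:
      medium: Memory         # back the volume with tmpfs instead of the node's disk
      sizeLimit: 64Mi        # upper bound on the volume's size
```

A memory-backed emptyDir is still temporary: it goes away with the Pod, just like the disk-backed one.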

2. hostPath

- Drawback: the mounted directory lives on one node. If the Pod on node1 is deleted and its replacement is scheduled onto node2, the data is not there, because the directory is still on node1.

- In other words, the data is not synchronized across nodes.

[root@k-master volume]# cat pod1.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: pod1
  name: pod1
spec:
  containers:
  - image: nginx
    imagePullPolicy: IfNotPresent
    name: pod1
    resources: {}
    volumeMounts:
    - name: v1
      mountPath: /data1
  volumes:
  - name: v1
    hostPath:
      path: /d1   # created automatically if it does not exist on the host
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}

[root@k-master volume]# kubectl get pod -o wide

NAME READY STATUS RESTARTS AGE IP NODE NOMINATED NODE READINESS GATES

pod1 1/1 Running 0 4m2s 10.244.82.182 k-node1 <none> <none>

# The directory is created on the node's host filesystem and is still there after the Pod is deleted

[root@k-node1 /]# ls -d d1

d1
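One way to live with the hostPath drawback is to pin the Pod to the node that holds the directory and to state the directory type explicitly. The following is a minimal sketch under two assumptions: that the node's kubernetes.io/hostname label equals k-node1, and that a hypothetical Pod name is acceptable.

```yaml
# Sketch only: assumes the node label kubernetes.io/hostname=k-node1
apiVersion: v1
kind: Pod
metadata:
  name: pod-hostpath                  # hypothetical name
spec:
  nodeSelector:
    kubernetes.io/hostname: k-node1   # keep the Pod on the node that holds /d1
  containers:
  - name: app
    image: nginx
    imagePullPolicy: IfNotPresent
    volumeMounts:
    - name: v1
      mountPath: /data1
  volumes:
  - name: v1
    hostPath:
      path: /d1
      type: DirectoryOrCreate         # create /d1 on the node if it does not exist
```

Pinning trades away scheduling flexibility, which is why the next section moves to network storage.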

II. Network storage

1. NFS

- With NFS, the Pod can read and write the same data no matter which node it runs on.

1. Set up an NFS server

- I set it up on the master machine.

[root@k-master nfsdata]# systemctl enable nfs-server --now

Created symlink /etc/systemd/system/multi-user.target.wants/nfs-server.service → /usr/lib/systemd/system/nfs-server.service.

[root@k-master ~]# mkdir /nfsdata

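mkdir /test

chmod o+w /test # write permission is required, otherwise writes fail (if Pods still cannot write, check the permissions of the exported directory /nfsdata as well)

# Write the exports file

[root@k-master nfsdata]# cat /etc/exports

/nfsdata *(rw)

# Restart the service (for example: systemctl restart nfs-server)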

2. Mount the NFS export from a client

# Test that the NFS server works

[root@k-master ~]# mount 192.168.50.100:/nfsdata /test

[root@k-master ~]# df -hT /test

Filesystem Type Size Used Avail Use% Mounted on

192.168.50.100:/nfsdata nfs4 50G 5.7G 44G 12% /test

3. Write a Pod

[root@k-master volume]# cat pod1.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: pod1
  name: pod1
spec:
  containers:
  - image: nginx
    imagePullPolicy: IfNotPresent
    name: pod1
    resources: {}
    volumeMounts:
    - name: v1
      mountPath: /data1
  volumes:
  - name: v1
    nfs:
      path: /nfsdata           # directory exported by the NFS server; mounted into the container
      server: 192.168.50.100   # NFS server address
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}

4. Check the NFS directory

[root@k-master ~]# ls /nfsdata/

1.txt 2.txt 4.txt
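To see the point of NFS (the same data from any node), the single Pod can be swapped for a Deployment with two replicas that mount the same export. This is a sketch, not part of the original lab: the Deployment name and labels are hypothetical, while the server address and path are the ones used above.

```yaml
# Sketch only: Deployment name and labels are illustrative assumptions
apiVersion: apps/v1
kind: Deployment
metadata:
  name: web-nfs                    # hypothetical name
spec:
  replicas: 2
  selector:
    matchLabels:
      app: web-nfs
  template:
    metadata:
      labels:
        app: web-nfs
    spec:
      containers:
      - name: nginx
        image: nginx
        imagePullPolicy: IfNotPresent
        volumeMounts:
        - name: v1
          mountPath: /data1
      volumes:
      - name: v1
        nfs:
          server: 192.168.50.100   # NFS server from the text
          path: /nfsdata           # exported directory from the text
```

A file written under /data1 by one replica should then be visible to the other, whichever nodes they land on.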

2. iSCSI storage

- Create a 20G partition to export.

- The firewall must be turned off (or at least allow TCP port 3260, the iSCSI portal port).

[root@k-master ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sr0 11:0 1 12.8G 0 rom

nvme0n1 259:0 0 50G 0 disk

├─nvme0n1p1 259:1 0 500M 0 part /boot

└─nvme0n1p2 259:2 0 49.5G 0 part

└─cs_docker-root 253:0 0 49.5G 0 lvm /

nvme0n2 259:3 0 20G 0 disk

└─nvme0n2p1 259:4 0 20G 0 part

2. Install the target packages

[root@k-master ~]# yum -y install target*

[root@k-master ~]# systemctl enable target --now

Created symlink /etc/systemd/system/multi-user.target.wants/target.service → /usr/lib/systemd/system/target.service.

3. Configure iSCSI

# The newly created partition does not need to be formatted

[root@k-master ~]# targetcli

Warning: Could not load preferences file /root/.targetcli/prefs.bin.

targetcli shell version 2.1.53

Copyright 2011-2013 by Datera, Inc and others.

For help on commands, type 'help'.

/> ls /

o- / ......................................................... [...]

o- backstores .............................................. [...]

| o- block .................................. [Storage Objects: 0]

| o- fileio ................................. [Storage Objects: 0]

| o- pscsi .................................. [Storage Objects: 0]

| o- ramdisk ................................ [Storage Objects: 0]

o- iscsi ............................................ [Targets: 0]

o- loopback ......................................... [Targets: 0]

# Create a block backstore named block1

/> /backstores/block create block1 /dev/nvme0n2p1

Created block storage object block1 using /dev/nvme0n2p1.

/> ls /

o- / ......................................................... [...]

o- backstores .............................................. [...]

| o- block .................................. [Storage Objects: 1]

| | o- block1 .. [/dev/nvme0n2p1 (20.0GiB) write-thru deactivated]

| | o- alua ................................... [ALUA Groups: 1]

| | o- default_tg_pt_gp ....... [ALUA state: Active/optimized]

| o- fileio ................................. [Storage Objects: 0]

| o- pscsi .................................. [Storage Objects: 0]

| o- ramdisk ................................ [Storage Objects: 0]

o- iscsi ............................................ [Targets: 0]

o- loopback ......................................... [Targets: 0]

/>

# Create an iSCSI target; the IQN must follow a strict format

/> /iscsi create iqn.2023-03.com.memeda:memeda

Created target iqn.2023-03.com.memeda:memeda.

Created TPG 1.

Global pref auto_add_default_portal=true

Created default portal listening on all IPs (0.0.0.0), port 3260.

/>

/> cd iscsi/iqn.2023-03.com.memeda:memeda/tpg1/

/iscsi/iqn.20...a:memeda/tpg1> ls

o- tpg1 ..................................... [no-gen-acls, no-auth]

o- acls ................................................ [ACLs: 0]

o- luns ................................................ [LUNs: 0]

o- portals .......................................... [Portals: 1]

o- 0.0.0.0:3260 ........................................... [OK]

/iscsi/iqn.20...a:memeda/tpg1>

/iscsi/iqn.20...a:memeda/tpg1> luns/ create /backstores/block/block1

Created LUN 0.

/iscsi/iqn.20...a:memeda/tpg1> ls

o- tpg1 ..................................... [no-gen-acls, no-auth]

o- acls ................................................ [ACLs: 0]

o- luns ................................................ [LUNs: 1]

| o- lun0 ..... [block/block1 (/dev/nvme0n2p1) (default_tg_pt_gp)]

o- portals .......................................... [Portals: 1]

o- 0.0.0.0:3260 ........................................... [OK]

/iscsi/iqn.20...a:memeda/tpg1>

/iscsi/iqn.20...a:memeda/tpg1> acls/ create iqn.2023-03.com.memeda:acl

Created Node ACL for iqn.2023-03.com.memeda:acl

Created mapped LUN 0.

/iscsi/iqn.20...a:memeda/tpg1> ls

o- tpg1 ..................................... [no-gen-acls, no-auth]

o- acls ................................................ [ACLs: 1]

| o- iqn.2023-03.com.memeda:acl ................. [Mapped LUNs: 1]

| o- mapped_lun0 ...................... [lun0 block/block1 (rw)]

o- luns ................................................ [LUNs: 1]

| o- lun0 ..... [block/block1 (/dev/nvme0n2p1) (default_tg_pt_gp)]

o- portals .......................................... [Portals: 1]

o- 0.0.0.0:3260 ........................................... [OK]

/iscsi/iqn.20...a:memeda/tpg1>

/iscsi/iqn.20...a:memeda/tpg1> exit

Global pref auto_save_on_exit=true

Configuration saved to /etc/target/saveconfig.json

4. Install the iSCSI initiator on the client nodes

[root@k-node1 ~]# yum -y install iscsi*

[root@k-node1 ~]# cat /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.1994-05.com.redhat:56a1ef745c4e

# Change the initiator name so that it matches the ACL created on the target

[root@k-node2 ~]# cat /etc/iscsi/initiatorname.iscsi

InitiatorName=iqn.2023-03.com.memeda:acl

# Restart the iscsid service (for example: systemctl restart iscsid)

5. Write the Pod manifest

[root@k-master volume]# cat pod1.yaml

apiVersion: v1
kind: Pod
metadata:
  creationTimestamp: null
  labels:
    run: pod1
  name: pod1
spec:
  containers:
  - image: nginx
    imagePullPolicy: IfNotPresent
    name: pod1
    resources: {}
    volumeMounts:
    - name: v1
      mountPath: /data
  volumes:
  - name: v1
    iscsi:
      lun: 0
      targetPortal: 192.168.50.100:3260
      iqn: iqn.2023-03.com.memeda:memeda
      readOnly: false
  dnsPolicy: ClusterFirst
  restartPolicy: Always
status: {}

# The LUN is attached to node1 as sda and then mounted into the container

[root@k-node1 ~]# lsblk

NAME MAJ:MIN RM SIZE RO TYPE MOUNTPOINT

sda 8:0 0 20G 0 disk /var/lib/kubelet/pods/0a64972c-

sr0 11:0 1 12.8G 0 rom

nvme0n1 259:0 0 50G 0 disk

├─nvme0n1p1 259:1 0 500M 0 part /boot

└─nvme0n1p2 259:2 0 49.5G 0 part

└─cs_docker-root

253:0 0 49.5G 0 lvm /

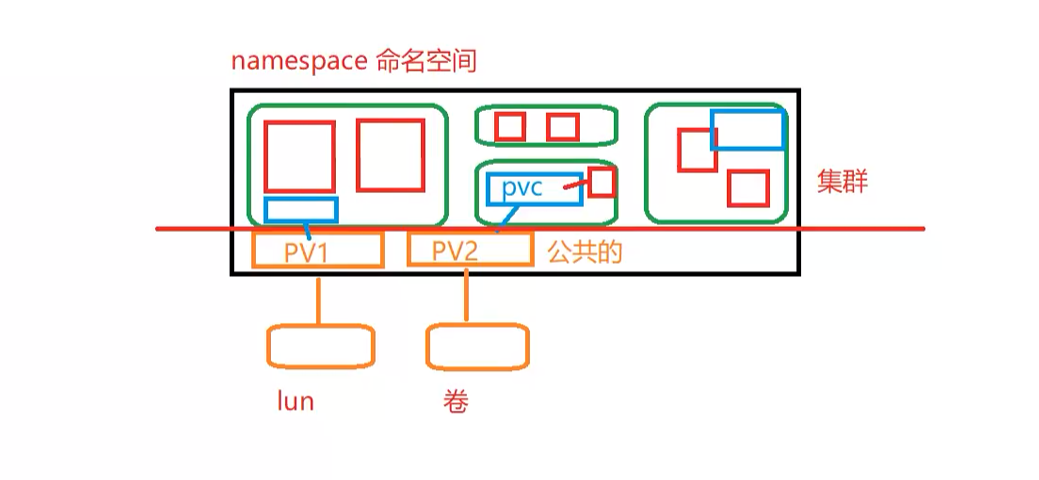
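The partition was exported without a filesystem, and the Pod still got a mounted directory. The iscsi volume source has an fsType field that names the filesystem to mount. The sketch below sets it explicitly; ext4 and the Pod name are assumptions, and the portal, IQN and LUN are the values configured above.

```yaml
# Sketch only: fsType value and Pod name are illustrative assumptions
apiVersion: v1
kind: Pod
metadata:
  name: pod-iscsi             # hypothetical name
spec:
  containers:
  - name: app
    image: nginx
    imagePullPolicy: IfNotPresent
    volumeMounts:
    - name: v1
      mountPath: /data
  volumes:
  - name: v1
    iscsi:
      targetPortal: 192.168.50.100:3260
      iqn: iqn.2023-03.com.memeda:memeda
      lun: 0
      fsType: ext4            # assumption: filesystem expected on the LUN
      readOnly: false
```

Because a LUN is a block device, it is normally attached read-write to one node at a time, which matches the single sda device seen on node1.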

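III. Persistent storage

- PV (PersistentVolume) and PVC (PersistentVolumeClaim)

- Underneath, they still use conventional storage.

- A PV is a piece of storage; a PVC is a request to use that piece.

- A PVC binds to a PV.

1. PV and PVC

- A PV is backed by real storage such as NFS, iSCSI or something else, which is wrapped up as a PV.

- Once created, a PV is cluster-scoped: it is not tied to a namespace and is visible from all of them.

- A PVC is created in a namespace and binds to a PV.

- A Pod in the same namespace references the PVC to use the storage.

2. PV and PVC lab (static provisioning)

- NFS serves as the underlying storage.

- First set up an NFS server.

1. Create the PV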

[root@k-master volume]# cat pv1.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv01
spec:
  capacity:                  # size of this PV
    storage: 5Gi
  accessModes:               # access modes
  - ReadWriteOnce
  nfs:
    server: 192.168.50.100   # this PV is backed by the NFS export
    path: /nfsdata

[root@k-master volume]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv01 5Gi RWO Retain Available 2m19s

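# Retain is the reclaim policy

# Available means the PV is free to be claimed

# RWO is the access mode (ReadWriteOnce)

# An empty CLAIM column means no PVC is bound yet

1. PV reclaim policies

- Controlled by the persistentVolumeReclaimPolicy field.

- Retain policy

  - After the Pod and the PVC are deleted, both the data and the PV are kept.

  - For statically created PVs, the default policy is Retain.

# Delete the Pod first, then the PVC: the PV moves to the Released state

# A new PVC cannot bind to it in that state, because a PV must be Available to be bound

# To reuse the PV, delete it and create a new PV that points at the same NFS data

- Delete policy

  - When the PVC is deleted (after its Pod), the PV is deleted as well.

  - The data on the backing storage is deleted too.

  - The Delete policy needs a provisioner or volume plugin that can remove the backing storage, so in practice it goes with dynamic provisioning rather than with a manually created NFS PV.

- The Recycle policy is deprecated.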
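The policy does not have to be left to the default. A minimal sketch of a static PV that sets persistentVolumeReclaimPolicy explicitly follows; the PV name is hypothetical and the NFS values are the ones from this lab.

```yaml
# Sketch only: PV name is an illustrative assumption
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-retain                         # hypothetical name
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain   # keep the PV and its data after the PVC is deleted
  nfs:
    server: 192.168.50.100
    path: /nfsdata
```

Spelling the policy out makes the intent visible in the manifest instead of depending on how the PV was created.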

2. PV phases

| Phase | Meaning | Typical trigger |
|---|---|---|
| Available | Not bound to any PVC; free to be claimed | Just created, or a Released PV whose claimRef was cleared by hand |
| Bound | Bound to a PVC | The most common state in kubectl get pv |
| Released | The bound PVC was deleted, but the PV has not been reclaimed | With ReclaimPolicy=Retain, the PV enters this state once its PVC is deleted |
| Failed | Automatic reclamation (Recycle/Delete) failed | For example, the backing storage returned an error when deleting the volume |

3. PV access modes

- ReadWriteOnce (RWO): read-write by a single node

- ReadOnlyMany (ROX): read-only by many nodes

- ReadWriteMany (RWX): read-write by many nodes

- ReadWriteOncePod (RWOP): read-write by a single Pod

| Access mode | Abbreviation | Meaning | Typical use |
|---|---|---|---|
| ReadWriteOnce | RWO | Read-write, single node | Most block storage (EBS, Ceph RBD, iSCSI); mounted read-write by one node. |
| ReadOnlyMany | ROX | Read-only, many nodes | Shared file storage (NFS, CephFS, GlusterFS); many nodes can mount it for reading at the same time. |
| ReadWriteMany | RWX | Read-write, many nodes | Distributed file systems (NFS, CephFS, Portworx); many nodes mount it read-write at the same time. |
| ReadWriteOncePod | RWOP | Read-write, single Pod | Introduced in 1.22 for CSI volumes; only one Pod in the whole cluster may mount it read-write, which suits StatefulSet-style workloads that need a single writer. |
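Since NFS can be mounted from many nodes at once, a PV on top of it can advertise ReadWriteMany. The pair below is a sketch under assumptions: both object names are hypothetical, and the empty storageClassName on the claim is an optional choice to keep it on statically created PVs.

```yaml
# Sketch only: object names are illustrative assumptions
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv-rwx               # hypothetical name
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteMany            # many nodes may mount read-write
  nfs:
    server: 192.168.50.100
    path: /nfsdata
---
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-rwx              # hypothetical name
spec:
  accessModes:
  - ReadWriteMany            # must be offered by the PV for the two to match
  storageClassName: ""       # only consider PVs that have no storage class
  resources:
    requests:
      storage: 5Gi
```

The access mode is a matching criterion between claim and volume; it describes what the backend can do and does not by itself enforce file permissions.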

2. Create the PVC

- A PV can be bound to only one PVC.

[root@k-master volume]# cat pvc1.yaml

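apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-1
spec:
  accessModes:
  - ReadWriteOnce    # access mode
  resources:
    requests:        # requested size
      storage: 5Gi

# A PVC is matched to a PV by access mode and size

# If several identical PVs satisfy the claim, any one of them may be chosen

# A PV can be bound by only one PVC

# The PVC's requested capacity must be less than or equal to the PV's capacity

# If several PVCs compete for the same PV, whichever is created first binds first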

[root@k-master volume]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc-1 Bound pv01 5Gi RWO 52s

[root@k-master volume]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv01 5Gi RWO Retain Bound default/pvc-1 7m14s

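1. PVC status

| Status | Meaning | Typical trigger |
|---|---|---|
| Pending | No suitable PV found or bound yet | No PV in the cluster matches the capacity, access mode and StorageClass; or dynamic provisioning is not ready |
| Bound | Bound to a PV | The normal state while in use |
| Lost | The bound PV disappeared unexpectedly (object deleted or backend unavailable) | The PV was deleted by hand, or the underlying storage failed |
| Terminating | The PVC is being deleted (metadata.deletionTimestamp is set) | After kubectl delete pvc, while waiting for reclamation or finalizers to complete |

3. Binding a PV and a PVC

- Binding depends on three things: access mode, size, and storageClassName (the storage class name, used as a label for matching).

- storageClassName takes precedence: even if access mode and size match, a PVC without the PV's storageClassName cannot bind to it; only a PVC that carries the same storageClassName can.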

[root@k-master volume]# cat pv2.yaml

apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv02
spec:
  storageClassName: abc   # storage class name is abc
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteOnce
  nfs:
    server: 192.168.50.100
    path: /nfsdata

[root@k-master volume]# cat pvc2.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-2
spec:
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi

[root@k-master volume]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv02 5Gi RWO Retain Available abc 49s

[root@k-master volume]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc-2 Pending 25s

# Only a PVC that sets the same storageClassName (abc) can bind to this PV
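For comparison, a claim that would match pv02 looks like the sketch below. It is the same pvc-2 with one added field; treat it as an illustration of the matching rule, not as output from the original lab.

```yaml
# Sketch only: pvc-2 with the storage class name that pv02 carries
apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc-2
spec:
  storageClassName: abc      # same value as on pv02
  accessModes:
  - ReadWriteOnce
  resources:
    requests:
      storage: 5Gi
```

With the class name, access mode and size all lining up, this claim is a candidate for binding to pv02 instead of staying Pending.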

4. Use the PVC from a Pod

[root@k-master volume]# cat pod2.yaml

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: pod2
  name: pod2
spec:
  containers:
  - image: nginx
    imagePullPolicy: IfNotPresent
    name: pod2
    resources: {}
    volumeMounts:
    - name: mypv
      mountPath: /var/www/html   # mounted at /var/www/html in the container
  restartPolicy: Always
  volumes:
  - name: mypv                   # volume name
    persistentVolumeClaim:       # the volume is backed by a PVC
      claimName: pvc-1           # name of the PVC
status: {}

[root@k-master volume]# kubectl get pod

NAME READY STATUS RESTARTS AGE

pod2 1/1 Running 0 2m22s

[root@k-master ~]# kubectl exec -ti pod2 -- /bin/bash

# Check the mount point

root@pod2:/var/www/html# df -hT /var/www/html/

Filesystem Type Size Used Avail Use% Mounted on

192.168.50.100:/nfsdata nfs4 50G 5.7G 44G 12% /var/www/html

# Write some data

root@pod2:/var/www/html# echo 1 > 1.txt

# The data also shows up in the NFS export that backs the PV

[root@k-master volume]# ls /nfsdata/

1.txt

# The Pod was scheduled onto node1, where the mount is visible too

# The Pod's volume directory is created on the node as well

[root@k-node1 pv01]# df -hT | grep -i nfs

192.168.50.100:/nfsdata nfs4 50G 5.7G 44G 12% /var/lib/kubelet/pods/2ce30dc7-4076-482f-9d78-d5af92d03967/volumes/kubernetes.io~nfs/pv01

[root@k-node1 pv01]# cd /var/lib/kubelet/pods/2ce30dc7-4076-482f-9d78-d5af92d03967/volumes/kubernetes.io~nfs/pv01

[root@k-node1 pv01]# ls

1.txt 2.txt

5. PV status after the Pod and PVC are deleted

- How to handle it

[root@k-master volume]# kubectl delete -f pod2.yaml

pod "pod2" deleted

[root@k-master volume]# kubectl delete -f pvc1.yaml

persistentvolumeclaim "pvc-1" deleted

# The PV is now in the Released state

[root@k-master volume]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv01 5Gi RWO Retain Released default/pvc-1 81s

# Remove the claimRef section from the PV's spec and it becomes Available again

[root@k-master volume]# kubectl edit pv pv01

persistentvolume/pv01 edited

[root@k-master volume]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pv01 5Gi RWO Retain Available 2m26s

# Alternatively, delete the PV and create it again
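The claimRef field that was cleared in the kubectl edit step can also be set on purpose. When recreating the PV, filling in only the namespace and name reserves it for one specific claim. The sketch below assumes the claim is pvc-1 in the default namespace, as in this lab.

```yaml
# Sketch only: reserves pv01 for the claim default/pvc-1
apiVersion: v1
kind: PersistentVolume
metadata:
  name: pv01
spec:
  capacity:
    storage: 5Gi
  accessModes:
  - ReadWriteOnce
  persistentVolumeReclaimPolicy: Retain
  claimRef:                  # only this claim may bind to the PV
    namespace: default
    name: pvc-1
  nfs:
    server: 192.168.50.100
    path: /nfsdata
```

This avoids the race mentioned earlier, where whichever matching PVC is created first takes the volume.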

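3. PV, PVC and Pod

- A PV is a piece of storage built on top of some backend: NFS or anything else.

- A PVC binds to a PV; a PV can be bound by only one PVC.

- A Pod gets persistent storage through a PVC, which is mounted at a directory in the container.

- Data written under that directory ends up in the underlying storage.

- PV and PVC are abstractions; at the bottom there is always a real storage backend.

- PVs and PVCs make centralized management easier.

- The underlying NFS (or other backend) can be managed separately, which gives a separation of responsibilities.

- The result is a chain of mappings, each one an abstraction over the previous.

- The NFS export maps to a PV, the PV maps to a PVC, and the PVC is finally mounted into the Pod as a volume.

4. Dynamic volume provisioning with NFS

- When a PVC is defined, the matching PV is created automatically.

- This relies on an NFS provisioner.

- Dynamically created PVs default to the Delete reclaim policy; this can be changed to Retain.

- Create a StorageClass that maps to the NFS storage; creating a PVC against it then creates a PV automatically.

1. Configure the NFS server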

[root@k-master ~]# cat /etc/exports

/nfsdata *(rw)

2. Deploy the NFS provisioner

# First, git clone the repository

[root@k-master nfs-deploy]# git clone https://github.com/kubernetes-sigs/nfs-subdir-external-provisioner.git

# Change into the deploy directory

[root@k-master deploy]# pwd

/root/volume/nfs-deploy/nfs-subdir-external-provisioner/deploy

# If you are not using the default namespace, update the namespace in these manifests; with default, nothing needs to change

[root@k-master deploy]# ls

class.yaml kustomization.yaml rbac.yaml test-pod.yaml

deployment.yaml objects test-claim.yaml

[root@k-master deploy]# kubectl apply -f rbac.yaml

serviceaccount/nfs-client-provisioner created

clusterrole.rbac.authorization.k8s.io/nfs-client-provisioner-runner created

clusterrolebinding.rbac.authorization.k8s.io/run-nfs-client-provisioner created

role.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

rolebinding.rbac.authorization.k8s.io/leader-locking-nfs-client-provisioner created

# Edit deployment.yaml

        - name: nfs-client-provisioner
          image: registry.k8s.io/sig-storage/nfs-subdir-external-provisioner:v4.0.2

# If this image can be pulled, no change is needed; otherwise switch to a mirror such as

registry.cn-hangzhou.aliyuncs.com/cloudcs/nfs-subdir-external-provisioner:v4.0.2

# This is someone else's public registry

# Adjust the NFS settings in deployment.yaml

            - name: NFS_SERVER
              value: 192.168.50.100
            - name: NFS_PATH
              value: /nfsdata
      volumes:
        - name: nfs-client-root
          nfs:
            server: 192.168.50.100
            path: /nfsdata

[root@k-master deploy]# kubectl apply -f deployment.yaml

deployment.apps/nfs-client-provisioner created

[root@k-master deploy]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nfs-client-provisioner-9f74c968-2lm9k 1/1 Running 0 15s

# This Pod is tied to the NFS export: when a PVC that uses the nfs-client storage class is created, it creates a PV automatically

3. Create a StorageClass

[root@k-master ~]# cat class.yaml

apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-client
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner
parameters:
  archiverOnDelete: "false"   # as typed in this lab; the upstream class.yaml spells the key archiveOnDelete

# A StorageClass created this way gets the Delete reclaim policy by default

[root@k-master ~]# kubectl get sc

NAME PROVISIONER RECLAIMPOLICY VOLUMEBINDINGMODE ALLOWVOLUMEEXPANSION AGE

nfs-client k8s-sigs.io/nfs-subdir-external-provisioner Delete Immediate false 37s

[root@k-master ~]#
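The Delete default shown in the RECLAIMPOLICY column can be overridden per class. The following is a hedged sketch of a second StorageClass for the same provisioner that keeps volumes after their claims are deleted; the class name is hypothetical.

```yaml
# Sketch only: class name is an illustrative assumption
apiVersion: storage.k8s.io/v1
kind: StorageClass
metadata:
  name: nfs-client-retain    # hypothetical name
provisioner: k8s-sigs.io/nfs-subdir-external-provisioner
reclaimPolicy: Retain        # PVs created from this class are kept when their PVC is deleted
volumeBindingMode: Immediate
```

A PVC selects this behaviour by setting storageClassName to the class name. PVs that were already provisioned keep the policy they were created with.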

4. Create a PVC

- Creating a PVC automatically creates a PV.

[root@k-master volume]# cat pvc3.yaml

apiVersion: v1
kind: PersistentVolumeClaim
metadata:
  name: pvc3
spec:
  accessModes:
  - ReadWriteOnce
  storageClassName: nfs-client   # using the nfs-client storage class makes the PV get created automatically
  resources:
    requests:
      storage: 5Gi

[root@k-master volume]# kubectl get pv

NAME CAPACITY ACCESS MODES RECLAIM POLICY STATUS CLAIM STORAGECLASS REASON AGE

pvc-37889731-cc0f-4485-8647-0bc46a435faf 5Gi RWO Delete Bound default/pvc3 nfs-client 9s

[root@k-master volume]# kubectl get pvc

NAME STATUS VOLUME CAPACITY ACCESS MODES STORAGECLASS AGE

pvc3 Bound pvc-37889731-cc0f-4485-8647-0bc46a435faf 5Gi RWO nfs-client 13s

5. Create a Pod

[root@k-master volume]# cat pod2.yaml

apiVersion: v1
kind: Pod
metadata:
  labels:
    run: pod2
  name: pod2
spec:
  containers:
  - image: nginx
    imagePullPolicy: IfNotPresent
    name: pod2
    resources: {}
    volumeMounts:
    - name: mypv
      mountPath: /var/www/html
  restartPolicy: Always
  volumes:
  - name: mypv
    persistentVolumeClaim:
      claimName: pvc3
status: {}

[root@k-master volume]# kubectl get pod

NAME READY STATUS RESTARTS AGE

nfs-client-provisioner-9f74c968-2lm9k 1/1 Running 0 20m

pod2 1/1 Running 0 70s

# A subdirectory is created under /nfsdata to hold the data

[root@k-master volume]# ls /nfsdata/

1.txt 2.txt default-pvc3-pvc-37889731-cc0f-4485-8647-0bc46a435faf

# After writing some data

[root@k-master volume]# ls /nfsdata/default-pvc3-pvc-37889731-cc0f-4485-8647-0bc46a435faf/

11 22

# Delete the Pod and the PVC

[root@k-master volume]# kubectl delete -f pod2.yaml

pod "pod2" deleted

[root@k-master volume]# kubectl delete -f pvc3.yaml

persistentvolumeclaim "pvc3" deleted

# The NFS directory gets renamed

[root@k-master volume]# ls /nfsdata/

archived-default-pvc3-pvc-3cd12cad-47a5-43f3-93a0-8e9a9d4a490c

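# This follows from the Delete policy as this provisioner implements it: unless archiveOnDelete is set to "false", the directory is renamed with an archived- prefix instead of being removed, so the data is not lost immediately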

IV. Summary

- Local storage is clumsy: the data is tied to one node.

- Static provisioning

  - Start with an NFS server at the bottom that exports a directory others can mount.

  - Create a PV and a PVC and let them bind.

  - Create a Pod that uses the PVC; the data it writes lands in the NFS directory.

- Dynamic provisioning

  - Set up an NFS server.

  - Deploy an NFS provisioner that points at the NFS export; the storage is still NFS.

  - Create a StorageClass whose provisioner is the one deployed above.

  - Create a PVC. A PVC needs a PV to bind to, so the provisioner behind the StorageClass creates one automatically by carving out a subdirectory of the NFS export.

  - Create a Pod that uses the PVC.
