Experiment 4: Neural Network Algorithm Experiment

Posted on 2022-11-15 21:31 木十1342

【Experiment Objectives】

Understand the principles of neural networks, and master forward inference and backpropagation;

Master how to implement a neural network model in code.

【Experiment Content】

1. In 1981, the biologists W. Grogan and W. Wirth identified two classes of mosquito (or midge). They measured the wing length and antenna length of each individual, with the following data:

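| Wing length | Antenna length | Class |
|-------------|----------------|-------|
| 1.78 | 1.14 | Apf |
| 1.96 | 1.18 | Apf |
| 1.86 | 1.20 | Apf |
| 1.72 | 1.24 | Apf |
| 2.00 | 1.26 | Apf |
| 2.00 | 1.28 | Apf |
| 1.96 | 1.30 | Apf |
| 1.74 | 1.36 | Af |
| 1.64 | 1.38 | Af |
| 1.82 | 1.38 | Af |
| 1.90 | 1.38 | Af |
| 1.70 | 1.40 | Af |
| 1.82 | 1.48 | Af |
| 1.82 | 1.54 | Af |
| 2.08 | 1.56 | Af |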

Three more mosquitoes have the corresponding measurements (1.24, 1.80), (1.28, 1.84), and (1.40, 2.04). Determine the class of each of these three mosquitoes.
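Before training anything, it can help to check whether the three query points fall inside the range of the training measurements. The sketch below is only a sanity check, not part of the assignment. It uses the standard library alone, and the helper names `column_ranges` and `in_range` are made up for this illustration. It also tries the query tuples with their two coordinates swapped. That swap is an assumption about the listing order and is not stated in the task.

```python
# Training measurements copied from the table above: (wing length, antenna length).
TRAIN = [
    (1.78, 1.14), (1.96, 1.18), (1.86, 1.20), (1.72, 1.24), (2.00, 1.26),
    (2.00, 1.28), (1.96, 1.30), (1.74, 1.36), (1.64, 1.38), (1.82, 1.38),
    (1.90, 1.38), (1.70, 1.40), (1.82, 1.48), (1.82, 1.54), (2.08, 1.56),
]
# Query points exactly as listed in the task statement.
QUERIES = [(1.24, 1.80), (1.28, 1.84), (1.40, 2.04)]


def column_ranges(rows):
    """Return a (min, max) pair for each column of the rows."""
    return [(min(col), max(col)) for col in zip(*rows)]


def in_range(point, ranges):
    """True if every coordinate lies inside the matching (min, max) pair."""
    return all(lo <= v <= hi for v, (lo, hi) in zip(point, ranges))


ranges = column_ranges(TRAIN)
as_listed = [in_range(q, ranges) for q in QUERIES]
# Hypothetical reading: the same tuples interpreted with coordinates swapped.
swapped = [in_range((b, a), ranges) for a, b in QUERIES]

print("column ranges:", ranges)
print("inside range as listed:", as_listed)
print("inside range if swapped:", swapped)
```

On this data, all three points lie outside the training range as listed and inside it when swapped. The task statement does not say which ordering is intended, so this is a prompt to double-check the input order rather than a correction.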

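【Experiment Report Requirements】

Build a three-layer neural network model and write the training and inference code to predict the class;

Following the experiment content, write up the experimental procedure, the algorithm, and the test results; the program must not use the sklearn library;

Code conventions: naming rules and comments;

Consult the literature and discuss application scenarios for neural networks.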
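One way to write out the three-layer model is sketched below. It assumes the layout used by the code in this post: two inputs, two sigmoid hidden units, one sigmoid output, and a squared-error loss per sample. The symbols $s_1$, $s_2$, $s_o$ (pre-activation sums) and $\eta$ (learning rate) are notation introduced here for illustration.

$$
\begin{aligned}
s_1 &= w_1 x_1 + w_2 x_2 + b_1, & h_1 &= \sigma(s_1),\\
s_2 &= w_3 x_1 + w_4 x_2 + b_2, & h_2 &= \sigma(s_2),\\
s_o &= w_5 h_1 + w_6 h_2 + b_3, & \hat{y} &= \sigma(s_o),\\
L &= (y - \hat{y})^2, & \sigma'(z) &= \sigma(z)\,\bigl(1 - \sigma(z)\bigr).
\end{aligned}
$$

Backpropagation then follows from the chain rule, shown here for one output weight and one hidden weight:

$$
\begin{aligned}
\frac{\partial L}{\partial \hat{y}} &= -2\,(y - \hat{y}),\\
\frac{\partial L}{\partial w_5} &= \frac{\partial L}{\partial \hat{y}} \cdot h_1\,\sigma'(s_o),\\
\frac{\partial L}{\partial w_1} &= \frac{\partial L}{\partial \hat{y}} \cdot w_5\,\sigma'(s_o) \cdot x_1\,\sigma'(s_1),\\
w &\leftarrow w - \eta\,\frac{\partial L}{\partial w}.
\end{aligned}
$$

The remaining weights and biases follow the same pattern, with $x_2$, $h_2$, or $1$ in place of the input factor.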

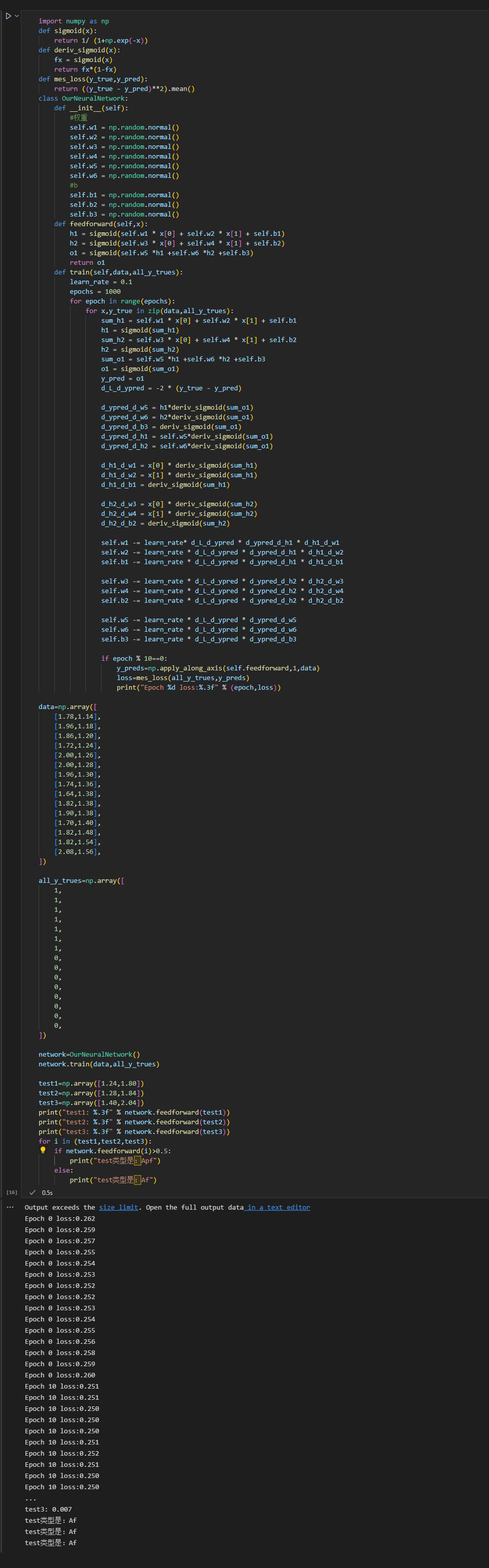

    import numpy as np


    def sigmoid(x):
        """Logistic activation: maps any real number into (0, 1)."""
        return 1 / (1 + np.exp(-x))


    def deriv_sigmoid(x):
        """Derivative of the sigmoid, evaluated at x."""
        fx = sigmoid(x)
        return fx * (1 - fx)


    def mse_loss(y_true, y_pred):
        """Mean squared error between the labels and the predictions."""
        return ((y_true - y_pred) ** 2).mean()


    class OurNeuralNetwork:
        """Network with 2 inputs, 2 hidden neurons (h1, h2) and 1 output (o1)."""

        def __init__(self):
            # Weights
            self.w1 = np.random.normal()
            self.w2 = np.random.normal()
            self.w3 = np.random.normal()
            self.w4 = np.random.normal()
            self.w5 = np.random.normal()
            self.w6 = np.random.normal()
            # Biases
            self.b1 = np.random.normal()
            self.b2 = np.random.normal()
            self.b3 = np.random.normal()

        def feedforward(self, x):
            """Forward pass: return the output o1 for a 2-element input x."""
            h1 = sigmoid(self.w1 * x[0] + self.w2 * x[1] + self.b1)
            h2 = sigmoid(self.w3 * x[0] + self.w4 * x[1] + self.b2)
            o1 = sigmoid(self.w5 * h1 + self.w6 * h2 + self.b3)
            return o1

        def train(self, data, all_y_trues):
            """Train with per-sample (stochastic) gradient descent."""
            learn_rate = 0.1
            epochs = 1000
            for epoch in range(epochs):
                for x, y_true in zip(data, all_y_trues):
                    # Forward pass, keeping the pre-activation sums for backprop
                    sum_h1 = self.w1 * x[0] + self.w2 * x[1] + self.b1
                    h1 = sigmoid(sum_h1)
                    sum_h2 = self.w3 * x[0] + self.w4 * x[1] + self.b2
                    h2 = sigmoid(sum_h2)
                    sum_o1 = self.w5 * h1 + self.w6 * h2 + self.b3
                    o1 = sigmoid(sum_o1)
                    y_pred = o1

                    # Derivative of the squared error with respect to the prediction
                    d_L_d_ypred = -2 * (y_true - y_pred)

                    # Output neuron o1
                    d_ypred_d_w5 = h1 * deriv_sigmoid(sum_o1)
                    d_ypred_d_w6 = h2 * deriv_sigmoid(sum_o1)
                    d_ypred_d_b3 = deriv_sigmoid(sum_o1)
                    d_ypred_d_h1 = self.w5 * deriv_sigmoid(sum_o1)
                    d_ypred_d_h2 = self.w6 * deriv_sigmoid(sum_o1)

                    # Hidden neuron h1
                    d_h1_d_w1 = x[0] * deriv_sigmoid(sum_h1)
                    d_h1_d_w2 = x[1] * deriv_sigmoid(sum_h1)
                    d_h1_d_b1 = deriv_sigmoid(sum_h1)

                    # Hidden neuron h2
                    d_h2_d_w3 = x[0] * deriv_sigmoid(sum_h2)
                    d_h2_d_w4 = x[1] * deriv_sigmoid(sum_h2)
                    d_h2_d_b2 = deriv_sigmoid(sum_h2)

                    # Gradient-descent updates (chain rule)
                    self.w1 -= learn_rate * d_L_d_ypred * d_ypred_d_h1 * d_h1_d_w1
                    self.w2 -= learn_rate * d_L_d_ypred * d_ypred_d_h1 * d_h1_d_w2
                    self.b1 -= learn_rate * d_L_d_ypred * d_ypred_d_h1 * d_h1_d_b1
                    self.w3 -= learn_rate * d_L_d_ypred * d_ypred_d_h2 * d_h2_d_w3
                    self.w4 -= learn_rate * d_L_d_ypred * d_ypred_d_h2 * d_h2_d_w4
                    self.b2 -= learn_rate * d_L_d_ypred * d_ypred_d_h2 * d_h2_d_b2
                    self.w5 -= learn_rate * d_L_d_ypred * d_ypred_d_w5
                    self.w6 -= learn_rate * d_L_d_ypred * d_ypred_d_w6
                    self.b3 -= learn_rate * d_L_d_ypred * d_ypred_d_b3

                # Report the loss over the whole training set every 10 epochs
                if epoch % 10 == 0:
                    y_preds = np.apply_along_axis(self.feedforward, 1, data)
                    loss = mse_loss(all_y_trues, y_preds)
                    print("Epoch %d loss:%.3f" % (epoch, loss))


    # Training data: [wing length, antenna length]
    data = np.array([
        [1.78, 1.14],
        [1.96, 1.18],
        [1.86, 1.20],
        [1.72, 1.24],
        [2.00, 1.26],
        [2.00, 1.28],
        [1.96, 1.30],
        [1.74, 1.36],
        [1.64, 1.38],
        [1.82, 1.38],
        [1.90, 1.38],
        [1.70, 1.40],
        [1.82, 1.48],
        [1.82, 1.54],
        [2.08, 1.56],
    ])
    # Labels: 1 = Apf, 0 = Af
    all_y_trues = np.array([1, 1, 1, 1, 1, 1, 1, 0, 0, 0, 0, 0, 0, 0, 0])

    network = OurNeuralNetwork()
    network.train(data, all_y_trues)

    # Prediction
    test1 = np.array([1.24, 1.80])
    test2 = np.array([1.28, 1.84])
    test3 = np.array([1.40, 2.04])
    print("test1: %.3f" % network.feedforward(test1))
    print("test2: %.3f" % network.feedforward(test2))
    print("test3: %.3f" % network.feedforward(test3))
    for i in (test1, test2, test3):
        # An output above 0.5 is read as class Apf, otherwise Af
        if network.feedforward(i) > 0.5:
            print("test class: Apf")
        else:
            print("test class: Af")
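Hand-written backpropagation like the code above is easy to get subtly wrong, so a finite-difference gradient check is a common way to test it. The sketch below is a standalone illustration, not the author's code. It uses only the standard library and assumes the same 2-2-1 sigmoid network with a squared-error loss. The parameter values are arbitrary, and the function names (`forward`, `analytic_grads`, `numeric_grads`) are invented for this example.

```python
import math


def sigmoid(z):
    return 1.0 / (1.0 + math.exp(-z))


def forward(p, x):
    """Forward pass; p maps the names w1..w6, b1..b3 to floats."""
    s1 = p["w1"] * x[0] + p["w2"] * x[1] + p["b1"]
    s2 = p["w3"] * x[0] + p["w4"] * x[1] + p["b2"]
    h1, h2 = sigmoid(s1), sigmoid(s2)
    so = p["w5"] * h1 + p["w6"] * h2 + p["b3"]
    return h1, h2, sigmoid(so)


def loss(p, x, y):
    """Squared error for a single sample."""
    return (y - forward(p, x)[2]) ** 2


def analytic_grads(p, x, y):
    """Chain-rule gradients, using sigmoid'(s) = sigmoid(s) * (1 - sigmoid(s))."""
    h1, h2, y_pred = forward(p, x)
    d_o = -2.0 * (y - y_pred) * y_pred * (1.0 - y_pred)  # dL / d(output sum)
    d_h1 = d_o * p["w5"] * h1 * (1.0 - h1)               # dL / d(hidden sum 1)
    d_h2 = d_o * p["w6"] * h2 * (1.0 - h2)               # dL / d(hidden sum 2)
    return {
        "w1": d_h1 * x[0], "w2": d_h1 * x[1], "b1": d_h1,
        "w3": d_h2 * x[0], "w4": d_h2 * x[1], "b2": d_h2,
        "w5": d_o * h1, "w6": d_o * h2, "b3": d_o,
    }


def numeric_grads(p, x, y, eps=1e-6):
    """Central finite differences, perturbing one parameter at a time."""
    grads = {}
    for name in p:
        plus = dict(p)
        plus[name] += eps
        minus = dict(p)
        minus[name] -= eps
        grads[name] = (loss(plus, x, y) - loss(minus, x, y)) / (2 * eps)
    return grads


# Arbitrary fixed parameters chosen for illustration (not trained values).
params = {
    "w1": 0.5, "w2": -0.3, "w3": 0.8, "w4": 0.1, "w5": -0.6, "w6": 0.9,
    "b1": 0.0, "b2": 0.2, "b3": -0.1,
}
x, y = (1.78, 1.14), 1.0  # first training row, labelled Apf = 1

a = analytic_grads(params, x, y)
n = numeric_grads(params, x, y)
max_diff = max(abs(a[k] - n[k]) for k in params)
print("max |analytic - numeric| =", max_diff)
```

If the largest difference is tiny compared with the gradients themselves, the chain-rule expressions agree with the numerical slope of the loss. A large difference for one parameter usually points to a mistake in that parameter's derivative.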

