Pytorch實戰學習(八):基礎RNN

《PyTorch深度學習實踐》完結合集_嗶哩嗶哩_bilibili

Basic RNN

①用于處理序列數據:時間序列、文本、語音.....

②循環過程中權重共享機制

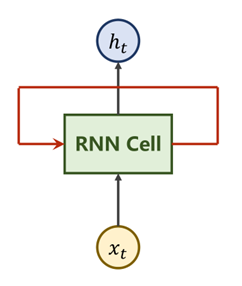

一、RNN原理

① Xt表示時刻t時輸入的數據

② RNN Cell—本質是一個線性層

③ Ht表示時刻t時的輸出(隱藏層)

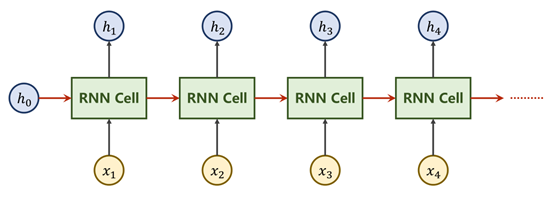

RNN Cell為一個線性層Linear,在t時刻下的N維向量,經過Cell后即可變為一個M維的向量h_t,而與其他線性層不同,RNN Cell為一個共享的線性層,即重復利用,權重共享。

X1…Xn是一組序列數據,每個Xi都至少包含Xi-1的信息

比如h2里面包含了X1的信息

就是要把X1經過RNN Cell處理后的h1向下傳遞,和X2一起輸入到第二個RNN Cell中

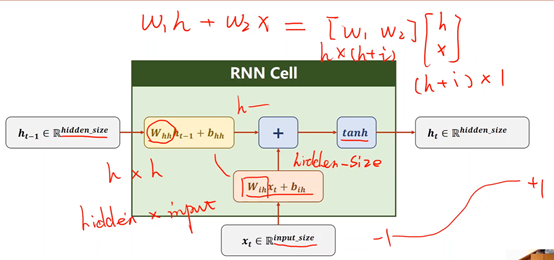

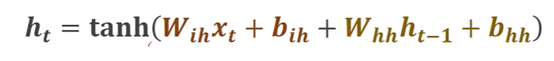

二、RNN 計算過程

三、Pytorch實現

方法①

先創建RNN Cell(要確定好輸入和輸出的維度)

再寫循環

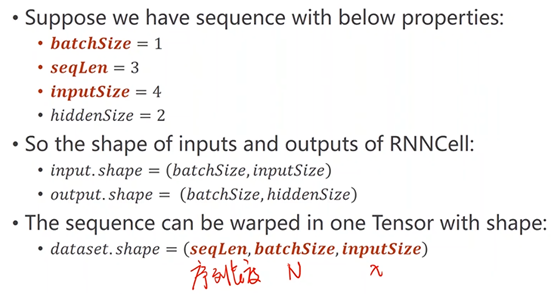

BatchSize—批量大小

SeqLen—樣本數量

InputSize—輸入維度

HiddenSize—隱藏層(輸出)維度

import torch #參數設置 batch_size = 1 seq_len = 3 input_size = 4 hidden_size =2 #構造RNN Cell cell = torch.nn.RNNCell(input_size = input_size, hidden_size = hidden_size) #維度最重要(seq,batch,features) dataset = torch.randn(seq_len,batch_size,input_size) #初始化時設為零向量 hidden = torch.zeros(batch_size, hidden_size) #訓練的循環 for idx,input in enumerate(dataset): print('=' * 20,idx,'=' * 20) print('Input size:', input.shape) # 當前的輸入和上一層的輸出 hidden = cell(input, hidden) print('outputs size: ', hidden.shape) print(hidden)

方法②

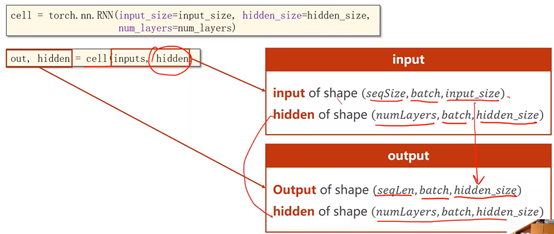

直接調用RNN

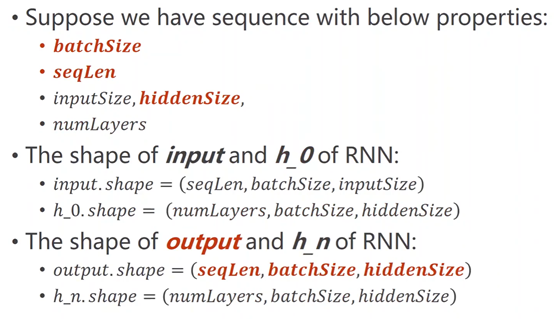

BatchSize—批量大小

SeqLen—樣本數量

InputSize—輸入維度

HiddenSize—隱藏層(輸出)維度

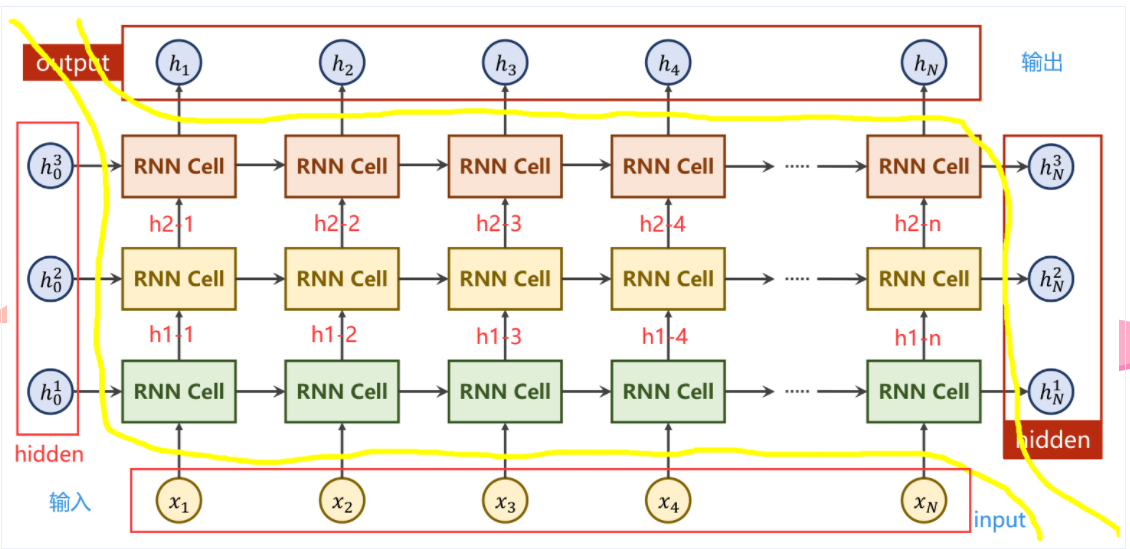

NumLayers—RNN層數

多層RNN

# 直接調用RNN

import torch

batch_size = 1

seq_len = 5

input_size = 4

hidden_size =2

num_layers = 3

#其他參數

#batch_first=True 維度從(SeqLen*Batch*input_size)變為(Batch*SeqLen*input_size)

cell = torch.nn.RNN(input_size = input_size, hidden_size = hidden_size, num_layers = num_layers)

inputs = torch.randn(seq_len, batch_size, input_size)

hidden = torch.zeros(num_layers, batch_size, hidden_size)

out, hidden = cell(inputs, hidden)

print("Output size: ", out.shape)

print("Output: ", out)

print("Hidden size: ", hidden.shape)

print("Hidden: ", hidden)

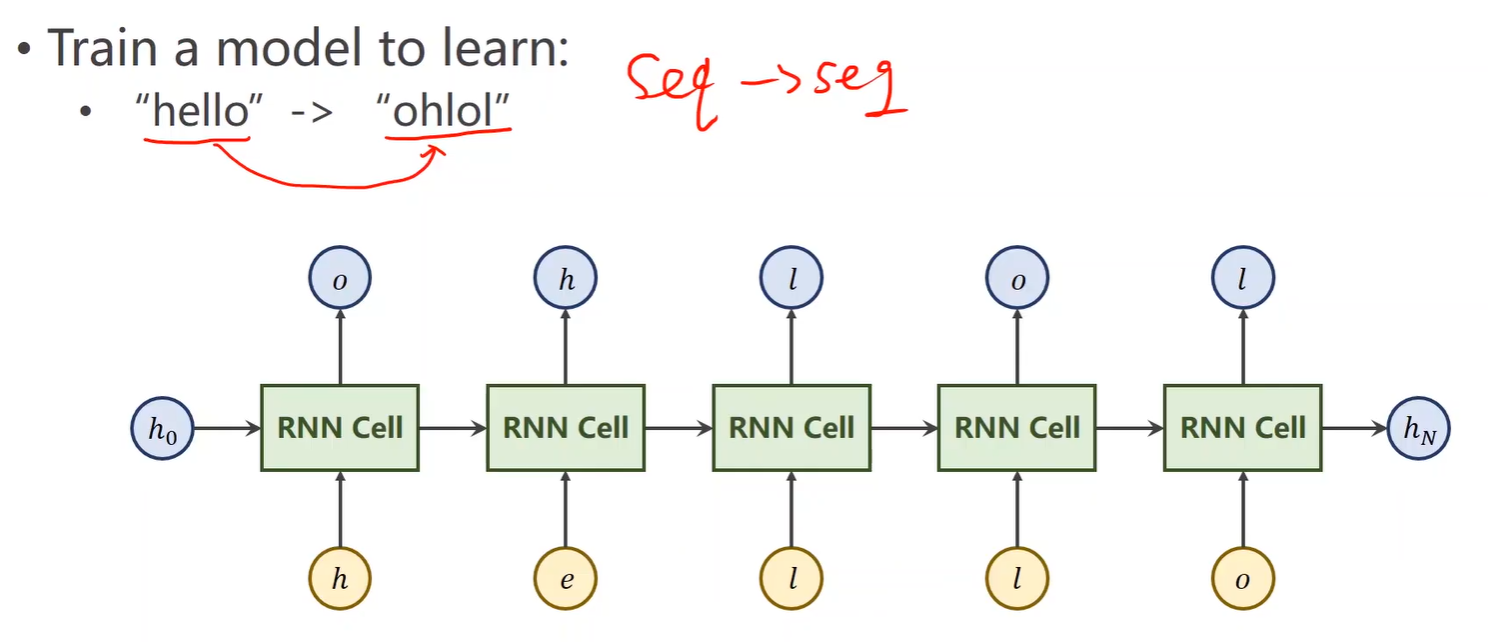

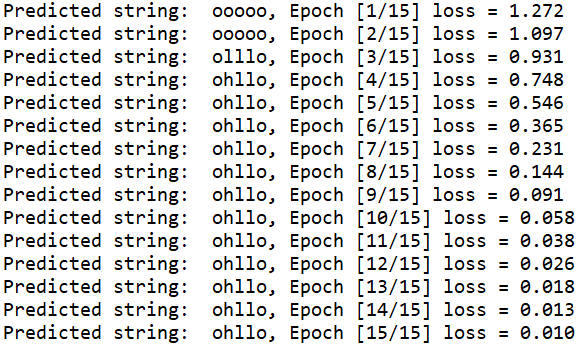

四、案例

一個序列到序列(seq→seq)的問題

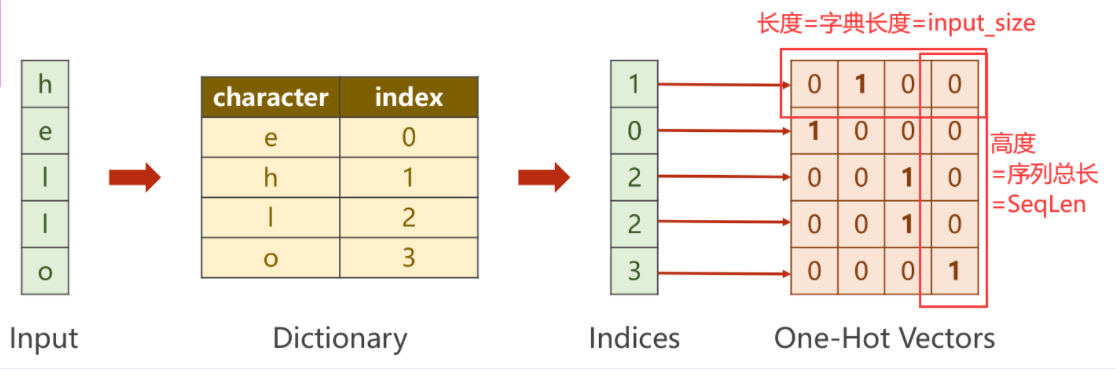

1、先將“Hello”轉化成數值型向量

用 one-hot編碼

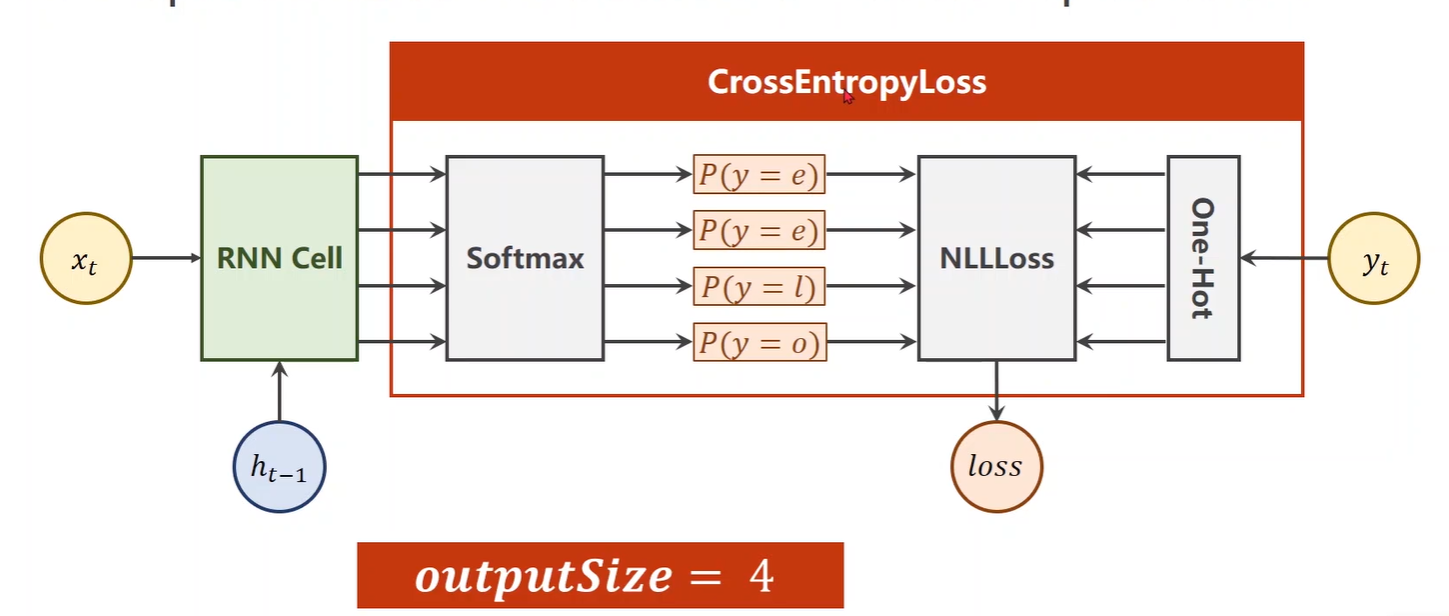

多分類問題(輸出維度為4)經過Softmax求得映射之后的概率分別是多少,再利用輸出對應的獨熱向量,計算NLLLoss

方法① RNN Cell

import torch

input_size = 4

hidden_size = 3

batch_size = 1

#構建輸入輸出字典

idx2char_1 = ['e', 'h', 'l', 'o']

idx2char_2 = ['h', 'l', 'o']

x_data = [1, 0, 2, 2, 3]

y_data = [2, 0, 1, 2, 1]

# y_data = [3, 1, 2, 2, 3]

one_hot_lookup = [[1, 0, 0, 0],

[0, 1, 0, 0],

[0, 0, 1, 0],

[0, 0, 0, 1]]

#構造獨熱向量,此時向量維度為(SeqLen*InputSize)

x_one_hot = [one_hot_lookup[x] for x in x_data]

#view(-1……)保留原始SeqLen,并添加batch_size,input_size兩個維度

inputs = torch.Tensor(x_one_hot).view(-1, batch_size, input_size)

#將labels轉換為(SeqLen*1)的維度

labels = torch.LongTensor(y_data).view(-1, 1)

class Model(torch.nn.Module):

def __init__(self, input_size, hidden_size, batch_size):

super(Model, self).__init__()

self.batch_size = batch_size

self.input_size = input_size

self.hidden_size = hidden_size

self.rnncell = torch.nn.RNNCell(input_size = self.input_size,

hidden_size = self.hidden_size)

def forward(self, input, hidden):

# RNNCell input = (batchsize*inputsize)

# RNNCell hidden = (batchsize*hiddensize)

# h_i = cell(x_i , h_i-1)

hidden = self.rnncell(input, hidden)

return hidden

#初始化零向量作為h0,只有此處用到batch_size

def init_hidden(self):

return torch.zeros(self.batch_size, self.hidden_size)

net = Model(input_size, hidden_size, batch_size)

criterion = torch.nn.CrossEntropyLoss()

optimizer = torch.optim.Adam(net.parameters(), lr=0.1)

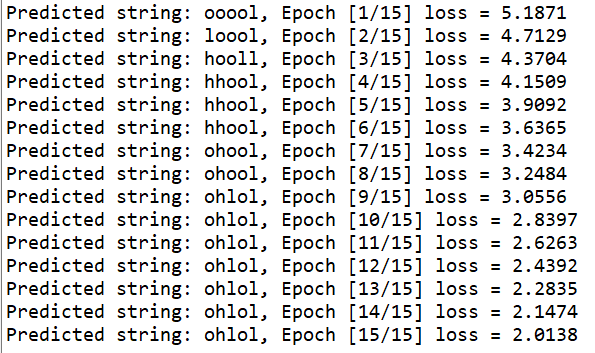

for epoch in range(15):

#損失及梯度置0,創建前置條件h0

loss = 0

optimizer.zero_grad()

hidden = net.init_hidden()

print("Predicted string: ",end="")

#inputs=(seqLen*batchsize*input_size) labels = (seqLen*1)

#input是按序列取的inputs元素(batchsize*inputsize)

#label是按序列去的labels元素(1)

for input, label in zip(inputs, labels):

hidden = net(input, hidden)

#序列的每一項損失都需要累加

loss += criterion(hidden, label)

#多分類取最大,找到四個類中概率最大的下標

_, idx = hidden.max(dim=1)

print(idx2char_2[idx.item()], end='')

loss.backward()

optimizer.step()

print(", Epoch [%d/15] loss = %.4f" % (epoch+1, loss.item()))

方法② RNN

import torch

input_size = 4

hidden_size = 3

batch_size = 1

num_layers = 1

seq_len = 5

#構建輸入輸出字典

idx2char_1 = ['e', 'h', 'l', 'o']

idx2char_2 = ['h', 'l', 'o']

x_data = [1, 0, 2, 2, 3]

y_data = [2, 0, 1, 2, 1]

# y_data = [3, 1, 2, 2, 3]

one_hot_lookup = [[1, 0, 0, 0],

[0, 1, 0, 0],

[0, 0, 1, 0],

[0, 0, 0, 1]]

x_one_hot = [one_hot_lookup[x] for x in x_data]

inputs = torch.Tensor(x_one_hot).view(seq_len, batch_size, input_size)

#labels(seqLen*batchSize,1)為了之后進行矩陣運算,計算交叉熵

labels = torch.LongTensor(y_data)

class Model(torch.nn.Module):

def __init__(self, input_size, hidden_size, batch_size, num_layers=1):

super(Model, self).__init__()

self.batch_size = batch_size #構造H0

self.input_size = input_size

self.hidden_size = hidden_size

self.num_layers = num_layers

self.rnn = torch.nn.RNN(input_size = self.input_size,

hidden_size = self.hidden_size,

num_layers=num_layers)

def forward(self, input):

hidden = torch.zeros(self.num_layers,

self.batch_size,

self.hidden_size)

out, _ = self.rnn(input, hidden)

#reshape成(SeqLen*batchsize,hiddensize)便于在進行交叉熵計算時可以以矩陣進行。

return out.view(-1, self.hidden_size)

net = Model(input_size, hidden_size, batch_size, num_layers)

criterion = torch.nn.CrossEntropyLoss()

optimizer = torch.optim.Adam(net.parameters(), lr=0.05)

#RNN中的輸入(SeqLen*batchsize*inputsize)

#RNN中的輸出(SeqLen*batchsize*hiddensize)

#labels維度 hiddensize*1

for epoch in range(15):

optimizer.zero_grad()

outputs = net(inputs)

loss = criterion(outputs, labels)

loss.backward()

optimizer.step()

_, idx = outputs.max(dim=1)

idx = idx.data.numpy()

print('Predicted string: ',''.join([idx2char_2[x] for x in idx]), end = '')

print(", Epoch [%d/15] loss = %.3f" % (epoch+1, loss.item()))

五、自然語言處理--詞向量

獨熱編碼在實際問題中容易引起很多問題:

- 獨熱編碼向量維度過高,每增加一個不同的數據,就要增加一維

- 獨熱編碼向量稀疏,每個向量是一個為1其余為0

- 獨熱編碼是硬編碼,編碼情況與數據特征無關

綜上所述,需要一種低維度的、稠密的、可學習數據的編碼方式

1、詞嵌入

(數據降維)將高維、稀疏的樣本 映射到 低維、稠密的空間中

2、網絡結構

2.1 Embedding

Embedding參數說明

①num_embedding:輸入的獨熱向量的維度

②embedding_dim:轉換成詞嵌入的維度

輸入、輸出

③輸入必須是LongTensor類型

④輸出時為為數據增加一個維度(embedding_dim)

2.2線性層 Linear

輸入和輸出的第一個維度(Batch)一直到倒數第二個維度都會保持不變。但會對最后一個維度(in_features)做出改變(out_features)

2.3交叉熵 CrossEntropyLoss

# 增加詞嵌入

import torch

input_size = 4

num_class = 4

hidden_size = 8

embedding_size =10

batch_size = 1

num_layers = 2

seq_len = 5

idx2char_1 = ['e', 'h', 'l', 'o']

idx2char_2 = ['h', 'l', 'o']

x_data = [[1, 0, 2, 2, 3]]

y_data = [3, 1, 2, 2, 3]

#inputs 作為交叉熵中的Inputs,維度為(batchsize,seqLen)

inputs = torch.LongTensor(x_data)

#labels 作為交叉熵中的Target,維度為(batchsize*seqLen)

labels = torch.LongTensor(y_data)

class Model(torch.nn.Module):

def __init__(self):

super(Model, self).__init__()

#嵌入層

self .emb = torch.nn.Embedding(input_size, embedding_size)

self.rnn = torch.nn.RNN(input_size = embedding_size,

hidden_size = hidden_size,

num_layers=num_layers,

batch_first = True)

self.fc = torch.nn.Linear(hidden_size, num_class)

def forward(self, x):

hidden = torch.zeros(num_layers, x.size(0), hidden_size)

x = self.emb(x)

x, _ = self.rnn(x, hidden)

x = self.fc(x)

return x.view(-1, num_class)

net = Model()

criterion = torch.nn.CrossEntropyLoss()

optimizer = torch.optim.Adam(net.parameters(), lr=0.05)

for epoch in range(15):

optimizer.zero_grad()

outputs = net(inputs)

loss = criterion(outputs, labels)

loss.backward()

optimizer.step()

_, idx = outputs.max(dim=1)

idx = idx.data.numpy()

print('Predicted string: ',''.join([idx2char_1[x] for x in idx]), end = '')

print(", Epoch [%d/15] loss = %.3f" % (epoch+1, loss.item()))

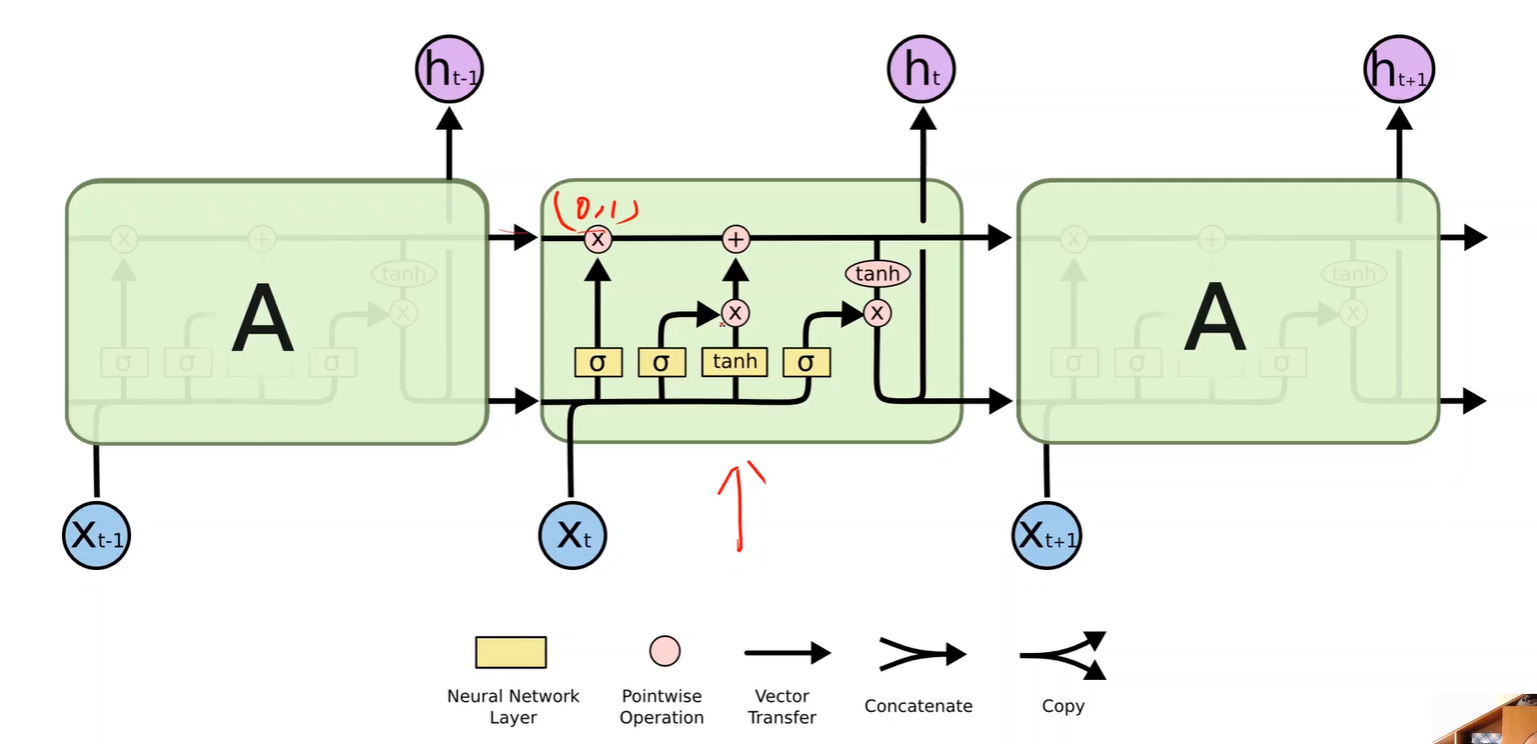

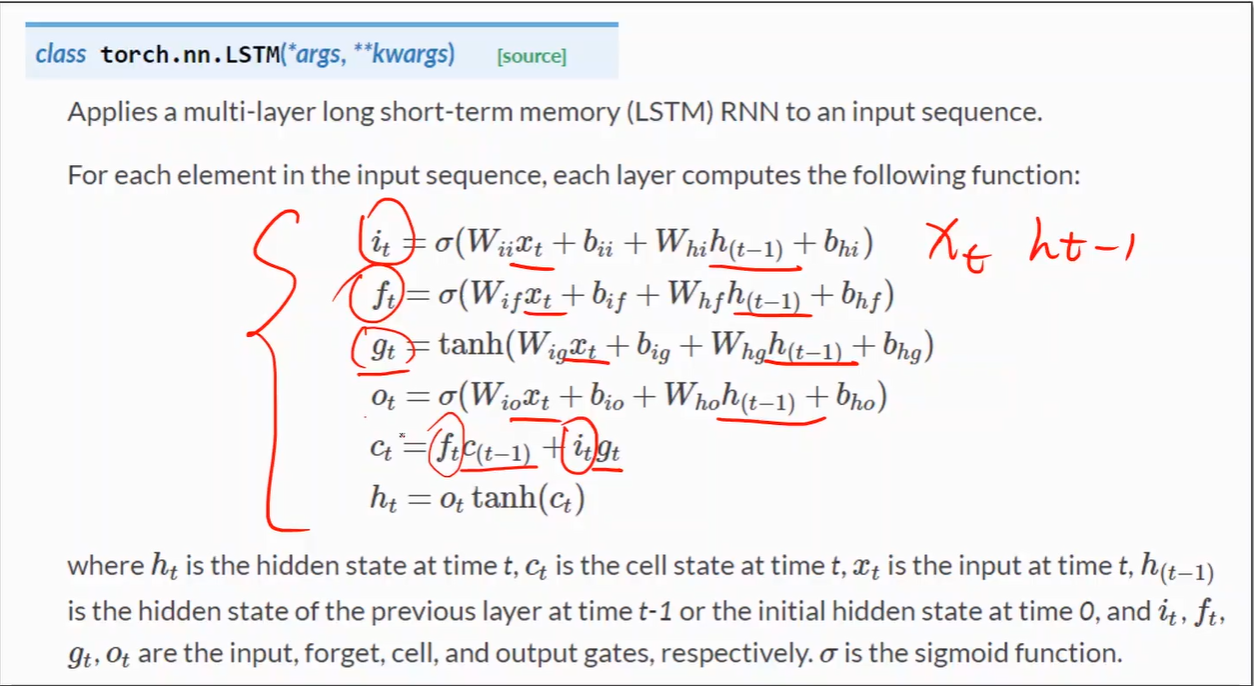

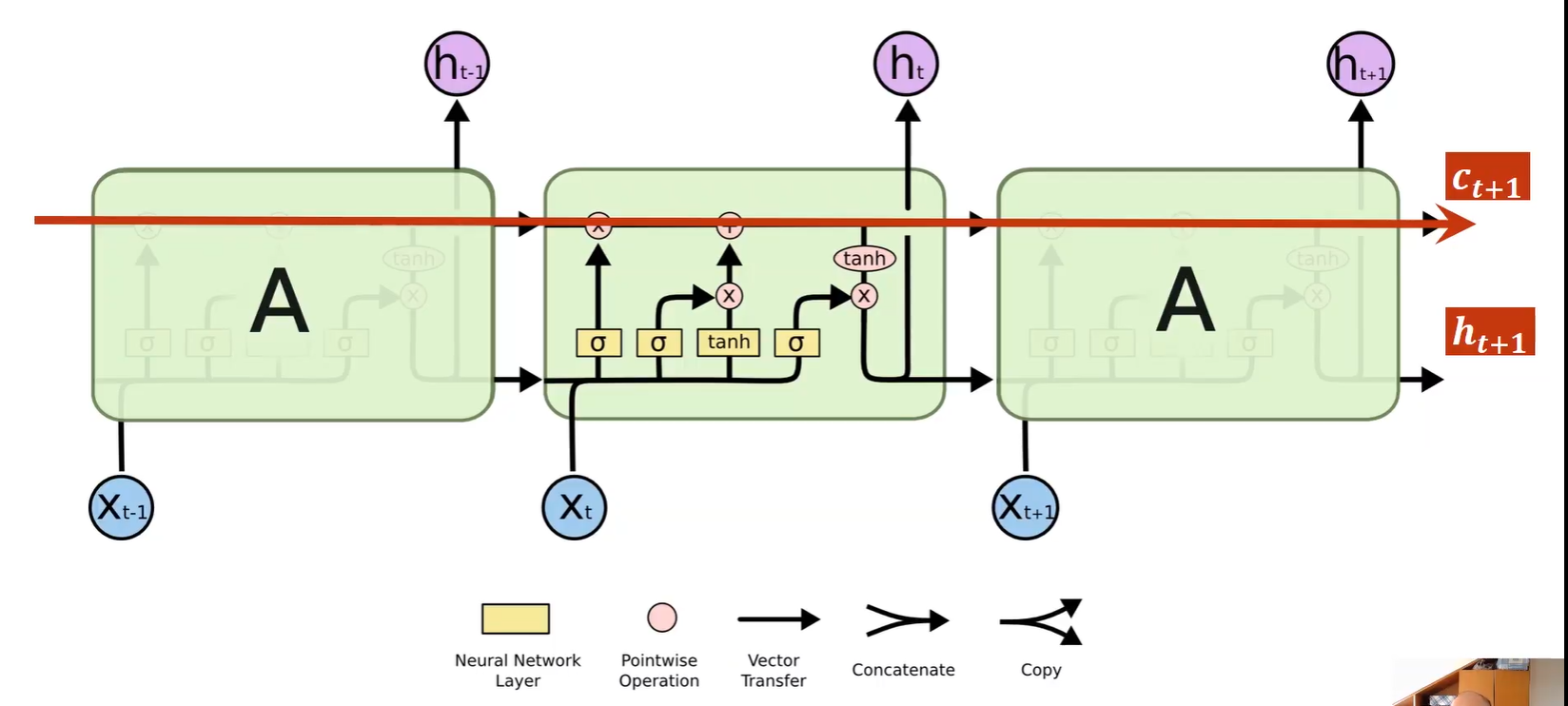

五、LSTM

增加c_t+1的這條路徑,在反向傳播時,提供更快梯度下降的路徑,減少梯度消失

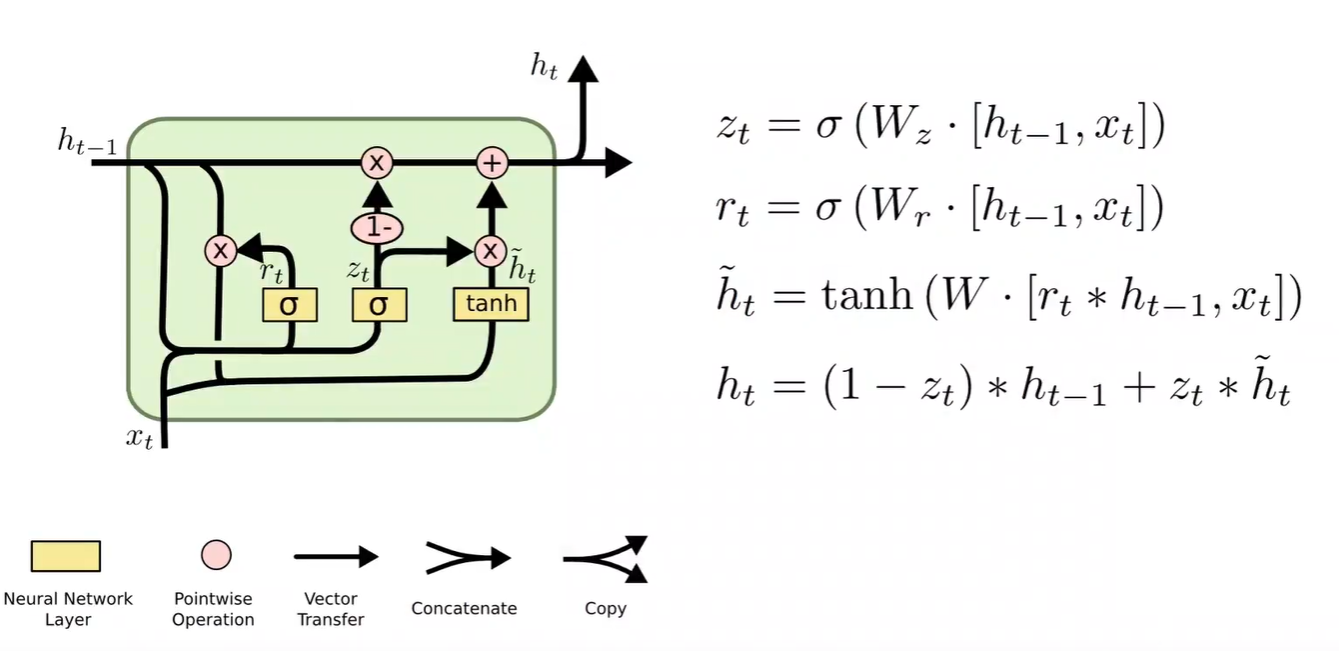

六、GRU

LSTM效果比RNN好得多,但由于計算復雜度上升,運算效率低,訓練時間長

折中方法就可以選擇 GRU

浙公網安備 33010602011771號

浙公網安備 33010602011771號