Assignment 2

Assignment ①

- Requirement: scrape the 7-day weather forecast for a given set of cities from the China Weather site (http://www.weather.com.cn) and save it to a database.

- Output:

- Gitee folder link

import sqlite3
import urllib.request

from bs4 import BeautifulSoup
from bs4 import UnicodeDammit

db_name = "weathers.db"
conn = sqlite3.connect(db_name)
cursor = conn.cursor()
cursor.execute("""
    CREATE TABLE IF NOT EXISTS weathers (
        wCity VARCHAR(16),
        wDate VARCHAR(16),
        wWeather VARCHAR(64),
        wTemp VARCHAR(32),
        CONSTRAINT pk_weather PRIMARY KEY (wCity, wDate)
    )
""")
cursor.execute("DELETE FROM weathers")
conn.commit()

city_code = {
    "北京": "101010100",
    "上海": "101020100",
    "廣州": "101280101",
    "深圳": "101280601"
}

headers = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
        "AppleWebKit/537.36 (KHTML, like Gecko) "
        "Chrome/122.0.0.0 Safari/537.36"
    )
}

# Scrape the forecast for each city
for city, code in city_code.items():
    url = f"http://www.weather.com.cn/weather/{code}.shtml"
    req = urllib.request.Request(url, headers=headers)
    data = urllib.request.urlopen(req).read()

    # Handle the encoding: try utf-8 first, then gbk
    dammit = UnicodeDammit(data, ["utf-8", "gbk"])
    html = dammit.unicode_markup
    soup = BeautifulSoup(html, "lxml")

    # Parse the forecast list
    lis = soup.select("ul[class='t clearfix'] li")
    for li in lis:
        try:
            date = li.select("h1")[0].text
            weather = li.select("p[class='wea']")[0].text
            # The high temperature (<span>) may be missing for some entries,
            # so fall back to the low temperature (<i>) alone
            high = li.select("p[class='tem'] span")
            low = li.select("p[class='tem'] i")[0].text
            temp = f"{high[0].text}/{low}" if high else low
            print(f"{city} {date} {weather} {temp}")
            cursor.execute(
                "INSERT INTO weathers (wCity, wDate, wWeather, wTemp) VALUES (?, ?, ?, ?)",
                (city, date, weather, temp)
            )
        except Exception as e:
            print("Parse error:", e)

conn.commit()
conn.close()

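print("Scraping finished; data saved to weathers.db")

Reflections

Take care with the page encoding: the raw bytes have to be decoded correctly (here by letting UnicodeDammit try utf-8 and then gbk) before the HTML is parsed.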
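The encoding step can be illustrated without any third-party library. The following is a minimal sketch that uses only the standard library; `decode_html` is a hypothetical helper written for this note and is not part of BeautifulSoup, whose `UnicodeDammit` performs more thorough detection.

```python
def decode_html(data, encodings=("utf-8", "gbk")):
    """Decode raw page bytes by trying each candidate encoding in order."""
    for encoding in encodings:
        try:
            return data.decode(encoding)
        except UnicodeDecodeError:
            continue  # try the next candidate encoding
    # Last resort: keep going with replacement characters instead of failing
    return data.decode(encodings[0], errors="replace")


# The same markup encoded two different ways
utf8_page = "<h1>北京</h1>".encode("utf-8")
gbk_page = "<h1>北京</h1>".encode("gbk")

print(decode_html(utf8_page))
print(decode_html(gbk_page))
```

Trying utf-8 before gbk is a deliberate choice in this sketch: utf-8 decoding usually fails on gbk-encoded Chinese text, whereas gbk can silently accept some utf-8 byte sequences and produce wrong characters.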

作業(yè)②

-

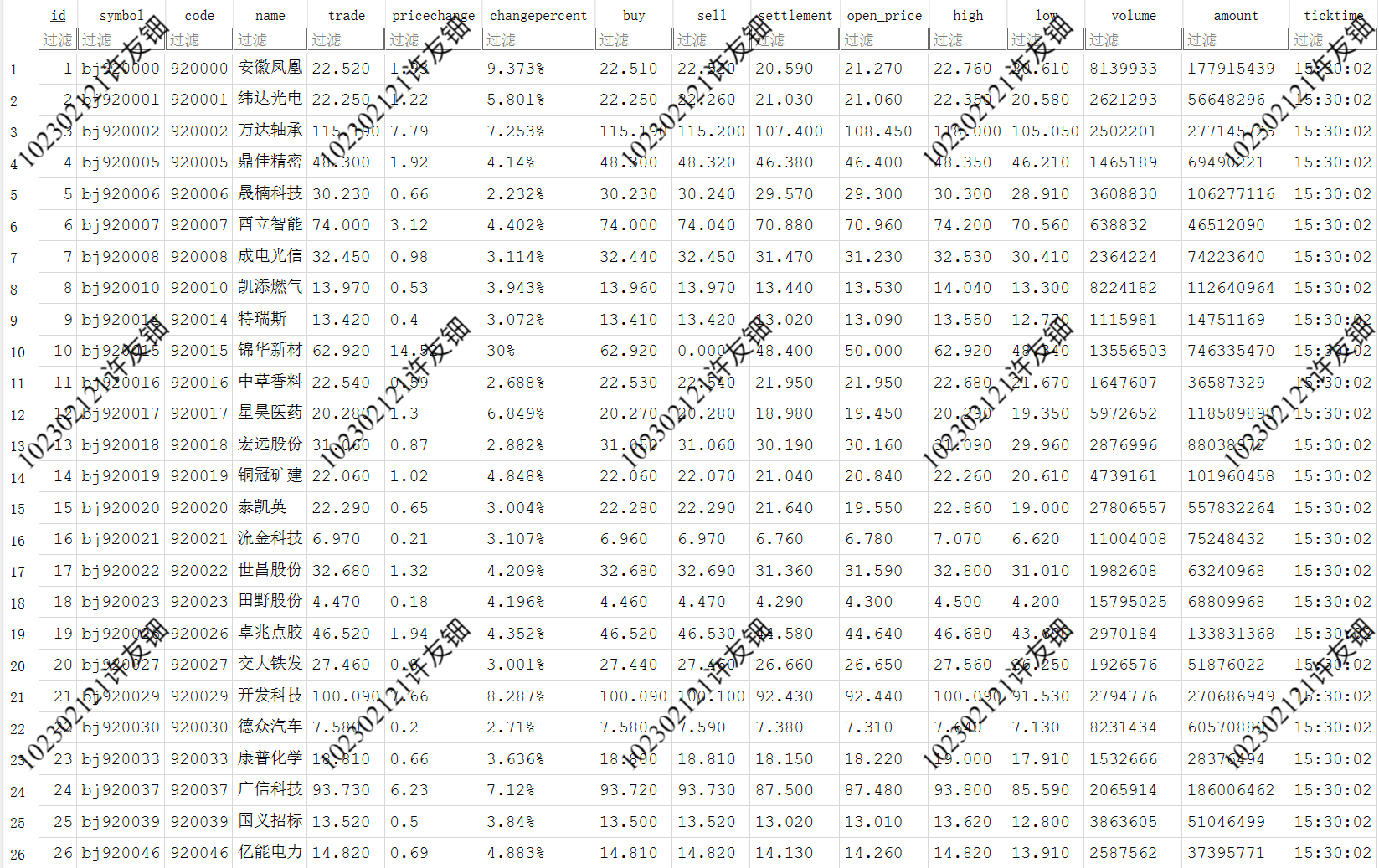

- 要求:用requests和BeautifulSoup庫方法定向爬取股票相關信息,并存儲在數(shù)據(jù)庫中。

- 候選網(wǎng)站:東方財富網(wǎng):https://www.eastmoney.com/

- 新浪股票:http://finance.sina.com.cn/stock/

- 技巧:在谷歌瀏覽器中進入F12調(diào)試模式進行抓包,查找股票列表加載使用的url,并分析api返回的值,并根據(jù)所要求的參數(shù)可適當更改api的請求參數(shù)。根據(jù)URL可觀察請求的參數(shù)f1、f2可獲取不同的數(shù)值,根據(jù)情況可刪減請求的參數(shù)。

- 參考鏈接:https://zhuanlan.zhihu.com/p/50099084

- 輸出信息:

- Gitee文件夾鏈接
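The tip above about trimming request parameters can be sketched as follows. This is only an illustration: the endpoint `BASE_URL` and the parameter names `pn`, `pz`, and `fields` are assumptions made for this example, and the real ones should be copied from the request shown in the F12 Network panel.

```python
from urllib.parse import parse_qs, urlencode, urlsplit

# Hypothetical endpoint, for illustration only
BASE_URL = "https://example.com/api/stock/list"


def build_url(page, page_size, fields):
    """Build a list-API URL that requests only the given fields."""
    query = {
        "pn": page,                  # page number (assumed parameter name)
        "pz": page_size,             # page size (assumed parameter name)
        "fields": ",".join(fields),  # keep only the columns that are needed
    }
    return f"{BASE_URL}?{urlencode(query)}"


url = build_url(page=1, page_size=20, fields=["f1", "f2"])
print(url)

# Round-trip check: the query string decodes back to the same field list
decoded_query = parse_qs(urlsplit(url).query)
print(decoded_query["fields"])
```

Building the query from a dictionary keeps each parameter visible and easy to remove, which matches the advice to delete parameters that are not needed.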

import sqlite3
import time

import requests

conn = sqlite3.connect("stocks.db")
cursor = conn.cursor()

# Create the table
cursor.execute("""
    CREATE TABLE IF NOT EXISTS stocks (
        id INTEGER PRIMARY KEY AUTOINCREMENT,
        symbol TEXT,
        code TEXT,
        name TEXT,
        trade TEXT,
        pricechange TEXT,
        changepercent TEXT,
        buy TEXT,
        sell TEXT,
        settlement TEXT,
        open_price TEXT,
        high TEXT,
        low TEXT,
        volume TEXT,
        amount TEXT,
        ticktime TEXT
    );
""")

url = "https://vip.stock.finance.sina.com.cn/quotes_service/api/json_v2.php/Market_Center.getHQNodeData"

headers = {
    "User-Agent": (
        "Mozilla/5.0 (Windows NT 10.0; Win64; x64) "
        "AppleWebKit/537.36 (KHTML, like Gecko) "
        "Chrome/122.0.0.0 Safari/537.36"
    ),
    "Referer": "https://finance.sina.com.cn/",
}

# Use one session for all pages so that repeated requests are less likely to be refused
session = requests.Session()
session.headers.update(headers)

# Scrape ten pages
for page in range(1, 11):
    # Query parameters for this page
    params = {
        "page": page,
        "num": 40,
        "sort": "symbol",
        "asc": 1,
        "node": "hs_a",
        "symbol": "",
        "_s_r_a": "page"
    }
    print(f"Fetching page {page}...")
    try:
        resp = session.get(url, params=params, timeout=10)
        data = resp.json()
        if not data:
            print("No data returned; reached the last page.")
            break
        print(f"Page {page} returned {len(data)} records")

        # Append % to the percentage change
        for stock in data:
            changepercent = stock.get("changepercent", "")
            if changepercent and not str(changepercent).endswith("%"):
                stock["changepercent"] = f"{changepercent}%"

        # Named placeholders are filled from the keys of each stock dict
        for stock in data:
            cursor.execute("""
                INSERT INTO stocks (
                    symbol, code, name, trade, pricechange, changepercent,
                    buy, sell, settlement, open_price, high, low, volume, amount, ticktime
                ) VALUES (
                    :symbol, :code, :name, :trade, :pricechange, :changepercent,
                    :buy, :sell, :settlement, :open, :high, :low, :volume, :amount, :ticktime
                )
            """, stock)
        conn.commit()
        time.sleep(1.5)
    except Exception as e:
        print(f"Page {page} failed: {e}")
        time.sleep(3)

cursor.close()
conn.close()

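print("Data saved to stocks.db")

Reflections

The API returns JSON, which has to be converted into Python data structures (here with resp.json()). Remember to check whether the last page has been reached, so that an empty response does not cause errors.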
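The two points in this reflection can be shown in isolation. The sketch below uses made-up sample data and a hypothetical `fetch_page` stand-in instead of a real HTTP request, so it runs offline; it assumes, as in the code above, that a page past the end returns an empty list.

```python
import json

# Simulated API responses keyed by page number (made-up sample data)
FAKE_PAGES = {
    1: '[{"symbol": "sh600000", "changepercent": "1.25"}]',
    2: '[{"symbol": "sh600004", "changepercent": "-0.40"}]',
    3: "[]",  # an empty list marks the page after the last one
}


def fetch_page(page):
    """Stand-in for the HTTP request: return the raw JSON text of one page."""
    return FAKE_PAGES.get(page, "[]")


def collect_all(max_pages=10):
    """Parse page after page and stop at the first empty one."""
    rows = []
    for page in range(1, max_pages + 1):
        data = json.loads(fetch_page(page))  # JSON text -> list of dicts
        if not data:
            break  # last page reached, nothing more to read
        rows.extend(data)
    return rows


rows = collect_all()
print(len(rows), rows[0]["symbol"])
```

Checking for the empty list before touching the records means the loop ends cleanly instead of relying on an exception to signal the end of the data.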

作業(yè)③

-

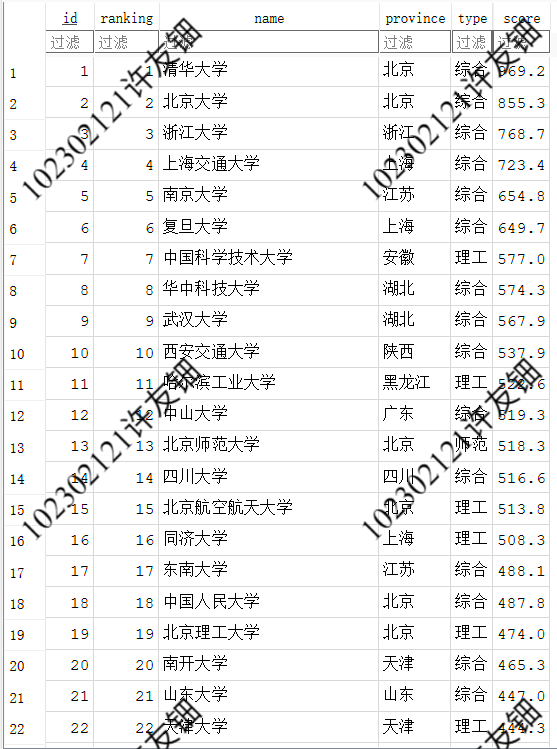

- 要求:爬取中國大學2021主榜(https://www.shanghairanking.cn/rankings/bcur/2021)所有院校信息,并存儲在數(shù)據(jù)庫中,同時將瀏覽器F12調(diào)試分析的過程錄制Gif加入至博客中。

- 技巧:分析該網(wǎng)站的發(fā)包情況,分析獲取數(shù)據(jù)的api

- 輸出信息:

- Gitee文件夾鏈接
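The data for this page arrives as a JavaScript file whose values are passed in as function arguments, which is why the code below pairs a list of parameter names with a list of argument values. The following sketch shows that pairing on a made-up miniature payload. The `(function(params){...}(args))` shape, the sample values, and the assumption that `}(` occurs only once are all illustrative; the real file should be checked in the Network panel.

```python
import json
import re

# Made-up miniature payload in the assumed shape
payload = (
    '__NUXT_JSONP__("/demo",(function(a,b,c){return {univData:'
    '[{univNameCn:"Demo University",province:a,score:b,liked:c}]}}'
    '("北京",93.5,false)));'
)

# Formal parameter names: the text between `function(` and the first `)`
params_text = re.search(r"function\(([^)]*)\)", payload).group(1)
params = [p.strip() for p in params_text.split(",")]

# Call arguments: the text after `}(` up to the closing `)))`
args_text = re.search(r"\}\((.*)\)\)\);?\s*$", payload, re.S).group(1)

# These arguments are JSON-compatible literals, so wrapping them in []
# lets json.loads handle quoting, true/false/null, and numbers in one step
args = json.loads(f"[{args_text}]")

mapping = dict(zip(params, args))
print(mapping)
```

Parsing the argument list as JSON avoids splitting on commas by hand, so commas inside quoted strings and the `true`, `false`, and `null` literals need no special handling.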

import re
import sqlite3

# Parameter names of the payload function

params_str = "a, b, c, d, e, f, g, h, i, j, k, l, m, n, o, p, q, r, s, t, u, v, w, x, y, z, A, B, C, D, E, F, G, H, I, J, K, L, M, N, O, P, Q, R, S, T, U, V, W, X, Y, Z, _, $, aa, ab, ac, ad, ae, af, ag, ah, ai, aj, ak, al, am, an, ao, ap, aq, ar, as, at, au, av, aw, ax, ay, az, aA, aB, aC, aD, aE, aF, aG, aH, aI, aJ, aK, aL, aM, aN, aO, aP, aQ, aR, aS, aT, aU, aV, aW, aX, aY, aZ, a_, a$, ba, bb, bc, bd, be, bf, bg, bh, bi, bj, bk, bl, bm, bn, bo, bp, bq, br, bs, bt, bu, bv, bw, bx, by, bz, bA, bB, bC, bD, bE, bF, bG, bH, bI, bJ, bK, bL, bM, bN, bO, bP, bQ, bR, bS, bT, bU, bV, bW, bX, bY, bZ, b_, b$, ca, cb, cc, cd, ce, cf, cg, ch, ci, cj, ck, cl, cm, cn, co, cp, cq, cr, cs, ct, cu, cv, cw, cx, cy, cz, cA, cB, cC, cD, cE, cF, cG, cH, cI, cJ, cK, cL, cM, cN, cO, cP, cQ, cR, cS, cT, cU, cV, cW, cX, cY, cZ, c_, c$, da, db, dc, dd, de, df, dg, dh, di, dj, dk, dl, dm, dn, do0, dp, dq, dr, ds, dt, du, dv, dw, dx, dy, dz, dA, dB, dC, dD, dE, dF, dG, dH, dI, dJ, dK, dL, dM, dN, dO, dP, dQ, dR, dS, dT, dU, dV, dW, dX, dY, dZ, d_, d$, ea, eb, ec, ed, ee, ef, eg, eh, ei, ej, ek, el, em, en, eo, ep, eq, er, es, et, eu, ev, ew, ex, ey, ez, eA, eB, eC, eD, eE, eF, eG, eH, eI, eJ, eK, eL, eM, eN, eO, eP, eQ, eR, eS, eT, eU, eV, eW, eX, eY, eZ, e_, e$, fa, fb, fc, fd, fe, ff, fg, fh, fi, fj, fk, fl, fm, fn, fo, fp, fq, fr, fs, ft, fu, fv, fw, fx, fy, fz, fA, fB, fC, fD, fE, fF, fG, fH, fI, fJ, fK, fL, fM, fN, fO, fP, fQ, fR, fS, fT, fU, fV, fW, fX, fY, fZ, f_, f$, ga, gb, gc, gd, ge, gf, gg, gh, gi, gj, gk, gl, gm, gn, go, gp, gq, gr, gs, gt, gu, gv, gw, gx, gy, gz, gA, gB, gC, gD, gE, gF, gG, gH, gI, gJ, gK, gL, gM, gN, gO, gP, gQ, gR, gS, gT, gU, gV, gW, gX, gY, gZ, g_, g$, ha, hb, hc, hd, he, hf, hg, hh, hi, hj, hk, hl, hm, hn, ho, hp, hq, hr, hs, ht, hu, hv, hw, hx, hy, hz, hA, hB, hC, hD, hE, hF, hG, hH, hI, hJ, hK, hL, hM, hN, hO, hP, hQ, hR, hS, hT, hU, hV, hW, hX, hY, hZ, h_, h$, ia, ib, ic, id, ie, if0, ig, ih, ii, ij, ik, il, im, in0, io, ip, iq, ir, is, it, iu, iv, iw, 
ix, iy, iz, iA, iB, iC, iD, iE, iF, iG, iH, iI, iJ, iK, iL, iM, iN, iO, iP, iQ, iR, iS, iT, iU, iV, iW, iX, iY, iZ, i_, i$, ja, jb, jc, jd, je, jf, jg, jh, ji, jj, jk, jl, jm, jn, jo, jp, jq, jr, js, jt, ju, jv, jw, jx, jy, jz, jA, jB, jC, jD, jE, jF, jG, jH, jI, jJ, jK, jL, jM, jN, jO, jP, jQ, jR, jS, jT, jU, jV, jW, jX, jY, jZ, j_, j$, ka, kb, kc, kd, ke, kf, kg, kh, ki, kj, kk, kl, km, kn, ko, kp, kq, kr, ks, kt, ku, kv, kw, kx, ky, kz, kA, kB, kC, kD, kE, kF, kG, kH, kI, kJ, kK, kL, kM, kN, kO, kP, kQ, kR, kS, kT, kU, kV, kW, kX, kY, kZ, k_, k$, la, lb, lc, ld, le, lf, lg, lh, li, lj, lk, ll, lm, ln, lo, lp, lq, lr, ls, lt, lu, lv, lw, lx, ly, lz, lA, lB, lC, lD, lE, lF, lG, lH, lI, lJ, lK, lL, lM, lN, lO, lP, lQ, lR, lS, lT, lU, lV, lW, lX, lY, lZ, l_, l$, ma, mb, mc, md, me, mf, mg, mh, mi, mj, mk, ml, mm, mn, mo, mp, mq, mr, ms, mt, mu, mv, mw, mx, my, mz, mA, mB, mC, mD, mE, mF, mG, mH, mI, mJ, mK, mL, mM, mN, mO, mP, mQ, mR, mS, mT, mU, mV, mW, mX, mY, mZ, m_, m$, na, nb, nc, nd, ne, nf, ng, nh, ni, nj, nk, nl, nm, nn, no, np, nq, nr, ns, nt, nu, nv, nw, nx, ny, nz, nA, nB, nC, nD, nE, nF, nG, nH, nI, nJ, nK, nL, nM, nN, nO, nP, nQ, nR, nS, nT, nU, nV, nW, nX, nY, nZ, n_, n$, oa, ob, oc, od, oe, of, og, oh, oi, oj, ok, ol, om, on, oo, op, oq, or, os, ot, ou, ov, ow, ox, oy, oz, oA, oB, oC, oD, oE, oF, oG, oH, oI, oJ, oK, oL, oM, oN, oO, oP, oQ, oR, oS, oT, oU, oV, oW, oX, oY, oZ, o_, o$, pa, pb, pc, pd, pe, pf, pg, ph, pi, pj, pk, pl, pm, pn, po, pp, pq, pr, ps, pt, pu, pv, pw, px, py, pz, pA, pB, pC, pD, pE"

args_str = '"", false, null, 0, "理工", "綜合", true, "師范", "雙一流", "211", "江蘇", "985", "農(nóng)業(yè)", "山東", "河南", "河北", "北京", "遼寧", "陜西", "四川", "廣東", "湖北", "湖南", "浙江", "安徽", "江西", 1, "黑龍江", "吉林", "上海", 2, "福建", "山西", "云南", "廣西", "貴州", "甘肅", "內(nèi)蒙古", "重慶", "天津", "新疆", "467", "496", "2025,2024,2023,2022,2021,2020", "林業(yè)", "5.8", "533", "2023-01-05T00:00:00+08:00", "23.1", "7.3", "海南", "37.9", "28.0", "4.3", "12.1", "16.8", "11.7", "3.7", "4.6", "297", "397", "21.8", "32.2", "16.6", "37.6", "24.6", "13.6", "13.9", "3.3", "5.2", "8.1", "3.9", "5.1", "5.6", "5.4", "2.6", "162", 93.5, 89.4, "寧夏", "青海", "西藏", 7, "11.3", "35.2", "9.5", "35.0", "32.7", "23.7", "33.2", "9.2", "30.6", "8.5", "22.7", "26.3", "8.0", "10.9", "26.0", "3.2", "6.8", "5.7", "13.8", "6.5", "5.5", "5.0", "13.2", "13.3", "15.6", "18.3", "3.0", "21.3", "12.0", "22.8", "3.6", "3.4", "3.5", "95", "109", "117", "129", "138", "147", "159", "185", "191", "193", "196", "213", "232", "237", "240", "267", "275", "301", "309", "314", "318", "332", "334", "339", "341", "354", "365", "371", "378", "384", "388", "403", "416", "418", "420", "423", "430", "438", "444", "449", "452", "457", "461", "465", "474", "477", "485", "487", "491", "501", "508", "513", "518", "522", "528", 83.4, "538", "555", 2021, 11, 14, 10, "12.8", "42.9", "18.8", "36.6", "4.8", "40.0", "37.7", "11.9", "45.2", "31.8", "10.4", "40.3", "11.2", "30.9", "37.8", "16.1", "19.7", "11.1", "23.8", "29.1", "0.2", "24.0", "27.3", "24.9", "39.5", "20.5", "23.4", "9.0", "4.1", "25.6", "12.9", "6.4", "18.0", "24.2", "7.4", "29.7", "26.5", "22.6", "29.9", "28.6", "10.1", "16.2", "19.4", "19.5", "18.6", "27.4", "17.1", "16.0", "27.6", "7.9", "28.7", "19.3", "29.5", "38.2", "8.9", "3.8", "15.7", "13.5", "1.7", "16.9", "33.4", "132.7", "15.2", "8.7", "20.3", "5.3", "0.3", "4.0", "17.4", "2.7", "160", "161", "164", "165", "166", "167", "168", 130.6, 105.5, 2025, "學生、家長、高校管理人員、高教研究人員等", "中國大學排名(主榜)", 25, 13, 12, "全部", "1", "88.0", 5, "2", "36.1", "25.9", 
"3", "34.3", "4", "35.5", "21.6", "39.2", "5", "10.8", "4.9", "30.4", "6", "46.2", "7", "0.8", "42.1", "8", "32.1", "22.9", "31.3", "9", "43.0", "25.7", "10", "34.5", "10.0", "26.2", "46.5", "11", "47.0", "33.5", "35.8", "25.8", "12", "46.7", "13.7", "31.4", "33.3", "13", "34.8", "42.3", "13.4", "29.4", "14", "30.7", "15", "42.6", "26.7", "16", "12.5", "17", "12.4", "44.5", "44.8", "18", "10.3", "15.8", "19", "32.3", "19.2", "20", "21", "28.8", "9.6", "22", "45.0", "23", "30.8", "16.7", "16.3", "24", "25", "32.4", "26", "9.4", "27", "33.7", "18.5", "21.9", "28", "30.2", "31.0", "16.4", "29", "34.4", "41.2", "2.9", "30", "38.4", "6.6", "31", "4.4", "17.0", "32", "26.4", "33", "6.1", "34", "38.8", "17.7", "35", "36", "38.1", "11.5", "14.9", "37", "14.3", "18.9", "38", "13.0", "39", "27.8", "33.8", "3.1", "40", "41", "28.9", "42", "28.5", "38.0", "34.0", "1.5", "43", "15.1", "44", "31.2", "120.0", "14.4", "45", "149.8", "7.5", "46", "47", "38.6", "48", "49", "25.2", "50", "19.8", "51", "5.9", "6.7", "52", "4.2", "53", "1.6", "54", "55", "20.0", "56", "39.8", "18.1", "57", "35.6", "58", "10.5", "14.1", "59", "8.2", "60", "140.8", "12.6", "61", "62", "17.6", "63", "64", "1.1", "65", "20.9", "66", "67", "68", "2.1", "69", "123.9", "27.1", "70", "25.5", "37.4", "71", "72", "73", "74", "75", "76", "27.9", "7.0", "77", "78", "79", "80", "81", "82", "83", "84", "1.4", "85", "86", "87", "88", "89", "90", "91", "92", "93", "109.0", "94", 235.7, "97", "98", "99", "100", "101", "102", "103", "104", "105", "106", "107", "108", 223.8, "111", "112", "113", "114", "115", "116", 215.5, "119", "120", "121", "122", "123", "124", "125", "126", "127", "128", 206.7, "131", "132", "133", "134", "135", "136", "137", 201, "140", "141", "142", "143", "144", "145", "146", 194.6, "149", "150", "151", "152", "153", "154", "155", "156", "157", "158", 183.3, "169", "170", "171", "172", "173", "174", "175", "176", "177", "178", "179", "180", "181", "182", "183", "184", 169.6, "187", "188", "189", 
"190", 168.1, 167, "195", 165.5, "198", "199", "200", "201", "202", "203", "204", "205", "206", "207", "208", "209", "210", "212", 160.5, "215", "216", "217", "218", "219", "220", "221", "222", "223", "224", "225", "226", "227", "228", "229", "230", "231", 153.3, "234", "235", "236", 150.8, "239", 149.9, "242", "243", "244", "245", "246", "247", "248", "249", "250", "251", "252", "253", "254", "255", "256", "257", "258", "259", "260", "261", "262", "263", "264", "265", "266", 139.7, "269", "270", "271", "272", "273", "274", 137, "277", "278", "279", "280", "281", "282", "283", "284", "285", "286", "287", "288", "289", "290", "291", "292", "293", "294", "295", "296", "300", 130.2, "303", "304", "305", "306", "307", "308", 128.4, "311", "312", "313", 125.9, "316", "317", 124.9, "320", "321", "Wuyi University", "322", "323", "324", "325", "326", "327", "328", "329", "330", "331", 120.9, 120.8, "Taizhou University", "336", "337", "338", 119.9, 119.7, "343", "344", "345", "346", "347", "348", "349", "350", "351", "352", "353", 115.4, "356", "357", "358", "359", "360", "361", "362", "363", "364", 112.6, "367", "368", "369", "370", 111, "373", "374", "375", "376", "377", 109.4, "380", "381", "382", "383", 107.6, "386", "387", 107.1, "390", "391", "392", "393", "394", "395", "396", "400", "401", "402", 104.7, "405", "406", "407", "408", "409", "410", "411", "412", "413", "414", "415", 101.2, 101.1, 100.9, "422", 100.3, "425", "426", "427", "428", "429", 99, "432", "433", "434", "435", "436", "437", 97.6, "440", "441", "442", "443", 96.5, "446", "447", "448", 95.8, "451", 95.2, "454", "455", "456", 94.8, "459", "460", 94.3, "463", "464", 93.6, "472", "473", 92.3, "476", 91.7, "479", "480", "481", "482", "483", "484", 90.7, 90.6, "489", "490", 90.2, "493", "494", "495", 89.3, "503", "504", "505", "506", "507", 87.4, "510", "511", "512", 86.8, "515", "516", "517", 86.2, "520", "521", 85.8, "524", "525", "526", "527", 84.6, "530", "531", "532", "537", 82.8, "540", "541", 
"542", "543", "544", "545", "546", "547", "548", "549", "550", "551", "552", "553", "554", 78.1, "557", "558", "559", "560", "561", "562", "563", "564", "565", "566", "567", "568", "569", "570", "571", "572", "573", "574", "575", "576", "577", "578", "579", "580", "581", "582", 4, "2025-04-15T00:00:00+08:00", "logo\u002Fannual\u002Fbcur\u002F2025.png", "軟科中國大學排名于2015年首次發(fā)布,多年來以專業(yè)、客觀、透明的優(yōu)勢贏得了高等教育領域內(nèi)外的廣泛關注和認可,已經(jīng)成為具有重要社會影響力和權(quán)威參考價值的中國大學排名領先品牌。軟科中國大學排名以服務中國高等教育發(fā)展和進步為導向,采用數(shù)百項指標變量對中國大學進行全方位、分類別、監(jiān)測式評價,向?qū)W生、家長和全社會提供及時、可靠、豐富的中國高校可比信息。", 2024, 2023, 2022, 15, 2020, 2019, 2018, 2017, 2016, 2015'

params = [p.strip() for p in params_str.split(",")]

# Split on commas that are outside double quotes, then drop the quotes
args = [p.strip().strip('"') for p in re.split(r',\s*(?=(?:[^"]*"[^"]*")*[^"]*$)', args_str)]

# Convert booleans, null, and numeric literals
for i, arg in enumerate(args):
    if arg == 'true':
        args[i] = True
    elif arg == 'false':
        args[i] = False
    elif arg == 'null':
        args[i] = None
    elif arg.isdigit():
        args[i] = int(arg)
    elif re.match(r'^\d+\.\d+$', arg):
        args[i] = float(arg)

# Map each parameter name to its argument value
mapping = dict(zip(params, args))

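with open("payload.js", "r", encoding="utf-8") as f:
    text = f.read()


# Substitute each parameter name with its argument value
def replace(text, mapping):
    for name, value in mapping.items():
        # Treat `$` as part of an identifier so that `a` does not match inside `a$`
        text = re.sub(rf'(?<![\w$]){re.escape(name)}(?![\w$])', str(value), text)
    return text


decoded = replace(text, mapping)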

# Extract the university data with regular expressions
univData = re.search(r'univData:\s*\[(.*?)\],\s*indList:', decoded, re.S)
univ_text = univData.group(1)
univ_items = re.findall(r'\{(?:[^{}]|(?:\{[^{}]*\}))*?\}', univ_text)

# Collect the university records in a list
records = []
for i, it in enumerate(univ_items, 1):
    name = re.search(r'univNameCn:"(.*?)"', it)
    province = re.search(r'province:([^,]+)', it)
    category = re.search(r'univCategory:([^,]+)', it)
    score = re.search(r'score:([0-9.]+)', it)
    records.append((
        i,
        name.group(1) if name else "",
        province.group(1) if province else "",
        category.group(1) if category else "",
        float(score.group(1)) if score else None
    ))

# Save to the database
conn = sqlite3.connect("univ.db")
cursor = conn.cursor()
cursor.execute('''CREATE TABLE IF NOT EXISTS univ (
    id INTEGER PRIMARY KEY AUTOINCREMENT,
    ranking INTEGER,
    name TEXT,
    province TEXT,
    type TEXT,
    score REAL
)''')

# Each record is (ranking, name, province, type, score)
cursor.executemany('''
    INSERT INTO univ (ranking, name, province, type, score)
    VALUES (?, ?, ?, ?, ?)
''', records)

conn.commit()
conn.close()
print("Data saved to univ.db!")

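Reflections

The main difficulty was my unfamiliarity with regular expressions: when substituting the province values, some provinces were not replaced correctly. Handling the booleans and null among the call arguments also needed care.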
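One way to make the substitution step less fragile is to replace all identifiers in a single pass instead of running one regular expression per parameter. The following is a minimal sketch under stated assumptions, not the method used above: the mapping and the payload fragment are made up, and `substitute` is a hypothetical helper.

```python
import json
import re

# Made-up mapping and payload fragment, for illustration only
mapping = {"a": "北京", "a$": "上海", "b": 93.5, "c": None, "d": True}
fragment = "{province:a,score:b},{province:a$,score:c,liked:d}"

# A JavaScript identifier may contain `$`, which `\w` does not cover
IDENT = re.compile(r"[A-Za-z_$][\w$]*")


def substitute(text, mapping):
    """Replace every identifier that is a mapping key, in one pass."""
    def repl(match):
        name = match.group(0)
        if name not in mapping:
            return name  # property names such as `province` stay untouched
        # json.dumps renders None/True/False as null/true/false and quotes strings
        return json.dumps(mapping[name], ensure_ascii=False)
    return IDENT.sub(repl, text)


decoded = substitute(fragment, mapping)
print(decoded)
```

Because each identifier is matched whole, `a` can no longer match inside `a$`, and text that has already been substituted is never scanned again. The sketch still has limits: it would also replace matching words inside existing string literals, and it writes strings with quotes, so the extraction patterns used earlier would need adjusting before it could be dropped in.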

Zhejiang Public Network Security Registration No. 33010602011771
