Deploying K8S from Binaries

I. Basic cluster environment setup

1.1: K8s high-availability cluster plan

1.1.1: Server inventory (multi-master)

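| Type (count) | IP address | Description |
| --- | --- | --- |
| ansible (2) | 192.168.134.11/12 | Cluster deployment servers, co-located with the masters |
| K8s master (2) | 192.168.134.11/12 | K8s control plane, active/standby HA through one VIP |
| Harbor (1) | 192.168.134.16 | Image registry |
| etcd (3) | 192.168.134.11/12/13 | Servers that store the K8s cluster data |
| haproxy (2) | 192.168.134.15 | High-availability proxy for the kube-apiserver |
| node (2) | 192.168.134.13/14 | Servers that actually run the containers |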

1.2: Base environment preparation

Hostname and IP configuration, kernel parameter tuning, and deployment of the load balancer and Harbor that the cluster depends on.

1.2.1: System configuration

Hostname and other system settings are omitted here.

1.2.2: High-availability load balancing

Highly available reverse proxy in front of k8s

1.2.2.1:keepalived

    root@HA:~# cat /etc/keepalived/keepalived.conf
    ! Configuration File for keepalived
    global_defs {
       notification_email {
         acassen
       }
       notification_email_from Alexandre.Cassen@firewall.loc
       smtp_server 192.168.200.1
       smtp_connect_timeout 30
       router_id LVS_DEVEL
    }

    vrrp_instance VI_1 {
        state MASTER
        interface eth0
        garp_master_delay 10
        smtp_alert
        virtual_router_id 88
        priority 100
        advert_int 1
        authentication {
            auth_type PASS
            auth_pass 1111
        }
        virtual_ipaddress {
            192.168.134.188 dev eth0 label eth0:1
            192.168.134.189 dev eth0 label eth0:2
        }
    }
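The file above is for the MASTER node. The second HA node usually runs the same file with state BACKUP and a lower priority, so the VIP only moves when the master fails. A minimal sketch, assuming the backup node uses priority 80 (any value below 100 works) and keeps virtual_router_id, auth_pass and the VIP list unchanged; make_backup_conf is a hypothetical helper name:

```shell
#!/bin/bash
# Turn the MASTER keepalived.conf (read on stdin) into a BACKUP one (stdout).
# Assumption: the backup node uses priority 80; everything else is unchanged.
make_backup_conf() {
    local priority="${1:-80}"
    sed -e 's/^\([[:space:]]*\)state MASTER/\1state BACKUP/' \
        -e "s/^\([[:space:]]*\)priority [0-9]\{1,\}/\1priority ${priority}/"
}

# Usage on the backup HA node:
#   make_backup_conf 80 < keepalived.conf.master > /etc/keepalived/keepalived.conf
#   systemctl restart keepalived
```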

1.2.2.2:haproxy

    listen stats                    # status page
        mode http
        bind 0.0.0.0:9999
        stats enable
        log global
        stats uri /haproxy-status
        stats auth haadmin:123456

    listen k8s-api-6443             # HA for the kube-apiserver
        bind 192.168.134.188:6443
        mode tcp
        #balance roundrobin
        server master1 192.168.134.11:6443 check inter 3s fall 3 rise 5
        #server master2 192.168.134.12:6443 check inter 3s fall 3 rise 5
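With only master1 enabled, the VIP has a single backend. Once master2 is running, both servers can be listed under balance roundrobin. A minimal sketch that prints such a listen section for any number of masters; gen_apiserver_listen is a hypothetical helper, and the VIP and master IPs are the ones from the plan above:

```shell
#!/bin/bash
# Print an haproxy "listen" section that balances the kube-apiserver VIP
# across all masters given as arguments after the VIP.
gen_apiserver_listen() {
    local vip="$1"; shift
    local i=1 ip
    echo "listen k8s-api-6443"
    echo "    bind ${vip}:6443"
    echo "    mode tcp"
    echo "    balance roundrobin"
    for ip in "$@"; do
        # health check every 3s; down after 3 failures, up after 5 successes
        echo "    server master${i} ${ip}:6443 check inter 3s fall 3 rise 5"
        i=$((i + 1))
    done
}

# Usage: append, validate, then restart haproxy
#   gen_apiserver_listen 192.168.134.188 192.168.134.11 192.168.134.12 >> /etc/haproxy/haproxy.cfg
#   haproxy -c -f /etc/haproxy/haproxy.cfg && systemctl restart haproxy
```

On an haproxy node that does not currently hold the VIP, binding 192.168.134.188 fails unless net.ipv4.ip_nonlocal_bind = 1 is set via sysctl.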

1.2.2.3: HTTPS for Harbor

On the Harbor node:

    root@harbor:/usr/local/src/harbor# mkdir certs
    root@harbor:/usr/local/src/harbor# cd certs/
    # generate the private key
    openssl genrsa -out /usr/local/src/harbor/certs/harbor-ca.key
    # self-signed certificate; harbor.linux.com is the Harbor domain name
    openssl req -x509 -new -nodes -key /usr/local/src/harbor/certs/harbor-ca.key -subj "/CN=harbor.linux.com" -days 7120 -out /usr/local/src/harbor/certs/harbor-ca.crt

If openssl req fails with "Can't load /root/.rnd into RNG", create the file and run the same openssl req command again:

    touch /root/.rnd

Only the modified lines of harbor.cfg are shown here:

    hostname = harbor.linux.com
    ui_url_protocol = https
    ssl_cert = /usr/local/src/harbor/certs/harbor-ca.crt
    ssl_cert_key = /usr/local/src/harbor/certs/harbor-ca.key
    harbor_admin_password = 123456

Finally run:

./install.sh

On the other nodes:

Sync the crt certificate to each client:

    mkdir -p /etc/docker/certs.d/harbor.linux.com
    scp 192.168.134.16:/usr/local/src/harbor/certs/harbor-ca.crt /etc/docker/certs.d/harbor.linux.com
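Running these two commands by hand on every node is error-prone. A sketch that fetches the certificate once from the Harbor host and pushes it to a list of nodes, assuming password-less SSH from the control node (set up in 1.3.2) to Harbor and to every node; distribute_harbor_cert is a hypothetical helper, and DRY_RUN=1 only prints the commands:

```shell
#!/bin/bash
# Copy the Harbor CA certificate to the Docker hosts so that they trust the
# self-signed certificate of harbor.linux.com.
# Assumes password-less SSH to Harbor and to every node.
# DRY_RUN=1 prints the commands instead of running them.
HARBOR_DOMAIN="harbor.linux.com"
HARBOR_IP="192.168.134.16"
CERT_SRC="/usr/local/src/harbor/certs/harbor-ca.crt"

distribute_harbor_cert() {
    local run="" node
    local dir="/etc/docker/certs.d/${HARBOR_DOMAIN}"
    local tmp="/tmp/harbor-ca.crt"
    [ "${DRY_RUN:-0}" = "1" ] && run="echo"
    # fetch the certificate from the Harbor host once
    $run scp "${HARBOR_IP}:${CERT_SRC}" "${tmp}" || return 1
    for node in "$@"; do
        $run ssh "${node}" "mkdir -p ${dir}" || return 1
        $run scp "${tmp}" "${node}:${dir}/" || return 1
    done
}

# Usage:
#   distribute_harbor_cert 192.168.134.11 192.168.134.12 192.168.134.13 192.168.134.14
```

Each node must also be able to resolve harbor.linux.com, for example through an /etc/hosts entry pointing at 192.168.134.16.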

1.3: Deployment with ansible

1.3.1: Base environment preparation

Install python2.7 on all master, node and etcd hosts:

    apt-get install python2.7 -y
    ln -sv /usr/bin/python2.7 /usr/bin/python

1.3.2: Install ansible on the master node

apt install ansible -y

    apt-get install sshpass -y

Push the public key to the target hosts (if the control node has no key pair yet, create one first with ssh-keygen):

    #!/bin/bash
    # target hosts
    IP="
    192.168.134.11
    192.168.134.12
    192.168.134.13
    192.168.134.14
    "
    for node in ${IP}; do
        echo "$node"
        sshpass -p r00tme ssh-copy-id -o StrictHostKeyChecking=no "${node}"
        if [ $? -eq 0 ]; then
            echo "$node copy success"
        else
            echo "$node copy failure"
        fi
    done

1.3.3: Orchestrate the k8s installation from the ansible control node

https://github.com/easzlab/kubeasz/blob/master/docs/setup/00-planning_and_overall_intro.md

1.3.3.1: Install docker

    #!/bin/bash
    # step 1: install some required system tools
    sudo apt-get update
    sudo apt-get -y install apt-transport-https ca-certificates curl software-properties-common
    # step 2: install the GPG key
    curl -fsSL https://mirrors.aliyun.com/docker-ce/linux/ubuntu/gpg | sudo apt-key add -
    # step 3: add the repository
    sudo add-apt-repository "deb [arch=amd64] https://mirrors.aliyun.com/docker-ce/linux/ubuntu $(lsb_release -cs) stable"
    # step 4: update and install Docker-CE
    sudo apt-get -y update
    sudo apt-get -y install docker-ce docker-ce-cli

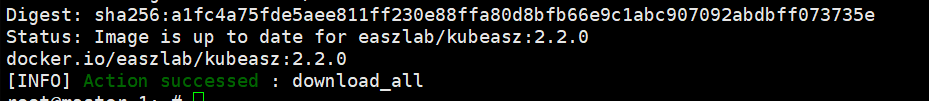

1.3.3.2: Download the offline images

    # download the helper script easzup
    export release=2.2.0
    curl -C- -fLO --retry 3 https://github.com/easzlab/kubeasz/releases/download/${release}/easzup
    chmod +x ./easzup
    # use the helper script to download everything
    ./easzup -D

1.3.3.3: Prepare the hosts file

    root@master-1:/etc/ansible# grep -v '#' hosts | grep -v '^$'
    [etcd]
    192.168.134.11 NODE_NAME=master-1
    192.168.134.12 NODE_NAME=master-2
    192.168.134.13 NODE_NAME=node-1
    [kube-master]
    192.168.134.11
    192.168.134.12
    [kube-node]
    192.168.134.13
    192.168.134.14
    [harbor]
    [ex-lb]
    192.168.134.15 LB_ROLE=backup EX_APISERVER_VIP=192.168.134.188 EX_APISERVER_PORT=6443
    [chrony]
    [all:vars]
    CONTAINER_RUNTIME="docker"
    CLUSTER_NETWORK="flannel"
    PROXY_MODE="ipvs"
    SERVICE_CIDR="10.10.0.0/16"
    CLUSTER_CIDR="172.20.0.0/16"
    NODE_PORT_RANGE="30000-60000"
    CLUSTER_DNS_DOMAIN="linux.local."
    bin_dir="/usr/bin"
    ca_dir="/etc/kubernetes/ssl"
    base_dir="/etc/ansible"
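Before running the playbooks it helps to confirm which hosts each group actually contains. ansible can do this itself with ansible kube-master --list-hosts; the sketch below does the same with plain awk on the INI file. inventory_group is a hypothetical helper; it ignores host variables, comments and blank lines, and does not expand child groups or ansible patterns:

```shell
#!/bin/bash
# List the hosts of one group in an INI-style ansible inventory.
inventory_group() {
    local file="$1" group="$2"
    awk -v g="[${group}]" '
        /^[[:space:]]*#/ || /^[[:space:]]*$/ { next }   # skip comments and blank lines
        /^\[/ { in_group = ($1 == g); next }           # section header
        in_group { print $1 }                          # first field is the host
    ' "$file"
}

# Usage:
#   for ip in $(inventory_group /etc/ansible/hosts kube-node); do ssh "$ip" hostname; done
```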

1.3.4: Deploy step by step

    root@master-1:/etc/ansible# ls
    01.prepare.yml     03.docker.yml       06.network.yml        22.upgrade.yml   90.setup.yml  99.clean.yml  dockerfiles  example    pics       tools
    02.etcd.yml        04.kube-master.yml  07.cluster-addon.yml  23.backup.yml    91.start.yml  ansible.cfg   docs         hosts      README.md
    03.containerd.yml  05.kube-node.yml    11.harbor.yml         24.restore.yml   92.stop.yml   bin           down         manifests  roles

1.3.4.1: Environment initialization

root@master-1:/etc/ansible# apt install python-pip -y

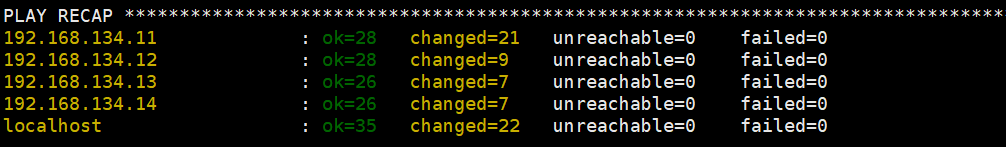

root@master-1:/etc/ansible# ansible-playbook 01.prepare.yml

1.3.4.2: Deploy the etcd cluster

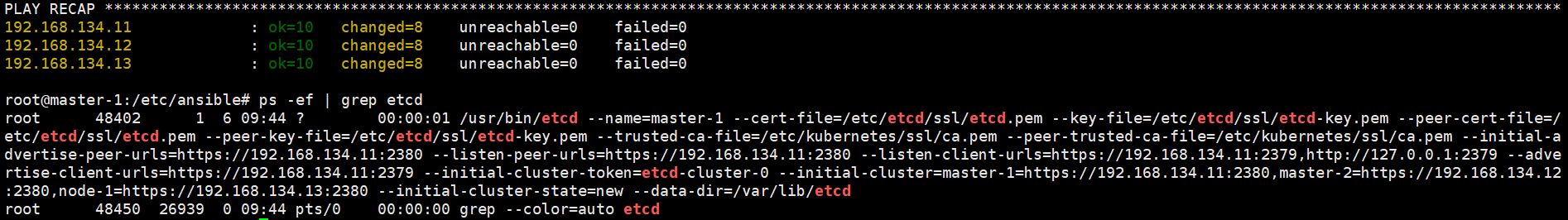

ansible-playbook 02.etcd.yml

Verify the etcd service on each etcd server:

    root@master-1:/etc/ansible# export NODE_IPS="192.168.134.11 192.168.134.12 192.168.134.13"
    root@master-1:/etc/ansible# echo $NODE_IPS
    192.168.134.11 192.168.134.12 192.168.134.13
    root@master-1:/etc/ansible# for ip in ${NODE_IPS}; do ETCDCTL_API=3 /usr/bin/etcdctl --endpoints=https://${ip}:2379 --cacert=/etc/kubernetes/ssl/ca.pem --cert=/etc/etcd/ssl/etcd.pem --key=/etc/etcd/ssl/etcd-key.pem endpoint health; done
    https://192.168.134.11:2379 is healthy: successfully committed proposal: took = 17.538879ms
    https://192.168.134.12:2379 is healthy: successfully committed proposal: took = 21.189926ms
    https://192.168.134.13:2379 is healthy: successfully committed proposal: took = 23.069667ms
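The loop above prints one line per member but always finishes successfully, so a script cannot tell whether the cluster is healthy. A sketch that wraps the same etcdctl call and returns non-zero when any member fails; check_etcd_health is a hypothetical name, and ETCDCTL is an added variable so the etcdctl path can be changed:

```shell
#!/bin/bash
# Check every etcd member; return non-zero if any member is unhealthy.
# NODE_IPS and certificate paths are the ones used above.
NODE_IPS="${NODE_IPS:-192.168.134.11 192.168.134.12 192.168.134.13}"
ETCDCTL="${ETCDCTL:-/usr/bin/etcdctl}"

check_etcd_health() {
    local ip rc=0
    for ip in ${NODE_IPS}; do
        if ! ETCDCTL_API=3 "$ETCDCTL" --endpoints="https://${ip}:2379" \
                --cacert=/etc/kubernetes/ssl/ca.pem \
                --cert=/etc/etcd/ssl/etcd.pem \
                --key=/etc/etcd/ssl/etcd-key.pem \
                endpoint health; then
            echo "etcd member ${ip} is NOT healthy" >&2
            rc=1
        fi
    done
    return "$rc"
}

# Usage:
#   check_etcd_health && echo "all etcd members are healthy"
```

To see which member is the leader, the same certificate flags with etcdctl --write-out=table endpoint status print an IS LEADER column.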

1.3.4.3: Deploy docker

root@master-1:/etc/ansible# ansible-playbook 03.docker.yml

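To use a different docker version, first extract docker-19.03.8.tar in /etc/ansible/down, overwrite the files in /etc/ansible/bin/ with the extracted binaries (cp), and finally run ansible-playbook 03.docker.yml.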

Certificate replacement

1.3.4.4: Deploy the masters

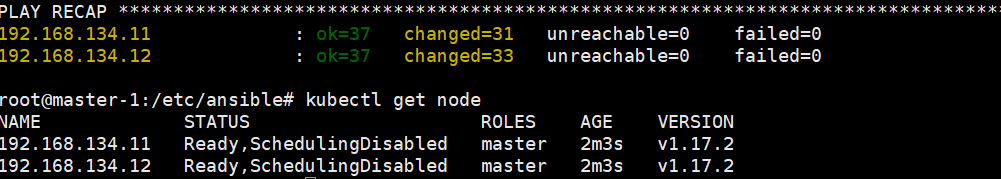

root@master-1:/etc/ansible# ansible-playbook 04.kube-master.yml

1.3.4.5: Deploy the nodes

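(1) Change the image source used during deployment. It is best to download the image first and push it to the local Harbor. The pause image is set by SANDBOX_IMAGE:

    root@master-1:/etc/ansible/roles# grep 'SANDBOX_IMAGE' ./* -R
    ./containerd/defaults/main.yml:SANDBOX_IMAGE: "mirrorgooglecontainers/pause-amd64:3.1"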
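A sketch of mirroring the pause image into Harbor and pointing SANDBOX_IMAGE at the copy. The Harbor project baseimages is an assumption (create it in Harbor first or substitute your own), and to_harbor_image and set_sandbox_image are hypothetical helpers:

```shell
#!/bin/bash
# Mirror the pause (sandbox) image into the local Harbor and point
# SANDBOX_IMAGE at it. Assumption: Harbor project "baseimages".
SRC_IMAGE="mirrorgooglecontainers/pause-amd64:3.1"
HARBOR_PROJECT="harbor.linux.com/baseimages"

# Map an upstream image to its Harbor name, keeping only the last path part.
to_harbor_image() {
    echo "${HARBOR_PROJECT}/${1##*/}"
}

# Rewrite the SANDBOX_IMAGE line of a kubeasz defaults file in place (GNU sed).
set_sandbox_image() {
    local file="$1" image="$2"
    sed -i "s#^SANDBOX_IMAGE:.*#SANDBOX_IMAGE: \"${image}\"#" "$file"
}

# Usage on the ansible control node (after docker login harbor.linux.com):
#   dst="$(to_harbor_image "$SRC_IMAGE")"
#   docker pull "$SRC_IMAGE" && docker tag "$SRC_IMAGE" "$dst" && docker push "$dst"
#   for f in $(grep -rl '^SANDBOX_IMAGE' /etc/ansible/roles); do set_sandbox_image "$f" "$dst"; done
```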

(2) Deploy the nodes:

root@master-1:/etc/ansible# ansible-playbook 05.kube-node.yml

1.3.4.6: Deploy the flannel network

ansible-playbook 06.network.yml

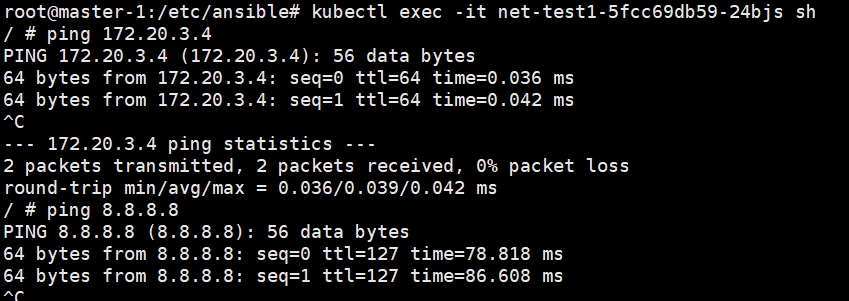

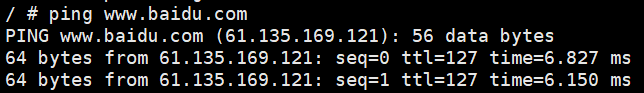

Make sure the network works:

1.3.4.7: Adding and removing master and node hosts

https://github.com/easzlab/kubeasz/blob/master/docs/op/op-master.md

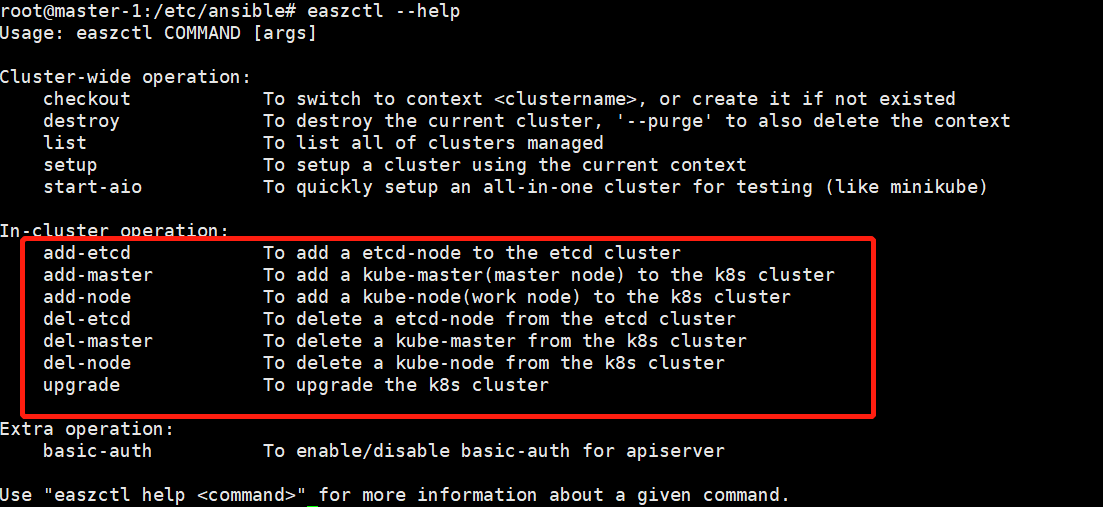

Run:

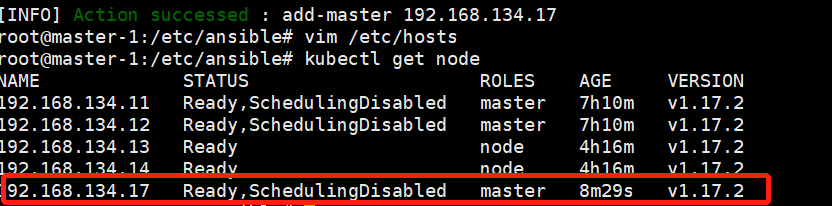

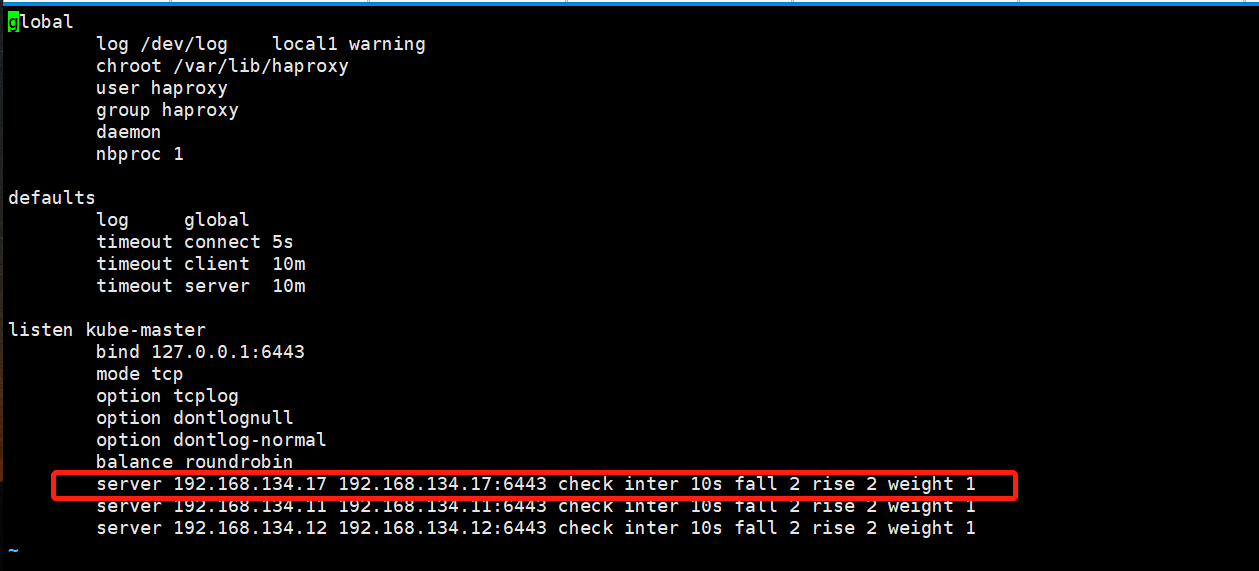

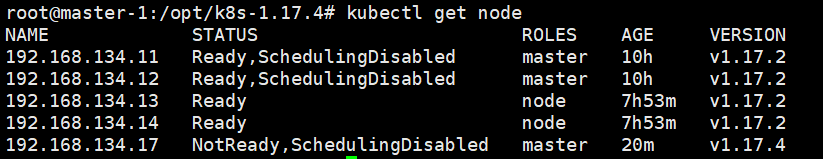

easzctl add-master 192.168.134.17   # add a master node

easzctl del-master 192.168.134.17   # remove a master node

Verification on the node hosts

1.3.4.8: Adding node hosts:

easzctl add-node 192.168.134.18

easzctl del-node 192.168.134.18

1.3.4.9: Cluster upgrade:

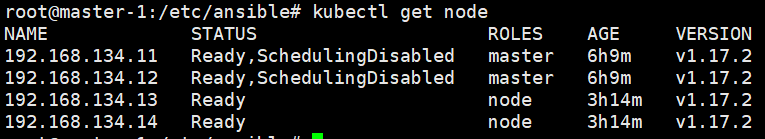

Current version

On the master nodes:

    root@master-1:/etc/ansible# /usr/bin/kube<Tab>
    kube-apiserver  kube-controller-manager  kubectl  kubelet  kube-proxy  kube-scheduler

On the node hosts:

    root@node-1:~# /usr/bin/kube<Tab>
    kubectl  kubelet  kube-proxy

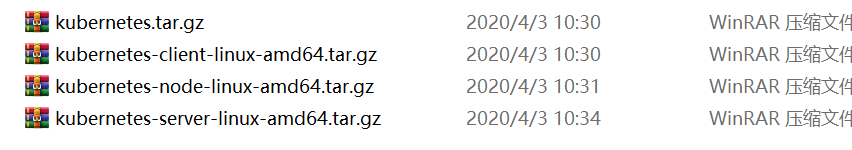

Download the binary package of the version to upgrade to.

First back up the current version:

    cd /etc/ansible/bin
    mkdir -p /opt/k8s-1.17.2 /opt/k8s-1.17.4
    cp kube-apiserver kube-controller-manager kubectl kubelet kube-proxy kube-scheduler /opt/k8s-1.17.2/   # backup 1.17.2
    cp kube-apiserver kube-controller-manager kubectl kube-proxy kube-scheduler kubelet /opt/k8s-1.17.4/   # backup 1.17.4
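A sketch of the same backup as a reusable function that creates the versioned directory and stops if a binary is missing, so an incomplete backup is noticed before anything is replaced. backup_k8s_bins is a hypothetical helper name:

```shell
#!/bin/bash
# Copy the Kubernetes binaries from SRC into a versioned backup directory,
# creating it if needed; fail if any binary is missing.
K8S_BINS="kube-apiserver kube-controller-manager kube-scheduler kube-proxy kubelet kubectl"

backup_k8s_bins() {
    local src="$1" dest="$2" bin
    mkdir -p "$dest" || return 1
    for bin in $K8S_BINS; do
        if [ ! -f "${src}/${bin}" ]; then
            echo "missing ${src}/${bin}" >&2
            return 1
        fi
        cp -p "${src}/${bin}" "${dest}/" || return 1   # -p keeps mode and timestamps
    done
}

# Usage:
#   backup_k8s_bins /etc/ansible/bin /opt/k8s-1.17.2
#   /opt/k8s-1.17.2/kube-apiserver --version   # confirm which version was saved
```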

With only a few nodes (manual replacement):

On a master node, stop the related services first, then replace the binaries:

    root@master-3:~# systemctl stop kube-apiserver kube-controller-manager kube-proxy kube-scheduler kubelet

Copy the new binaries over from another machine:

    scp kube-apiserver kube-controller-manager kubectl kubelet kube-proxy kube-scheduler 192.168.134.17:/usr/bin

Then start the services again:

    systemctl start kube-apiserver kube-controller-manager kube-proxy kube-scheduler kubelet

On a node, only the kubectl, kubelet and kube-proxy binaries need to be replaced.
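The stop, copy and start sequence above can be wrapped per host so that masters and nodes get the right binary and service lists. A sketch, assuming it runs on a machine that holds the new binaries in the current directory and has password-less SSH to the targets; upgrade_host is a hypothetical helper, and DRY_RUN=1 only prints the commands:

```shell
#!/bin/bash
# Replace the Kubernetes binaries on one host: stop services, copy the new
# binaries from the current directory, start services again.
# DRY_RUN=1 prints the commands instead of running them.
upgrade_host() {
    local role="$1" host="$2" run="" bins svcs
    [ "${DRY_RUN:-0}" = "1" ] && run="echo"
    case "$role" in
        master)
            bins="kube-apiserver kube-controller-manager kube-scheduler kube-proxy kubelet kubectl"
            svcs="kube-apiserver kube-controller-manager kube-scheduler kube-proxy kubelet" ;;
        node)
            bins="kube-proxy kubelet kubectl"
            svcs="kube-proxy kubelet" ;;
        *)
            echo "usage: upgrade_host master|node HOST" >&2
            return 1 ;;
    esac
    $run ssh "$host" "systemctl stop $svcs" || return 1
    # $bins is unquoted on purpose so each binary becomes its own argument
    $run scp $bins "${host}:/usr/bin/" || return 1
    $run ssh "$host" "systemctl start $svcs"
}

# Usage, one host at a time:
#   upgrade_host master 192.168.134.12
#   upgrade_host node 192.168.134.13
```

Upgrading one host at a time and checking kubectl get nodes (its VERSION column shows the kubelet version) before moving on keeps at least one API server behind the VIP available.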

The official ansible method:

https://github.com/easzlab/kubeasz/blob/master/docs/op/upgrade.md

Copy the new binaries over the corresponding files in /etc/ansible/bin on the ansible control node.

Then, on the ansible control node, run ansible-playbook -t upgrade_k8s 22.upgrade.yml

root@master-1:/etc/ansible# easzctl upgrade

Verification:

1.3.5: Dashboard add-on

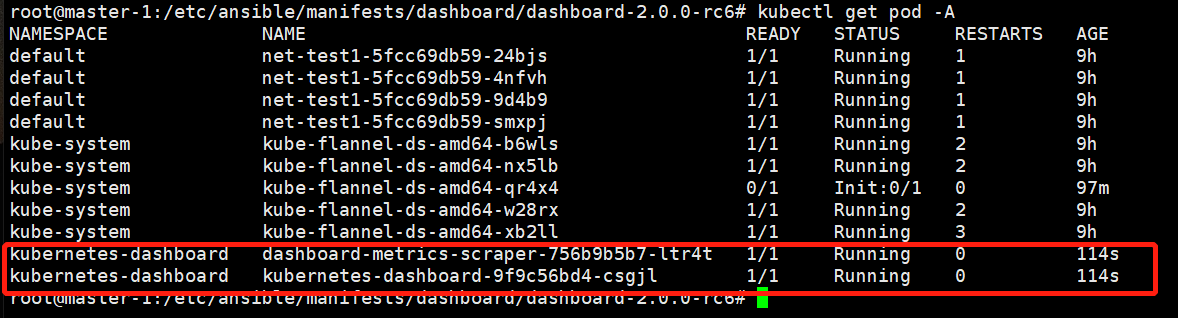

    root@master-1:~# cd /etc/ansible/manifests/dashboard
    mkdir dashboard-2.0.0-rc6
    cd dashboard-2.0.0-rc6

Copy the manifests into this directory:

    root@master-1:/etc/ansible/manifests/dashboard/dashboard-2.0.0-rc6# ll
    total 20
    drwxr-xr-x 2 root root 4096 Apr 11 23:45 ./
    drwxrwxr-x 5 root root 4096 Apr 11 23:15 ../
    -rw-r--r-- 1 root root  374 Mar 28 17:44 admin-user.yml
    -rw-r--r-- 1 root root 7661 Apr 11 23:45 dashboard-2.0.0-rc6.yml

Edit the config file so that the images are pulled from Harbor:

    root@master-1:/etc/ansible/manifests/dashboard/dashboard-2.0.0-rc6# grep image dashboard-2.0.0-rc6.yml
              image: harbor.linux.com/dashboard/kubernetesui/dashboard:v2.0.0-rc6
              imagePullPolicy: IfNotPresent
              image: harbor.linux.com/dashboard/kubernetesui/metrics-scraper:v1.0.3

Apply:

    kubectl apply -f .
    Error from server (NotFound): error when creating "admin-user.yml": namespaces "kubernetes-dashboard" not found

The error appears because kubectl processes the files in filename order, so admin-user.yml is applied before dashboard-2.0.0-rc6.yml has created the kubernetes-dashboard namespace. Running the same command a second time succeeds:

    kubectl apply -f .

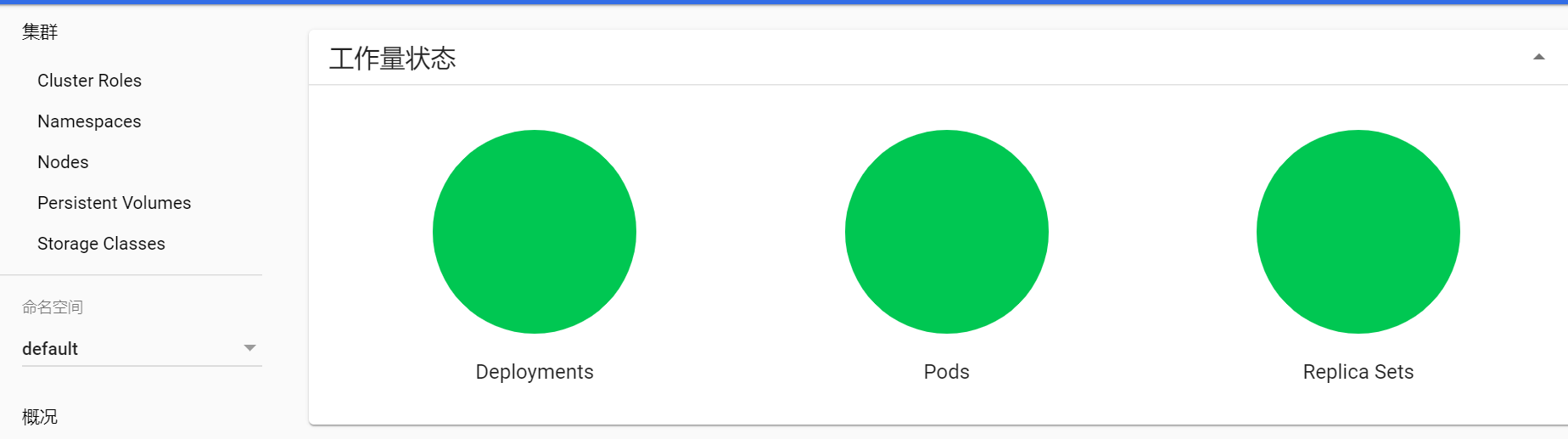

Verification:

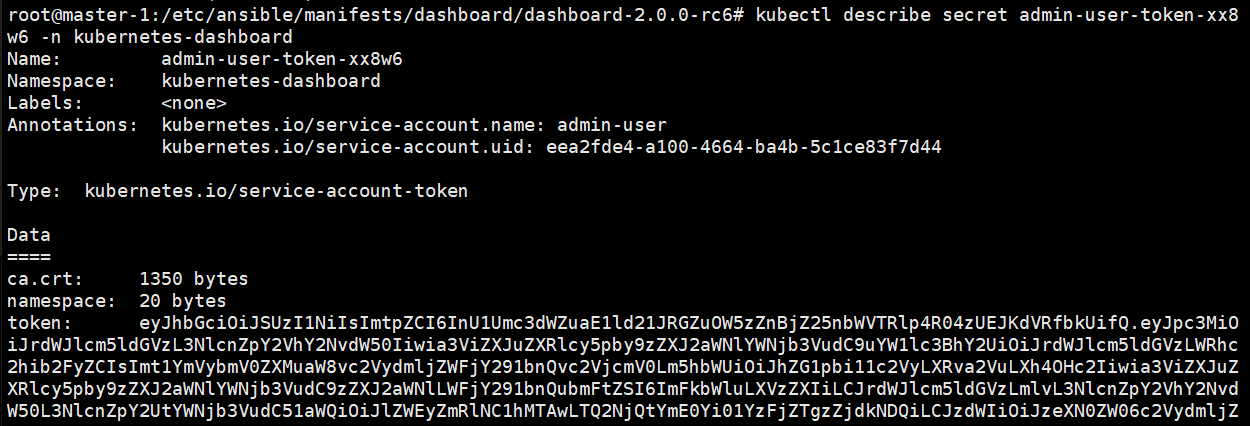

Get the token:

    root@master-1:/etc/ansible/manifests/dashboard/dashboard-2.0.0-rc6# kubectl get secret -A | grep admin
    kubernetes-dashboard   admin-user-token-xx8w6   kubernetes.io/service-account-token   3   2m46s
    kubectl describe secret admin-user-token-xx8w6 -n kubernetes-dashboard

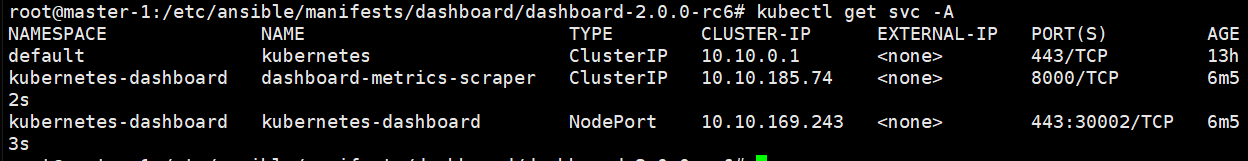
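kubectl describe secret prints the token together with ca.crt and namespace. A small sketch that keeps only the token value, which is convenient for pasting into the dashboard login page; extract_token is a hypothetical helper:

```shell
#!/bin/bash
# Read `kubectl describe secret` output on stdin and print only the token.
extract_token() {
    awk '$1 == "token:" { print $2 }'
}

# Usage:
#   secret="$(kubectl -n kubernetes-dashboard get secret | awk '/^admin-user-token-/ { print $1 }')"
#   kubectl -n kubernetes-dashboard describe secret "$secret" | extract_token
```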

Get the port:

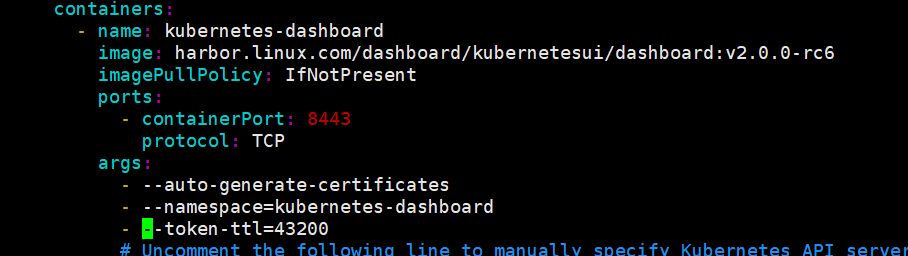

Set how long a token login session is kept:

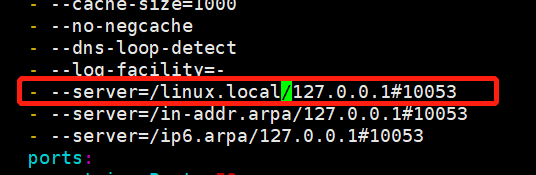

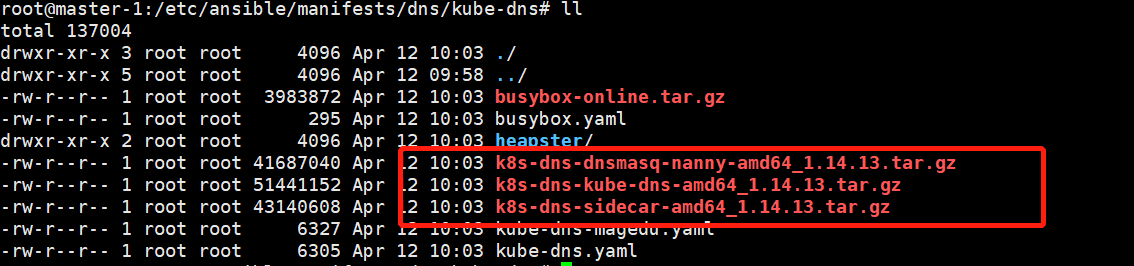

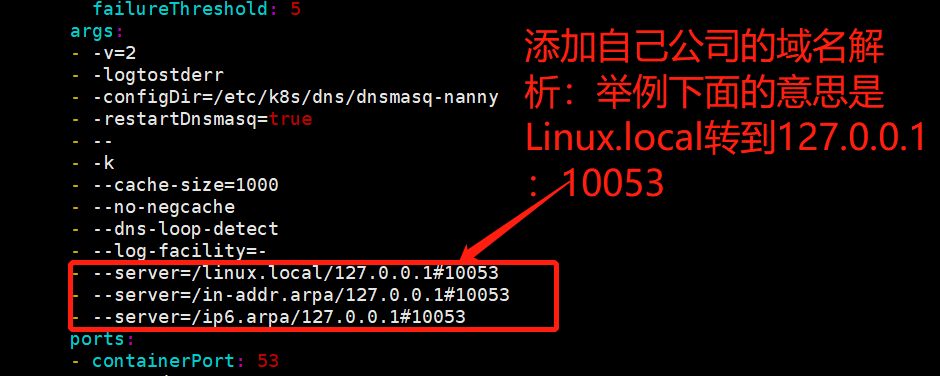
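One way to do this in Dashboard v2 is the --token-ttl container argument, given in seconds (the default is 900, i.e. 15 minutes). A sketch that adds it to the manifest, assuming the args list contains - --auto-generate-certificates as in the upstream manifest; 43200 (12 hours) is an example value and set_token_ttl is a hypothetical helper:

```shell
#!/bin/bash
# Set --token-ttl (seconds) in a dashboard manifest (GNU sed, edits in place).
set_token_ttl() {
    local file="$1" ttl="$2"
    # drop any existing --token-ttl line, then add one right after
    # --auto-generate-certificates with the same indentation
    sed -i -e '/- --token-ttl=/d' \
        -e "s/^\([[:space:]]*\)- --auto-generate-certificates$/&\n\1- --token-ttl=${ttl}/" "$file"
}

# Usage:
#   set_token_ttl dashboard-2.0.0-rc6.yml 43200 && kubectl apply -f dashboard-2.0.0-rc6.yml
```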

1.3.6: DNS service

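1. Components (three containers running in the same Pod):

kube-dns: resolves Service names

dns-dnsmasq: provides DNS caching, which reduces the load on kubedns and improves performance

dns-sidecar: periodically checks the health of kubedns and dnsmasq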

1.3.6.1: Deploy kube-dns

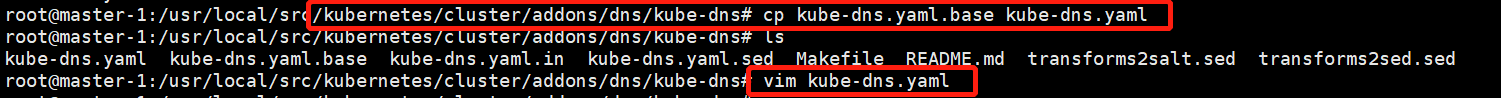

Extract the k8s binary package downloaded earlier for the upgrade and locate the kube-dns.yaml.base file:

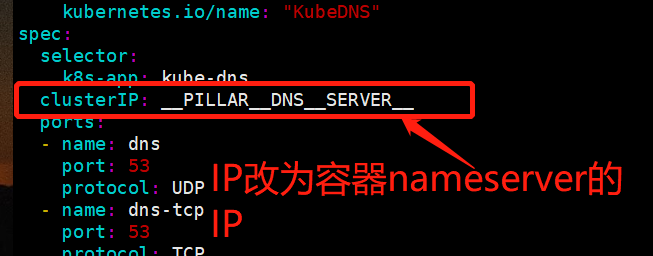

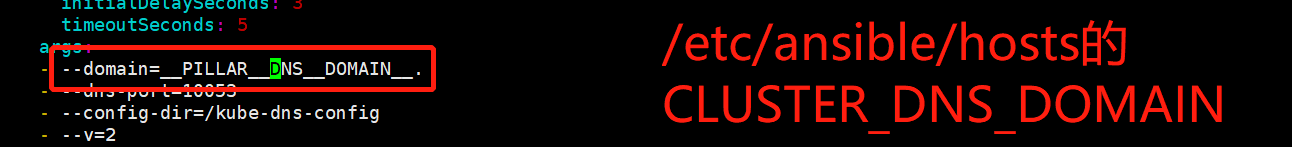

Replace every occurrence of __PILLAR__DNS__DOMAIN__ with the cluster domain (linux.local, from CLUSTER_DNS_DOMAIN in the hosts file).
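A sketch of the substitution with sed. Besides the domain, the file contains __PILLAR__DNS__SERVER__ (the kube-dns Service IP) and, in recent versions, __PILLAR__DNS__MEMORY__LIMIT__. The value 10.10.0.2 assumes kubeasz's convention of using the second address of SERVICE_CIDR and must match the cluster DNS address configured for kubelet; render_kube_dns is a hypothetical helper:

```shell
#!/bin/bash
# Fill the placeholders of kube-dns.yaml.base (stdin) and write the result to stdout.
# Assumptions: DNS Service IP 10.10.0.2; the memory limit is an example value
# and is only used if the placeholder exists in your copy of the file.
render_kube_dns() {
    local domain="$1" dns_ip="$2" mem_limit="${3:-170Mi}"
    sed -e "s/__PILLAR__DNS__DOMAIN__/${domain}/g" \
        -e "s/__PILLAR__DNS__SERVER__/${dns_ip}/g" \
        -e "s/__PILLAR__DNS__MEMORY__LIMIT__/${mem_limit}/g"
}

# Usage:
#   render_kube_dns linux.local 10.10.0.2 < kube-dns.yaml.base > kube-dns.yaml
#   grep -n '__PILLAR__' kube-dns.yaml   # should print nothing
```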

Create the CoreDNS and kube-dns directories:

    cd /etc/ansible/manifests/dns
    mkdir CoreDNS kube-dns

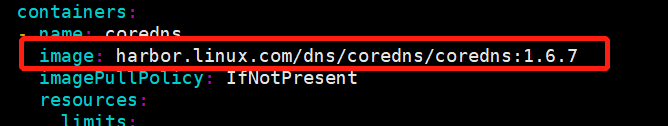

Load the images marked with red boxes in the screenshot, tag them and push them to Harbor, and replace each image: value in the config file with the corresponding Harbor address.

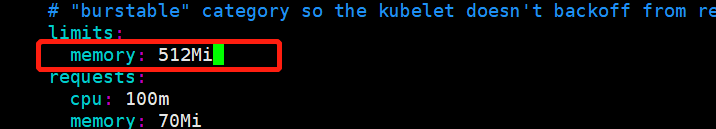

Resources: 2C, 4G (2 CPU cores, 4 GB of memory).

Finally:

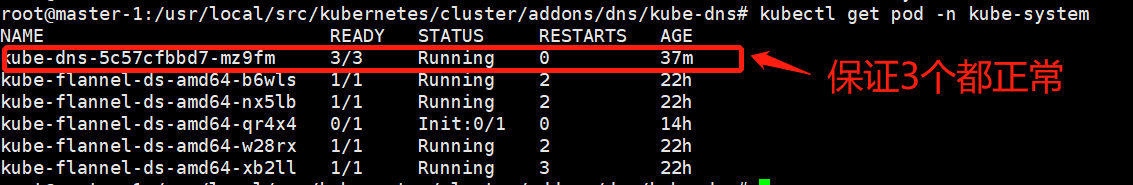

kubectl apply -f kube-dns.yaml

Verification:

1.3.6.2: Deploy CoreDNS:

https://github.com/coredns/deployment/tree/master/kubernetes

Here the existing kube-dns is replaced by CoreDNS. If kube-dns was never installed, just change the CoreDNS image address and apply the yml file directly.

git clone https://github.com/coredns/deployment.git

cd deployment/kubernetes

./deploy.sh > coredns.linux.yml

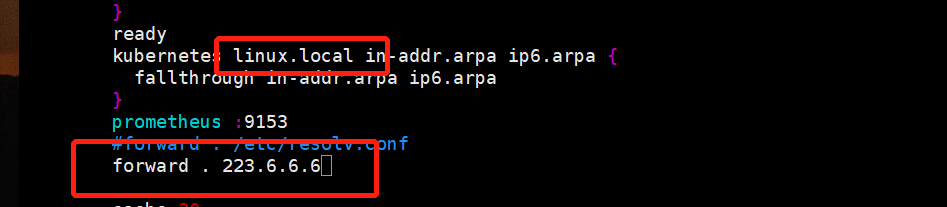

vim coredns.linux.yml   # modify the config as in the screenshot below

docker pull coredns/coredns:1.6.7
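A sketch of the remaining image steps: push the pulled image to Harbor and rewrite the image line of the generated manifest. The target harbor.linux.com/baseimages is an assumption and set_coredns_image is a hypothetical helper:

```shell
#!/bin/bash
# Push the CoreDNS image to the local Harbor and point the manifest at it.
# Assumption: Harbor project "baseimages".
COREDNS_SRC="coredns/coredns:1.6.7"
COREDNS_DST="harbor.linux.com/baseimages/coredns:1.6.7"

# Replace any coredns "image:" line in the manifest with $2 (GNU sed, in place).
set_coredns_image() {
    local file="$1" image="$2"
    sed -i "s#^\([[:space:]]*\)image: .*coredns.*#\1image: ${image}#" "$file"
}

# Usage:
#   docker tag "$COREDNS_SRC" "$COREDNS_DST" && docker push "$COREDNS_DST"
#   set_coredns_image coredns.linux.yml "$COREDNS_DST"
#   grep 'image:' coredns.linux.yml
```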

kubectl delete -f kube-dns.yaml

kubectl apply -f coredns.linux.yml

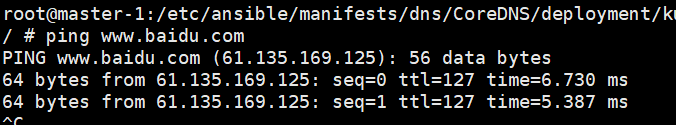

Verification:

浙公網(wǎng)安備 33010602011771號
