Binary deployment of Kubernetes 1.28

Environment:

| Role | IP | Operating system | Installed software |
|---|---|---|---|
| master | 172.173.10.110 | CentOS Linux release 7.9.2009 (Core) | kube-apiserver, kube-controller-manager, kube-scheduler, etcd |
| node1 | 172.173.10.111 | CentOS Linux release 7.9.2009 (Core) | kubelet, kube-proxy |
| node2 | 172.173.10.112 | CentOS Linux release 7.9.2009 (Core) | kubelet, kube-proxy |

1. Environment initialization

Hostnames (run the matching command on each host):

hostnamectl set-hostname master

hostnamectl set-hostname node1

hostnamectl set-hostname node2

Hostname resolution:

cat <<EOF>> /etc/hosts

172.173.10.110 master

172.173.10.111 node1

172.173.10.112 node2

EOF
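The heredoc above appends unconditionally, so running it a second time leaves duplicate entries in /etc/hosts. A minimal sketch of an idempotent alternative follows. The function name `add_host_entry` is hypothetical and not part of the original guide.

```shell
#!/bin/sh
# add_host_entry FILE IP NAME  (hypothetical helper)
# Appends "IP NAME" to FILE unless a non-comment line already lists NAME
# as one of its hostnames.
add_host_entry() {
    file=$1 ip=$2 name=$3
    if awk -v n="$name" '
            $1 ~ /^#/ { next }
            { for (i = 2; i <= NF; i++) if ($i == n) found = 1 }
            END { exit !found }
        ' "$file" 2>/dev/null; then
        return 0    # already present, nothing to do
    fi
    printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Usage on a real host (as root):
#   add_host_entry /etc/hosts 172.173.10.110 master
#   add_host_entry /etc/hosts 172.173.10.111 node1
#   add_host_entry /etc/hosts 172.173.10.112 node2
```

The check only looks at hostnames, so an existing entry with a different IP is left alone rather than overwritten.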

Disable the firewall and SELinux:

systemctl disable firewalld --now && setenforce 0 && sed -i 's/^SELINUX=enforcing/SELINUX=disabled/' /etc/selinux/config

Time synchronization:

timedatectl set-timezone 'Asia/Shanghai'

sed -i '/^server [0-3].centos.pool.ntp.org iburst/d' /etc/chrony.conf && sed -i '/^# Please consider joining the pool/a server ntp.aliyun.com iburst' /etc/chrony.conf && systemctl restart chronyd

Disable swap:

swapoff -a && sysctl -w vm.swappiness=0

sed -ri '/^[^#]*swap/s@^@#@' /etc/fstab

Tune the SSH service:

sed -i 's@#UseDNS yes@UseDNS no@g' /etc/ssh/sshd_config

sed -i 's@^GSSAPIAuthentication yes@GSSAPIAuthentication no@g' /etc/ssh/sshd_config

Configure passwordless SSH login:

ssh-keygen -t rsa

ssh-copy-id master

ssh-copy-id node1

ssh-copy-id node2

Configure limits on all nodes:

cat >> /etc/security/limits.conf <<'EOF'

* soft nofile 65536

* hard nofile 131072

* soft nproc 655350

* hard nproc 655350

* soft memlock unlimited

* hard memlock unlimited

EOF

Kernel tuning:

cat > /etc/sysctl.d/k8s.conf <<'EOF'

# The following 3 parameters are kernel parameters that containerd depends on

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

net.ipv6.conf.all.disable_ipv6 = 1

fs.may_detach_mounts = 1

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.netfilter.nf_conntrack_max=2310720

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl =15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

# net.ipv4.ip_conntrack_max is a legacy key name; the conntrack limit is set by net.netfilter.nf_conntrack_max above

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

EOF

sysctl --system
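Long sysctl files tend to pick up repeated keys, and when a key is assigned twice the later assignment is the one that ends up applied. The sketch below lists keys that appear more than once in a file such as /etc/sysctl.d/k8s.conf. The function name `duplicate_sysctl_keys` is hypothetical.

```shell
#!/bin/sh
# duplicate_sysctl_keys FILE  (hypothetical helper)
# Prints every key that is assigned more than once in a sysctl-style file.
duplicate_sysctl_keys() {
    # Strip comments, drop blank lines, reduce "key = value" to "key",
    # trim leading whitespace, then report keys seen at least twice.
    sed -e 's/#.*//' \
        -e '/^[[:space:]]*$/d' \
        -e 's/[[:space:]]*=.*//' \
        -e 's/^[[:space:]]*//' "$1" |
        sort | uniq -d
}

# Usage:
#   duplicate_sysctl_keys /etc/sysctl.d/k8s.conf
```

An empty result means every key is set exactly once.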

Configure the yum repositories:

rm -rf /etc/yum.repos.d/*

curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.huaweicloud.com/repository/conf/CentOS-7-anon.repo

curl -o /etc/yum.repos.d/epel.repo https://mirrors.aliyun.com/repo/epel-7.repo

sed -i "s/#baseurl/baseurl/g" /etc/yum.repos.d/epel.repo

sed -i "s/metalink/#metalink/g" /etc/yum.repos.d/epel.repo

sed -ri "s@https?://download.fedoraproject.org/pub@https://mirrors.huaweicloud.com@g" /etc/yum.repos.d/epel.repo

yum clean all && yum makecache

Install common utilities:

yum -y install wget lrzsz vim net-tools bash-completion bind-utils

Kernel upgrade:

Download link: Coreix Mirrors

wget https://mirrors.coreix.net/elrepo-archive-archive/kernel/el7/x86_64/RPMS/kernel-lt-5.4.203-1.el7.elrepo.x86_64.rpm

wget https://mirrors.coreix.net/elrepo-archive-archive/kernel/el7/x86_64/RPMS/kernel-lt-devel-5.4.203-1.el7.elrepo.x86_64.rpm

yum -y localinstall *.rpm

rm -rf ./kernel-lt*

# Change the boot order

awk -F\' '$1=="menuentry " {print $2}' /etc/grub2.cfg

grub2-set-default 0

Install dependencies:

yum -y install ipvsadm ipset sysstat conntrack libseccomp

Create the configuration file listing the modules to load at boot:

cat > /etc/modules-load.d/ipvs.conf << 'EOF'

ip_vs

ip_vs_lc

ip_vs_wlc

ip_vs_rr

ip_vs_wrr

ip_vs_lblc

ip_vs_lblcr

ip_vs_dh

ip_vs_sh

ip_vs_fo

ip_vs_nq

ip_vs_sed

ip_vs_ftp

br_netfilter

nf_conntrack

ip_tables

ip_set

xt_set

ipt_set

ipt_rpfilter

ipt_REJECT

ipip

EOF

Rename the network interface (optional):

vim /etc/default/grub

...

GRUB_CMDLINE_LINUX="... net.ifnames=0 biosdevname=0" # note: append net.ifnames=0 biosdevname=0 at the end of the existing value

# Regenerate the configuration with grub2-mkconfig.

grub2-mkconfig -o /boot/grub2/grub.cfg

# Update the network interface configuration

mv /etc/sysconfig/network-scripts/ifcfg-{ens32,eth0}

sed -i 's#ens32#eth0#g' /etc/sysconfig/network-scripts/ifcfg-eth0

cat /etc/sysconfig/network-scripts/ifcfg-eth0

# Reboot the operating system

reboot

Verify the loaded modules:

lsmod | grep --color=auto -e ip_vs -e nf_conntrack
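The grep above shows what is loaded but does not say what is missing. A small sketch that compares a modules-load.d list against /proc/modules follows. The function name `missing_modules` is hypothetical, and modules compiled into the kernel never appear in /proc/modules, so a name reported here is not necessarily a problem.

```shell
#!/bin/sh
# missing_modules WANTED_FILE [LOADED_FILE]  (hypothetical helper)
# Prints module names listed in WANTED_FILE that are absent from the first
# column of LOADED_FILE (default: /proc/modules).
missing_modules() {
    wanted=$1
    loaded=${2:-/proc/modules}
    awk '
        NR == FNR { have[$1] = 1; next }              # first file: loaded modules
        NF && $1 !~ /^#/ && !($1 in have) { print $1 }  # second file: wanted list
    ' "$loaded" "$wanted"
}

# Usage:
#   missing_modules /etc/modules-load.d/ipvs.conf
```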

uname -r

ifconfig

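At this point the base environment is ready. In a lab environment it is worth taking a snapshot here so that you can roll back easily.

2. Install the k8s components

2.1 Install the certificate tools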

Download the cfssl tools: Tags · cloudflare/cfssl · GitHub

wget https://github.com/cloudflare/cfssl/releases/download/v1.5.0/cfssl-certinfo_1.5.0_linux_amd64

wget https://github.com/cloudflare/cfssl/releases/download/v1.5.0/cfssljson_1.5.0_linux_amd64

wget https://github.com/cloudflare/cfssl/releases/download/v1.5.0/cfssl_1.5.0_linux_amd64

chmod +x cfssl*

mv cfssl_1.5.0_linux_amd64 /usr/local/bin/cfssl

mv cfssl-certinfo_1.5.0_linux_amd64 /usr/local/bin/cfssl-certinfo

mv cfssljson_1.5.0_linux_amd64 /usr/local/bin/cfssljson

ll /usr/local/bin/cfssl*

cfssl version

2.2 Deploy etcd on the master node

2.2 Create the CA certificate

The CA acts as the certificate authority.

2.2.1 Configure the CA certificate request file

mkdir -p /data/k8s-work/

cd /data/k8s-work/

cat > ca-csr.json <<"EOF"

{

"CN": "kubernetes",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "kubemsb",

"OU": "CN"

}

],

"ca": {

"expiry": "87600h"

}

}

EOF

2.2.2 Create the CA certificate

cfssl gencert -initca ca-csr.json | cfssljson -bare ca

2.2.3 Configure the CA signing policy

cfssl print-defaults config > ca-config.json

cat > ca-config.json <<"EOF"

{

"signing": {

"default": {

"expiry": "87600h"

},

"profiles": {

"kubernetes": {

"usages": [

"signing",

"key encipherment",

"server auth",

"client auth"

],

"expiry": "87600h"

}

}

}

}

EOF

2.3 Create the etcd certificate

2.3.1 Configure the etcd certificate request file

cat > etcd-csr.json <<"EOF"

{

"CN": "etcd",

"hosts": [

"127.0.0.1",

"172.173.10.110"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "kubemsb",

"OU": "CN"

}]

}

EOF

2.3.2 Generate the etcd certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes etcd-csr.json | cfssljson -bare etcd

This generates etcd.csr, etcd-key.pem, and etcd.pem.

This guide uses etcd 3.5.11:

wget https://github.com/etcd-io/etcd/releases/download/v3.5.11/etcd-v3.5.11-linux-amd64.tar.gz

1. Extract the etcd binaries into a directory on PATH

tar -xf etcd-v3.5.11-linux-amd64.tar.gz --strip-components=1 -C /usr/local/bin etcd-v3.5.11-linux-amd64/etcd{,ctl}

etcdctl version

2. Prepare the configuration files

mkdir -p /etc/etcd/ssl /var/lib/etcd/default.etcd

cd /data/k8s-work/

cp ca*.pem /etc/etcd/ssl

cp etcd*.pem /etc/etcd/ssl

# Configuration file

cat <<EOF> /etc/etcd/etcd.conf

#[Member]

ETCD_NAME="etcd1"

ETCD_DATA_DIR="/var/lib/etcd/default.etcd"

ETCD_LISTEN_PEER_URLS="https://172.173.10.110:2380"

ETCD_LISTEN_CLIENT_URLS="https://172.173.10.110:2379,http://127.0.0.1:2379"

#[Clustering]

ETCD_INITIAL_ADVERTISE_PEER_URLS="https://172.173.10.110:2380"

ETCD_ADVERTISE_CLIENT_URLS="https://172.173.10.110:2379"

#ETCD_INITIAL_CLUSTER="etcd1=https://172.173.10.110:2380,etcd2=https://172.173.10.111:2380,etcd3=https://172.173.10.112:2380"

ETCD_INITIAL_CLUSTER="etcd1=https://172.173.10.110:2380"

ETCD_INITIAL_CLUSTER_TOKEN="etcd-cluster"

ETCD_INITIAL_CLUSTER_STATE="new"

EOF

# systemd unit file

cat > /usr/lib/systemd/system/etcd.service <<"EOF"

[Unit]

Description=Etcd Server

After=network.target

After=network-online.target

Wants=network-online.target

[Service]

Type=notify

EnvironmentFile=-/etc/etcd/etcd.conf

WorkingDirectory=/var/lib/etcd/

ExecStart=/usr/local/bin/etcd \
  --cert-file=/etc/etcd/ssl/etcd.pem \
  --key-file=/etc/etcd/ssl/etcd-key.pem \
  --trusted-ca-file=/etc/etcd/ssl/ca.pem \
  --peer-cert-file=/etc/etcd/ssl/etcd.pem \
  --peer-key-file=/etc/etcd/ssl/etcd-key.pem \
  --peer-trusted-ca-file=/etc/etcd/ssl/ca.pem \
  --peer-client-cert-auth \
  --client-cert-auth

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

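Notes:

ETCD_NAME: node name, unique within the cluster

ETCD_DATA_DIR: data directory

ETCD_LISTEN_PEER_URLS: listen address for peer (cluster) traffic

ETCD_LISTEN_CLIENT_URLS: listen address for client traffic

ETCD_INITIAL_ADVERTISE_PEER_URLS: peer address advertised to the cluster

ETCD_ADVERTISE_CLIENT_URLS: client address advertised to clients

ETCD_INITIAL_CLUSTER: addresses of the cluster members

ETCD_INITIAL_CLUSTER_TOKEN: cluster token

ETCD_INITIAL_CLUSTER_STATE: state when joining; new creates a new cluster, existing joins an existing one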
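For a multi-member cluster, ETCD_INITIAL_CLUSTER is a comma-separated list of name=peer-URL pairs, as in the commented-out line of the configuration file. The sketch below builds that string from a list of IPs. It assumes members are named etcd1, etcd2, ... in argument order and that peers use port 2380 over https; the function name `etcd_initial_cluster` is hypothetical.

```shell
#!/bin/sh
# etcd_initial_cluster IP...  (hypothetical helper)
# Prints "etcd1=https://IP1:2380,etcd2=https://IP2:2380,..." for the given IPs.
etcd_initial_cluster() {
    i=0
    out=
    for ip in "$@"; do
        i=$((i + 1))
        # ${out:+$out,} adds a separating comma only once out is non-empty.
        out="${out:+$out,}etcd$i=https://$ip:2380"
    done
    printf '%s\n' "$out"
}

# Usage:
#   etcd_initial_cluster 172.173.10.110 172.173.10.111 172.173.10.112
```

Each member's ETCD_NAME must match the name used for its entry in this list.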

Start:

systemctl daemon-reload

systemctl enable etcd --now

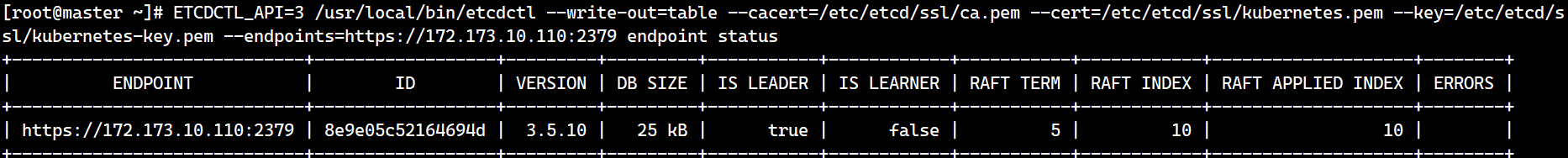

# Verify

ETCDCTL_API=3 /usr/local/bin/etcdctl --write-out=table --cacert=/etc/etcd/ssl/ca.pem --cert=/etc/etcd/ssl/etcd.pem --key=/etc/etcd/ssl/etcd-key.pem --endpoints=https://172.173.10.110:2379 endpoint status

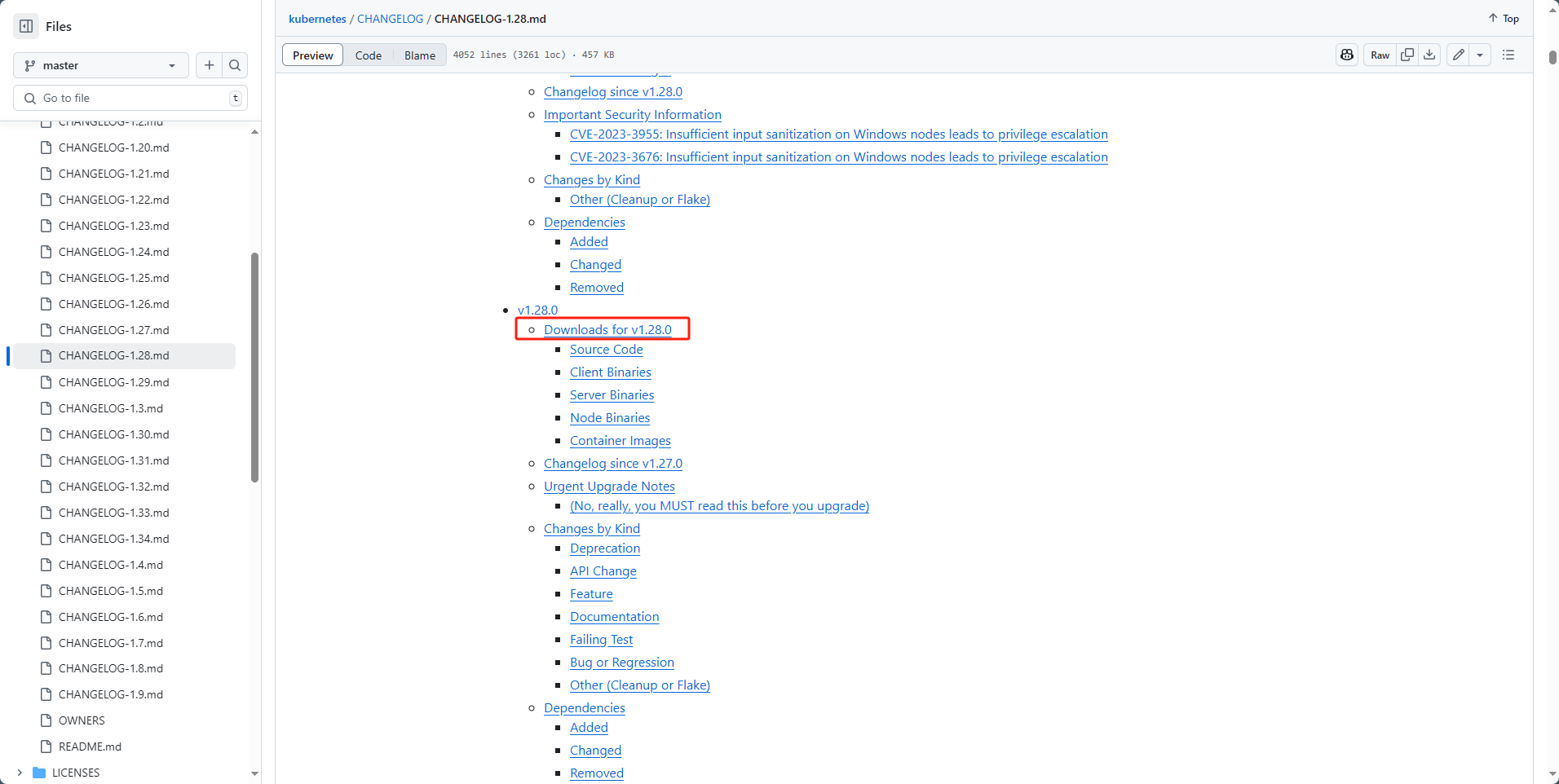

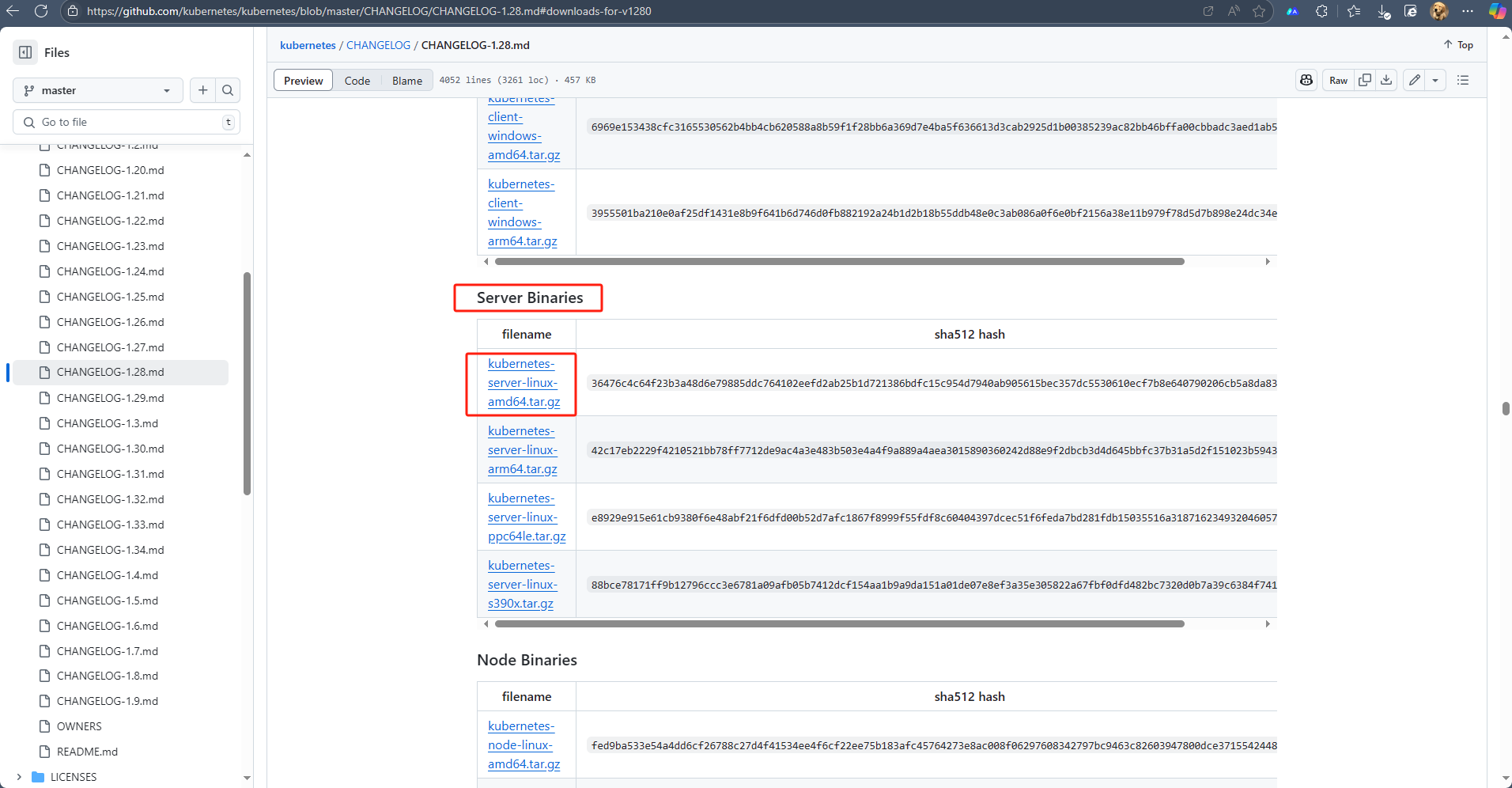

2.4 Deploy the Kubernetes cluster

Kubernetes package download: Kubernetes

tar -xvf kubernetes-server-linux-amd64.tar.gz

cd kubernetes/server/bin/

cp kube-apiserver kube-controller-manager kube-scheduler kubectl /usr/local/bin/

Prepare the configuration directories:

mkdir -p /etc/kubernetes/

mkdir -p /etc/kubernetes/ssl

mkdir -p /var/log/kubernetes

2.4.1 Install the API server

Create the apiserver certificate request file:

cd /data/k8s-work/

cat > kube-apiserver-csr.json << "EOF"

{

"CN": "kubernetes",

"hosts": [

"127.0.0.1",

"172.173.10.110",

"172.173.10.111",

"172.173.10.112",

"10.96.0.1",

"kubernetes",

"kubernetes.default",

"kubernetes.default.svc",

"kubernetes.default.svc.cluster",

"kubernetes.default.svc.cluster.local"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "kubemsb",

"OU": "CN"

}

]

}

EOF
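The 10.96.0.1 entry in the hosts list is the first address of the Service CIDR (10.96.0.0/16, passed later as --service-cluster-ip-range), which is the ClusterIP that the built-in kubernetes Service receives. If you change the CIDR, the certificate entry has to change with it. A minimal sketch for deriving that address from an IPv4 CIDR follows; the function name `first_service_ip` is hypothetical.

```shell
#!/bin/sh
# first_service_ip CIDR  (hypothetical helper)
# Prints the first usable address of an IPv4 CIDR (network address + 1).
# The function body runs in a subshell so its variables do not leak.
first_service_ip() (
    IFS=./ read -r a b c d prefix <<EOF
$1
EOF
    ip=$(( (a << 24) | (b << 16) | (c << 8) | d ))
    mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
    first=$(( (ip & mask) + 1 ))
    printf '%d.%d.%d.%d\n' \
        $(( (first >> 24) & 255 )) $(( (first >> 16) & 255 )) \
        $(( (first >> 8) & 255 ))  $(( first & 255 ))
)

# Usage:
#   first_service_ip 10.96.0.0/16
```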

2.4.2 Generate the apiserver certificate and token file

This generates kube-apiserver.csr, kube-apiserver-key.pem, and kube-apiserver.pem:

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-apiserver-csr.json | cfssljson -bare kube-apiserver

# Generate token.csv

cat > token.csv << EOF

$(head -c 16 /dev/urandom | od -An -t x | tr -d ' '),kubelet-bootstrap,10001,"system:kubelet-bootstrap"

EOF
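Each line of token.csv has the form token,user,uid,"groups". A quick sanity check before starting the apiserver can catch a truncated or empty token. The sketch below assumes the token is the 32 lowercase hex characters produced by the command above, which is this guide's convention rather than a Kubernetes requirement; the function name `check_token_csv` is hypothetical.

```shell
#!/bin/sh
# check_token_csv FILE  (hypothetical helper)
# Returns non-zero and prints a message if any non-blank line has fewer than
# three comma-separated fields or a first field that is not 32 hex characters.
check_token_csv() {
    awk -F, '
        NF == 0 { next }    # ignore blank lines
        NF < 3  { print "line " NR ": expected at least token,user,uid"; bad = 1; next }
        $1 !~ /^[0-9a-f]+$/ || length($1) != 32 {
            print "line " NR ": token is not 32 hex characters"; bad = 1
        }
        END { exit bad }
    ' "$1"
}

# Usage:
#   check_token_csv token.csv && echo "token.csv looks well-formed"
```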

Create the apiserver service configuration file:

cat > /etc/kubernetes/kube-apiserver.conf <<"EOF"

KUBE_APISERVER_OPTS="--enable-admission-plugins=NamespaceLifecycle,NodeRestriction,LimitRanger,ServiceAccount,DefaultStorageClass,ResourceQuota \

--anonymous-auth=false \

--bind-address=172.173.10.110 \

--advertise-address=172.173.10.110 \

--secure-port=6443 \

--authorization-mode=Node,RBAC \

--runtime-config=api/all=true \

--enable-bootstrap-token-auth=true \

--service-cluster-ip-range=10.96.0.0/16 \

--token-auth-file=/etc/kubernetes/token.csv \

--service-node-port-range=30000-32767 \

--tls-cert-file=/etc/kubernetes/ssl/kube-apiserver.pem \

--tls-private-key-file=/etc/kubernetes/ssl/kube-apiserver-key.pem \

--client-ca-file=/etc/kubernetes/ssl/ca.pem \

--kubelet-client-certificate=/etc/kubernetes/ssl/kube-apiserver.pem \

--kubelet-client-key=/etc/kubernetes/ssl/kube-apiserver-key.pem \

--service-account-key-file=/etc/kubernetes/ssl/ca-key.pem \

--service-account-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \

--service-account-issuer=api \

--etcd-cafile=/etc/etcd/ssl/ca.pem \

--etcd-certfile=/etc/etcd/ssl/etcd.pem \

--etcd-keyfile=/etc/etcd/ssl/etcd-key.pem \

--etcd-servers=https://172.173.10.110:2379 \

--allow-privileged=true \

--apiserver-count=1 \

--audit-log-maxage=30 \

--audit-log-maxbackup=3 \

--audit-log-maxsize=100 \

--audit-log-path=/var/log/kube-apiserver-audit.log \

--event-ttl=1h \

--v=4"

EOF

systemd unit file:

vim /usr/lib/systemd/system/kube-apiserver.service

[Unit]

Description=Kubernetes API Server

Documentation=https://github.com/kubernetes/kubernetes

After=etcd.service

Wants=etcd.service

[Service]

EnvironmentFile=-/etc/kubernetes/kube-apiserver.conf

ExecStart=/usr/local/bin/kube-apiserver $KUBE_APISERVER_OPTS

Restart=on-failure

RestartSec=5

Type=notify

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

Copy the certificates:

cd /data/k8s-work/

cp ca*.pem /etc/kubernetes/ssl/

cp kube-apiserver*.pem /etc/kubernetes/ssl/

cp token.csv /etc/kubernetes/

Start:

systemctl daemon-reload

systemctl enable --now kube-apiserver

systemctl status kube-apiserver

Verify:

curl --insecure https://172.173.10.110:6443/

2.5 Deploy kubectl

2.5.1 Create the kubectl certificate request file

cd /data/k8s-work/

cat > admin-csr.json << "EOF"

{

"CN": "admin",

"hosts": [],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:masters",

"OU": "system"

}

]

}

EOF

2.5.2 Generate the certificate files

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes admin-csr.json | cfssljson -bare admin

2.5.3 Copy the files to the target directory

cp admin*.pem /etc/kubernetes/ssl/

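2.5.4 Generate the kube.config file

kube.config is the kubectl configuration file. It holds everything needed to reach the apiserver: the apiserver address, the CA certificate, and the client certificate kubectl itself uses.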

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://172.173.10.110:6443 --kubeconfig=kube.config

kubectl config set-credentials admin --client-certificate=admin.pem --client-key=admin-key.pem --embed-certs=true --kubeconfig=kube.config

kubectl config set-context kubernetes --cluster=kubernetes --user=admin --kubeconfig=kube.config

kubectl config use-context kubernetes --kubeconfig=kube.config

2.5.5 Prepare the kubectl configuration file and create the role binding

mkdir -p ~/.kube

cp kube.config ~/.kube/config

kubectl create clusterrolebinding kube-apiserver:kubelet-apis --clusterrole=system:kubelet-api-admin --user kubernetes --kubeconfig=/root/.kube/config

2.5.6 Check the cluster status

# Show cluster information

kubectl cluster-info

# Show component status

kubectl get componentstatuses

# List resource objects in all namespaces

kubectl get all --all-namespaces

2.6 Deploy kube-controller-manager

2.6.1 Create the kube-controller-manager certificate request file

cd /data/k8s-work

cat > kube-controller-manager-csr.json << "EOF"

{

"CN": "system:kube-controller-manager",

"key": {

"algo": "rsa",

"size": 2048

},

"hosts": [

"127.0.0.1",

"172.173.10.110"

],

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:kube-controller-manager",

"OU": "system"

}

]

}

EOF

2.6.2 Generate the kube-controller-manager certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-controller-manager-csr.json | cfssljson -bare kube-controller-manager

2.6.3 Create kube-controller-manager.kubeconfig for kube-controller-manager

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://172.173.10.110:6443 --kubeconfig=kube-controller-manager.kubeconfig

kubectl config set-credentials system:kube-controller-manager --client-certificate=kube-controller-manager.pem --client-key=kube-controller-manager-key.pem --embed-certs=true --kubeconfig=kube-controller-manager.kubeconfig

kubectl config set-context system:kube-controller-manager --cluster=kubernetes --user=system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig

kubectl config use-context system:kube-controller-manager --kubeconfig=kube-controller-manager.kubeconfig

2.6.4 Create the kube-controller-manager configuration file

cat > kube-controller-manager.conf << "EOF"

KUBE_CONTROLLER_MANAGER_OPTS=" \

--secure-port=10257 \

--bind-address=127.0.0.1 \

--kubeconfig=/etc/kubernetes/kube-controller-manager.kubeconfig \

--service-cluster-ip-range=10.96.0.0/16 \

--cluster-name=kubernetes \

--cluster-signing-cert-file=/etc/kubernetes/ssl/ca.pem \

--cluster-signing-key-file=/etc/kubernetes/ssl/ca-key.pem \

--allocate-node-cidrs=true \

--cluster-cidr=10.244.0.0/16 \

--root-ca-file=/etc/kubernetes/ssl/ca.pem \

--service-account-private-key-file=/etc/kubernetes/ssl/ca-key.pem \

--leader-elect=true \

--feature-gates=RotateKubeletServerCertificate=true \

--controllers=*,bootstrapsigner,tokencleaner \

--tls-cert-file=/etc/kubernetes/ssl/kube-controller-manager.pem \

--tls-private-key-file=/etc/kubernetes/ssl/kube-controller-manager-key.pem \

--use-service-account-credentials=true \

--v=2"

EOF

2.6.5 Create the systemd unit file

vim kube-controller-manager.service

[Unit]

Description=Kubernetes Controller Manager

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=/etc/kubernetes/kube-controller-manager.conf

ExecStart=/usr/local/bin/kube-controller-manager $KUBE_CONTROLLER_MANAGER_OPTS

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

Copy the configuration:

cp kube-controller-manager*.pem /etc/kubernetes/ssl/

cp kube-controller-manager.kubeconfig /etc/kubernetes/

cp kube-controller-manager.conf /etc/kubernetes/

cp kube-controller-manager.service /usr/lib/systemd/system/

Start:

systemctl daemon-reload

systemctl start kube-controller-manager

systemctl enable kube-controller-manager

systemctl status kube-controller-manager

2.7 Deploy kube-scheduler

2.7.1 Create the kube-scheduler certificate request file

cd /data/k8s-work

cat > kube-scheduler-csr.json << "EOF"

{

"CN": "system:kube-scheduler",

"hosts": [

"127.0.0.1",

"172.173.10.110"

],

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "system:kube-scheduler",

"OU": "system"

}

]

}

EOF

2.7.2 Generate the kube-scheduler certificate

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-scheduler-csr.json | cfssljson -bare kube-scheduler

2.7.3 Create the kubeconfig for kube-scheduler

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://172.173.10.110:6443 --kubeconfig=kube-scheduler.kubeconfig

kubectl config set-credentials system:kube-scheduler --client-certificate=kube-scheduler.pem --client-key=kube-scheduler-key.pem --embed-certs=true --kubeconfig=kube-scheduler.kubeconfig

kubectl config set-context system:kube-scheduler --cluster=kubernetes --user=system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig

kubectl config use-context system:kube-scheduler --kubeconfig=kube-scheduler.kubeconfig

2.7.4 Create the service configuration file

cat > kube-scheduler.conf << "EOF"

KUBE_SCHEDULER_OPTS=" \

--kubeconfig=/etc/kubernetes/kube-scheduler.kubeconfig \

--leader-elect=true \

--v=2"

EOF

2.7.5 Create the systemd unit file

vim kube-scheduler.service

[Unit]

Description=Kubernetes Scheduler

Documentation=https://github.com/kubernetes/kubernetes

[Service]

EnvironmentFile=-/etc/kubernetes/kube-scheduler.conf

ExecStart=/usr/local/bin/kube-scheduler $KUBE_SCHEDULER_OPTS

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

2.7.6 Copy the configuration

cp kube-scheduler*.pem /etc/kubernetes/ssl/

cp kube-scheduler.kubeconfig /etc/kubernetes/

cp kube-scheduler.conf /etc/kubernetes/

cp kube-scheduler.service /usr/lib/systemd/system/

2.7.7 Start

systemctl daemon-reload

systemctl enable --now kube-scheduler

systemctl status kube-scheduler

Verify:

kubectl get cs

3. Deploy the worker nodes

3.1 Install Docker on the worker nodes

3.1.1 Installation

curl -o /etc/yum.repos.d/docker-ce.repo https://mirrors.huaweicloud.com/docker-ce/linux/centos/docker-ce.repo

sed -i 's+download.docker.com+mirrors.huaweicloud.com/docker-ce+' /etc/yum.repos.d/docker-ce.repo

yum makecache fast

yum -y install docker-ce-26.1.4

systemctl enable --now docker

3.1.2 Modify the Docker configuration

cat << EOF | sudo tee /etc/docker/daemon.json

{

"exec-opts": ["native.cgroupdriver=systemd"]

}

EOF

systemctl restart docker

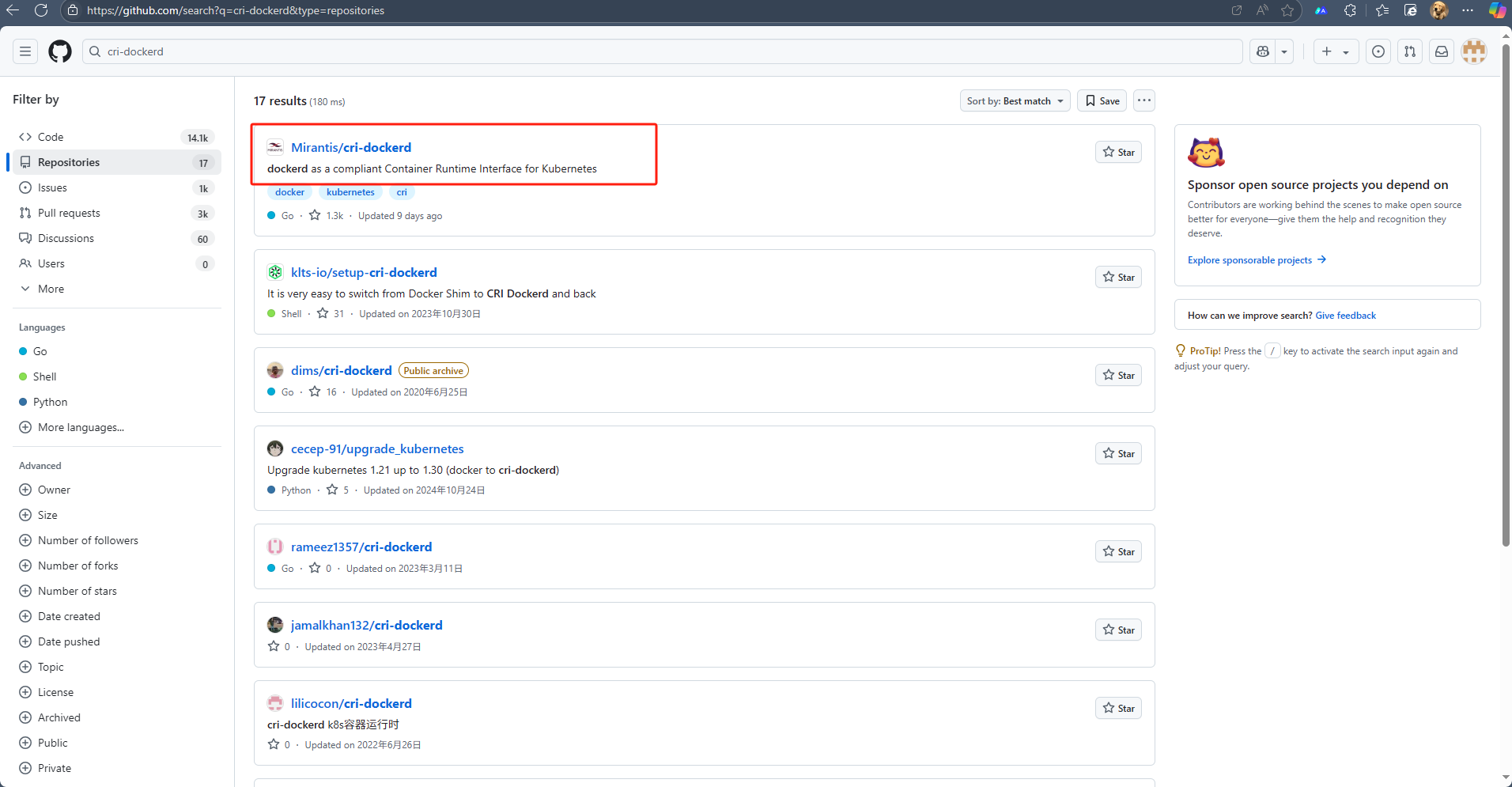

3.1.3 Install cri-dockerd

wget https://github.com/Mirantis/cri-dockerd/releases/download/v0.3.9/cri-dockerd-0.3.9-3.el7.x86_64.rpm

yum localinstall cri-dockerd-0.3.9-3.el7.x86_64.rpm -y

Modify the configuration:

vi /usr/lib/systemd/system/cri-docker.service

# Change the start parameters to use a mirror registry inside China

ExecStart=/usr/bin/cri-dockerd --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.9 --container-runtime-endpoint fd://

Start:

systemctl enable --now cri-docker

systemctl status cri-docker

Under /run you can see cri-dockerd.sock; this is the socket kubelet later uses to talk to Docker.

[root@node1 ~]# ls /run/cri-dockerd.sock

/run/cri-dockerd.sock

(Skip) Alternatively, deploy from the binary release:

# Distribute the binary

tar -zxvf cri-dockerd-0.3.9.amd64.tgz

for i in node1 node2;do scp cri-dockerd/cri-dockerd $i:/usr/bin/;done

# systemd unit files

cd /data/k8s-work

cat >cri-docker.service<<'EOF'

[Unit]

Description=CRI interface for Docker Application Container Engine

Documentation=https://docs.mirantis.com

After=network.target docker.service

Requires=docker.service

[Service]

ExecStart=/usr/bin/cri-dockerd --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.9 --container-runtime-endpoint fd://

Restart=always

StartLimitInterval=0

RestartSec=10

[Install]

WantedBy=multi-user.target

EOF

cat>cri-docker.socket<<'EOF'

[Unit]

Description=CRI Docker Socket for the API

[Socket]

ListenStream=/run/cri-dockerd.sock

SocketMode=0660

SocketUser=root

SocketGroup=docker

[Install]

WantedBy=sockets.target

EOF

for i in node1 node2;do scp cri-docker.service $i:/etc/systemd/system/;done

for i in node1 node2;do scp cri-docker.socket $i:/etc/systemd/system/;done

Start on the worker nodes:

systemctl daemon-reload

systemctl enable --now cri-docker.socket cri-docker.service

3.2 Deploy kubelet

Run on the master node:

3.2.1 Create kubelet-bootstrap.kubeconfig

cd /data/k8s-work

BOOTSTRAP_TOKEN=$(awk -F "," '{print $1}' /etc/kubernetes/token.csv)

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://172.173.10.110:6443 --kubeconfig=kubelet-bootstrap.kubeconfig

kubectl config set-credentials kubelet-bootstrap --token=${BOOTSTRAP_TOKEN} --kubeconfig=kubelet-bootstrap.kubeconfig

kubectl config set-context default --cluster=kubernetes --user=kubelet-bootstrap --kubeconfig=kubelet-bootstrap.kubeconfig

kubectl config use-context default --kubeconfig=kubelet-bootstrap.kubeconfig

kubectl create clusterrolebinding cluster-system-anonymous --clusterrole=cluster-admin --user=kubelet-bootstrap

kubectl create clusterrolebinding kubelet-bootstrap --clusterrole=system:node-bootstrapper --user=kubelet-bootstrap --kubeconfig=kubelet-bootstrap.kubeconfig

Back on the worker nodes

node1:

mkdir -p /etc/kubernetes/ssl

cat > /etc/kubernetes/kubelet.json << "EOF"

{

"kind": "KubeletConfiguration",

"apiVersion": "kubelet.config.k8s.io/v1beta1",

"authentication": {

"x509": {

"clientCAFile": "/etc/kubernetes/ssl/ca.pem"

},

"webhook": {

"enabled": true,

"cacheTTL": "2m0s"

},

"anonymous": {

"enabled": false

}

},

"authorization": {

"mode": "Webhook",

"webhook": {

"cacheAuthorizedTTL": "5m0s",

"cacheUnauthorizedTTL": "30s"

}

},

"address": "172.173.10.111",

"port": 10250,

"readOnlyPort": 10255,

"cgroupDriver": "systemd",

"hairpinMode": "promiscuous-bridge",

"serializeImagePulls": false,

"clusterDomain": "cluster.local.",

"clusterDNS": ["10.96.0.2"]

}

EOF

node2:

mkdir -p /etc/kubernetes/ssl

cat > /etc/kubernetes/kubelet.json << "EOF"

{

"kind": "KubeletConfiguration",

"apiVersion": "kubelet.config.k8s.io/v1beta1",

"authentication": {

"x509": {

"clientCAFile": "/etc/kubernetes/ssl/ca.pem"

},

"webhook": {

"enabled": true,

"cacheTTL": "2m0s"

},

"anonymous": {

"enabled": false

}

},

"authorization": {

"mode": "Webhook",

"webhook": {

"cacheAuthorizedTTL": "5m0s",

"cacheUnauthorizedTTL": "30s"

}

},

"address": "172.173.10.112",

"port": 10250,

"readOnlyPort": 10255,

"cgroupDriver": "systemd",

"hairpinMode": "promiscuous-bridge",

"serializeImagePulls": false,

"clusterDomain": "cluster.local.",

"clusterDNS": ["10.96.0.2"]

}

EOF

3.2.2 Create the kubelet systemd unit file

Create the kubelet unit file on both worker nodes:

mkdir /var/lib/kubelet

cat > /usr/lib/systemd/system/kubelet.service << "EOF"

[Unit]

Description=Kubernetes Kubelet

Documentation=https://github.com/kubernetes/kubernetes

After=docker.service

Requires=docker.service

[Service]

WorkingDirectory=/var/lib/kubelet

ExecStart=/usr/local/bin/kubelet \
  --bootstrap-kubeconfig=/etc/kubernetes/kubelet-bootstrap.kubeconfig \
  --cert-dir=/etc/kubernetes/ssl \
  --kubeconfig=/etc/kubernetes/kubelet.kubeconfig \
  --config=/etc/kubernetes/kubelet.json \
  --container-runtime-endpoint=unix:///run/cri-dockerd.sock \
  --rotate-certificates \
  --pod-infra-container-image=registry.aliyuncs.com/google_containers/pause:3.9 \
  --v=2

Restart=on-failure

RestartSec=5

[Install]

WantedBy=multi-user.target

EOF

Copy the configuration over from the master node (run in /data/k8s-work):

# Copy the configuration

for i in node1 node2;do scp kubelet-bootstrap.kubeconfig $i:/etc/kubernetes/;done

for i in node1 node2;do scp ca.pem $i:/etc/kubernetes/ssl;done

# Copy the binary:

cd /root/kubernetes/server/bin

for i in node1 node2;do scp kubelet $i:/usr/local/bin/;done

Then start kubelet on the worker nodes:

systemctl daemon-reload

systemctl restart kubelet

systemctl enable --now kubelet

systemctl status kubelet

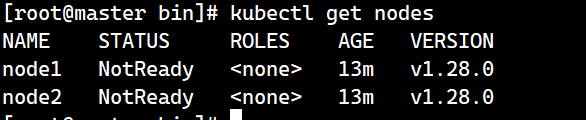

Verify:

Run the check on the master node:

kubectl get nodes

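After kubelet starts, the kubelet.kubeconfig file is generated automatically under /etc/kubernetes, and the automatically issued certificates (for example kubelet-client-2025-07-02-14-17-11.pem, kubelet.crt, and kubelet.key) appear under ssl.

# If a node later misbehaves and its certificate must be reissued, delete the certificates under ssl first; otherwise the node fails to join the cluster.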

3.3 Deploy kube-proxy

3.3.1 Create the kube-proxy certificate request file

Generate the certificate on the master node:

cd /data/k8s-work/

cat > kube-proxy-csr.json << "EOF"

{

"CN": "system:kube-proxy",

"key": {

"algo": "rsa",

"size": 2048

},

"names": [

{

"C": "CN",

"ST": "Beijing",

"L": "Beijing",

"O": "kubemsb",

"OU": "CN"

}

]

}

EOF

Generate the certificate:

cfssl gencert -ca=ca.pem -ca-key=ca-key.pem -config=ca-config.json -profile=kubernetes kube-proxy-csr.json | cfssljson -bare kube-proxy

3.3.2 Create the kubeconfig file

kubectl config set-cluster kubernetes --certificate-authority=ca.pem --embed-certs=true --server=https://172.173.10.110:6443 --kubeconfig=kube-proxy.kubeconfig

kubectl config set-credentials kube-proxy --client-certificate=kube-proxy.pem --client-key=kube-proxy-key.pem --embed-certs=true --kubeconfig=kube-proxy.kubeconfig

kubectl config set-context default --cluster=kubernetes --user=kube-proxy --kubeconfig=kube-proxy.kubeconfig

kubectl config use-context default --kubeconfig=kube-proxy.kubeconfig

3.3.3 Create the service configuration file

Create it on each worker node:

node1:

cat > /etc/kubernetes/kube-proxy.yaml << "EOF"
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 172.173.10.111
clientConnection:
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
clusterCIDR: 10.244.0.0/16
healthzBindAddress: 172.173.10.111:10256
kind: KubeProxyConfiguration
metricsBindAddress: 172.173.10.111:10249
mode: "ipvs"
EOF

node2:

cat > /etc/kubernetes/kube-proxy.yaml << "EOF"
apiVersion: kubeproxy.config.k8s.io/v1alpha1
bindAddress: 172.173.10.112
clientConnection:
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
clusterCIDR: 10.244.0.0/16
healthzBindAddress: 172.173.10.112:10256
kind: KubeProxyConfiguration
metricsBindAddress: 172.173.10.112:10249
mode: "ipvs"
EOF
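The two files differ only in the node IP, so they can be rendered from a single template instead of being edited by hand on each node. A minimal sketch follows, reusing the field values from this guide; the function name `render_kube_proxy_config` is hypothetical.

```shell
#!/bin/sh
# render_kube_proxy_config NODE_IP  (hypothetical helper)
# Prints a KubeProxyConfiguration for the given node IP on stdout.
render_kube_proxy_config() {
    node_ip=$1
    cat <<EOF
apiVersion: kubeproxy.config.k8s.io/v1alpha1
kind: KubeProxyConfiguration
bindAddress: $node_ip
clientConnection:
  kubeconfig: /etc/kubernetes/kube-proxy.kubeconfig
clusterCIDR: 10.244.0.0/16
healthzBindAddress: $node_ip:10256
metricsBindAddress: $node_ip:10249
mode: "ipvs"
EOF
}

# Usage on a worker node (as root):
#   render_kube_proxy_config 172.173.10.111 > /etc/kubernetes/kube-proxy.yaml
```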

3.3.4 Create the systemd unit file

Create it on each worker node:

# Create the kube-proxy working directory referenced by the unit file

mkdir -p /var/lib/kube-proxy

cat > /usr/lib/systemd/system/kube-proxy.service << "EOF"

[Unit]

Description=Kubernetes Kube-Proxy Server

Documentation=https://github.com/kubernetes/kubernetes

After=network.target

[Service]

WorkingDirectory=/var/lib/kube-proxy

ExecStart=/usr/local/bin/kube-proxy \
  --config=/etc/kubernetes/kube-proxy.yaml \
  --v=2

Restart=on-failure

RestartSec=5

LimitNOFILE=65536

[Install]

WantedBy=multi-user.target

EOF

3.3.5 Sync the files to the worker nodes

master:

cd /root/kubernetes/server/bin

for i in node1 node2;do scp kube-proxy $i:/usr/local/bin/; done

cd /data/k8s-work

for i in node1 node2;do scp kube-proxy.kubeconfig $i:/etc/kubernetes/; done

for i in node1 node2;do scp kube-proxy*pem $i:/etc/kubernetes/ssl; done

Start the service on the worker nodes:

systemctl daemon-reload

systemctl enable --now kube-proxy

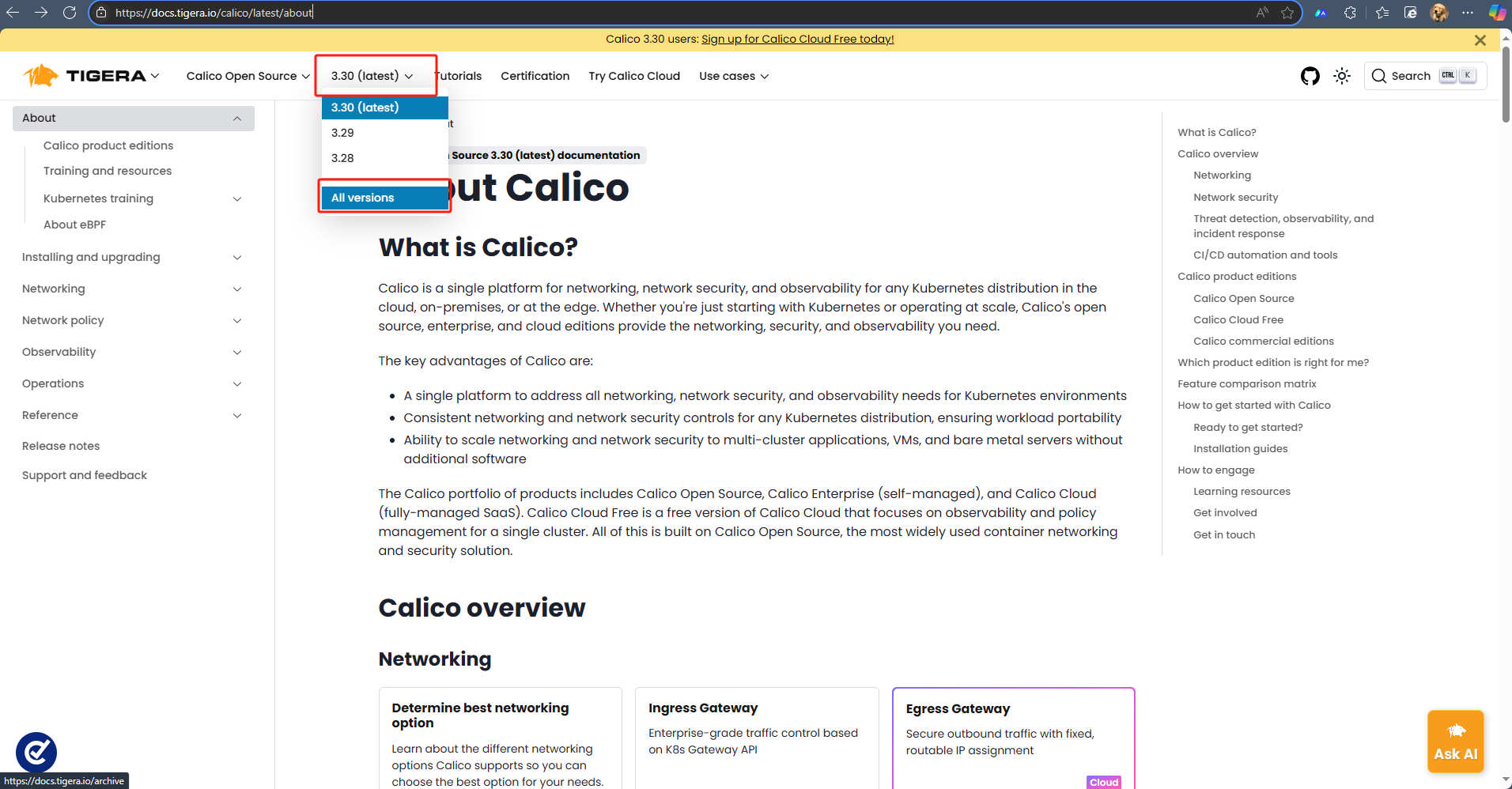

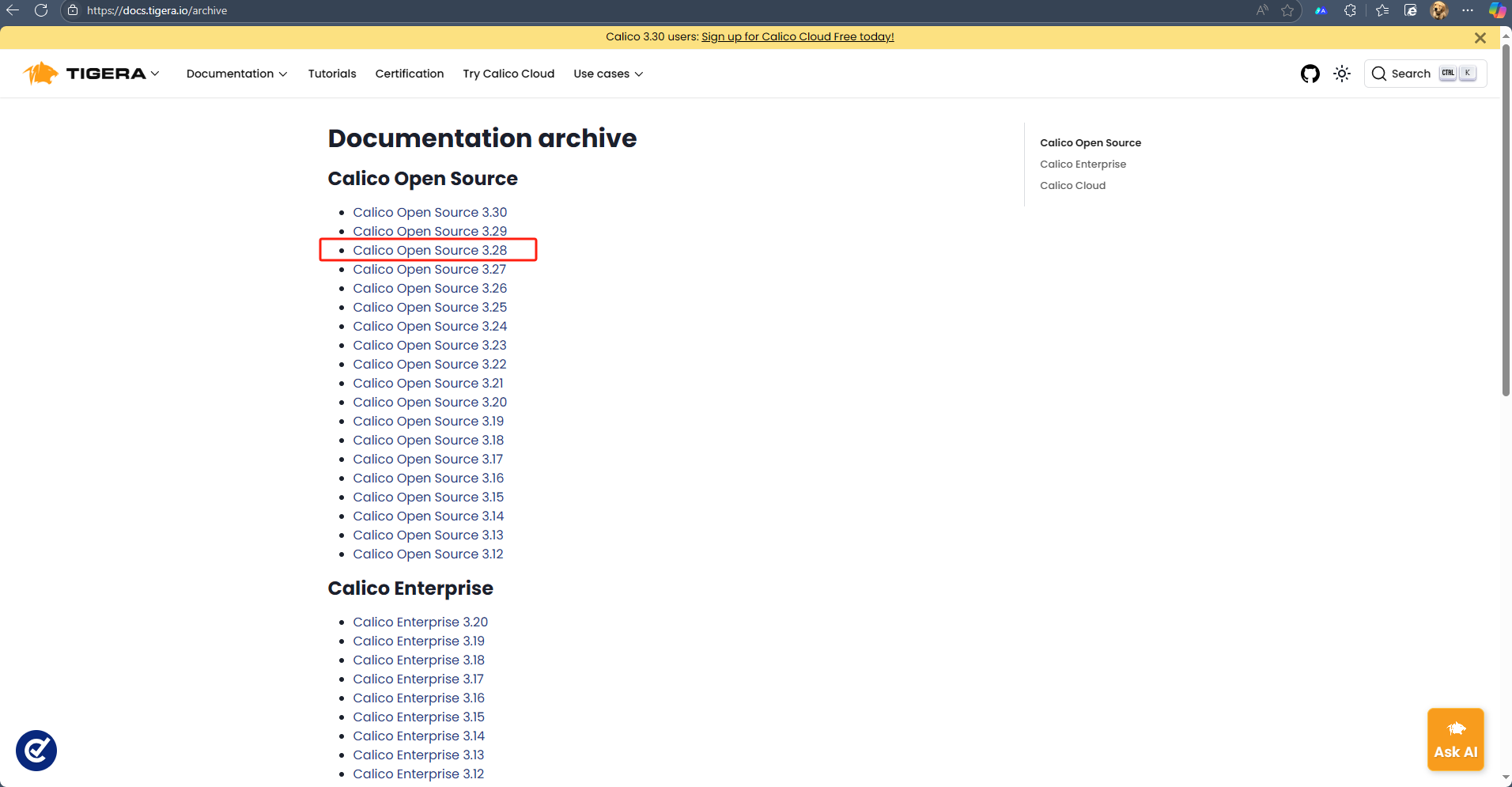

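4. Deploy the Calico network plugin

Official site: About Calico | Calico Documentation

Use version 3.28:

Download the manifests but do not apply them yet: the pod network CIDR planned in this guide differs from the default in the manifests, so they must be modified first: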

curl -O -L https://raw.githubusercontent.com/projectcalico/calico/v3.28.5/manifests/tigera-operator.yaml

curl -O -L https://raw.githubusercontent.com/projectcalico/calico/v3.28.5/manifests/custom-resources.yaml

Adjust the configuration:

sed -i 's#192.168.0.0#10.244.0.0#g' custom-resources.yaml
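Before applying the manifest it is worth confirming that the pool CIDR now matches the --cluster-cidr given to kube-controller-manager (10.244.0.0/16 in this guide). The sketch below simply extracts every `cidr:` value from a YAML file. It assumes the value sits on its own `cidr: <value>` line, which should be checked against the downloaded file; the function name `ippool_cidrs` is hypothetical.

```shell
#!/bin/sh
# ippool_cidrs FILE  (hypothetical helper)
# Prints the value of every "cidr:" key found in FILE, one per line.
ippool_cidrs() {
    sed -n 's/^[[:space:]]*-\{0,1\}[[:space:]]*cidr:[[:space:]]*//p' "$1"
}

# Usage:
#   ippool_cidrs custom-resources.yaml
```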

Create the resources from the files:

[root@master ~]# kubectl create -f tigera-operator.yaml

namespace/tigera-operator created

customresourcedefinition.apiextensions.k8s.io/bgpconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgpfilters.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/bgppeers.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/blockaffinities.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/caliconodestatuses.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/clusterinformations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/felixconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/globalnetworksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/hostendpoints.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamblocks.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamconfigs.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipamhandles.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ippools.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/ipreservations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/kubecontrollersconfigurations.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networkpolicies.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/networksets.crd.projectcalico.org created

customresourcedefinition.apiextensions.k8s.io/apiservers.operator.tigera.io created

customresourcedefinition.apiextensions.k8s.io/imagesets.operator.tigera.io created

customresourcedefinition.apiextensions.k8s.io/installations.operator.tigera.io created

customresourcedefinition.apiextensions.k8s.io/tigerastatuses.operator.tigera.io created

serviceaccount/tigera-operator created

clusterrole.rbac.authorization.k8s.io/tigera-operator created

clusterrolebinding.rbac.authorization.k8s.io/tigera-operator created

deployment.apps/tigera-operator created

Wait for the operator to finish starting, then create the next one:

[root@master ~]# kubectl create -f custom-resources.yaml

installation.operator.tigera.io/default created

apiserver.operator.tigera.io/default created

Watch the progress:

watch kubectl get pod -A

Next, deploy CoreDNS:

cat > coredns.yaml << "EOF"
apiVersion: v1
kind: ServiceAccount
metadata:
  name: coredns
  namespace: kube-system
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRole
metadata:
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
rules:
  - apiGroups:
    - ""
    resources:
    - endpoints
    - services
    - pods
    - namespaces
    verbs:
    - list
    - watch
  - apiGroups:
    - discovery.k8s.io
    resources:
    - endpointslices
    verbs:
    - list
    - watch
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  annotations:
    rbac.authorization.kubernetes.io/autoupdate: "true"
  labels:
    kubernetes.io/bootstrapping: rbac-defaults
  name: system:coredns
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: system:coredns
subjects:
- kind: ServiceAccount
  name: coredns
  namespace: kube-system
---
apiVersion: v1
kind: ConfigMap
metadata:
  name: coredns
  namespace: kube-system
data:
  Corefile: |
    .:53 {
        errors
        health {
          lameduck 5s
        }
        ready
        kubernetes cluster.local in-addr.arpa ip6.arpa {
          fallthrough in-addr.arpa ip6.arpa
        }
        prometheus :9153
        forward . /etc/resolv.conf {
          max_concurrent 1000
        }
        cache 30
        loop
        reload
        loadbalance
    }
---
apiVersion: apps/v1
kind: Deployment
metadata:
  name: coredns
  namespace: kube-system
  labels:
    k8s-app: kube-dns
    kubernetes.io/name: "CoreDNS"
spec:
  # replicas: not specified here:
  # 1. Default is 1.
  # 2. Will be tuned in real time if DNS horizontal auto-scaling is turned on.
  strategy:
    type: RollingUpdate
    rollingUpdate:
      maxUnavailable: 1
  selector:
    matchLabels:
      k8s-app: kube-dns
  template:
    metadata:
      labels:
        k8s-app: kube-dns
    spec:
      priorityClassName: system-cluster-critical
      serviceAccountName: coredns
      tolerations:
        - key: "CriticalAddonsOnly"
          operator: "Exists"
      nodeSelector:
        kubernetes.io/os: linux
      affinity:
        podAntiAffinity:
          preferredDuringSchedulingIgnoredDuringExecution:
          - weight: 100
            podAffinityTerm:
              labelSelector:
                matchExpressions:
                  - key: k8s-app
                    operator: In
                    values: ["kube-dns"]
              topologyKey: kubernetes.io/hostname
      containers:
      - name: coredns
        image: coredns/coredns:1.10.1
        imagePullPolicy: IfNotPresent
        resources:
          limits:
            memory: 170Mi
          requests:
            cpu: 100m
            memory: 70Mi
        args: [ "-conf", "/etc/coredns/Corefile" ]
        volumeMounts:
        - name: config-volume
          mountPath: /etc/coredns
          readOnly: true
        ports:
        - containerPort: 53
          name: dns
          protocol: UDP
        - containerPort: 53
          name: dns-tcp
          protocol: TCP
        - containerPort: 9153
          name: metrics
          protocol: TCP
        securityContext:
          allowPrivilegeEscalation: false
          capabilities:
            add:
            - NET_BIND_SERVICE
            drop:
            - all
          readOnlyRootFilesystem: true
        livenessProbe:
          httpGet:
            path: /health
            port: 8080
            scheme: HTTP
          initialDelaySeconds: 60
          timeoutSeconds: 5
          successThreshold: 1
          failureThreshold: 5
        readinessProbe:
          httpGet:
            path: /ready
            port: 8181
            scheme: HTTP
      dnsPolicy: Default
      volumes:
        - name: config-volume
          configMap:
            name: coredns
            items:
            - key: Corefile
              path: Corefile
---
apiVersion: v1
kind: Service
metadata:
  name: kube-dns
  namespace: kube-system
  annotations:
    prometheus.io/port: "9153"
    prometheus.io/scrape: "true"
  labels:
    k8s-app: kube-dns
    kubernetes.io/cluster-service: "true"
    kubernetes.io/name: "CoreDNS"
spec:
  selector:
    k8s-app: kube-dns
  clusterIP: 10.96.0.2
  ports:
  - name: dns
    port: 53
    protocol: UDP
  - name: dns-tcp
    port: 53
    protocol: TCP
  - name: metrics
    port: 9153
    protocol: TCP
EOF

Create the resources:

[root@master ~]# kubectl apply -f coredns.yaml

serviceaccount/coredns created

clusterrole.rbac.authorization.k8s.io/system:coredns created

clusterrolebinding.rbac.authorization.k8s.io/system:coredns created

configmap/coredns created

deployment.apps/coredns created

service/kube-dns created

Test:

dig -t a www.baidu.com @10.96.0.2

The cluster deployment is now complete.

5. Deploy the Dashboard

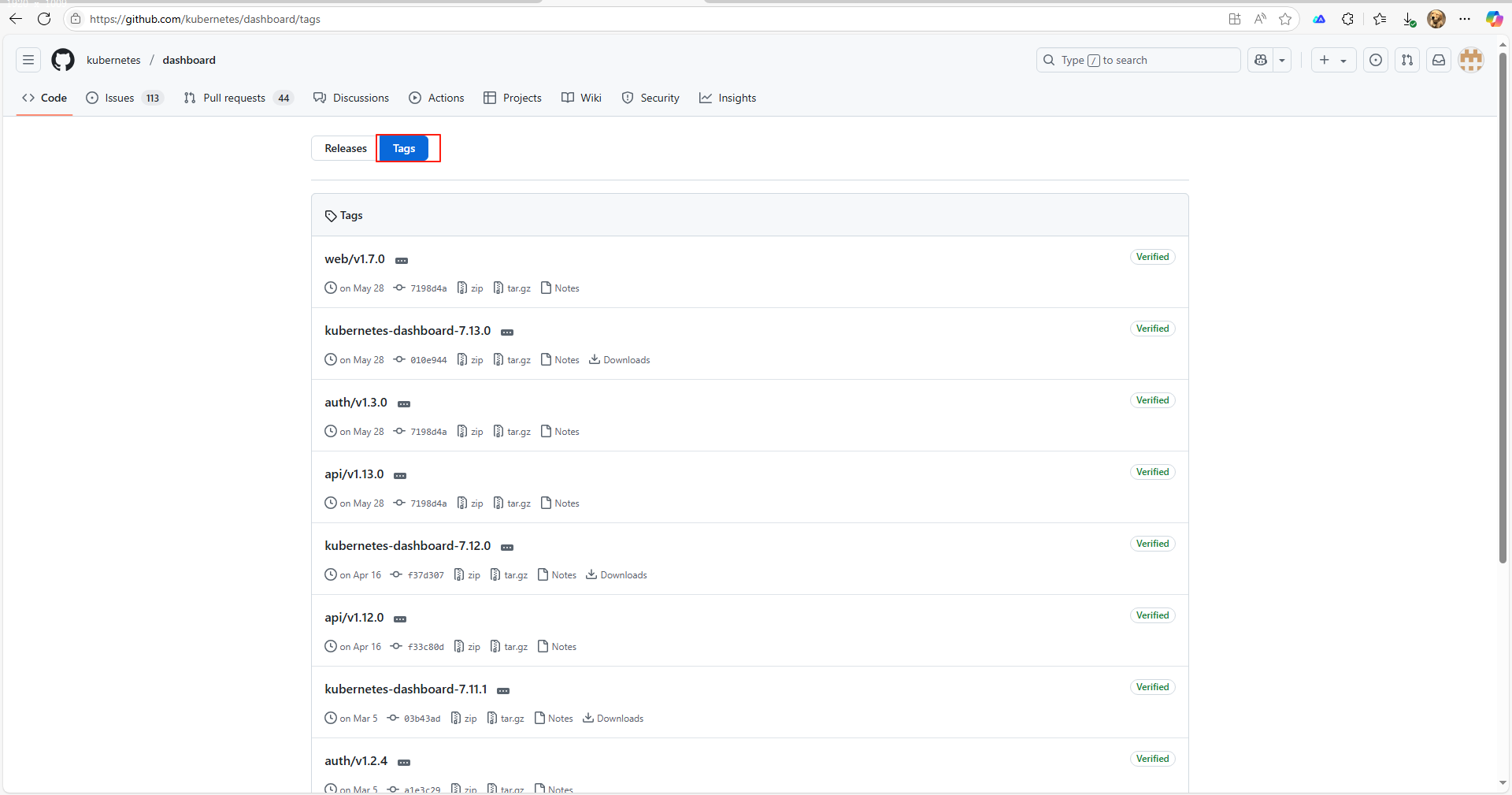

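Download: Releases · kubernetes/dashboard

Choose a version:

The release notes list the Kubernetes versions each release is compatible with:

This cluster runs 1.28, so this guide uses Release v2.7.0 · kubernetes/dashboard

The images could not be pulled here, so I downloaded them in advance and uploaded them to the nodes: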

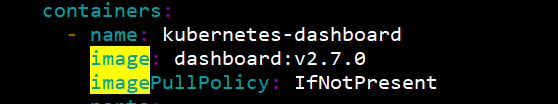

Download the YAML file and modify the configuration:

wget https://raw.githubusercontent.com/kubernetes/dashboard/v2.7.0/aio/deploy/recommended.yaml

mv recommended.yaml dashboard.yaml

vi dashboard.yaml

spec:
  type: NodePort
  ports:
    - port: 443
      targetPort: 8443
      nodePort: 32002
...
          image: dashboard:v2.7.0
          imagePullPolicy: IfNotPresent
...
          image: metrics-scraper:v1.0.8
          imagePullPolicy: IfNotPresent

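Change the Service type to NodePort. The default is ClusterIP, which is reachable only from inside the cluster, whereas NodePort exposes the service on node IP + port.

Then set the image pull policy to prefer local images; otherwise the images cannot be pulled: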

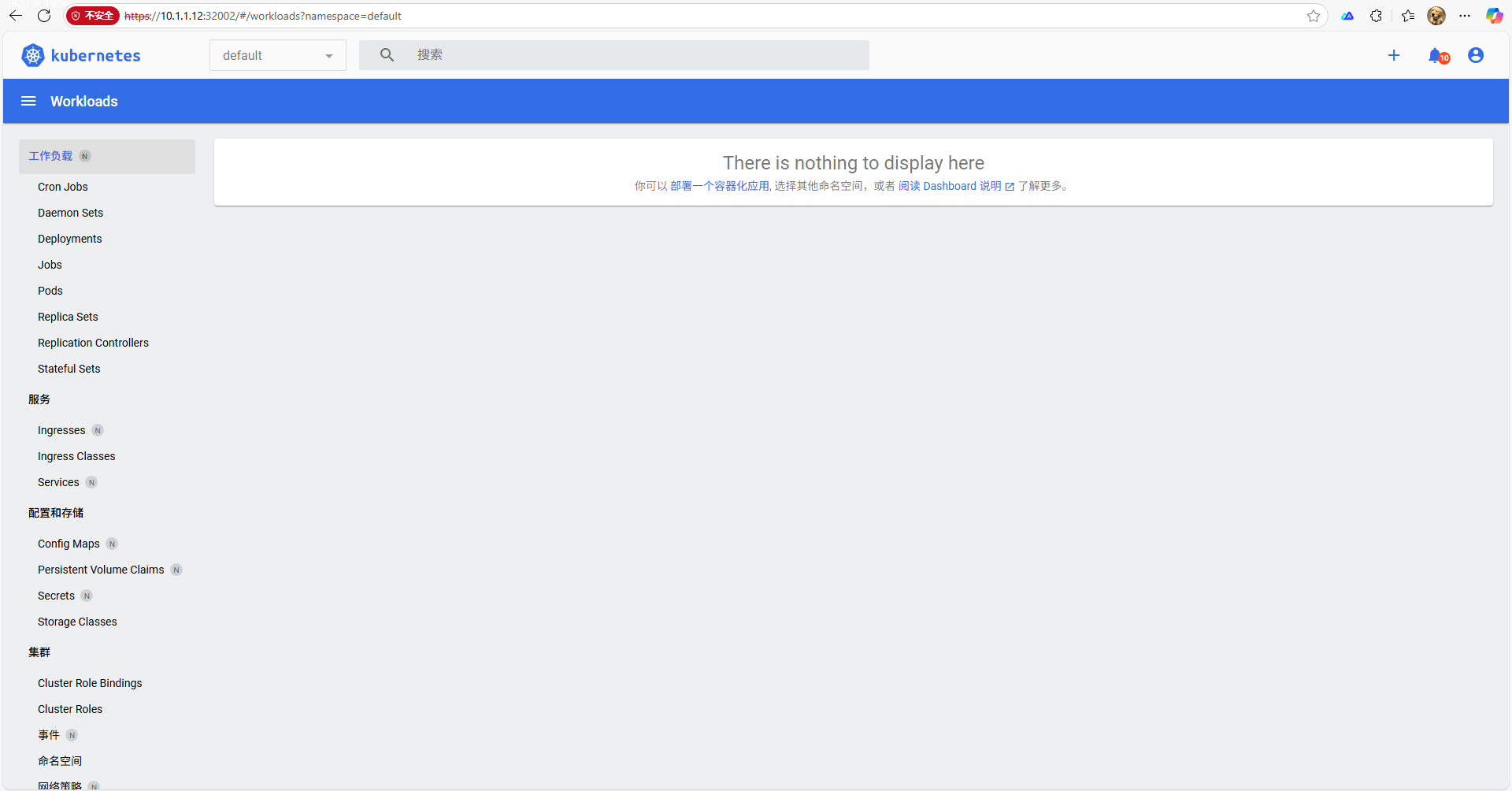

Create the resources from the configuration file:

kubectl apply -f dashboard.yaml

Access test: https://<node_ip>:32002

Generate a token for accessing the Kubernetes Dashboard:

vi dashboard-admin.yaml

---
apiVersion: v1
kind: ServiceAccount
metadata:
  name: admin-user
  namespace: kubernetes-dashboard
---
apiVersion: rbac.authorization.k8s.io/v1
kind: ClusterRoleBinding
metadata:
  name: admin-user-binding
roleRef:
  apiGroup: rbac.authorization.k8s.io
  kind: ClusterRole
  name: cluster-admin
subjects:
- kind: ServiceAccount
  name: admin-user
  namespace: kubernetes-dashboard

Create the service account and role binding:

kubectl create -f dashboard-admin.yaml

Generate the token:

kubectl -n kubernetes-dashboard create token admin-user

Paste the token into the web page to log in.
