批量下載網(wǎng)絡(luò)圖片或視頻

可復(fù)用可組合。

概述

在互聯(lián)網(wǎng)上瀏覽時,遇到好看的圖片或視頻,總想下載保存起來。在“B站視頻批量下載工具 ”一文中講解了通過API json 來獲取資源地址。本文講述使用Python解析網(wǎng)頁文件來實現(xiàn)批量下載網(wǎng)絡(luò)圖片或視頻的方法。

批量下載網(wǎng)絡(luò)圖片或視頻,主要有四步:

- 生成多個 URL:可能需要從多個URL獲取圖片或視頻資源;

- 抓取網(wǎng)頁內(nèi)容:將網(wǎng)頁內(nèi)容下載下來,為析取圖片視頻資源作準(zhǔn)備;

- 解析網(wǎng)頁內(nèi)容:從網(wǎng)頁內(nèi)容里析取圖片視頻資源,通常是一系列鏈接地址;

- 下載圖片和視頻:調(diào)用下載工具下載連接地址里的圖片或視頻資源。

我們對程序的目標(biāo)依然是:

- 可復(fù)用:盡量寫一次,多處使用。

- 可組合:能夠組合出不同功能的工具。

要做到可復(fù)用,就需要盡量把通用的部分抽離出來,做成選項; 要做到可組合,就需要把功能合理地拆分成多個子任務(wù),用不同程序去實現(xiàn)這些子任務(wù)。

?

生成多個URL

多個URL往往是在一個基礎(chǔ)URL經(jīng)過某種變形得到。可以用含有占位符 :p 的URL模板 t 和參數(shù)組成。

gu.py

from typing import List

from urllib.parse import urlparse

import argparse

def generate_urls(base_url: str, m: int, n: int) -> List[str]:

"""

Generate a series of URLs based on a base URL and transformation rules.

Args:

base_url (str): The base URL to transform

m (int): Start number

n (int): End number (inclusive)

Returns:

List[str]: List of generated URLs

Examples:

>>> generate_urls("https://example.com/xxx:pyyy", 1, 3)

['https://example.com/xxx1yyy', 'https://example.com/xxx2yyy', 'https://example.com/xxx3yyy']

>>> generate_urls("https://example.com/page_:p.php", 1, 3)

['https://example.com/page_1.php', 'https://example.com/page_2.php', 'https://example.com/page_3.php']

"""

if not base_url or not isinstance(m, int) or not isinstance(n, int):

raise ValueError("Invalid input parameters")

if m > n:

raise ValueError("Start number (m) must be less than or equal to end number (n)")

# Parse the URL to validate it

parsed_url = urlparse(base_url)

if not parsed_url.scheme and not base_url.startswith('//'):

raise ValueError("Invalid URL format")

# Handle the $p pattern

if ":p" in base_url:

parts = base_url.split(":p")

if len(parts) != 2:

raise ValueError("Invalid URL pattern: should contain exactly one $p")

prefix, suffix = parts

return [f"{prefix}{i}{suffix}" for i in range(m, n + 1)]

raise ValueError("URL pattern not supported. Use $p as placeholder for numbers")

def parse_range(range_str: str) -> tuple[int, int]:

"""

Parse a range string like "1-3" into start and end numbers.

Args:

range_str (str): Range string (e.g., "1-3")

Returns:

tuple[int, int]: Start and end numbers

"""

try:

start, end = map(int, range_str.split("-"))

return start, end

except ValueError:

raise ValueError("Invalid range format. Use 'start-end' (e.g., '1-3')")

def parse_list(list_str: str) -> List[str]:

"""

Parse a comma-separated string into a list of values.

Args:

list_str (str): Comma-separated string (e.g., "1,2,3")

Returns:

List[str]: List of values

"""

return [item.strip() for item in list_str.split(",")]

def main():

parser = argparse.ArgumentParser(description='Generate a series of URLs based on a pattern')

parser.add_argument('-u', '--url', required=True, help='Base URL with {p} as placeholder')

# Add mutually exclusive group for range or list

group = parser.add_mutually_exclusive_group(required=True)

group.add_argument('-r', '--range', help='Range of numbers (e.g., "1-3")')

group.add_argument('-l', '--list', help='Comma-separated list of values (e.g., "1,2,3")')

args = parser.parse_args()

try:

if args.range:

start, end = parse_range(args.range)

urls = generate_urls(args.url, start, end)

elif args.list:

values = parse_list(args.list)

template = args.url.replace(":p", "{}")

urls = [template.format(value) for value in values]

for url in urls:

print(url)

except ValueError as e:

print(f"Error: {e}")

exit(1)

if __name__ == "__main__":

main()

使用方法:

gu -u "https://www.yituyu.com/gallery/:p/index.html" -l "10234,10140"

就可以生成 https://www.yituyu.com/gallery/10234/index.html, https://www.yituyu.com/gallery/10140/index.html

或者使用

gu -u "https://www.yituyu.com/gallery/:p/index.html" -r 1-3

?

抓取網(wǎng)頁內(nèi)容

web.py

這里使用了 requests 和 chromedriver 。靜態(tài)網(wǎng)頁可以直接用 requests,動態(tài)網(wǎng)頁需要用 chromedriver 模擬打開網(wǎng)頁。有些網(wǎng)頁還需要滾動到最下面加載資源。

import requests

import time

from pytools.common.common import catchExc

from pytools.con.multitasks import IoTaskThreadPool

from selenium import webdriver

from selenium.webdriver.chrome.options import Options

from selenium.webdriver.common.by import By

delay_for_http_req = 0.5 # 500ms

class HTMLGrasper(object):

def __init__(self, conf):

'''

抓取 HTML 網(wǎng)頁內(nèi)容時的配置項

_async: 是否異步加載網(wǎng)頁。 _async = 1 當(dāng)網(wǎng)頁內(nèi)容是動態(tài)生成時,異步加載網(wǎng)頁;

target_id_when_async: 當(dāng) _async = 1 指定。

由于此時會加載到很多噪音內(nèi)容,需要指定 ID 來精確獲取所需的內(nèi)容部分

sleep_when_async: 當(dāng) _async = 1 指定。

異步加載網(wǎng)頁時需要等待的秒數(shù)

'''

self._async = conf.get('async', 0)

self.target_id_when_async = conf.get('targetIdWhenAsync', '')

self.sleep_when_async = conf.get('sleepWhenAsync', 10)

def batch_grab_html_contents(self, urls):

'''

batch get the html contents of urls

'''

grab_html_pool = IoTaskThreadPool(20)

return grab_html_pool.exec(self.get_html_content, urls)

def get_html_content(self, url):

if self._async == 1:

html_content = self.get_html_content_async(url)

if html_content is not None and html_content != '':

html = '<html><head></head><body>' + html_content + '</body></html>'

return html

return self.get_html_content_from_url(url)

def get_html_content_async(self, url):

'''

get html content from dynamic loaed html url

'''

chrome_options = Options()

chrome_options.add_argument('--headless')

chrome_options.add_argument('--disable-gpu')

driver = webdriver.Chrome(chrome_options=chrome_options)

driver.get(url)

time.sleep(self.sleep_when_async)

# 模擬滾動到底部多次以確保加載所有內(nèi)容

last_height = driver.execute_script("return document.body.scrollHeight")

for _ in range(3): # 最多滾動3次

# 滾動到底部

driver.execute_script("window.scrollTo(0, document.body.scrollHeight);")

# 等待加載

time.sleep(2)

# 計算新的滾動高度并與上一次比較

new_height = driver.execute_script("return document.body.scrollHeight")

if new_height == last_height:

break

last_height = new_height

try:

elem = driver.find_element_by_id(self.target_id_when_async)

except:

elem = driver.find_element(By.XPATH, '/html/body')

return elem.get_attribute('innerHTML')

def get_html_content_from_url(self, url):

'''

get html content from html url

'''

r = requests.get(url)

status = r.status_code

if status != 200:

return ''

return r.text

'''

# 利用property裝飾器將獲取name方法轉(zhuǎn)換為獲取對象的屬性

@property

def async(self):

return self._async

# 利用property裝飾器將設(shè)置name方法轉(zhuǎn)換為獲取對象的屬性

@async.setter

def async(self,async):

self._async = async

'''

?

析取圖片或視頻資源

這里使用了 BeautifulSoup. 網(wǎng)頁文件通常是 HTML。因此,需要寫一個程序,從 HTML 內(nèi)容中解析出圖片或資源地址。現(xiàn)代web頁面通常采用 DIV+CSS+JS 框架。 圖片或視頻資源,通常是 a, img, video 之類的標(biāo)簽,或者 class 或 id 指定的元素 。再定位到元素的屬性,比如 href, src 等。此處需要一點 HTML 和 CSS 知識,還有 jQuery 定位元素的知識。

res.py

#!/usr/bin/python3

#_*_encoding:utf-8_*_

import re

import sys

import json

import argparse

from bs4 import BeautifulSoup

from pytools.net.web import HTMLGrasper

save_res_links_file = '/Users/qinshu/joy/reslinks.txt'

server_domain = ''

def parse_args():

description = '''This program is used to batch fetch url resources from specified urls.

eg. python3 res.py -u http://xxx.html -r 'img=jpg,png;class=resLink;id=xyz'

will search resource links from network urls http://xxx.html by specified rules

img = jpg or png OR class = resLink OR id = xyz [ multiple rules ]

python3 tools/res.py -u 'http://tu.heiguang.com/works/show_167480.html' -r 'img=jpg!c'

for <img src="xxx.jpg!c"/>

'''

parser = argparse.ArgumentParser(description=description)

parser.add_argument('-u','--url', nargs='+', help='At least one html urls are required', required=True)

parser.add_argument('-r','--rulepath', nargs=1, help='rules to search resources. if not given, search a hrefs or img resources in given urls', required=False)

parser.add_argument('-o','--output', nargs=1, help='Specify the output file to save the links', required=False)

parser.add_argument('-a','--attribute', nargs=1, help='Extract specified attribute values from matched elements', required=False)

args = parser.parse_args()

init_urls = args.url

rule_path = args.rulepath

output_file = args.output[0] if args.output else save_res_links_file

return (init_urls, rule_path, output_file, args.attribute[0] if hasattr(args, 'attribute') and args.attribute else None)

def get_abs_link(server_domain, link):

try:

link_content = link

if link_content.startswith('//'):

link_content = 'https:' + link_content

if link_content.startswith('/'):

link_content = server_domain + link_content

return link_content

except:

return ''

def batch_get_res_true_link(res_links):

return filter(lambda x: x != '', res_links)

res_tags = set(['img', 'video', 'a', 'div'])

def find_wanted_links(html_content, rule, attribute):

'''

find html links or res links from html by rule.

sub rules such as:

(1) a link with id=[value1,value2,...]

(2) a link with class=[value1,value2,...]

(3) res with src=xxx.jpg|png|mp4|...

a rule is map containing sub rule such as:

{ 'id': [id1, id2, ..., idn] } or

{ 'class': [c1, c2, ..., cn] } or

{ 'img': ['jpg', 'png', ... ]} or

{ 'video': ['mp4', ...]}

'''

#print("html===\n"+html_content+"\n===End")

#print("rule===\n"+str(rule)+"\n===End")

soup = BeautifulSoup(html_content, "lxml")

all_links = []

attribute_links = []

for (key, values) in rule.items():

if key == 'id':

for id in values:

link_soups = soup.find_all('a', id=id)

elif key == 'class':

for cls in values:

link_soups = find_link_soups(soup, ['a', 'img', 'div'], cls)

elif key in res_tags:

link_soups = []

for res_suffix in values:

if res_suffix != "":

link_soups.extend(soup.find_all(key, src=re.compile(res_suffix)))

else:

link_soups = soup.find_all(key)

attribute_links.extend([link.get(attribute) for link in link_soups if link.get(attribute)])

all_links.extend(attribute_links)

return all_links

def find_link_soups(soup, tags, cls):

all_link_soups = []

if len(tags) == 0:

all_link_soups.extend(soup.find_all("a", class_=cls))

else:

for tag in tags:

if cls != "":

link_soups = soup.find_all(tag, class_=cls)

else:

link_soups = soup.find_all(tag)

all_link_soups.extend(link_soups)

return all_link_soups

def validate(link):

valid_suffix = ['png', 'jpg', 'jpeg', 'mp4']

for suf in valid_suffix:

if link.endswith(suf):

return True

if link == '':

return False

if link.endswith('html'):

return False

if 'javascript' in link:

return False

return True

def batch_get_links(urls, rules, output_file, attribute=None):

conf = {"async":1, "target_id_when_async": "page-fav", "sleep_when_async": 10}

grasper = HTMLGrasper(conf)

html_content_list = grasper.batch_grab_html_contents(urls)

all_links = []

for html_content in html_content_list:

for rule in rules:

links = find_wanted_links(html_content, rule, attribute)

all_links.extend(links)

with open(output_file, 'w') as f:

for link in all_links:

print(link)

f.write(link + "\n")

def parse_rules_param(rules_param):

'''

parse rules params to rules json

eg. img=jpg,png;class=resLink;id=xyz to

[{"img":["jpg","png"], "class":["resLink"], "id":["xyz"]}]

'''

default_rules = [{'img': ['jpg','png','jpeg']},{"class":"*"}]

if rules_param:

try:

rules = []

rules_str_arr = rules_param[0].split(";")

for rule_str in rules_str_arr:

rule_arr = rule_str.split("=")

key = rule_arr[0]

value = rule_arr[1].split(",")

rules.append({key: value})

return rules

except ValueError as e:

print('Param Error: invalid rulepath %s %s' % (rule_path_json, e))

sys.exit(1)

return default_rules

def parse_server_domain(url):

parts = url.split('/', 3)

return parts[0] + '//' + parts[2]

def test_batch_get_links():

urls = ['http://dp.pconline.com.cn/list/all_t145.html']

rules = [{"img":["jpg"], "video":["mp4"]}]

batch_get_links(urls, rules, save_res_links_file)

if __name__ == '__main__':

#test_batch_get_links()

(init_urls, rules_param, output_file, attribute) = parse_args()

if not output_file:

output_file = save_res_links_file

# print('init urls: %s' % "\n".join(init_urls))

rule_path = parse_rules_param(rules_param)

server_domain = parse_server_domain(init_urls[0])

# print('rule_path: %s\n server_domain:%s' % (rule_path, server_domain))

batch_get_links(init_urls, rule_path, output_file, attribute)

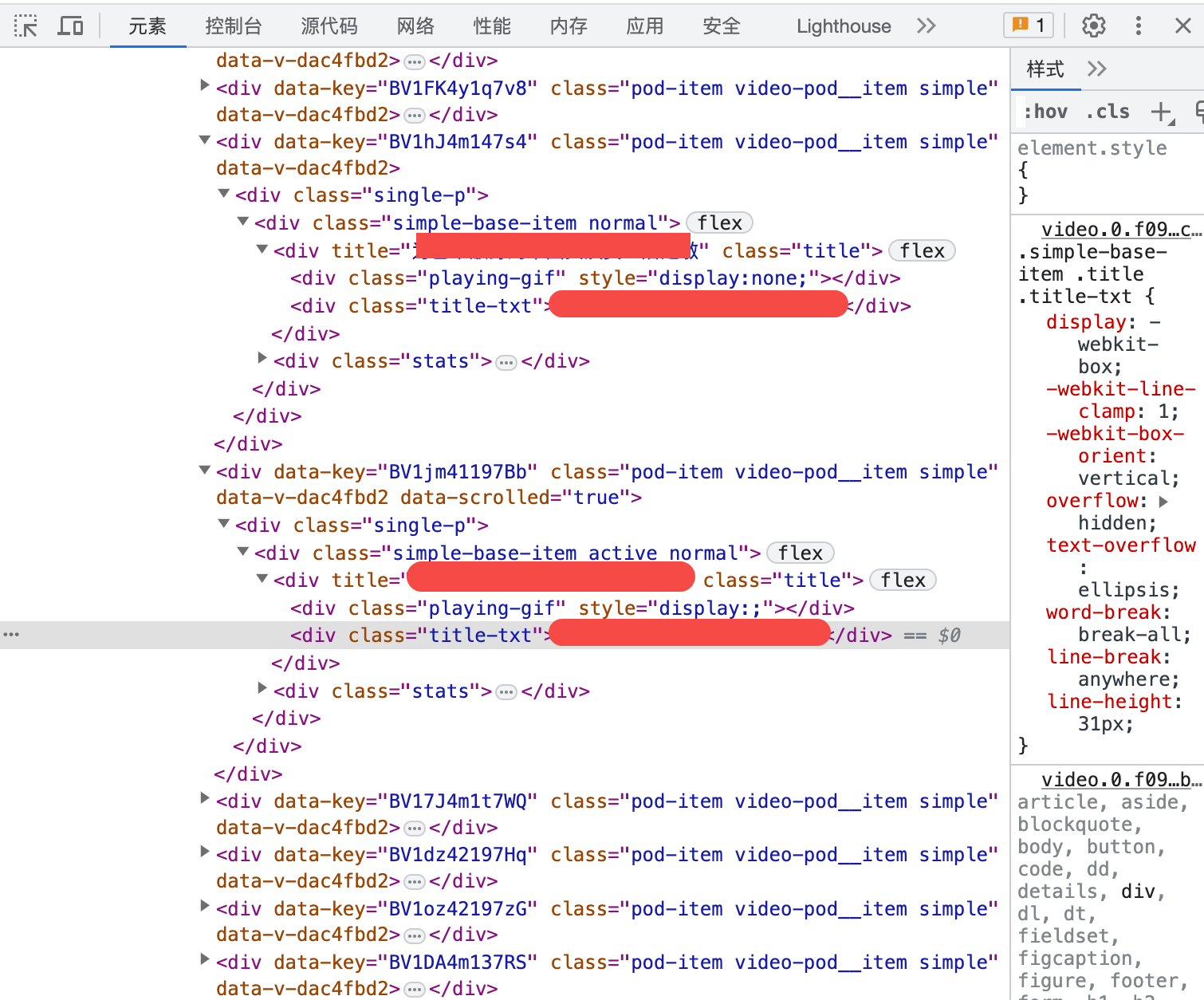

怎么找到對應(yīng)的資源地址?右鍵-控制臺,鼠標(biāo)點擊最左邊那個箭頭指向的小方框,然后在點擊網(wǎng)頁元素,就會定位到網(wǎng)頁元素,下面圖片資源地址就是 img 的 data-src 屬性 或者 src 屬性。 不過這里 src 屬性是需要滾動到最后才能展示所有的,但 data-src 是直接加載的。如果要省時,就可以用 data-src 屬性。好處是快,不足是不通用。

將圖片資源URL下載到 ~/Downloads/links5.txt,整個命令是:

gu -u "https://www.yituyu.com/gallery/:p/index.html" -l "9174,9170" | xargs -I {} python3 ~/tools/pytools/pytools/tools/res.py -u {} -r "class=lazy" -a "data-src" -o ~/Downloads/links5.txt

視頻下載

如果是 視頻,比如 B 站系列視頻

就是 :

res -u 'https://www.bilibili.com/video/BV1jm41197Bb?spm_id_from=333.788.player.switch&vd_source=2a7209c6b9f3816adcc27d449605fc8a' -r 'class=video-pod__item' -a 'data-key' -o ~/Downloads/bvids.txt && fill -t "https://www.bilibili.com/video/:p" -f ~/Downloads/bvids.txt > ~/Downloads/video.txt && dw -f ~/Downloads/video.txt

這里由于獲取的是 bvid,要形成網(wǎng)址,就需要一個填充器,構(gòu)建最終資源URL。

fill.py

import argparse

def fill(template, file_path):

"""

Replace :p in the template with each line from the file and print the result.

fill -t "http://https://www.bilibili.com/video/:p" -f ~/joy/reslinks.txt

"""

try:

with open(file_path, 'r') as file:

for line in file:

line = line.strip() # Remove leading/trailing whitespace

if line: # Skip empty lines

result = template.replace(':p', line)

print(result)

except FileNotFoundError:

print(f"Error: File '{file_path}' not found.")

except Exception as e:

print(f"An error occurred: {e}")

def main():

parser = argparse.ArgumentParser(description='Replace :p in a template with lines from a file.')

parser.add_argument('-t', '--template', required=True, help='The string template containing :p')

parser.add_argument('-f', '--file', required=True, help='The file containing lines to replace :p')

args = parser.parse_args()

fill(args.template, args.file)

if __name__ == '__main__':

main()

下載圖片或視頻

這里采用的是調(diào)用封裝工具命令 you-get 的 y 命令。

dw.py

#!/usr/bin/env python3

import subprocess

import shlex

from pathlib import Path

from typing import Optional, Union, List

import time

import requests

import argparse

default_save_path = "/Users/qinshu/Downloads"

def download(url: str, output_dir: Union[str, Path]) -> Optional[Path]:

output_dir = Path(output_dir)

if url.endswith(".jpg") or url.endswith(".png"):

download_image(url, output_dir / Path(url).name)

else:

download_video(url, output_dir)

return output_dir / Path(url).name

return None

def download_image(url: str, output_file: Union[str, Path]) -> None:

try:

# 發(fā)送 HTTP GET 請求獲取圖片

response = requests.get(url, stream=True)

response.raise_for_status() # 檢查請求是否成功

# 以二進(jìn)制寫入模式保存圖片

with open(output_file, 'wb') as f:

for chunk in response.iter_content(1024):

f.write(chunk)

print(f"圖片已保存至: {output_file}")

except requests.exceptions.RequestException as e:

print(f"下載失敗: {e}")

except Exception as e:

print(f"發(fā)生錯誤: {e}")

def download_video(

video_url: str,

output_dir: Union[str, Path] = Path.cwd(),

timeout: int = 3600, # 1小時超時

retries: int = 1,

verbose: bool = True

) -> Optional[Path]:

"""

使用 y 命令下載視頻

參數(shù):

video_url: 視頻URL (e.g. "https://www.bilibili.com/video/BV1xx411x7xx")

output_dir: 輸出目錄 (默認(rèn)當(dāng)前目錄)

timeout: 超時時間(秒)

retries: 重試次數(shù)

verbose: 顯示下載進(jìn)度

返回:

成功時返回下載的視頻路徑,失敗返回None

"""

if video_url == "":

return None

output_dir = Path(output_dir)

output_dir.mkdir(parents=True, exist_ok=True)

cmd = f"y {shlex.quote(video_url)}"

if verbose:

print(f"開始下載: {video_url}")

print(f"保存到: {output_dir.resolve()}")

print(f"執(zhí)行命令: {cmd}")

for attempt in range(1, retries + 1):

try:

start_time = time.time()

# 使用Popen實現(xiàn)實時輸出

process = subprocess.Popen(

cmd,

shell=True,

cwd=str(output_dir),

stdout=subprocess.PIPE,

stderr=subprocess.STDOUT,

universal_newlines=True,

bufsize=1

)

# 實時打印輸出

while True:

output = process.stdout.readline()

if output == '' and process.poll() is not None:

break

if output and verbose:

print(output.strip())

# 檢查超時

if time.time() - start_time > timeout:

process.terminate()

raise subprocess.TimeoutExpired(cmd, timeout)

# 檢查返回碼

if process.returncode == 0:

if verbose:

print(f"下載成功 (嘗試 {attempt}/{retries})")

return _find_downloaded_file(output_dir, video_url)

else:

raise subprocess.CalledProcessError(process.returncode, cmd)

except (subprocess.TimeoutExpired, subprocess.CalledProcessError) as e:

if attempt < retries:

wait_time = min(attempt * 10, 60) # 指數(shù)退避

if verbose:

print(f"嘗試 {attempt}/{retries} 失敗,{wait_time}秒后重試...")

print(f"錯誤: {str(e)}")

time.sleep(wait_time)

else:

if verbose:

print(f"下載失敗: {str(e)}")

return None

def _find_downloaded_file(directory: Path, video_url: str) -> Optional[Path]:

"""嘗試自動查找下載的文件"""

# 這里可以根據(jù)實際y命令的輸出文件名模式進(jìn)行調(diào)整

# 示例:查找最近修改的視頻文件

video_files = sorted(

directory.glob("*.mp4"),

key=lambda f: f.stat().st_mtime,

reverse=True

)

return video_files[0] if video_files else None

def read_urls_from_file(file_path: Union[str, Path]) -> List[str]:

with open(file_path, 'r') as f:

return [line.strip() for line in f if line.strip()]

def main():

parser = argparse.ArgumentParser(description="下載工具:支持從URL或文件下載視頻和圖片")

parser.add_argument("-u", "--url", help="單個下載URL")

parser.add_argument("-f", "--file", help="包含多個URL的文件路徑(每行一個URL)")

parser.add_argument("-o", "--output", default=".", help="輸出目錄路徑(默認(rèn)為當(dāng)前目錄)")

args = parser.parse_args()

if not args.url and not args.file:

parser.error("必須提供 -u 或 -f 參數(shù)")

if not args.output:

output_dir = default_save_path

else:

output_dir = Path(args.output)

output_dir.mkdir(parents=True, exist_ok=True)

urls = []

if args.url:

urls.append(args.url)

if args.file:

urls.extend(read_urls_from_file(args.file))

for url in urls:

print(f"處理URL: {url}")

result = download(url, output_dir)

if result:

print(f"下載完成: {result}")

else:

print(f"下載失敗: {url}")

if __name__ == "__main__":

main()

y

link=$1

you-get $link --cookies "/Users/qinshu/privateqin/cookies.txt" -f -o /Users/qinshu/Downloads

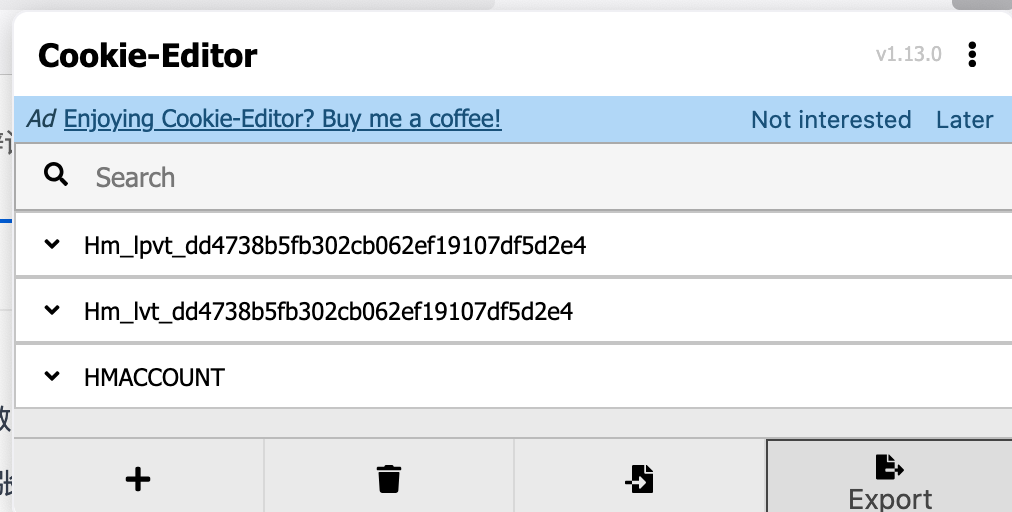

cookies.txt 可以通過 firefox cookieEdit 插件來完成。

注冊工具

要想運行工具,就需要 python /path/to/python_file.py ,每次寫全路徑挺麻煩的。可以寫一個shell 腳本,將寫的 python 工具注冊到 ~/.zshrc ,然后每次 source ~/.zshrc 即可。

#!/bin/bash

# Get the absolute path of the tools directory

TOOLS_DIR="/Users/qinshu/tools/pytools/pytools/tools"

# Add a comment to mark the beginning of our tools section

echo -e "\n# === Python Tools Aliases ===" >> ~/.zshrc

# Loop through all Python files in the tools directory

for file in "$TOOLS_DIR"/*.py; do

if [ -f "$file" ]; then

# Get just the filename without extension

filename=$(basename "$file" .py)

# Skip .DS_Store and any other hidden files

if [[ $filename != .* ]]; then

# Create the alias command

alias_cmd="alias $filename=\"python3 $file\""

# Check if this alias already exists in .zshrc

if ! grep -q "alias $filename=" ~/.zshrc; then

echo "$alias_cmd" >> ~/.zshrc

echo "Added alias for: $filename"

else

echo "Alias already exists for: $filename"

fi

fi

fi

done

echo "All Python tools have been registered in ~/.zshrc"

echo "Please run 'source ~/.zshrc' to apply the changes"

這里實際上就是生成了一系列 alias:

alias gu="python3 /Users/qinshu/tools/pytools/pytools/tools/gu.py"

alias res="python3 /Users/qinshu/tools/pytools/pytools/tools/res.py"

alias dw="python3 /Users/qinshu/tools/pytools/pytools/tools/dw.py"

這樣就可以直接用

gu -u 'https://xxx' -l 1-3

從該網(wǎng)站批量下載圖片的整個命令合起來是:

gu -u "https://www.yituyu.com/gallery/:p/index.html" -l "9174,9170" | xargs -I {} python3 ~/tools/pytools/pytools/tools/res.py -u {} -r "class=lazy" -a "data-src" -o ~/Downloads/links5.txt && dw -f -o ~/Downloads/links5.txt

雖然繁瑣了一點,但是勝在通用。

?

小結(jié)

本文講解了批量下載網(wǎng)絡(luò)圖片或視頻的方法,包括四個主要步驟:生成多個URL、抓取網(wǎng)頁內(nèi)容、析取資源地址、下載資源。每個步驟既獨立又承上啟下,因此做到了可組合。要做到通用,需要掌握一些基本編程知識,尤其是一些表達(dá)式語法,比如jsonpath、HTML/CSS標(biāo)簽語法、jQuery定位元素語法、正則表達(dá)式等。編程是表達(dá),而語言是核心。當(dāng)你理解其中原理時,掌握更多表達(dá)式語法,就能獲得更強的能力,而不僅僅局限于 GUI。GUI只是軟件能力的一個子集而已。

本文所有程序幾乎都是由AI生成,人工簡單調(diào)試。有了AI,編寫工具更方便了。在比較熟練編寫有效提示詞之后,使用AI的進(jìn)階之路是:規(guī)劃清晰的思路,合理拆分子任務(wù),讓AI去幫你完成。

那么,程序員是不是就不再寫代碼了呢?程序員應(yīng)當(dāng)去寫解決復(fù)雜問題的代碼,而不是在無盡的CRUD里遨游。

浙公網(wǎng)安備 33010602011771號

浙公網(wǎng)安備 33010602011771號