51job Crawler

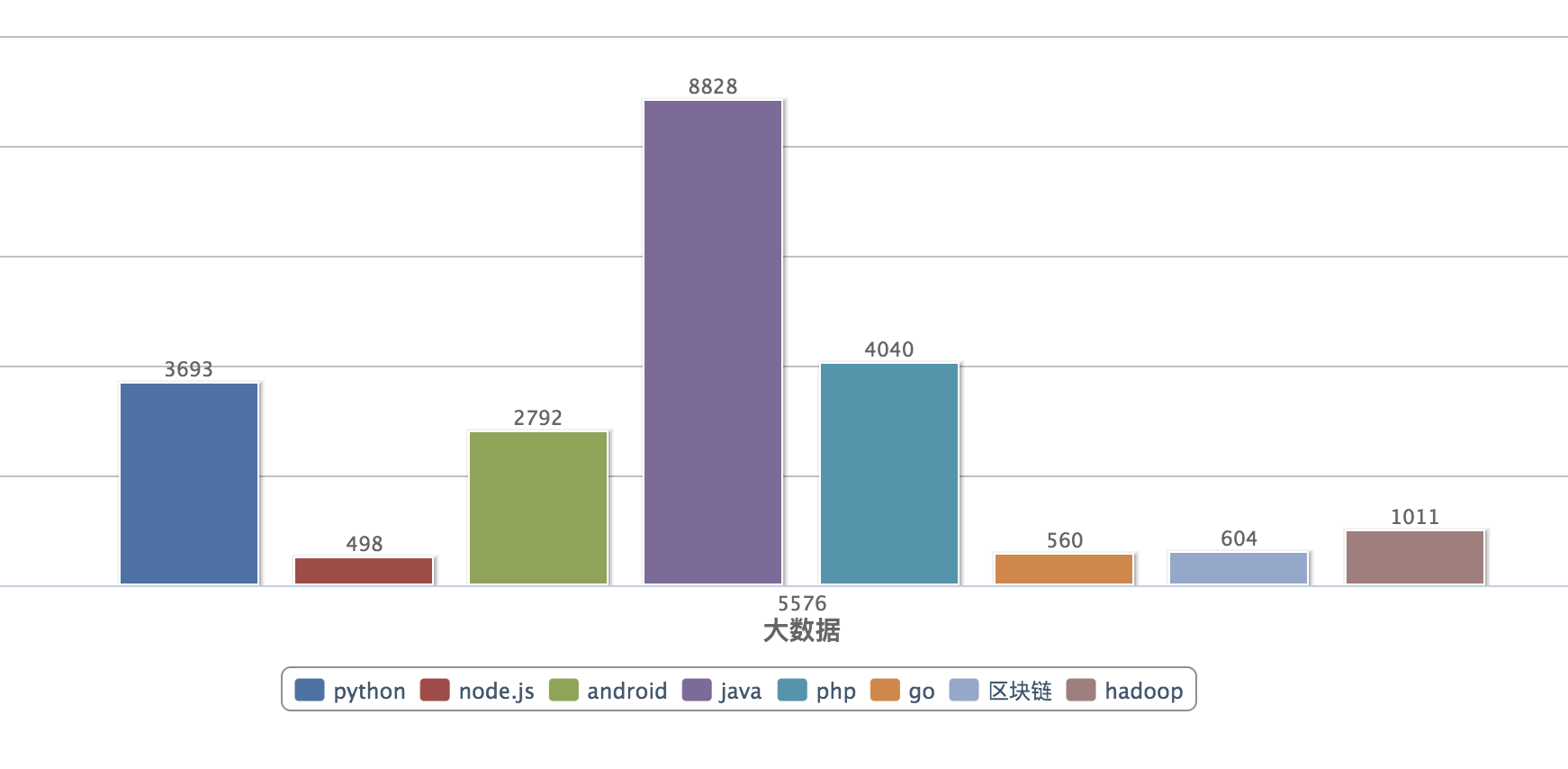

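This project uses Python 3.7 to crawl job postings from 51job (前程無憂) that match a list of keywords and saves them to MongoDB. The crawled data gives a snapshot of the current internet job market: you can count how many postings there are for each role and look at the salary distribution for each role.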
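The two statistics mentioned above (postings per role and salary distribution) could be computed roughly as in the sketch below. It is an illustration under assumptions, not the project's own analysis code: the helper names `parse_pay` and `summarize` are hypothetical, the records are assumed to carry `keyword` and `pay` fields, and the salary formats (`1-1.5万/月`, `8-10千/月`, `12-24万/年`) are assumed rather than confirmed by the post.

```python
import re
from collections import Counter

# Assumed salary formats: "1-1.5万/月", "8-10千/月", "12-24万/年".
# Both the simplified (万) and traditional (萬) characters are accepted.
PAY_PATTERN = re.compile(r'^(\d+(?:\.\d+)?)-(\d+(?:\.\d+)?)(千|万|萬)/(月|年)$')
UNIT = {'千': 1_000, '万': 10_000, '萬': 10_000}


def parse_pay(pay):
    """Return (low, high) monthly salary in yuan, or None if the text is not recognized."""
    if not pay:
        return None
    match = PAY_PATTERN.match(pay.strip())
    if match is None:
        return None
    low, high, unit, period = match.groups()
    months = 12 if period == '年' else 1  # yearly figures are converted to monthly
    return float(low) * UNIT[unit] / months, float(high) * UNIT[unit] / months


def summarize(records):
    """Count postings per keyword and average the midpoint of every parsable salary."""
    counts = Counter(record['keyword'] for record in records)
    midpoints = {}
    for record in records:
        pay_range = parse_pay(record.get('pay'))
        if pay_range is not None:
            midpoints.setdefault(record['keyword'], []).append(sum(pay_range) / 2)
    averages = {key: sum(values) / len(values) for key, values in midpoints.items()}
    return counts, averages
```

Postings whose salary text does not match the assumed pattern are still counted, but they are left out of the average.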

GitHub repository: https://github.com/HowName/51job

- Statistics chart: Java is still the leader

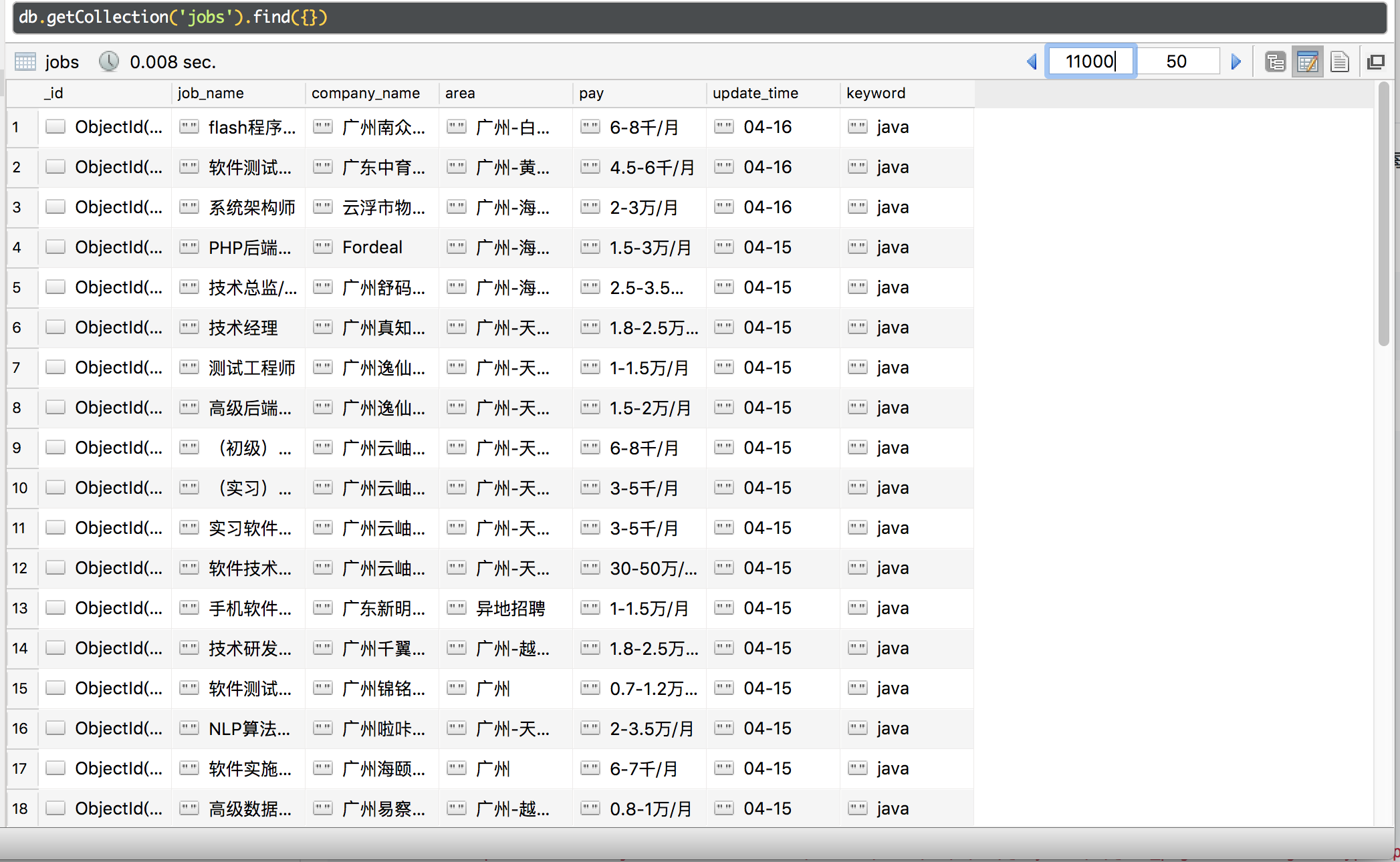

- Screenshot of the crawler running

- Screenshot of the data in MongoDB

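- Libraries used (installing the third-party ones with pip is recommended)

BeautifulSoup4, pymongo, requests (third-party); re, time (Python standard library, no installation needed)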
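Before running the crawler, it can help to check that the modules above are importable. The following is a minimal sketch; the helper name `missing_modules` is hypothetical. Note that BeautifulSoup4 is installed under the package name `beautifulsoup4` but imported as `bs4`.

```python
import importlib.util

# Import names of the modules the crawler uses; BeautifulSoup4 is imported as "bs4".
REQUIRED_MODULES = ['bs4', 'pymongo', 'requests', 're', 'time']


def missing_modules(names):
    """Return the module names that cannot be found in the current environment."""
    return [name for name in names if importlib.util.find_spec(name) is None]
```

Calling `missing_modules(REQUIRED_MODULES)` returns an empty list when everything is installed; any name it does return still needs to be installed with pip.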

- Main project code

    import re
    import time

    from bs4 import BeautifulSoup

    from pack.DbUtil import DbUtil
    from pack.RequestUtil import RequestUtil

    # Search URL; @keyword and @cur_page are placeholders filled in for each request
    URL_TEMPLATE = 'https://search.51job.com/list/030200,000000,0000,00,9,99,@keyword,2,@cur_page.html?lang=c&stype=&postchannel=0000&workyear=99&cotype=99&degreefrom=99&jobterm=99&companysize=99&providesalary=99&lonlat=0%2C0&radius=-1&ord_field=0&confirmdate=9&fromType=&dibiaoid=0&address=&line=&specialarea=00&from=&welfare='


    def build_url(keyword, page):
        """Fill the keyword and page number into the search URL."""
        return URL_TEMPLATE.replace('@keyword', str(keyword)).replace('@cur_page', str(page))


    db = DbUtil()

    # Job keywords to search for
    keywords = ['php', 'java', 'python', 'node.js', 'go', 'hadoop', 'AI', '算法工程師', 'ios', 'android', '區塊鏈', '大數據']

    for keyword in keywords:
        cur_page = 1
        req = RequestUtil()
        html_str = req.get(build_url(keyword, cur_page))
        if not html_str:
            # Without the first page the total page count is unknown, so skip this keyword
            print('keyword:', keyword, 'fail page:', cur_page)
            continue

        # Read the total number of pages from the first page
        soup = BeautifulSoup(html_str, 'html.parser')  # lxml is the recommended parser
        the_total_page = soup.select('.p_in .td')[0].string.strip()
        the_total_page = int(re.sub(r"\D", "", the_total_page))  # keep only the digits

        print('keyword:', keyword, 'total page: ', the_total_page)
        print('start...')

        # Loop over every result page
        while cur_page <= the_total_page:
            req = RequestUtil()
            html_str = req.get(build_url(keyword, cur_page))
            if html_str:
                soup = BeautifulSoup(html_str, 'html.parser')
                # print(soup.prettify())  # pretty-print the page for debugging
                # Drop the first element (the table header row)
                the_all = soup.select('.dw_table .el')[1:]

                # Read each job posting on the page
                dict_data = []
                for item in the_all:
                    job_name = item.find(name='a').string.strip()
                    company_name = item.select('.t2')[0].find('a').string.strip()
                    area = item.select('.t3')[0].string.strip()
                    pay = item.select('.t4')[0].string  # may be None when no salary is listed
                    update_time = item.select('.t5')[0].string.strip()
                    dict_data.append(
                        {'job_name': job_name, 'company_name': company_name, 'area': area,
                         'pay': pay, 'update_time': update_time, 'keyword': keyword}
                    )

                # Insert into MongoDB
                db.insert(dict_data)
                print('keyword:', keyword, 'success page:', cur_page, 'insert count:', len(dict_data))
                time.sleep(0.5)
            else:
                print('keyword:', keyword, 'fail page:', cur_page)

            # Move on to the next page
            cur_page += 1

        print('keyword:', keyword, 'fetch end...')

    print('Mission complete!!!')
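The main code relies on `pack.RequestUtil`, which is not shown in this post; the only behavior visible here is that `get(url)` returns the page HTML, or something falsy on failure. The sketch below is a hypothetical stand-in with that contract, built on the standard library's `urllib` rather than `requests`. The class name `SimpleRequestUtil`, the `gbk` default encoding, the timeout, and the User-Agent header are all assumptions, not the repository's implementation.

```python
import urllib.request


class SimpleRequestUtil:
    """Hypothetical stand-in for pack.RequestUtil; not the repository's implementation."""

    def __init__(self, encoding='gbk', timeout=10):
        # 'gbk' is an assumption about the page encoding; adjust it if the site differs.
        self.encoding = encoding
        self.timeout = timeout
        self.headers = {'User-Agent': 'Mozilla/5.0'}

    def get(self, url):
        """Return the decoded page body, or None when the request fails."""
        try:
            request = urllib.request.Request(url, headers=self.headers)
            with urllib.request.urlopen(request, timeout=self.timeout) as response:
                return response.read().decode(self.encoding, errors='replace')
        except (OSError, ValueError):
            # URLError, HTTPError and socket timeouts are OSError subclasses;
            # a malformed URL raises ValueError.
            return None
```

Returning `None` on failure lets the `if html_str:` check in the main loop log the failed page and continue with the next one.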

浙公網安備 33010602011771號