langgraph-genui


https://github.com/fanqingsong/langgraph-genui

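This is a LangGraph-based project with a microservice architecture, consisting of two independent microservices: an agent service and a frontend chat interface.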

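langgraph-genui/
├── agent/                  # Agent microservice
│   ├── src/                # Agent source code
│   ├── langgraph.json      # LangGraph configuration
│   ├── pyproject.toml      # Python project configuration
│   ├── requirements.txt    # Python dependencies
│   ├── Dockerfile          # Agent Docker configuration
│   ├── docker-compose.yml  # Agent Docker Compose configuration
│   ├── start.sh            # Agent startup script
│   └── README.md           # Agent service documentation
├── chatui/                 # Frontend chat UI microservice
│   ├── src/                # Frontend source code
│   ├── public/             # Static assets
│   ├── package.json        # Node.js project configuration
│   ├── Dockerfile          # Frontend Docker configuration
│   ├── docker-compose.yml  # Frontend Docker Compose configuration
│   ├── start.sh            # Frontend startup script
│   └── README.md           # Frontend service documentation
└── README.md               # Overall project documentation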

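agent service:

- Function: LangGraph agent backend service

- Tech stack: Python + LangGraph + Docker

- API: exposes the agent's API endpoints

- Studio: supports the LangGraph Studio visual interface

chatui service:

- Function: frontend chat interface

- Tech stack: Next.js + React + TypeScript + Docker

- Features: based on the agent-chat-ui project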

References:

Agent Chat UI

https://github.com/langchain-ai/agent-chat-ui

Agent Chat UI is a Next.js application which enables chatting with any LangGraph server with a messages key through a chat interface.

Once the app is running (or if using the deployed site), you'll be prompted to enter:

- Deployment URL: The URL of the LangGraph server you want to chat with. This can be a production or development URL.

- Assistant/Graph ID: The name of the graph, or the ID of the assistant, to use when fetching and submitting runs via the chat interface.

- LangSmith API Key: (only required for connecting to deployed LangGraph servers) Your LangSmith API key to use when authenticating requests sent to LangGraph servers.

After entering these values, click Continue. You'll then be redirected to a chat interface where you can start chatting with your LangGraph server.
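As a rough illustration of the three values above, and of the rule that the API key only matters for deployed servers, the sketch below models them as a Python dataclass. The class name ChatConnection, the LOCAL_HOSTS set, and the "local means localhost" heuristic are assumptions for illustration only; Agent Chat UI itself is a Next.js app and exposes no such API.

```python
from dataclasses import dataclass
from typing import Optional
from urllib.parse import urlparse

# Assumption: these host names count as a local development server.
LOCAL_HOSTS = {"localhost", "127.0.0.1", "0.0.0.0"}


@dataclass
class ChatConnection:
    """Hypothetical model of the three values Agent Chat UI asks for."""

    deployment_url: str
    assistant_id: str  # graph name or assistant ID
    langsmith_api_key: Optional[str] = None

    def is_local(self) -> bool:
        # urlparse(...).hostname drops the port and lower-cases the host
        return urlparse(self.deployment_url).hostname in LOCAL_HOSTS

    def validate(self) -> None:
        if not self.deployment_url or not self.assistant_id:
            raise ValueError("deployment URL and assistant/graph ID are required")
        # The API key is only required when connecting to a deployed server
        if not self.is_local() and not self.langsmith_api_key:
            raise ValueError("a LangSmith API key is required for deployed servers")
```

With this sketch, ChatConnection("http://localhost:2024", "agent").validate() succeeds, while the same call with a remote URL and no key raises ValueError.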

LangChain and the Vercel AI SDK: building generative UI together

https://www.bilibili.com/opus/944968117423964169

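Generative UI implementation flow

To understand how LangChain and the Vercel AI SDK work together to create a streaming UI, let's walk through the arrows in the diagram step by step.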

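Request flow (blue arrows)

- User interaction: the user interacts with the chat component in the frontend, asking a question (and optionally uploading files).

- Request logic: the interaction triggers a client-side UI component to send a request to the server-side RSC logic module, which contains the logic needed to handle the request. (Typically, the RSC logic module first returns prepared loading UIs to the Next.js React frontend, which renders them as soon as they arrive.)

- Calling LangChain.js: the RSC logic module forwards the request to LangChain.js, which acts as the bridge to the backend LangChain Python service.

- Calling LangServe: LangChain.js sends the request to LangServe (served through FastAPI), which invokes the model or tool specified in the request, and then starts receiving the streamed data in return.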
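The request path can be sketched with plain Python generators standing in for each hop. This is a hypothetical model, not LangChain.js or LangServe code: rsc_handle_request, remote_runnable_stream and langserve_stream are made-up names, and the only point is the ordering (loading UI first, then streamed chunks relayed through the bridge).

```python
from typing import Any, Dict, Iterator

Event = Dict[str, Any]


def langserve_stream(question: str) -> Iterator[Event]:
    """Stand-in for the LangServe (FastAPI) backend streaming model output."""
    for token in ["Looking", " up", " ", question]:
        yield {"kind": "token", "text": token}


def remote_runnable_stream(question: str) -> Iterator[Event]:
    """Stand-in for the LangChain.js bridge: relays backend chunks unchanged."""
    yield from langserve_stream(question)


def rsc_handle_request(question: str) -> Iterator[Event]:
    """Stand-in for the server-side RSC logic module."""
    # Step 1: send a loading UI first so the frontend can render immediately
    yield {"kind": "ui", "component": "Loading"}
    # Step 2: forward the request and relay the streamed chunks as they arrive
    yield from remote_runnable_stream(question)


for event in rsc_handle_request("Paris"):
    print(event)
```

Generators are used here because they naturally model "produce a value, hand it on, keep going", which is the streaming behavior the steps describe.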

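Response flow (purple arrows)

- Invoking application logic: LangServe processes the request and invokes the specified LLM application logic. This process is managed by LangGraph, which runs the application's reasoning logic, or the tools bound to the model (for example, Foo and Bar), as needed.

- Streaming data back: LangServe streams the results of the model or tool execution back to the server-side RSC logic module through LangChain.js's RemoteRunnable object.

- Streaming UI: based on the response data, the RSC logic module creates or updates streamable components and sends the server-rendered UI content back to the frontend.

- UI update: when the Next.js React client receives the new renderable content, it dynamically updates the frontend UI (for example, rendering the component for the Foo tool), giving a seamless, interactive experience with the new data.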
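The response path can be modeled in the same style. A hypothetical render_stream function folds streamed events into the components the client displays: streamed text replaces the loading placeholder, and each tool result (such as Foo) gets its own component. The event shapes, slot keys, and the TOOL_COMPONENTS mapping are assumptions for illustration, not part of the Vercel AI SDK.

```python
from typing import Any, Dict, Iterable, Tuple

Component = Tuple[str, Dict[str, Any]]

# Hypothetical mapping from tool name to the component that renders its result
TOOL_COMPONENTS = {"foo": "FooCard", "bar": "BarCard"}


def render_stream(events: Iterable[Dict[str, Any]]) -> Dict[str, Component]:
    """Fold streamed events into the components shown by the client, keyed by slot."""
    # The "main" slot starts as the loading placeholder sent with the request
    ui: Dict[str, Component] = {"main": ("Loading", {})}
    for event in events:
        if event["kind"] == "token":
            name, props = ui["main"]
            text = props.get("text", "") if name == "Text" else ""
            # Replace the placeholder (or the previous text) with the longer text
            ui["main"] = ("Text", {"text": text + event["text"]})
        elif event["kind"] == "tool_result":
            # Each tool call gets its own slot rendered by the tool's component
            ui[event["call_id"]] = (TOOL_COMPONENTS[event["tool"]], event["output"])
    return ui
```

Keying components by slot means an update replaces a component in place instead of appending a duplicate, which is the "create or update" behavior described above.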

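Summary

Combining LangChain with the Vercel AI SDK gives developers a powerful toolkit for building generative UI applications. By drawing on the strengths of both, developers can create highly personalized, interactive user interfaces that adapt to user behavior and preferences in real time. The integration not only improves user engagement but also simplifies development, making complex AI-driven applications easier to build.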

LangChain Generative UI

https://www.bilibili.com/video/BV1T4421D7pR/?vd_source=57e261300f39bf692de396b55bf8c41b&spm_id_from=333.788.player.switch

Gen UI Python: https://github.com/bracesproul/gen-ui-python

Gen UI JS: https://github.com/bracesproul/gen-ui

Vercel AI SDK RSC: https://sdk.vercel.ai/docs/ai-sdk-rsc/overview

How to implement generative user interfaces with LangGraph

https://docs.langchain.com/langgraph-platform/generative-ui-react

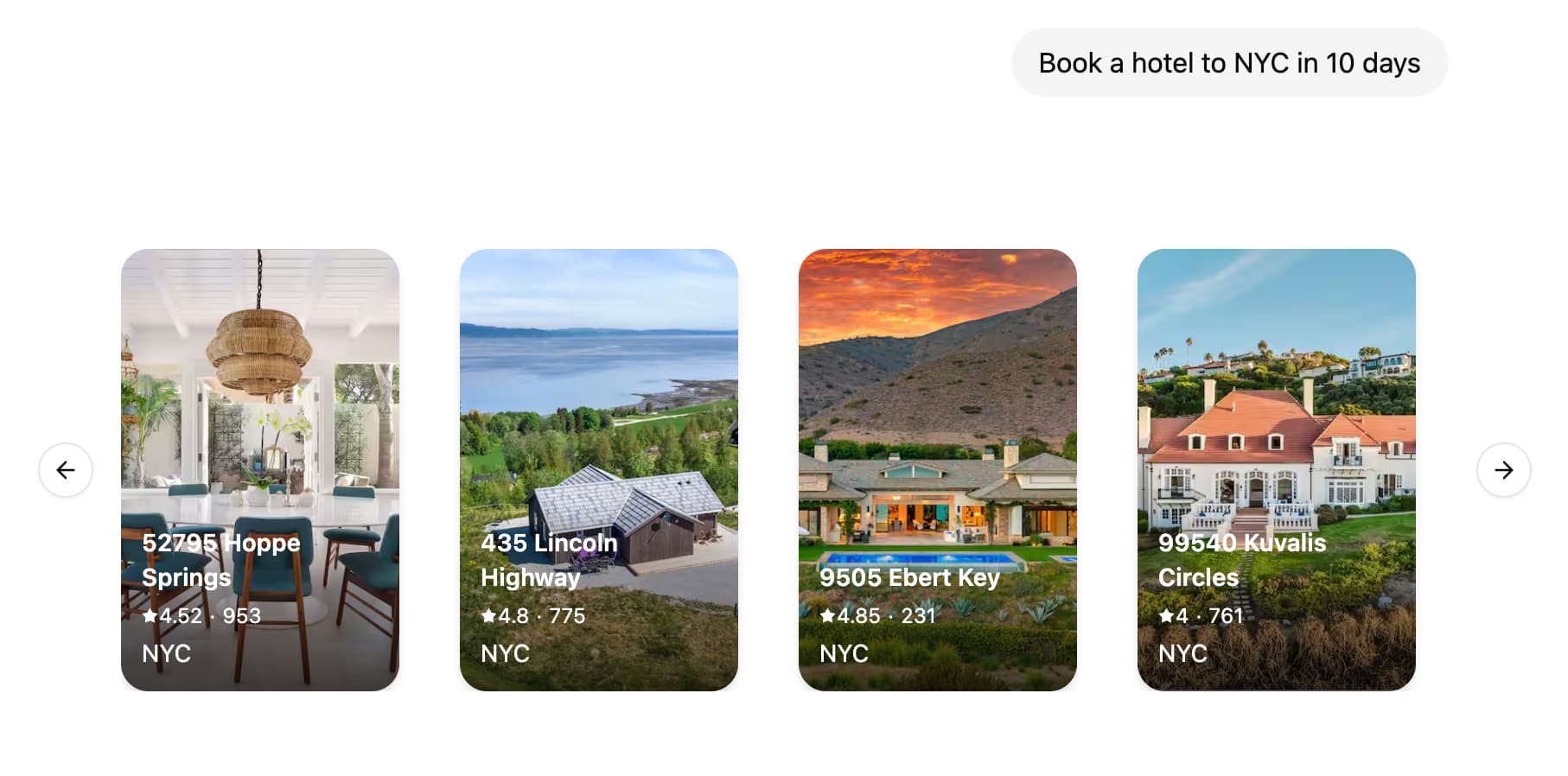

Generative user interfaces (Generative UI) allow agents to go beyond text and generate rich user interfaces. This enables more interactive, context-aware applications whose UI adapts to the conversation flow and the AI's responses.

LangGraph Platform supports colocating your React components with your graph code. This allows you to focus on building specific UI components for your graph while easily plugging into existing chat interfaces such as Agent Chat and loading the code only when actually needed.
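The idea of colocated components that are loaded only when needed can be sketched as a name-keyed registry with lazy loaders. On the real platform the components are React files served alongside the graph; this Python version is only an analogy, and LOADERS, render_ui_message and the card strings are hypothetical.

```python
from typing import Callable, Dict, List

Renderer = Callable[[dict], str]

loaded: List[str] = []  # records which components were actually loaded


def _load_weather() -> Renderer:
    loaded.append("weather")
    return lambda props: f"[Weather card: {props['city']}]"


def _load_stock() -> Renderer:
    loaded.append("stock")
    return lambda props: f"[Stock ticker: {props['symbol']}]"


# Hypothetical registry: component name -> loader (stands in for a lazy import)
LOADERS: Dict[str, Callable[[], Renderer]] = {"weather": _load_weather, "stock": _load_stock}
_cache: Dict[str, Renderer] = {}


def render_ui_message(msg: dict) -> str:
    """Render a UI message by looking up its component by name."""
    name = msg["name"]
    if name not in _cache:  # load each component at most once, on first use
        _cache[name] = LOADERS[name]()
    return _cache[name](msg["props"])
```

Rendering two "weather" messages loads the weather component once and never loads the stock component, which mirrors "loading the code only when actually needed".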

Agent Chat UI

https://docs.langchain.com/oss/python/langchain/ui

LangChain provides a powerful prebuilt user interface that works seamlessly with agents created using create_agent(). This UI is designed to provide rich, interactive experiences for your agents with minimal setup, whether you're running locally or in a deployed context (such as LangGraph Platform).

Agent Chat UI

Agent Chat UI is a Next.js application that provides a conversational interface for interacting with any LangChain agent. It supports real-time chat, tool visualization, and advanced features like time-travel debugging and state forking. Agent Chat UI is open source and can be adapted to your application needs.
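Time-travel debugging and state forking rest on the agent's history being stored as a chain of checkpoints. The minimal in-memory sketch below assumes each checkpoint only records its parent and the message list (LangGraph's real checkpointers store much more); it shows how forking from an earlier checkpoint creates a new branch while the original branch stays intact. ThreadHistory and Checkpoint are hypothetical names.

```python
from dataclasses import dataclass, field
from typing import Dict, List, Optional


@dataclass
class Checkpoint:
    id: int
    parent: Optional[int]
    messages: List[str]


@dataclass
class ThreadHistory:
    """Hypothetical in-memory history: every step is saved as a checkpoint."""

    checkpoints: Dict[int, Checkpoint] = field(default_factory=dict)

    def save(self, parent: Optional[int], messages: List[str]) -> int:
        cp_id = len(self.checkpoints)
        # Copy the list so later edits cannot mutate a stored checkpoint
        self.checkpoints[cp_id] = Checkpoint(cp_id, parent, list(messages))
        return cp_id

    def fork(self, from_id: int, new_message: str) -> int:
        # Time travel: continue from an earlier checkpoint instead of the latest,
        # creating a new branch; existing checkpoints are left unchanged
        base = self.checkpoints[from_id]
        return self.save(from_id, base.messages + [new_message])
```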

Connect to your agent

Agent Chat UI can connect to both local and deployed agents. After starting Agent Chat UI, you’ll need to configure it to connect to your agent:

- Graph ID: Enter your graph name (find this under graphs in your langgraph.json file)

- Deployment URL: Your LangGraph server's endpoint (e.g., http://localhost:2024 for local development, or your deployed agent's URL)

- LangSmith API key (optional): Add your LangSmith API key (not required if you're using a local LangGraph server)
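To find the Graph ID, read the keys of the graphs mapping in langgraph.json. The helper below is a small sketch of that lookup; the function names are hypothetical, and the example entry "./src/agent/graph.py:graph" (file path, then the compiled graph variable) follows the usual langgraph.json convention but should be checked against your own file.

```python
import json
from pathlib import Path
from typing import List


def graph_ids_from_config(config_text: str) -> List[str]:
    """Return the keys of the "graphs" mapping: the IDs Agent Chat UI accepts."""
    config = json.loads(config_text)
    return sorted(config.get("graphs", {}))


def list_graph_ids(config_path: str = "langgraph.json") -> List[str]:
    """Read langgraph.json from disk and list its graph IDs."""
    return graph_ids_from_config(Path(config_path).read_text(encoding="utf-8"))


example = '{"dependencies": ["."], "graphs": {"agent": "./src/agent/graph.py:graph"}}'
print(graph_ids_from_config(example))  # prints ['agent']
```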

Once configured, Agent Chat UI will automatically fetch and display any interrupted threads from your agent.

Claude Code walkthrough: a demo of building a generative UI with LangGraph

https://www.bilibili.com/video/BV1jZ37zoEgc/?vd_source=57e261300f39bf692de396b55bf8c41b

GAC platform: https://gaccode.com/signup?ref=UWDADYQI

Best practices: https://www.youware.com/project/12j7l4bqao

LangGraph GenUI: https://langchain-ai.github.io/langgraph/cloud/how-tos/generative_ui_react/

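How to implement generative user interfaces with LangGraph

https://github.langchain.ac.cn/langgraphjs/cloud/how-tos/generative_ui_react/#learn-more

Generative user interfaces (Generative UI) allow agents to go beyond text and generate rich user interfaces. This makes it possible to build more interactive, context-aware applications in which the UI adapts to the conversation flow and the AI's responses.

The LangGraph platform supports colocating your React components with your graph code. This lets you focus on building specific UI components for your graph while easily plugging them into existing chat interfaces such as Agent Chat, with the code loaded only when it is actually needed.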

    import uuid
    from typing import Annotated, Sequence, TypedDict

    from langchain_core.messages import AIMessage, BaseMessage
    from langchain_openai import ChatOpenAI
    from langgraph.graph import StateGraph
    from langgraph.graph.message import add_messages
    from langgraph.graph.ui import AnyUIMessage, ui_message_reducer, push_ui_message


    class AgentState(TypedDict):  # noqa: D101
        # Chat history, merged with the standard add_messages reducer
        messages: Annotated[Sequence[BaseMessage], add_messages]
        # Generative UI messages, merged with ui_message_reducer
        ui: Annotated[Sequence[AnyUIMessage], ui_message_reducer]


    async def weather(state: AgentState):
        # Structured output schema: the model only has to extract a city name
        class WeatherOutput(TypedDict):
            city: str

        weather: WeatherOutput = (
            await ChatOpenAI(model="gpt-4o-mini")
            .with_structured_output(WeatherOutput)
            # The "nostream" tag keeps this call's tokens out of the streamed messages
            .with_config({"tags": ["nostream"]})
            .ainvoke(state["messages"])
        )

        message = AIMessage(
            id=str(uuid.uuid4()),
            content=f"Here's the weather for {weather['city']}",
        )

        # Emit UI elements associated with the message
        push_ui_message("weather", weather, message=message)
        return {"messages": [message]}


    # Single-node graph: START -> weather
    workflow = StateGraph(AgentState)
    workflow.add_node(weather)
    workflow.add_edge("__start__", "weather")
    graph = workflow.compile()
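In the code above, push_ui_message emits an entry for the ui state key, and ui_message_reducer merges those entries across updates. The following is a simplified re-implementation written only to illustrate that merge behavior (append new IDs, replace or merge props for an existing ID, drop entries on a remove-ui message); it is not the library's source, and the exact fields produced by push_ui_message may differ from the hypothetical make_ui_message here.

```python
import uuid
from typing import Any, Dict, List

UIMessage = Dict[str, Any]


def make_ui_message(name: str, props: Dict[str, Any], message_id: str, merge: bool = False) -> UIMessage:
    """Roughly the shape of a UI message tied to a chat message (illustrative)."""
    return {
        "type": "ui",
        "id": str(uuid.uuid4()),
        "name": name,
        "props": props,
        "metadata": {"message_id": message_id, "merge": merge},
    }


def simple_ui_reducer(left: List[UIMessage], right: List[UIMessage]) -> List[UIMessage]:
    """Simplified stand-in for ui_message_reducer: append, update by id, or remove."""
    merged = list(left)
    index = {m["id"]: i for i, m in enumerate(merged)}
    removed = set()
    for msg in right:
        i = index.get(msg["id"])
        if msg["type"] == "remove-ui":
            removed.add(msg["id"])
        elif i is None:
            # New ID: append a new UI element
            index[msg["id"]] = len(merged)
            merged.append(msg)
        else:
            # Existing ID: replace it, optionally merging the previous props
            if msg.get("metadata", {}).get("merge"):
                msg = {**msg, "props": {**merged[i]["props"], **msg["props"]}}
            merged[i] = msg
    return [m for m in merged if m["id"] not in removed]
```

Keying by ID is what lets a node update a weather card in place (for example, adding a temperature later) instead of rendering a second card.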

浙公網安備 33010602011771號
