Python program for data classification experiments

Experimental setup requirements:

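- Datasets: 12 in total. A local folder contains several Excel files with the .xlsx extension. Each file holds one small dataset with a header row, where the last column is the class label (`class`) and all other columns are numeric features.

- Methods: XGBoost, AdaBoost, SVM (RBF kernel), and a neural network classifier.

- Output: classification accuracy, i.e., the mean and variance of the 10-fold cross-validation accuracy, with the whole experiment repeated 5 times. The results for each dataset are saved to a separate CSV file.

- Other requirements: give SVC an explicit RBF parameter (gamma), defaulting to 0.001; make MLPClassifier a neural network with one hidden layer of 100 nodes; configure the weak learners of XGBoost and AdaBoost; add data standardization and an n_jobs setting to the cross_val_score evaluation; and, since class labels may be non-contiguous or strings, add a class-label mapping.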
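The weak-learner requirement is not spelled out in the program itself. The following is a minimal sketch, assuming a decision stump (depth-1 tree) for AdaBoost and shallow trees for XGBoost. scikit-learn's AdaBoostClassifier already defaults to a depth-1 decision tree, so this only makes the choice explicit and easy to change. The values `n_estimators=100`, `max_depth=3`, and `learning_rate=0.1` in `xgb_params` are illustrative assumptions, not taken from the original. The iris dataset stands in for one of the Excel files.

```python
from sklearn.datasets import load_iris
from sklearn.ensemble import AdaBoostClassifier
from sklearn.model_selection import KFold, cross_val_score
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.tree import DecisionTreeClassifier

# Weak learner for AdaBoost: a decision stump (depth-1 tree).
# Passed positionally because the keyword is `estimator` in
# scikit-learn >= 1.2 and `base_estimator` in older releases.
ada = AdaBoostClassifier(DecisionTreeClassifier(max_depth=1), n_estimators=50)

# Assumed XGBoost weak-learner settings (shallow boosted trees);
# use them as xgb.XGBClassifier(**xgb_params) when xgboost is installed.
xgb_params = {"n_estimators": 100, "max_depth": 3, "learning_rate": 0.1}

# Standardize inside the pipeline so the scaler is fit on training folds only
pipeline = Pipeline([("scaler", StandardScaler()), ("classifier", ada)])

X, y = load_iris(return_X_y=True)
kf = KFold(n_splits=10, shuffle=True, random_state=0)
# n_jobs=1 runs folds serially; raise it (e.g. -1 for all cores) to parallelize
scores = cross_val_score(pipeline, X, y, cv=kf, n_jobs=1)
print(f"AdaBoost (stumps): mean={scores.mean():.3f}, var={scores.var():.4f}")
```

Deeper trees (for example `max_depth=2` or `3`) give AdaBoost stronger but less "weak" base learners. This is usually worth tuning per dataset.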

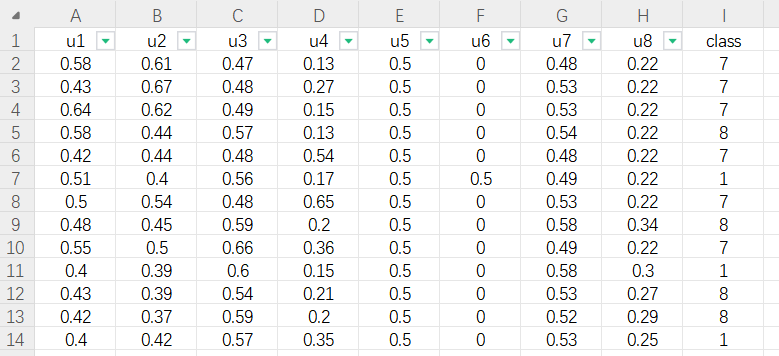

Excel中的數據形式如下:
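The feature/label split and the class mapping can be checked on a small in-memory table before touching real files. The following is a minimal sketch using a hypothetical toy DataFrame with the same layout (numeric features first, `class` last). The column names `f1` and `f2` and the values are made up for illustration.

```python
import pandas as pd
from sklearn.preprocessing import LabelEncoder

# Hypothetical toy table with the layout described above:
# numeric feature columns first, the class label in the last column.
df = pd.DataFrame({
    "f1": [0.5, 1.2, 3.3, 0.7, 2.9],
    "f2": [10.0, 11.5, 9.8, 10.2, 12.1],
    "class": ["B", "D", "Z", "B", "Z"],
})

X = df.iloc[:, :-1].values     # every column except the last
y_raw = df.iloc[:, -1].values  # last column: class labels

# LabelEncoder sorts the unique labels and maps them to 0..n_classes-1,
# which is the target format recent XGBoost versions expect.
le = LabelEncoder()
y = le.fit_transform(y_raw)

print(X.shape)                    # (5, 2)
print(y)                          # [0 1 2 0 2]
print(list(le.classes_))          # ['B', 'D', 'Z']
print(le.inverse_transform([2]))  # ['Z']
```

`le.inverse_transform` turns encoded predictions back into the original labels if they need to be reported.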

The Python program is as follows:

```python
import os

import numpy as np
import pandas as pd
import xgboost as xgb
from sklearn.ensemble import AdaBoostClassifier
from sklearn.model_selection import KFold, cross_val_score
from sklearn.neural_network import MLPClassifier
from sklearn.pipeline import Pipeline
from sklearn.preprocessing import LabelEncoder, StandardScaler
from sklearn.svm import SVC

# 1. Collect all .xlsx files in the data folder
data_folder = "./分類數據集"
file_list = [f for f in os.listdir(data_folder) if f.endswith(".xlsx")]

# Create the output folder if it does not exist yet
result_folder = "./results"
os.makedirs(result_folder, exist_ok=True)

# Define the classifiers
classifiers = {
    "XGBoost": xgb.XGBClassifier(),
    # https://scikit-learn.org/stable/modules/generated/sklearn.ensemble.AdaBoostClassifier.html
    "AdaBoost": AdaBoostClassifier(n_estimators=50),
    # RBF kernel with gamma=0.001 as required; scikit-learn's own default,
    # gamma='scale', uses 1 / (n_features * X.var()). Tune per dataset if needed.
    "SVM_rbf": SVC(C=1.0, kernel="rbf", gamma=0.001),
    # One hidden layer with 100 nodes
    "Neural_Network": MLPClassifier(hidden_layer_sizes=(100,), max_iter=1000),
}

# Run the classification experiments on each file
for file in file_list:
    df = pd.read_excel(os.path.join(data_folder, file))
    X = df.iloc[:, :-1].values
    y = df.iloc[:, -1].values

    # Class mapping: turn string or non-contiguous labels into 0..n_classes-1
    le = LabelEncoder()
    y = le.fit_transform(y)

    results = {
        "Classifier": [],
        "Experiment 1": [],
        "Experiment 2": [],
        "Experiment 3": [],
        "Experiment 4": [],
        "Experiment 5": [],
        "Mean Accuracy": [],
        "Accuracy Variance": [],
    }

    # Evaluate the four classifiers
    for clf_name, clf in classifiers.items():
        all_accuracies = []

        # Pipeline of standardization + classifier, so the scaler
        # is fit on the training folds only
        pipeline = Pipeline([
            ("scaler", StandardScaler()),
            ("classifier", clf),
        ])

        # Repeat the 10-fold experiment 5 times with fresh random splits
        for exp_num in range(1, 6):
            print(clf_name, exp_num)
            kf = KFold(n_splits=10, shuffle=True, random_state=None)
            # n_jobs=16 runs folds in parallel; adjust to your CPU core count
            accuracies = cross_val_score(pipeline, X, y, cv=kf, n_jobs=16)
            results[f"Experiment {exp_num}"].append(np.mean(accuracies))
            all_accuracies.extend(accuracies)

        # Mean and variance over all 5 x 10 = 50 fold accuracies
        results["Classifier"].append(clf_name)
        results["Mean Accuracy"].append(np.mean(all_accuracies))
        results["Accuracy Variance"].append(np.var(all_accuracies))

    # Save this dataset's results to its own .csv file
    result_df = pd.DataFrame(results)
    result_df.to_csv(
        os.path.join(result_folder, f"results_{file.replace('.xlsx', '.csv')}"),
        index=False,
    )

# Optional: process files in parallel threads. This requires moving the
# loop body above into a function process_file(file).
# from concurrent.futures import ThreadPoolExecutor
# with ThreadPoolExecutor() as executor:
#     executor.map(process_file, file_list)

print("Experiments completed!")
```
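The manual "5 repetitions × 10 folds" loop can also be expressed with scikit-learn's `RepeatedStratifiedKFold`. This is a swapped-in alternative, not the author's method: it uses stratified folds (each fold keeps the class proportions) instead of the plain `KFold` above. The fixed `random_state=42` is an assumption for reproducibility, since the original uses `random_state=None`. The wine dataset stands in for one Excel file.

```python
import numpy as np
from sklearn.datasets import load_wine
from sklearn.model_selection import RepeatedStratifiedKFold, cross_val_score
from sklearn.pipeline import make_pipeline
from sklearn.preprocessing import StandardScaler
from sklearn.svm import SVC

X, y = load_wine(return_X_y=True)

# 5 repetitions x 10 folds = 50 scores, yielded repetition by repetition
cv = RepeatedStratifiedKFold(n_splits=10, n_repeats=5, random_state=42)
pipeline = make_pipeline(StandardScaler(), SVC(kernel="rbf"))
scores = cross_val_score(pipeline, X, y, cv=cv, n_jobs=1)

# Per-repetition means, analogous to the "Experiment 1..5" columns
per_repeat = scores.reshape(5, 10).mean(axis=1)
print("per-repeat means:", np.round(per_repeat, 3))
# Mean and variance over all 50 fold scores, like "Mean Accuracy"/"Accuracy Variance"
print(f"mean={scores.mean():.3f}, var={scores.var():.5f}")
```

Replacing `RepeatedStratifiedKFold` with `RepeatedKFold` (same arguments) restores the unstratified splitting of the original program. Stratification mainly matters for small or imbalanced datasets, where a plain split can leave a fold with few or no samples of some class.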

浙公網安備 33010602011771號
