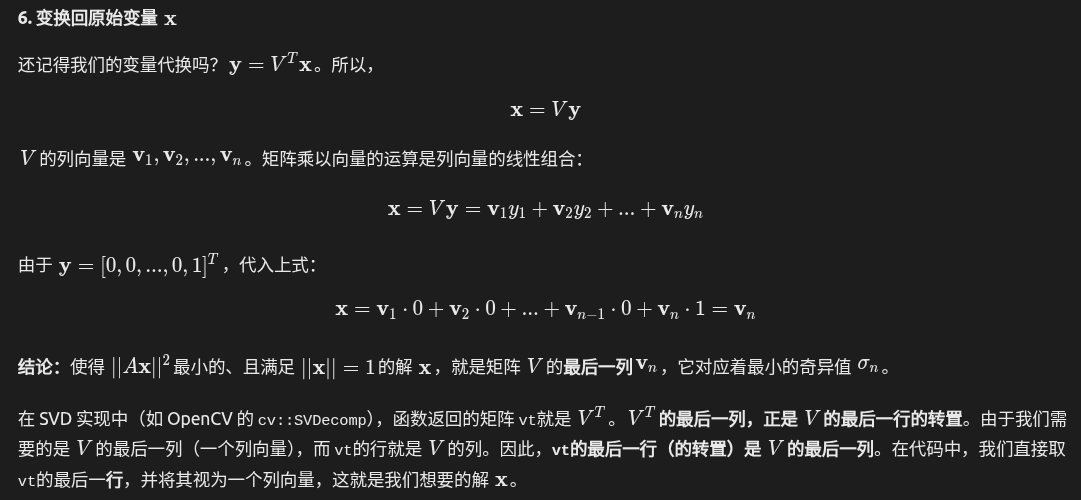

為什么V T 的最后一列就是解?

第一和第二幀產生地圖點后,如果進來第三幀,如何定位第三幀,并將其建立的新地圖點和現(xiàn)有地圖點合并一個坐標系。考慮到第三幀和第二幀的相對位姿尺度未必與第一幀和第二幀一致。參考orb slam2解決

這是一個非常核心的細節(jié)!這個過程被稱為 “地圖點投影匹配”或 “從粗到精的搜索”。ORB-SLAM2 通過一系列精心設計的策略來高效且準確地完成這個匹配。其核心思想是:利用已知的幾何信息來極大地縮小搜索范圍,而不是在整個圖像上進行暴力匹配。以下是詳細的步驟分解:

第一步:投影與創(chuàng)建搜索窗口

- 投影地圖點:

- 對于局部地圖中的每一個地圖點 Pw?(世界坐標系下的3D點),利用第三幀的初始估計位姿Tcw?(來自恒速模型或上一幀位姿)將其投影到第三幀的圖像平面上。

- 投影公式: ppred?=π(Tcw??Pw?),其中 π是相機投影模型。

- 確定搜索窗口和尺度:

- 搜索窗口:由于位姿估計和地圖點位置本身都有不確定性,投影點 ppred?不一定是精確的匹配位置。因此,系統(tǒng)會以 ppred?為中心,建立一個矩形的搜索窗口。

- 尺度不確定性:地圖點是在其被創(chuàng)建的關鍵幀的尺度上被觀測到的。由于第三幀可能與創(chuàng)建該點的關鍵幀距離不同,同一個地圖點在不同幀中可能會出現(xiàn)在不同的圖像金字塔層級(尺度)上。

- 預測尺度:ORB-SLAM2 會根據地圖點與第三幀光心的距離d來預測它應該在第三幀的哪個圖像金字塔層級出現(xiàn)。具體來說,地圖點會記錄它被創(chuàng)建時的一個平均觀測距離 dref?和所在的金字塔層級 lref?。

- 預測的尺度公式大致為: lpred?=lref?+log2?(dref?d?)。

- 這個預測的尺度 lpred?決定了搜索應該在哪個金字塔層級圖像上進行,同時也決定了搜索窗口的半徑(通常高層級的搜索半徑會變小)。

第二步:在搜索窗口內進行匹配(描述子距離計算)

現(xiàn)在,任務是在第三幀上,以上述投影點 ppred?為中心、在預測尺度 lpred?附近的一個小范圍內,找到一個特征點,其ORB描述子與地圖點的ORB描述子最相似。

- 獲取地圖點的描述子:每個地圖點都存儲了一個代表性ORB描述子。這個描述子通常是在它被創(chuàng)建時,所有觀測到它的關鍵幀的描述子中的中值描述子(通過計算每個bit位出現(xiàn)最多的值來得到),這使其對誤差有一定的魯棒性。

- 在搜索窗口內遍歷所有候選特征點:

- 在第三幀上,找出所有位于搜索窗口內,并且所在金字塔層級與預測尺度 lpred?相近(例如 lpred?±1)的特征點。這些點就是潛在的匹配候選點。

- 計算描述子距離:

- 將地圖點的描述子與搜索窗口內每一個候選特征點的描述子進行比較。

- ORB描述子是二進制描述子(256位),比較它們使用漢明距離(Hamming Distance),即兩個二進制串之間不同位的個數(shù)。漢明距離越小,相似度越高。

- 篩選最佳匹配:

- 找出所有候選點中,與地圖點描述子漢明距離最小的那個特征點。

- 為了確保匹配質量,這個最小漢明距離必須低于一個預設的閾值(比如50)。同時,還需要檢查次優(yōu)匹配的質量,即“最近鄰/次近鄰”比率檢驗:最佳匹配的距離要明顯小于次佳匹配的距離(例如,最佳距離 < 0.8 * 次佳距離),以避免模糊匹配。

第三步:幾何驗證與異常值剔除

通過描述子匹配得到的對應關系可能仍然包含錯誤匹配(外點)。ORB-SLAM2 在后續(xù)的位姿優(yōu)化步驟中會進行嚴格的幾何驗證。

- 直接線性變換(DLT)或RANSAC-PnP:在初始匹配階段,有時會使用帶RANSAC的PnP來快速剔除明顯的錯誤匹配。

- 運動-only的BA優(yōu)化(重投影誤差優(yōu)化):這是最核心的步驟。在獲得一組初步的匹配后,系統(tǒng)會構建一個優(yōu)化問題:

Tcw?min?i∑?ρ(∥pi??π(Tcw??Piw?)∥2) - 在這個優(yōu)化過程中,重投影誤差過大的匹配對會被標記為外點。優(yōu)化器(如g2o)會使用魯棒核函數(shù)(如Huber核)來降低這些外點的影響。

- 優(yōu)化完成后,那些誤差始終很大的匹配會被直接剔除。

參考代碼

'''

# 創(chuàng)建環(huán)境

conda create --name yuyislam python=3.10

# 激活環(huán)境

conda activate pytorch_cuda

# 安裝 PyTorch 和 CUDA 11.3(官方推薦組合)

pip install torch torchvision torchaudio --index-url https://download.pytorch.org/whl/cu118

# 安裝 OpenCV 和其他依賴

conda install opencv numpy pandas matplotlib pyyaml

# 使用 pip 安裝兼容 Python 3.10 的 Open3D

pip install open3d

pip install -U g2o-python

使用 environment.yml 文件創(chuàng)建

conda env create -f environment.yml

conda activate yuyislam

data_path = "/home/dongdong/2project/0data/RTK/data_1_nwpuUp/data3_1130_13pm/300_location_14pm" # 修改為您的數(shù)據路徑

'''

import os

import cv2

import numpy as np

import yaml

import pickle

import open3d as o3d

from typing import List, Dict, Tuple, Optional, Set

import matplotlib.pyplot as plt

from collections import deque, defaultdict

import time

class Camera:

def __init__(self, config_path: str):

self.load_config(config_path)

self.K = np.array([

[self.fx, 0, self.cx],

[0, self.fy, self.cy],

[0, 0, 1]

])

# 修正畸變參數(shù)格式為OpenCV期望的形狀

self.dist_coeffs = np.zeros((1, 5), dtype=np.float64)

self.dist_coeffs[0, 0] = self.k1

self.dist_coeffs[0, 1] = self.k2

self.dist_coeffs[0, 2] = self.p1

self.dist_coeffs[0, 3] = self.p2

self.dist_coeffs[0, 4] = self.k3

print(f"相機內參加載成功:")

print(f" 名稱: {self.name}")

print(f" 模型: {self.model}")

print(f" 圖像尺寸: {self.cols} x {self.rows}")

print(f" 焦距: fx={self.fx:.2f}, fy={self.fy:.2f}")

print(f" 主點: cx={self.cx:.2f}, cy={self.cy:.2f}")

print(f" 畸變參數(shù): k1={self.k1}, k2={self.k2}, k3={self.k3}, k4={self.k4}")

print(f" 切向畸變: p1={self.p1}, p2={self.p2}")

print(f" 顏色順序: {self.color_order}")

print(f" 幀率: {self.fps} FPS")

print(f" 畸變參數(shù)形狀: {self.dist_coeffs.shape}")

def load_config(self, config_path: str):

"""從YAML文件加載相機內參"""

if not os.path.exists(config_path):

raise FileNotFoundError(f"相機配置文件不存在: {config_path}")

with open(config_path, 'r') as file:

config = yaml.safe_load(file)

print("原始配置文件內容:")

print(config)

# 正確獲取Camera配置

if 'Camera' in config:

camera_config = config['Camera']

else:

camera_config = config

# 按照您指定的格式讀取相機內參

self.cols = camera_config.get('Camera.cols', 1805)

self.rows = camera_config.get('Camera.rows', 1203)

self.cx = camera_config.get('Camera.cx', 910.8785687265895)

self.cy = camera_config.get('Camera.cy', 602.174293145834)

self.fx = camera_config.get('Camera.fx', 1193.7076128098686)

self.fy = camera_config.get('Camera.fy', 1193.1735265967602)

self.k1 = camera_config.get('Camera.k1', 0.0)

self.k2 = camera_config.get('Camera.k2', 0.0)

self.k3 = camera_config.get('Camera.k3', 0.0)

self.k4 = camera_config.get('Camera.k4', 0.0)

self.p1 = camera_config.get('Camera.p1', 0.0)

self.p2 = camera_config.get('Camera.p2', 0.0)

self.model = camera_config.get('Camera.model', 'perspective')

self.color_order = camera_config.get('Camera.color_order', 'RGB')

self.name = camera_config.get('Camera.name', 'NWPU monocular')

self.fps = camera_config.get('Camera.fps', 10)

class MapPoint:

def __init__(self, point_3d: np.ndarray, descriptor: np.ndarray, creating_kf_id: int):

self.point_3d = point_3d.copy() # 3D坐標(世界坐標系)

self.descriptor = descriptor.copy() if descriptor is not None else None # ORB描述子

self.observations = [] # 觀測列表 [(frame_id, kp_index)]

self.observing_keyframes = set() # 觀測關鍵幀ID集合

self.track_count = 0 # 被跟蹤次數(shù)

self.is_new = True # 是否是新增點

self.creating_kf_id = creating_kf_id # 創(chuàng)建該點的關鍵幀ID

def add_observation(self, frame_id: int, kp_index: int):

"""添加觀測關系"""

if frame_id not in self.observing_keyframes:

self.observations.append((frame_id, kp_index))

self.observing_keyframes.add(frame_id)

self.track_count += 1

if len(self.observing_keyframes) > 1:

self.is_new = False

class KeyFrame:

def __init__(self, frame_id: int, image: np.ndarray, keypoints: List[cv2.KeyPoint],

descriptors: np.ndarray, pose: np.ndarray):

self.frame_id = frame_id

self.image = image

self.keypoints = keypoints # ORB特征點

self.descriptors = descriptors # ORB描述子

self.pose = pose.copy() # 4x4變換矩陣(世界坐標系)

self.map_points = [] # 關聯(lián)的地圖點索引

self.map_point_indices = {} # 關鍵點索引到地圖點索引的映射

class BundleAdjustment:

"""使用g2o進行光束法平差優(yōu)化"""

@staticmethod

def optimize_pose_and_points(keyframes: List[KeyFrame], map_points: List[MapPoint],

camera_K: np.ndarray, max_iterations: int = 10):

"""使用g2o優(yōu)化位姿和地圖點"""

if len(keyframes) < 2 or len(map_points) == 0:

return keyframes, map_points

try:

# 創(chuàng)建優(yōu)化器

optimizer = g2o.SparseOptimizer()

solver = g2o.BlockSolverSE3(g2o.LinearSolverCSparseSE3())

solver = g2o.OptimizationAlgorithmLevenberg(solver)

optimizer.set_algorithm(solver)

# 添加相機參數(shù)

cam = g2o.CameraParameters(

camera_K[0, 0], # fx

[camera_K[0, 2], camera_K[1, 2]], # cx, cy

0 # 基線(單目為0)

)

cam.set_id(0)

optimizer.add_parameter(cam)

# 添加關鍵幀頂點

frame_vertices = {}

for i, kf in enumerate(keyframes):

v_se3 = g2o.VertexSE3Expmap()

v_se3.set_id(i)

v_se3.set_estimate(g2o.SE3Quat(kf.pose[:3, :3], kf.pose[:3, 3]))

v_se3.set_fixed(i == 0) # 固定第一幀

optimizer.add_vertex(v_se3)

frame_vertices[kf.frame_id] = v_se3

# 添加地圖點頂點

point_vertices = {}

for i, mp in enumerate(map_points):

v_p = g2o.VertexPointXYZ()

v_p.set_id(i + len(keyframes))

v_p.set_estimate(mp.point_3d)

v_p.set_marginalized(True)

v_p.set_fixed(False)

optimizer.add_vertex(v_p)

point_vertices[i] = v_p

# 添加邊(觀測)

edge_id = 0

for mp_idx, mp in enumerate(map_points):

for frame_id, kp_idx in mp.observations:

if frame_id in frame_vertices:

# 找到對應的關鍵幀

kf = next((kf for kf in keyframes if kf.frame_id == frame_id), None)

if kf is not None and kp_idx < len(kf.keypoints):

# 創(chuàng)建邊

edge = g2o.EdgeProjectXYZ2UV()

edge.set_vertex(0, point_vertices[mp_idx])

edge.set_vertex(1, frame_vertices[frame_id])

edge.set_measurement([kf.keypoints[kp_idx].pt[0], kf.keypoints[kp_idx].pt[1]])

edge.set_information(np.eye(2))

edge.set_parameter_id(0, 0)

edge.set_id(edge_id)

optimizer.add_edge(edge)

edge_id += 1

# 優(yōu)化

print(f"開始g2o優(yōu)化,關鍵幀: {len(keyframes)}, 地圖點: {len(map_points)}, 邊: {edge_id}")

optimizer.initialize_optimization()

optimizer.optimize(max_iterations)

# 更新優(yōu)化后的位姿和地圖點

for i, kf in enumerate(keyframes):

if kf.frame_id in frame_vertices:

v_se3 = frame_vertices[kf.frame_id]

optimized_pose = v_se3.estimate()

kf.pose[:3, :3] = optimized_pose.rotation().matrix()

kf.pose[:3, 3] = optimized_pose.translation()

for i, mp in enumerate(map_points):

if i in point_vertices:

v_p = point_vertices[i]

mp.point_3d = v_p.estimate()

print("g2o優(yōu)化完成")

except Exception as e:

print(f"g2o優(yōu)化失敗: {e}")

return keyframes, map_points

class MonoSLAM:

def __init__(self, config_path: str, image_folder: str):

self.camera = Camera(config_path)

self.image_folder = image_folder

if not os.path.exists(image_folder):

raise FileNotFoundError(f"圖像文件夾不存在: {image_folder}")

# ORB特征提取器

self.orb = cv2.ORB_create(nfeatures=2000, scaleFactor=1.2, nlevels=8)

self.bf_matcher = cv2.BFMatcher(cv2.NORM_HAMMING, crossCheck=False)

# SLAM狀態(tài)

self.keyframes: List[KeyFrame] = []

self.map_points: List[MapPoint] = []

self.current_frame_id = 0

self.world_pose = np.eye(4) # 世界坐標系位姿(第一幀為原點)

# 可視化

self.vis = None

self.point_cloud = None

self.camera_trajectory = None

# 參數(shù)

self.min_matches = 50

self.keyframe_threshold = 0.1

self.pnp_reprojection_threshold = 3.0

self.descriptor_match_threshold = 50 # 描述子匹配閾值

print("單目SLAM系統(tǒng)初始化完成")

def init_visualization(self):

"""初始化可視化窗口"""

if self.vis is None:

self.vis = o3d.visualization.Visualizer()

self.vis.create_window(window_name='MonoSLAM', width=1200, height=800)

# 設置視角

ctr = self.vis.get_view_control()

ctr.set_front([0, 0, -1])

ctr.set_lookat([0, 0, 0])

ctr.set_up([0, -1, 0])

ctr.set_zoom(0.8)

def load_images(self) -> List[str]:

"""加載并排序圖像文件"""

image_files = [f for f in os.listdir(self.image_folder)

if f.lower().endswith(('.jpg', '.jpeg', '.png', '.JPG', '.JPEG', '.PNG'))]

def extract_number(filename):

numbers = ''.join(filter(str.isdigit, os.path.splitext(filename)[0]))

return int(numbers) if numbers else 0

image_files.sort(key=extract_number)

full_paths = [os.path.join(self.image_folder, f) for f in image_files]

print(f"找到 {len(image_files)} 張圖像")

return full_paths

def extract_features(self, image: np.ndarray) -> Tuple[List[cv2.KeyPoint], np.ndarray]:

"""提取ORB特征點"""

if len(image.shape) == 3:

if self.camera.color_order == 'RGB':

image_gray = cv2.cvtColor(image, cv2.COLOR_RGB2GRAY)

else:

image_gray = cv2.cvtColor(image, cv2.COLOR_BGR2GRAY)

else:

image_gray = image

keypoints, descriptors = self.orb.detectAndCompute(image_gray, None)

if descriptors is None:

descriptors = np.array([])

print(f"提取到 {len(keypoints)} 個特征點")

return keypoints, descriptors

def match_features(self, desc1: np.ndarray, desc2: np.ndarray, ratio_test=0.8) -> List[cv2.DMatch]:

"""匹配特征點"""

if desc1 is None or desc2 is None or len(desc1) == 0 or len(desc2) == 0:

return []

# 使用knn匹配

matches = self.bf_matcher.knnMatch(desc1, desc2, k=2)

# 應用比率測試

good_matches = []

for match_pair in matches:

if len(match_pair) == 2:

m, n = match_pair

if m.distance < ratio_test * n.distance:

good_matches.append(m)

return good_matches

def match_with_map_points(self, descriptors: np.ndarray) -> List[Tuple[int, int]]:

"""使用描述子匹配當前幀特征點與地圖點"""

if len(self.map_points) == 0 or descriptors is None or len(descriptors) == 0:

return []

# 收集所有地圖點的描述子

map_descriptors = []

valid_map_indices = []

for i, mp in enumerate(self.map_points):

if mp.descriptor is not None and len(mp.descriptor) > 0:

map_descriptors.append(mp.descriptor)

valid_map_indices.append(i)

if len(map_descriptors) == 0:

return []

map_descriptors = np.array(map_descriptors)

# 匹配當前幀描述子與地圖點描述子

matches = self.bf_matcher.match(descriptors, map_descriptors)

# 應用距離閾值

good_matches = []

for match in matches:

if match.distance < self.descriptor_match_threshold:

map_point_idx = valid_map_indices[match.trainIdx]

good_matches.append((match.queryIdx, map_point_idx))

print(f"地圖點匹配: {len(good_matches)}/{len(descriptors)}")

return good_matches

def initialize_map(self, image1: np.ndarray, image2: np.ndarray) -> bool:

"""初始化地圖:第一幀和第二幀三角化生成初始地圖"""

print("=== 初始化地圖 ===")

# 提取第一幀特征

kps1, descs1 = self.extract_features(image1)

if len(kps1) < self.min_matches:

print("第一幀特征點不足,無法初始化")

return False

# 提取第二幀特征

kps2, descs2 = self.extract_features(image2)

if len(kps2) < self.min_matches:

print("第二幀特征點不足,無法初始化")

return False

# 匹配特征

matches = self.match_features(descs1, descs2)

print(f"初始化匹配點: {len(matches)}")

if len(matches) < self.min_matches:

print("匹配點不足,無法初始化")

return False

# 估計相對位姿

pts1 = np.float32([kps1[m.queryIdx].pt for m in matches])

pts2 = np.float32([kps2[m.trainIdx].pt for m in matches])

E, mask = cv2.findEssentialMat(pts1, pts2, self.camera.K, method=cv2.RANSAC, prob=0.999, threshold=1.0)

if E is None or mask.sum() < self.min_matches:

print("本質矩陣估計失敗")

return False

_, R, t, mask = cv2.recoverPose(E, pts1, pts2, self.camera.K, mask=mask)

inlier_indices = [i for i in range(len(matches)) if mask[i] > 0]

print(f"位姿估計內點: {len(inlier_indices)}/{len(matches)}")

if len(inlier_indices) < self.min_matches:

print("內點不足,無法初始化")

return False

# 創(chuàng)建第一關鍵幀(世界坐標系原點)

pose1 = np.eye(4)

kf1 = KeyFrame(0, image1, kps1, descs1, pose1)

self.keyframes.append(kf1)

# 創(chuàng)建第二關鍵幀

pose2 = np.eye(4)

pose2[:3, :3] = R

pose2[:3, 3] = t.flatten()

kf2 = KeyFrame(1, image2, kps2, descs2, pose2)

self.keyframes.append(kf2)

# 三角化生成初始地圖點

inlier_matches = [matches[i] for i in inlier_indices]

initial_map_points = self.triangulate_points(pose1, pose2, kps1, kps2, descs1, inlier_matches, 0, 1)

if len(initial_map_points) < 10:

print("三角化點不足,初始化失敗")

self.keyframes = []

return False

# 建立關鍵幀與地圖點的關聯(lián)

for mp_idx, mp in enumerate(initial_map_points):

global_mp_idx = len(self.map_points) + mp_idx

# 關聯(lián)到第一關鍵幀

for obs_kf_id, kp_idx in mp.observations:

if obs_kf_id == 0:

kf1.map_points.append(global_mp_idx)

kf1.map_point_indices[kp_idx] = global_mp_idx

break

# 關聯(lián)到第二關鍵幀

for obs_kf_id, kp_idx in mp.observations:

if obs_kf_id == 1:

kf2.map_points.append(global_mp_idx)

kf2.map_point_indices[kp_idx] = global_mp_idx

break

self.map_points.extend(initial_map_points)

self.world_pose = pose2

print(f"地圖初始化成功: {len(initial_map_points)} 個地圖點")

return True

def triangulate_points(self, pose1: np.ndarray, pose2: np.ndarray,

kp1: List[cv2.KeyPoint], kp2: List[cv2.KeyPoint],

descriptors1: np.ndarray, matches: List[cv2.DMatch],

kf1_id: int, kf2_id: int) -> List[MapPoint]:

"""三角化生成3D點"""

if len(matches) < 8:

return []

# 準備投影矩陣

P1 = self.camera.K @ np.hstack((np.eye(3), np.zeros((3, 1))))

P2 = self.camera.K @ pose2[:3, :]

# 準備匹配點

pts1 = np.float32([kp1[m.queryIdx].pt for m in matches])

pts2 = np.float32([kp2[m.trainIdx].pt for m in matches])

# 三角化

points_4d = cv2.triangulatePoints(P1, P2, pts1.T, pts2.T)

points_3d = points_4d[:3] / points_4d[3]

map_points = []

for i, point in enumerate(points_3d.T):

if point[2] > 0 and not np.any(np.isinf(point)) and not np.any(np.isnan(point)):

# 使用關鍵點的描述子(從描述子數(shù)組中獲取)

if len(descriptors1) > 0 and i < len(descriptors1):

descriptor = descriptors1[matches[i].queryIdx]

else:

descriptor = None

map_point = MapPoint(point.copy(), descriptor, kf2_id)

# 添加觀測關系

map_point.add_observation(kf1_id, matches[i].queryIdx)

map_point.add_observation(kf2_id, matches[i].trainIdx)

map_points.append(map_point)

return map_points

def track_with_map_points(self, image: np.ndarray, kps: List[cv2.KeyPoint], desc: np.ndarray) -> bool:

"""使用地圖點進行跟蹤(第三幀及以后)"""

if len(self.keyframes) < 2 or len(self.map_points) == 0:

return False

print("=== 使用地圖點進行跟蹤 ===")

# 1. 使用描述子匹配當前幀特征點與地圖點

map_matches = self.match_with_map_points(desc)

print(f"地圖點匹配結果: {len(map_matches)} 個匹配")

if len(map_matches) < self.min_matches:

print("地圖點匹配不足,無法進行PnP跟蹤")

return False

# 2. 準備3D-2D對應點進行PnP求解

object_points = []

image_points = []

matched_map_indices = []

for kp_idx, map_idx in map_matches:

if map_idx < len(self.map_points):

object_points.append(self.map_points[map_idx].point_3d)

image_points.append(kps[kp_idx].pt)

matched_map_indices.append(map_idx)

if len(object_points) < 6:

print(f"PnP有效點不足: {len(object_points)}")

return False

object_points = np.float32(object_points)

image_points = np.float32(image_points)

# 3. 使用PnP求解位姿

try:

success, rvec, tvec, inliers = cv2.solvePnPRansac(

object_points, image_points, self.camera.K, self.camera.dist_coeffs,

reprojectionError=self.pnp_reprojection_threshold, iterationsCount=100,

flags=cv2.SOLVEPNP_ITERATIVE

)

except Exception as e:

print(f"PnP求解失敗: {e}")

return False

if not success or inliers is None or len(inliers) < 6:

print("PnP求解失敗")

return False

print(f"PnP成功: 內點 {len(inliers)}/{len(object_points)}")

# 4. 轉換旋轉向量為旋轉矩陣

R, _ = cv2.Rodrigues(rvec)

# 5. 更新世界位姿

new_pose = np.eye(4)

new_pose[:3, :3] = R

new_pose[:3, 3] = tvec.flatten()

self.world_pose = new_pose

# 6. 判斷是否為關鍵幀

last_kf = self.keyframes[-1]

if self.is_keyframe(last_kf.pose, new_pose):

# 創(chuàng)建新關鍵幀

kf = KeyFrame(self.current_frame_id, image, kps, desc, new_pose.copy())

# 7. 與前一關鍵幀三角化新點

kf_matches = self.match_features(last_kf.descriptors, desc)

if len(kf_matches) >= 8:

new_map_points = self.triangulate_points(

last_kf.pose, kf.pose, last_kf.keypoints, kps, last_kf.descriptors, kf_matches,

last_kf.frame_id, kf.frame_id

)

# 建立關聯(lián)

for mp_idx, mp in enumerate(new_map_points):

global_mp_idx = len(self.map_points) + mp_idx

# 關聯(lián)到前一關鍵幀

for obs_kf_id, kp_idx in mp.observations:

if obs_kf_id == last_kf.frame_id:

last_kf.map_points.append(global_mp_idx)

last_kf.map_point_indices[kp_idx] = global_mp_idx

break

# 關聯(lián)到當前關鍵幀

for obs_kf_id, kp_idx in mp.observations:

if obs_kf_id == kf.frame_id:

kf.map_points.append(global_mp_idx)

kf.map_point_indices[kp_idx] = global_mp_idx

break

self.map_points.extend(new_map_points)

print(f"新增 {len(new_map_points)} 個地圖點")

self.keyframes.append(kf)

print(f"創(chuàng)建關鍵幀 {len(self.keyframes)},總地圖點: {len(self.map_points)}")

return True

return True

def is_keyframe(self, prev_pose: np.ndarray, curr_pose: np.ndarray) -> bool:

"""判斷是否為關鍵幀"""

rel_pose = np.linalg.inv(prev_pose) @ curr_pose

R = rel_pose[:3, :3]

t = rel_pose[:3, 3]

translation_norm = np.linalg.norm(t)

rotation_angle = np.arccos(np.clip((np.trace(R) - 1) / 2, -1, 1))

is_kf = translation_norm > self.keyframe_threshold or rotation_angle > 0.1

if is_kf:

print(f"選擇關鍵幀: 平移={translation_norm:.3f}, 旋轉={rotation_angle:.3f}")

return is_kf

def update_visualization(self):

"""更新可視化"""

if self.vis is None:

self.init_visualization()

if self.vis is not None:

self.vis.clear_geometries()

# 添加坐標系

coordinate_frame = o3d.geometry.TriangleMesh.create_coordinate_frame(size=1.0)

if self.vis is not None:

self.vis.add_geometry(coordinate_frame)

if len(self.keyframes) == 0 or len(self.map_points) == 0:

if self.vis is not None:

self.vis.poll_events()

self.vis.update_renderer()

return

try:

# 準備點云數(shù)據

points = [mp.point_3d for mp in self.map_points]

if len(points) > 0:

points_arr = np.array(points, dtype=np.float64)

if len(points_arr.shape) == 2 and points_arr.shape[1] == 3:

point_cloud = o3d.geometry.PointCloud()

point_cloud.points = o3d.utility.Vector3dVector(points_arr)

# 根據觀測次數(shù)設置顏色

colors = []

for mp in self.map_points:

if mp.track_count == 1:

colors.append([0.0, 0.0, 1.0]) # 藍色

elif mp.track_count == 2:

colors.append([0.0, 1.0, 1.0]) # 青色

else:

colors.append([1.0, 0.0, 0.0]) # 紅色

point_cloud.colors = o3d.utility.Vector3dVector(np.array(colors))

if self.vis is not None:

self.vis.add_geometry(point_cloud)

# 更新相機軌跡

if len(self.keyframes) > 1:

trajectory_points = [kf.pose[:3, 3] for kf in self.keyframes]

lines = [[i, i+1] for i in range(len(trajectory_points)-1)]

camera_trajectory = o3d.geometry.LineSet()

camera_trajectory.points = o3d.utility.Vector3dVector(np.array(trajectory_points, dtype=np.float64))

camera_trajectory.lines = o3d.utility.Vector2iVector(lines)

camera_trajectory.paint_uniform_color([0.0, 1.0, 0.0])

if self.vis is not None:

self.vis.add_geometry(camera_trajectory)

# 添加關鍵幀坐標系

for i, kf in enumerate(self.keyframes):

if i % 5 == 0 or i == len(self.keyframes) - 1:

kf_coordinate = o3d.geometry.TriangleMesh.create_coordinate_frame(size=0.3)

kf_coordinate.transform(kf.pose)

if self.vis is not None:

self.vis.add_geometry(kf_coordinate)

if self.vis is not None:

self.vis.poll_events()

self.vis.update_renderer()

if len(points) > 0:

points_array = np.array(points)

print(f"點云范圍: X[{points_array[:,0].min():.2f}, {points_array[:,0].max():.2f}] "

f"Y[{points_array[:,1].min():.2f}, {points_array[:,1].max():.2f}] "

f"Z[{points_array[:,2].min():.2f}, {points_array[:,2].max():.2f}]")

except Exception as e:

print(f"可視化更新失敗: {e}")

import traceback

traceback.print_exc()

def run_slam(self, max_frames: int = 50):

"""運行SLAM系統(tǒng)"""

image_paths = self.load_images()

if len(image_paths) == 0:

return

image_paths = image_paths[:max_frames]

if len(image_paths) < 2:

print("至少需要2張圖像進行初始化")

return

print("\n=== 開始SLAM處理 ===")

# 初始化階段:處理前兩幀

image1 = cv2.imread(image_paths[0])

image2 = cv2.imread(image_paths[1])

if image1 is None or image2 is None:

print("無法讀取前兩幀圖像")

return

# 地圖初始化

if not self.initialize_map(image1, image2):

print("地圖初始化失敗")

return

self.current_frame_id = 2

print("地圖初始化成功,開始跟蹤后續(xù)幀...")

# 跟蹤階段:處理后續(xù)幀

for i in range(2, len(image_paths)):

image_path = image_paths[i]

print(f"\n--- 處理幀 {i+1}/{len(image_paths)}: {os.path.basename(image_path)} ---")

image = cv2.imread(image_path)

if image is None:

continue

kps, desc = self.extract_features(image)

# 從第三幀開始使用地圖點進行跟蹤

success = self.track_with_map_points(image, kps, desc)

if success:

# 更新可視化

if len(self.keyframes) % 2 == 0:

self.update_visualization()

self.current_frame_id += 1

# 顯示當前幀

display_image = cv2.drawKeypoints(image, kps, None, color=(0, 255, 0))

display_image = cv2.resize(display_image, (800, 600))

cv2.imshow('Current Frame', display_image)

if cv2.waitKey(0) & 0xFF == ord('q'):

break

# 最終可視化

self.update_visualization()

print(f"\n=== SLAM處理完成 ===")

print(f"生成關鍵幀數(shù)量: {len(self.keyframes)}")

print(f"生成地圖點數(shù)量: {len(self.map_points)}")

# 保持窗口打開

if self.vis is not None:

print("按 'q' 關閉窗口...")

while True:

self.vis.poll_events()

self.vis.update_renderer()

if cv2.waitKey(100) & 0xFF == ord('q'):

break

cv2.destroyAllWindows()

if self.vis is not None:

try:

self.vis.destroy_window()

except:

pass

# 使用示例

if __name__ == "__main__":

# 設置數(shù)據路徑

data_path = "/home/dongdong/2project/0data/RTK/data_1_nwpuUp/data3_1130_13pm/300_location_14pm"

config_path = os.path.join(data_path, "slam_config", "GNSS_config.yaml")

image_folder = os.path.join(data_path, "images")

try:

slam = MonoSLAM(config_path, image_folder)

slam.run_slam(max_frames=50)

except Exception as e:

print(f"錯誤: {e}")

import traceback

traceback.print_exc()

浙公網安備 33010602011771號

浙公網安備 33010602011771號