Basic environment configuration

Node planning

| Hostname | IP address | Description |
|---|---|---|
| k8s-master01 ~ 03 | 10.0.0.201 ~ 203 | 3 master nodes |
| k8s-master-lb | 10.0.0.236 | keepalived virtual IP (VIP) |
| k8s-node01 ~ 02 | 10.0.0.204 ~ 205 | 2 worker nodes |

Network ranges and software versions

| Item | Value |
|---|---|
| OS version | CentOS 7.9 |
| Docker version | 20.10.x |
| Pod CIDR | 172.16.0.0/12 |
| Service CIDR | 192.168.0.0/16 |
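The host network, the Pod CIDR, and the Service CIDR should not overlap, otherwise routes to Pods or Services can shadow real hosts. The following is a minimal sketch of an overlap check in plain bash. The helper names (`ip_to_int`, `cidr_range`, `cidrs_overlap`) are made up for illustration, and `HOST_NET=10.0.0.0/24` is an assumption inferred from the node IPs in the plan.

```shell
#!/usr/bin/env bash
# Convert a dotted-quad IPv4 address to a 32-bit integer.
ip_to_int() {
  local a b c d
  IFS=. read -r a b c d <<< "$1"
  echo $(( (a << 24) | (b << 16) | (c << 8) | d ))
}

# Print "first last" (as integers) for a CIDR such as 172.16.0.0/12.
cidr_range() {
  local ip=${1%/*} prefix=${1#*/}
  local mask=$(( (0xFFFFFFFF << (32 - prefix)) & 0xFFFFFFFF ))
  local first=$(( $(ip_to_int "$ip") & mask ))
  echo "$first $(( first | (~mask & 0xFFFFFFFF) ))"
}

# Return 0 (true) if the two CIDRs share at least one address.
cidrs_overlap() {
  local a_first a_last b_first b_last
  read -r a_first a_last <<< "$(cidr_range "$1")"
  read -r b_first b_last <<< "$(cidr_range "$2")"
  (( a_first <= b_last && b_first <= a_last ))
}

HOST_NET=10.0.0.0/24   # assumption: the node IPs sit in a /24
POD_NET=172.16.0.0/12
SVC_NET=192.168.0.0/16

for pair in "$HOST_NET $POD_NET" "$HOST_NET $SVC_NET" "$POD_NET $SVC_NET"; do
  set -- $pair   # split the pair into $1 and $2
  if cidrs_overlap "$1" "$2"; then
    echo "OVERLAP: $1 and $2"
  else
    echo "ok: $1 and $2 do not overlap"
  fi
done
```

If you change any of the three ranges for your own environment, rerun the check before initializing the cluster, since the CIDRs are hard to change afterwards.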

Basic configuration

On all nodes, add the following entries to /etc/hosts:

10.0.0.201 k8s-master01

10.0.0.202 k8s-master02

10.0.0.203 k8s-master03

10.0.0.236 k8s-master-lb # for a non-HA cluster, use Master01's IP here

10.0.0.204 k8s-node01

10.0.0.205 k8s-node02
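When the same entries have to go onto five machines, appending blindly tends to leave duplicates after a second run. A small sketch of an idempotent append follows. `add_host_entry` and the `HOSTS_FILE` variable are hypothetical names, and the script writes to a temporary file by default so it can be tried without touching /etc/hosts.

```shell
#!/usr/bin/env bash
# add_host_entry FILE IP NAME
# Append "IP NAME" to FILE unless NAME already appears as a hostname in it.
add_host_entry() {
  local file=$1 ip=$2 name=$3
  if grep -qE "[[:space:]]${name}([[:space:]]|\$)" "$file" 2>/dev/null; then
    return 0
  fi
  printf '%s %s\n' "$ip" "$name" >> "$file"
}

# Assumption: HOSTS_FILE is our own variable; set HOSTS_FILE=/etc/hosts (as root) for real use.
HOSTS_FILE=${HOSTS_FILE:-$(mktemp)}

while read -r ip name; do
  add_host_entry "$HOSTS_FILE" "$ip" "$name"
done <<'EOF'
10.0.0.201 k8s-master01
10.0.0.202 k8s-master02
10.0.0.203 k8s-master03
10.0.0.236 k8s-master-lb
10.0.0.204 k8s-node01
10.0.0.205 k8s-node02
EOF

cat "$HOSTS_FILE"
```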

Configure the yum repositories:

curl -o /etc/yum.repos.d/CentOS-Base.repo https://mirrors.aliyun.com/repo/Centos-7.repo

yum install -y yum-utils device-mapper-persistent-data lvm2

yum-config-manager --add-repo https://mirrors.aliyun.com/docker-ce/linux/centos/docker-ce.repo

cat <<EOF > /etc/yum.repos.d/kubernetes.repo

[kubernetes]

name=Kubernetes

baseurl=https://mirrors.aliyun.com/kubernetes/yum/repos/kubernetes-el7-x86_64/

enabled=1

gpgcheck=1

repo_gpgcheck=1

gpgkey=https://mirrors.aliyun.com/kubernetes/yum/doc/yum-key.gpg https://mirrors.aliyun.com/kubernetes/yum/doc/rpm-package-key.gpg

EOF

sed -i -e '/mirrors.cloud.aliyuncs.com/d' -e '/mirrors.aliyuncs.com/d' /etc/yum.repos.d/CentOS-Base.repo

Install the required tools:

yum install wget jq psmisc vim net-tools telnet yum-utils device-mapper-persistent-data lvm2 git -y

On all nodes, disable the firewall, dnsmasq, NetworkManager, SELinux, and swap:

systemctl disable --now firewalld

systemctl disable --now dnsmasq

systemctl disable --now NetworkManager

setenforce 0

sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/sysconfig/selinux

sed -i 's#SELINUX=enforcing#SELINUX=disabled#g' /etc/selinux/config

swapoff -a && sysctl -w vm.swappiness=0

sed -ri '/^[^#]*swap/s@^@#@' /etc/fstab
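The fstab edit is worth trying on a throwaway copy first, because a wrong pattern in /etc/fstab can leave a node unbootable. The sketch below applies the same sed expression to a made-up sample file (the device names are illustrative, not taken from a real system) and prints the result instead of editing in place. `comment_out_swap` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# Build a sample fstab: one root entry, one active swap entry, one already-commented swap entry.
sample_fstab=$(mktemp)
cat > "$sample_fstab" <<'EOF'
/dev/mapper/centos-root /     xfs   defaults 0 0
/dev/mapper/centos-swap swap  swap  defaults 0 0
#/dev/sdb1              swap  swap  defaults 0 0
EOF

# Same expression as the real command, without -i, so the result goes to stdout.
# It prefixes "#" only to lines that mention swap and are not commented out yet.
comment_out_swap() {
  sed -r '/^[^#]*swap/s@^@#@' "$1"
}

comment_out_swap "$sample_fstab"

# After the real edit and "swapoff -a", /proc/swaps should show only its header line:
#   cat /proc/swaps
```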

Time synchronization:

rpm -ivh http://mirrors.wlnmp.com/centos/wlnmp-release-centos.noarch.rpm

yum install ntpdate -y

ln -sf /usr/share/zoneinfo/Asia/Shanghai /etc/localtime

echo 'Asia/Shanghai' >/etc/timezone

ntpdate time2.aliyun.com

# Add the following entry to root's crontab (crontab -e)

*/5 * * * * /usr/sbin/ntpdate time2.aliyun.com
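Editing the crontab by hand on every node is easy to get wrong, so here is a hedged sketch of a scripted alternative. `merge_cron_line` is a hypothetical helper that reads an existing crontab on stdin and prints it with the job present exactly once. The dry run uses a made-up existing crontab, and the real invocation is shown as a comment.

```shell
#!/usr/bin/env bash
NTP_JOB='*/5 * * * * /usr/sbin/ntpdate time2.aliyun.com'

# merge_cron_line LINE
# Read an existing crontab on stdin and print it with LINE present exactly once.
merge_cron_line() {
  local line=$1
  grep -vxF -- "$line" || true   # drop existing copies; grep exits 1 when nothing is left
  printf '%s\n' "$line"
}

# Dry run against a made-up existing crontab:
printf '%s\n' 'MAILTO=root' "$NTP_JOB" | merge_cron_line "$NTP_JOB"

# Real use, as root on every node:
#   crontab -l 2>/dev/null | merge_cron_line "$NTP_JOB" | crontab -
```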

Configure resource limits on all nodes:

ulimit -SHn 65535

vim /etc/security/limits.conf

# Append the following lines at the end of the file

* soft nofile 65536

* hard nofile 131072

* soft nproc 65535

* hard nproc 655350

* soft memlock unlimited

* hard memlock unlimited

Set up passwordless (key-based) SSH from Master01 to all nodes:

ssh-keygen -t rsa

for i in k8s-master01 k8s-master02 k8s-master03 k8s-node01 k8s-node02; do ssh-copy-id -i ~/.ssh/id_rsa.pub "$i"; done

Download the installation source files:

cd /root/ ; git clone https://gitee.com/dukuan/k8s-ha-install.git

Update the system on all nodes and reboot:

yum update -y --exclude=kernel* && reboot

Kernel upgrade

Download the kernel packages:

cd /root

wget http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-devel-4.19.12-1.el7.elrepo.x86_64.rpm

wget http://193.49.22.109/elrepo/kernel/el7/x86_64/RPMS/kernel-ml-4.19.12-1.el7.elrepo.x86_64.rpm

Copy them from Master01 to the other nodes:

for i in k8s-master02 k8s-master03 k8s-node01 k8s-node02;do scp kernel-ml-4.19.12-1.el7.elrepo.x86_64.rpm kernel-ml-devel-4.19.12-1.el7.elrepo.x86_64.rpm $i:/root/ ; done

Install the kernel on all nodes:

cd /root && yum localinstall -y kernel-ml*

# On all nodes, change the default boot kernel

grub2-set-default 0 && grub2-mkconfig -o /etc/grub2.cfg

grubby --args="user_namespace.enable=1" --update-kernel="$(grubby --default-kernel)"

# Check that the default kernel is 4.19

[root@k8s-master02 ~]# grubby --default-kernel

/boot/vmlinuz-4.19.12-1.el7.elrepo.x86_64

# Reboot all nodes, then check that the running kernel is 4.19

[root@k8s-master02 ~]# uname -a

Linux k8s-master02 4.19.12-1.el7.elrepo.x86_64 #1 SMP Fri Dec 21 11:06:36 EST 2018 x86_64 x86_64 x86_64 GNU/Linux

Install ipvsadm on all nodes:

yum install ipvsadm ipset sysstat conntrack libseccomp -y

Configure the IPVS modules on all nodes:

modprobe -- ip_vs

modprobe -- ip_vs_rr

modprobe -- ip_vs_wrr

modprobe -- ip_vs_sh

modprobe -- nf_conntrack

vim /etc/modules-load.d/ipvs.conf

# Add the following content

ip_vs

ip_vs_lc

ip_vs_wlc

ip_vs_rr

ip_vs_wrr

ip_vs_lblc

ip_vs_lblcr

ip_vs_dh

ip_vs_sh

ip_vs_fo

ip_vs_nq

ip_vs_sed

ip_vs_ftp

nf_conntrack

ip_tables

ip_set

xt_set

ipt_set

ipt_rpfilter

ipt_REJECT

ipip

# Load the modules now and at every boot

systemctl enable --now systemd-modules-load.service
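A quick way to see whether every listed module actually loaded is to compare the list against /proc/modules. The sketch below does that with a hypothetical `missing_modules` helper. It is only a rough check under stated assumptions: a name that is merely an alias of another module, or code that is built into the kernel, is reported as missing here even though it works.

```shell
#!/usr/bin/env bash
# missing_modules WANTED_FILE [LOADED_FILE]
# Print every module named in WANTED_FILE that has no entry in LOADED_FILE
# (default /proc/modules, where each line starts with "<name> ").
missing_modules() {
  local wanted=$1 loaded=${2:-/proc/modules} mod
  while read -r mod; do
    case $mod in ''|'#'*) continue ;; esac   # skip blank lines and comments
    grep -q "^${mod} " "$loaded" 2>/dev/null || echo "$mod"
  done < "$wanted"
}

conf=/etc/modules-load.d/ipvs.conf
if [ -r "$conf" ]; then
  missing_modules "$conf"
else
  echo "$conf not found; nothing to check" >&2
fi
```

An empty result means every name in the file has a matching entry. Anything printed is worth a manual `modprobe` to see the actual error.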

Configure the kernel parameters for Kubernetes on all nodes:

cat <<EOF > /etc/sysctl.d/k8s.conf

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-iptables = 1

net.bridge.bridge-nf-call-ip6tables = 1

fs.may_detach_mounts = 1

net.ipv4.conf.all.route_localnet = 1

vm.overcommit_memory=1

vm.panic_on_oom=0

fs.inotify.max_user_watches=89100

fs.file-max=52706963

fs.nr_open=52706963

net.netfilter.nf_conntrack_max=2310720

net.ipv4.tcp_keepalive_time = 600

net.ipv4.tcp_keepalive_probes = 3

net.ipv4.tcp_keepalive_intvl =15

net.ipv4.tcp_max_tw_buckets = 36000

net.ipv4.tcp_tw_reuse = 1

net.ipv4.tcp_max_orphans = 327680

net.ipv4.tcp_orphan_retries = 3

net.ipv4.tcp_syncookies = 1

net.ipv4.tcp_max_syn_backlog = 16384

net.ipv4.tcp_timestamps = 0

net.core.somaxconn = 16384

EOF

sysctl --system
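Long sysctl files tend to collect repeated keys and keys that the running kernel does not know, and `sysctl --system` only reports the latter as easily missed warnings. The sketch below lists both kinds. The function names are hypothetical, and the optional second argument of `unknown_keys` exists only so the check can be tried against a fake directory tree. Note that `net.bridge.*` and `net.netfilter.*` keys appear under /proc/sys only once `br_netfilter` and `nf_conntrack` are loaded.

```shell
#!/usr/bin/env bash
# sysctl_keys FILE: print the key of every assignment in FILE, skipping comments and blanks.
sysctl_keys() {
  sed -e 's/[#;].*//' -e '/^[[:space:]]*$/d' "$1" \
    | awk -F= '{ gsub(/[ \t]/, "", $1); print $1 }'
}

# duplicate_keys FILE: print keys that are assigned more than once.
duplicate_keys() {
  sysctl_keys "$1" | sort | uniq -d
}

# unknown_keys FILE [ROOT]: print keys with no matching entry under ROOT (default /proc/sys).
unknown_keys() {
  local root=${2:-/proc/sys} key
  sysctl_keys "$1" | sort -u | while read -r key; do
    # a.b.c maps to ROOT/a/b/c
    [ -e "$root/${key//.//}" ] || echo "$key"
  done
}

conf=/etc/sysctl.d/k8s.conf
if [ -r "$conf" ]; then
  echo "duplicates:"; duplicate_keys "$conf"
  echo "unknown to this kernel:"; unknown_keys "$conf"
fi
```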

After rebooting the servers, check that the modules are still loaded:

reboot

lsmod | grep --color=auto -e ip_vs -e nf_conntrack

Installing the Kubernetes components and the runtime

Installing Containerd

Install docker-ce-20.10 on all nodes (the containerd.io package is installed as a dependency):

yum install docker-ce-20.10.* docker-ce-cli-20.10.* -y

Configure the kernel modules Containerd needs (all nodes):

# cat <<EOF | sudo tee /etc/modules-load.d/containerd.conf

overlay

br_netfilter

EOF

Load the modules on all nodes:

# modprobe -- overlay

# modprobe -- br_netfilter

On all nodes, configure the kernel parameters Containerd needs:

cat <<EOF | sudo tee /etc/sysctl.d/99-kubernetes-cri.conf

net.bridge.bridge-nf-call-iptables = 1

net.ipv4.ip_forward = 1

net.bridge.bridge-nf-call-ip6tables = 1

EOF

Apply the kernel parameters on all nodes:

# sysctl --system

Generate the Containerd configuration file on all nodes:

# mkdir -p /etc/containerd

# containerd config default | tee /etc/containerd/config.toml

On all nodes, switch Containerd's cgroup driver to systemd:

# vim /etc/containerd/config.toml

Find containerd.runtimes.runc.options and add SystemdCgroup = true (if the line already exists with the value false, change it to true).

On all nodes, change sandbox_image to a pause image address that matches your version, for example registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6.
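Both config.toml changes can be scripted instead of made in vim. The following is a sketch under assumptions, not the author's method: `patch_containerd_config` is a hypothetical helper, it only flips a `SystemdCgroup = false` line that already exists (some containerd versions generate no such line, in which case it still has to be added by hand), and the sample file is a trimmed, made-up excerpt rather than real `containerd config default` output.

```shell
#!/usr/bin/env bash
# patch_containerd_config FILE IMAGE
# Flip an existing "SystemdCgroup = false" to true and point sandbox_image at IMAGE.
patch_containerd_config() {
  local file=$1 image=$2
  sed -i \
    -e 's#^\([[:space:]]*SystemdCgroup = \)false#\1true#' \
    -e "s#^\([[:space:]]*sandbox_image = \).*#\1\"${image}\"#" \
    "$file"
}

# Dry run on a made-up excerpt of a generated config.toml:
sample=$(mktemp)
cat > "$sample" <<'EOF'
[plugins."io.containerd.grpc.v1.cri"]
  sandbox_image = "k8s.gcr.io/pause:3.5"
  [plugins."io.containerd.grpc.v1.cri".containerd.runtimes.runc.options]
    SystemdCgroup = false
EOF
patch_containerd_config "$sample" registry.cn-hangzhou.aliyuncs.com/google_containers/pause:3.6
cat "$sample"

# Real use (as root), followed by a check that both settings took effect:
#   patch_containerd_config /etc/containerd/config.toml <image>
#   grep -E 'SystemdCgroup|sandbox_image' /etc/containerd/config.toml
```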

Start Containerd on all nodes and enable it at boot:

# systemctl daemon-reload

# systemctl enable --now containerd

On all nodes, point the crictl client at the runtime socket:

# cat > /etc/crictl.yaml <<EOF

runtime-endpoint: unix:///run/containerd/containerd.sock

image-endpoint: unix:///run/containerd/containerd.sock

timeout: 10

debug: false

EOF

Installing the Kubernetes components

Install the latest 1.23 release of kubeadm, kubelet, and kubectl on all nodes:

# yum install kubeadm-1.23* kubelet-1.23* kubectl-1.23* -y

Configure the kubelet to use Containerd as its runtime:

# cat >/etc/sysconfig/kubelet<<EOF

KUBELET_KUBEADM_ARGS="--container-runtime=remote --runtime-request-timeout=15m --container-runtime-endpoint=unix:///run/containerd/containerd.sock"

EOF

Enable the kubelet at boot (it keeps restarting until the node has been initialized or joined, which is expected):

# systemctl daemon-reload

# systemctl enable --now kubelet

High availability

Install HAProxy and Keepalived with yum on all master nodes:

yum install keepalived haproxy -y

Configure HAProxy on all master nodes:

[root@k8s-master01 etc]# mkdir -p /etc/haproxy

[root@k8s-master01 etc]# vim /etc/haproxy/haproxy.cfg

global

maxconn 2000

ulimit-n 16384

log 127.0.0.1 local0 err

stats timeout 30s

defaults

log global

mode http

option httplog

timeout connect 5000

timeout client 50000

timeout server 50000

timeout http-request 15s

timeout http-keep-alive 15s

frontend monitor-in

bind *:33305

mode http

option httplog

monitor-uri /monitor

frontend k8s-master

bind 0.0.0.0:16443

bind 127.0.0.1:16443

mode tcp

option tcplog

tcp-request inspect-delay 5s

default_backend k8s-master

backend k8s-master

mode tcp

option tcplog

option tcp-check

balance roundrobin

default-server inter 10s downinter 5s rise 2 fall 2 slowstart 60s maxconn 250 maxqueue 256 weight 100

server k8s-master01 10.0.0.201:6443 check

server k8s-master02 10.0.0.202:6443 check

server k8s-master03 10.0.0.203:6443 check

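Configure Keepalived on all master nodes. The three configurations below differ only in state, mcast_src_ip, and priority; set interface to the actual NIC name on each node.

Configuration for Master01:

[root@k8s-master01 etc]# mkdir -p /etc/keepalived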

[root@k8s-master01 ~]# vim /etc/keepalived/keepalived.conf

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

script_user root

enable_script_security

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state MASTER

interface ens33

mcast_src_ip 10.0.0.201

virtual_router_id 51

priority 101

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

10.0.0.236

}

track_script {

chk_apiserver

}

}

Configuration for Master02:

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

script_user root

enable_script_security

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

mcast_src_ip 10.0.0.202

virtual_router_id 51

priority 100

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

10.0.0.236

}

track_script {

chk_apiserver

}

}

Configuration for Master03:

! Configuration File for keepalived

global_defs {

router_id LVS_DEVEL

script_user root

enable_script_security

}

vrrp_script chk_apiserver {

script "/etc/keepalived/check_apiserver.sh"

interval 5

weight -5

fall 2

rise 1

}

vrrp_instance VI_1 {

state BACKUP

interface ens33

mcast_src_ip 10.0.0.203

virtual_router_id 51

priority 100

advert_int 2

authentication {

auth_type PASS

auth_pass K8SHA_KA_AUTH

}

virtual_ipaddress {

10.0.0.236

}

track_script {

chk_apiserver

}

}

On all master nodes, create the Keepalived health check script:

[root@k8s-master01 keepalived]# cat /etc/keepalived/check_apiserver.sh

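#!/bin/bash

# Look for a running haproxy process, retrying up to three times.
err=0
for k in $(seq 1 3)
do
    check_code=$(pgrep haproxy)
    if [[ $check_code == "" ]]; then
        err=$((err + 1))
        sleep 1
        continue
    else
        err=0
        break
    fi
done

# If haproxy is still down, stop keepalived so the VIP moves to another master.
if [[ $err != "0" ]]; then
    echo "systemctl stop keepalived"
    /usr/bin/systemctl stop keepalived
    exit 1
else
    exit 0
fi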

Make the script executable, otherwise Keepalived cannot run it:

chmod +x /etc/keepalived/check_apiserver.sh

Start HAProxy and Keepalived:

[root@k8s-master01 keepalived]# systemctl daemon-reload

[root@k8s-master01 keepalived]# systemctl enable --now haproxy

[root@k8s-master01 keepalived]# systemctl enable --now keepalived
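Before initializing the cluster it is worth confirming that the VIP answers on the HAProxy port, because kubeadm uses 10.0.0.236:16443 as the control-plane endpoint. The sketch below uses bash's built-in /dev/tcp redirection, so neither telnet nor nc is required. `port_open` is a hypothetical helper name, and the three-second default timeout is an arbitrary choice.

```shell
#!/usr/bin/env bash
# port_open HOST PORT [TIMEOUT_SECONDS]
# Succeeds if a TCP connection to HOST:PORT can be opened within the timeout.
port_open() {
  local host=$1 port=$2 wait=${3:-3}
  timeout "$wait" bash -c "exec 3<>/dev/tcp/${host}/${port}" 2>/dev/null
}

# Values from the node plan; run from any node once both services are up:
#   port_open 10.0.0.236 16443 && echo "VIP answers on 16443" || echo "VIP unreachable"
#
# On the master that currently holds the VIP, the address shows up on the interface:
#   ip -4 addr show | grep -F 10.0.0.236
```

If the VIP is unreachable, check the interface name and mcast_src_ip in keepalived.conf and make sure the firewall and SELinux are really disabled before going further.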

Cluster initialization

Initializing Master01

vim kubeadm-config.yaml

apiVersion: kubeadm.k8s.io/v1beta2
bootstrapTokens:
- groups:
  - system:bootstrappers:kubeadm:default-node-token
  token: 7t2weq.bjbawausm0jaxury
  ttl: 24h0m0s
  usages:
  - signing
  - authentication
kind: InitConfiguration
localAPIEndpoint:
  advertiseAddress: 10.0.0.201
  bindPort: 6443
nodeRegistration:
  criSocket: /run/containerd/containerd.sock
  name: k8s-master01
  taints:
  - effect: NoSchedule
    key: node-role.kubernetes.io/master
---
apiServer:
  certSANs:
  - 10.0.0.236
  timeoutForControlPlane: 4m0s
apiVersion: kubeadm.k8s.io/v1beta2
certificatesDir: /etc/kubernetes/pki
clusterName: kubernetes
controlPlaneEndpoint: 10.0.0.236:16443
controllerManager: {}
dns:
  type: CoreDNS
etcd:
  local:
    dataDir: /var/lib/etcd
imageRepository: registry.cn-hangzhou.aliyuncs.com/google_containers
kind: ClusterConfiguration
kubernetesVersion: v1.23.1 # change this to match the output of kubeadm version
networking:
  dnsDomain: cluster.local
  podSubnet: 172.16.0.0/12
  serviceSubnet: 192.168.0.0/16
scheduler: {}

Migrate the kubeadm configuration file to the current format:

kubeadm config migrate --old-config kubeadm-config.yaml --new-config new.yaml

Copy new.yaml to the other master nodes:

for i in k8s-master02 k8s-master03; do scp new.yaml $i:/root/; done

Pre-pull the images on all master nodes:

kubeadm config images pull --config /root/new.yaml

Initialize Master01:

kubeadm init --config /root/new.yaml --upload-certs

When initialization succeeds, the output ends as follows:

kubeadm join 10.0.0.236:16443 --token 7t2weq.bjbawausm0jaxury \

--discovery-token-ca-cert-hash sha256:df72788de04bbc2e8fca70becb8a9e8503a962b5d7cd9b1842a0c39930d08c94 \

--control-plane --certificate-key c595f7f4a7a3beb0d5bdb75d9e4eff0a60b977447e76c1d6885e82c3aa43c94c

Please note that the certificate-key gives access to cluster sensitive data, keep it secret!

As a safeguard, uploaded-certs will be deleted in two hours; If necessary, you can use

"kubeadm init phase upload-certs --upload-certs" to reload certs afterward.

Then you can join any number of worker nodes by running the following on each as root:

kubeadm join 10.0.0.236:16443 --token 7t2weq.bjbawausm0jaxury \

--discovery-token-ca-cert-hash sha256:df72788de04bbc2e8fca70becb8a9e8503a962b5d7cd9b1842a0c39930d08c94

On Master01, set the environment variable used to access the Kubernetes cluster:

cat <<EOF >> /root/.bashrc

export KUBECONFIG=/etc/kubernetes/admin.conf

EOF

source /root/.bashrc

Adding master nodes

On the other master nodes, run the control-plane join command generated by the initialization above:

kubeadm join 10.0.0.236:16443 --token 7t2weq.bjbawausm0jaxury \

--discovery-token-ca-cert-hash sha256:df72788de04bbc2e8fca70becb8a9e8503a962b5d7cd9b1842a0c39930d08c94 \

--control-plane --certificate-key c595f7f4a7a3beb0d5bdb75d9e4eff0a60b977447e76c1d6885e82c3aa43c94c

Adding worker nodes

kubeadm join 10.0.0.236:16443 --token 7t2weq.bjbawausm0jaxury \

--discovery-token-ca-cert-hash sha256:df72788de04bbc2e8fca70becb8a9e8503a962b5d7cd9b1842a0c39930d08c94
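The join commands stop working once their credentials expire: the bootstrap token in this walkthrough has a 24-hour TTL, and the init output states that the uploaded certificates are deleted after two hours. The sketch below checks that a pasted token at least has the expected shape, and lists as comments the kubeadm commands that issue fresh credentials. `is_bootstrap_token` is a hypothetical helper name.

```shell
#!/usr/bin/env bash
# is_bootstrap_token TOKEN
# True if TOKEN has the bootstrap-token shape: 6 characters, a dot, 16 characters, all in [a-z0-9].
is_bootstrap_token() {
  [[ $1 =~ ^[a-z0-9]{6}\.[a-z0-9]{16}$ ]]
}

token=7t2weq.bjbawausm0jaxury   # the example token from this walkthrough
if is_bootstrap_token "$token"; then
  echo "token format ok"
else
  echo "token format invalid" >&2
fi

# When the token or the certificate key has expired, regenerate them on Master01:
#   kubeadm token create --print-join-command        # prints a fresh worker join command
#   kubeadm init phase upload-certs --upload-certs   # prints a fresh certificate key for --control-plane joins
```

A format check only catches copy and paste damage such as a truncated token. Whether the token is still valid is decided by the API server at join time.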

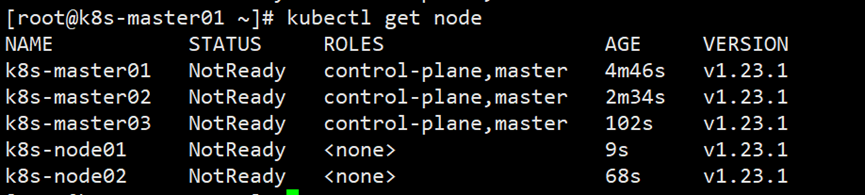

添加完成后結(jié)果如下:

Installing the Calico CNI plugin

Run the following on Master01 only:

cd /root/k8s-ha-install && git checkout manual-installation-v1.23.x && cd calico/

Set the Pod CIDR:

POD_SUBNET=`cat /etc/kubernetes/manifests/kube-controller-manager.yaml | grep cluster-cidr= | awk -F= '{print $NF}'`

sed -i "s#POD_CIDR#${POD_SUBNET}#g" calico.yaml

kubectl apply -f calico.yaml
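If the grep finds no cluster-cidr flag, POD_SUBNET is empty and the sed silently writes an empty CIDR into calico.yaml. A slightly more defensive sketch of the same two steps follows. The function names are hypothetical, and it prints the rendered manifest instead of editing the file in place so the result can be inspected first.

```shell
#!/usr/bin/env bash
# pod_cidr_from_manifest FILE: print the value of the first --cluster-cidr= flag in FILE.
pod_cidr_from_manifest() {
  awk -F= '/--cluster-cidr=/ { print $NF; exit }' "$1"
}

# render_calico TEMPLATE CIDR: print TEMPLATE with POD_CIDR replaced, refusing an empty CIDR.
render_calico() {
  local template=$1 cidr=$2
  if [ -z "$cidr" ]; then
    echo "Pod CIDR is empty; not rendering $template" >&2
    return 1
  fi
  # "#" is the sed delimiter because the CIDR itself contains "/".
  sed "s#POD_CIDR#${cidr}#g" "$template"
}

# Usage on Master01, from the calico/ directory:
#   cidr=$(pod_cidr_from_manifest /etc/kubernetes/manifests/kube-controller-manager.yaml)
#   render_calico calico.yaml "$cidr" | kubectl apply -f -
```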

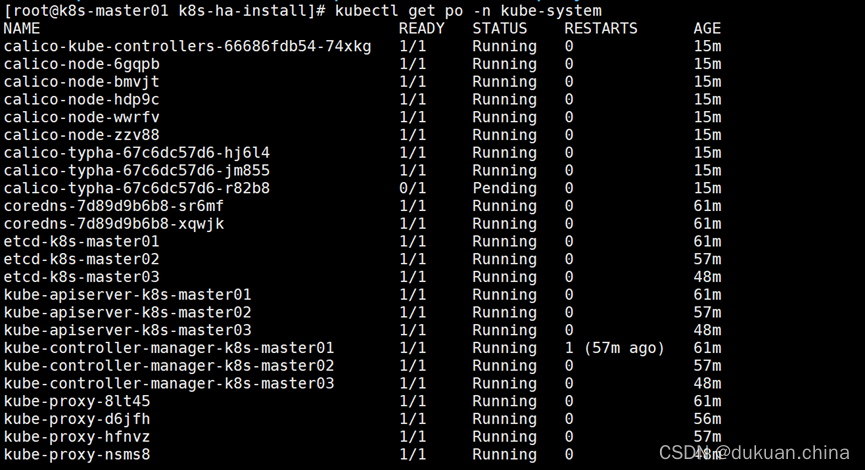

創(chuàng)建完成后,等待幾分鐘后查看狀態(tài):

Deploying Metrics Server

Copy front-proxy-ca.crt from Master01 to all worker nodes:

scp /etc/kubernetes/pki/front-proxy-ca.crt k8s-node01:/etc/kubernetes/pki/front-proxy-ca.crt

# Repeat for every remaining node, for example k8s-node02:
scp /etc/kubernetes/pki/front-proxy-ca.crt k8s-node02:/etc/kubernetes/pki/front-proxy-ca.crt

Install Metrics Server:

cd /root/k8s-ha-install/kubeadm-metrics-server

# kubectl create -f comp.yaml

serviceaccount/metrics-server created

clusterrole.rbac.authorization.k8s.io/system:aggregated-metrics-reader created

clusterrole.rbac.authorization.k8s.io/system:metrics-server created

rolebinding.rbac.authorization.k8s.io/metrics-server-auth-reader created

clusterrolebinding.rbac.authorization.k8s.io/metrics-server:system:auth-delegator created

clusterrolebinding.rbac.authorization.k8s.io/system:metrics-server created

service/metrics-server created

deployment.apps/metrics-server created

apiservice.apiregistration.k8s.io/v1beta1.metrics.k8s.io created

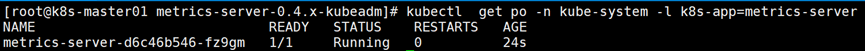

查看Metrics Server狀態(tài):

狀態(tài)正常后,查看度量指標(biāo):

# kubectl top node

NAME CPU(cores) CPU% MEMORY(bytes) MEMORY%

k8s-master01 153m 3% 1701Mi 44%

k8s-master02 125m 3% 1693Mi 44%

k8s-master03 129m 3% 1590Mi 41%

k8s-node01 73m 1% 989Mi 25%

k8s-node02 64m 1% 950Mi 24%

# kubectl top po -A

NAMESPACE NAME CPU(cores) MEMORY(bytes)

kube-system calico-kube-controllers-66686fdb54-74xkg 2m 17Mi

kube-system calico-node-6gqpb 21m 85Mi

kube-system calico-node-bmvjt 29m 76Mi

kube-system calico-node-hdp9c 15m 82Mi

kube-system calico-node-wwrfv 23m 86Mi

kube-system calico-node-zzv88 22m 84Mi

kube-system calico-typha-67c6dc57d6-hj6l4 2m 23Mi

kube-system calico-typha-67c6dc57d6-jm855 2m 22Mi

kube-system coredns-7d89d9b6b8-sr6mf 1m 16Mi

kube-system coredns-7d89d9b6b8-xqwjk 1m 16Mi

kube-system etcd-k8s-master01 24m 96Mi

kube-system etcd-k8s-master02 20m 91Mi

kube-system etcd-k8s-master03 21m 92Mi

kube-system kube-apiserver-k8s-master01 41m 502Mi

kube-system kube-apiserver-k8s-master02 35m 476Mi

kube-system kube-apiserver-k8s-master03 71m 480Mi

kube-system kube-controller-manager-k8s-master01 15m 65Mi

kube-system kube-controller-manager-k8s-master02 1m 26Mi

kube-system kube-controller-manager-k8s-master03 2m 27Mi

kube-system kube-proxy-8lt45 1m 18Mi

kube-system kube-proxy-d6jfh 1m 18Mi

kube-system kube-proxy-hfnvz 1m 19Mi

kube-system kube-proxy-nsms8 1m 18Mi

kube-system kube-proxy-xmlhq 3m 21Mi

kube-system kube-scheduler-k8s-master01 2m 26Mi

kube-system kube-scheduler-k8s-master02 2m 24Mi

kube-system kube-scheduler-k8s-master03 2m 24Mi

kube-system metrics-server-d54b585c4-4dqpf 46m 16Mi

Deploying the Dashboard

Installation

cd /root/k8s-ha-install/dashboard/

[root@k8s-master01 dashboard]# kubectl create -f .

serviceaccount/admin-user created

clusterrolebinding.rbac.authorization.k8s.io/admin-user created

namespace/kubernetes-dashboard created

serviceaccount/kubernetes-dashboard created

service/kubernetes-dashboard created

secret/kubernetes-dashboard-certs created

secret/kubernetes-dashboard-csrf created

secret/kubernetes-dashboard-key-holder created

configmap/kubernetes-dashboard-settings created

role.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrole.rbac.authorization.k8s.io/kubernetes-dashboard created

rolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

clusterrolebinding.rbac.authorization.k8s.io/kubernetes-dashboard created

deployment.apps/kubernetes-dashboard created

service/dashboard-metrics-scraper created

deployment.apps/dashboard-metrics-scraper created

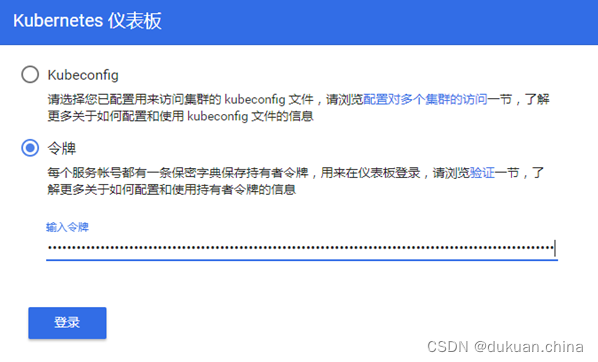

Logging in to the Dashboard

Look up the Dashboard's port number:

# kubectl get svc kubernetes-dashboard -n kubernetes-dashboard

Get the administrator token:

[root@k8s-master01 1.1.1]# kubectl -n kube-system describe secret $(kubectl -n kube-system get secret | grep admin-user | awk '{print $1}')

Name: admin-user-token-r4vcp

Namespace: kube-system

Labels: <none>

Annotations: kubernetes.io/service-account.name: admin-user

kubernetes.io/service-account.uid: 2112796c-1c9e-11e9-91ab-000c298bf023

Type: kubernetes.io/service-account-token

Data

====

ca.crt: 1025 bytes

namespace: 11 bytes

token: eyJhbGciOiJSUzI1NiIsImtpZCI6IiJ9.eyJpc3MiOiJrdWJlcm5ldGVzL3NlcnZpY2VhY2NvdW50Iiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9uYW1lc3BhY2UiOiJrdWJlLXN5c3RlbSIsImt1YmVybmV0ZXMuaW8vc2VydmljZWFjY291bnQvc2VjcmV0Lm5hbWUiOiJhZG1pbi11c2VyLXRva2VuLXI0dmNwIiwia3ViZXJuZXRlcy5pby9zZXJ2aWNlYWNjb3VudC9zZXJ2aWNlLWFjY291bnQubmFtZSI6ImFkbWluLXVzZXIiLCJrdWJlcm5ldGVzLmlvL3NlcnZpY2VhY2NvdW50L3NlcnZpY2UtYWNjb3VudC51aWQiOiIyMTEyNzk2Yy0xYzllLTExZTktOTFhYi0wMDBjMjk4YmYwMjMiLCJzdWIiOiJzeXN0ZW06c2VydmljZWFjY291bnQ6a3ViZS1zeXN0ZW06YWRtaW4tdXNlciJ9.bWYmwgRb-90ydQmyjkbjJjFt8CdO8u6zxVZh-19rdlL_T-n35nKyQIN7hCtNAt46u6gfJ5XXefC9HsGNBHtvo_Ve6oF7EXhU772aLAbXWkU1xOwQTQynixaypbRIas_kiO2MHHxXfeeL_yYZRrgtatsDBxcBRg-nUQv4TahzaGSyK42E_4YGpLa3X3Jc4t1z0SQXge7lrwlj8ysmqgO4ndlFjwPfvg0eoYqu9Qsc5Q7tazzFf9mVKMmcS1ppPutdyqNYWL62P1prw_wclP0TezW1CsypjWSVT4AuJU8YmH8nTNR1EXn8mJURLSjINv6YbZpnhBIPgUGk1JYVLcn47w
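The token is a JWT, so its middle section can be decoded to confirm which service account it belongs to before pasting it into the login page. The sketch below is one way to do that with coreutils only. `jwt_payload` is a hypothetical helper, and it decodes the payload without verifying the signature.

```shell
#!/usr/bin/env bash
# jwt_payload TOKEN: print the decoded payload (second dot-separated part) of a JWT.
jwt_payload() {
  local payload
  # JWTs use the URL-safe base64 alphabet; map it back to the standard one.
  payload=$(printf '%s' "$1" | cut -d. -f2 | tr '_-' '/+')
  # base64url drops the trailing "=" padding; restore it before decoding.
  while (( ${#payload} % 4 != 0 )); do payload+="="; done
  printf '%s' "$payload" | base64 -d
}

# Usage: pass the token string printed by "kubectl describe secret":
#   jwt_payload "<token>"
```

For an admin-user token, the decoded JSON should name the admin-user service account in the kube-system namespace.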

The Dashboard can then be reached at any node's IP address plus that port number.

Reference: K8s Architect video course
