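How do you recognize the handwritten digit dataset with a convolutional neural network (CNN)?

A few days ago I used a CNN to recognize the handwritten digit dataset. Later I saw that Kaggle has a competition on recognizing handwritten digits; it has been running for more than a year and currently has 1179 valid submissions, the best of which scores 100%. I gave it a try with Keras. I started with the simplest MLP, which only reached 98.19% accuracy, then kept improving it, and it is now at 99.78%. But first place is at 100%, heartbroken = =, so I made yet another revision. Here I record the best result so far, and I will update this post if it improves.

I'm sure everyone is familiar with the handwritten digit dataset (MNIST). This program is the equivalent of "Hello World" when learning a new language, or "WordCount" in MapReduce :) so I won't introduce it in detail. Here is a quick look: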

    # Author: Charlotte
    # Plot mnist dataset
    from keras.datasets import mnist
    import matplotlib.pyplot as plt

    # load the MNIST dataset
    (X_train, y_train), (X_test, y_test) = mnist.load_data()

    # plot the first 4 images in a 2x2 grid using the 'PuBuGn_r' colormap
    plt.subplot(221)
    plt.imshow(X_train[0], cmap=plt.get_cmap('PuBuGn_r'))
    plt.subplot(222)
    plt.imshow(X_train[1], cmap=plt.get_cmap('PuBuGn_r'))
    plt.subplot(223)
    plt.imshow(X_train[2], cmap=plt.get_cmap('PuBuGn_r'))
    plt.subplot(224)
    plt.imshow(X_train[3], cmap=plt.get_cmap('PuBuGn_r'))

    # show the plot
    plt.show()

Figure:

1.Baseline version

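At first I didn't think of using a CNN because it is time-consuming, so I wanted to see whether a simpler algorithm could already give good results. I had previously run a round of classical machine learning algorithms, and the best was an SVM at 96.8% (default parameters, not tuned), so this time I decided to use a neural network. The baseline version uses a multilayer perceptron (MLP). The network structure is simple: input ---> hidden ---> output. The hidden layer uses the rectified linear unit (ReLU), and the output layer uses softmax for multi-class classification.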
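The input ---> hidden ---> output structure described above can be sketched in plain Python. This is only a minimal illustration and not the Keras implementation: the helper names (`relu`, `softmax`, `dense`, `mlp_forward`), the toy layer sizes, and the random weights are all assumptions made for the example.

```python
import math
import random


def relu(values):
    """Rectified linear unit: max(0, x) applied element-wise."""
    return [max(0.0, v) for v in values]


def softmax(values):
    """Turn raw scores into probabilities that sum to 1."""
    peak = max(values)  # subtract the max for numerical stability
    exps = [math.exp(v - peak) for v in values]
    total = sum(exps)
    return [e / total for e in exps]


def dense(inputs, weights, biases):
    """Fully connected layer: one weighted sum plus bias per output unit."""
    return [sum(w * x for w, x in zip(row, inputs)) + b
            for row, b in zip(weights, biases)]


def mlp_forward(pixels, hidden_w, hidden_b, out_w, out_b):
    """input ---> hidden (ReLU) ---> output (softmax)."""
    hidden = relu(dense(pixels, hidden_w, hidden_b))
    return softmax(dense(hidden, out_w, out_b))


# Toy sizes (hypothetical): 4 "pixels", 3 hidden units, 2 classes.
rng = random.Random(7)
hidden_w = [[rng.gauss(0, 0.05) for _ in range(4)] for _ in range(3)]
out_w = [[rng.gauss(0, 0.05) for _ in range(3)] for _ in range(2)]
probs = mlp_forward([0.0, 0.5, 1.0, 0.25], hidden_w, [0.0] * 3, out_w, [0.0] * 2)
print(probs)  # two class probabilities that sum to 1
```

In the real model the input has 784 values (28 x 28 pixels), the hidden layer has 784 units, and the output has 10 classes; the predicted digit is the index of the largest probability.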

Network structure:

Code:

    # coding:utf-8
    # Baseline MLP for MNIST dataset
    # Note: this uses the Keras 1.x API (init=, nb_epoch=); Keras 2 renamed
    # these arguments to kernel_initializer= and epochs=.
    import numpy
    from keras.datasets import mnist
    from keras.models import Sequential
    from keras.layers import Dense
    from keras.layers import Dropout
    from keras.utils import np_utils

    # fix the random seed for reproducibility
    seed = 7
    numpy.random.seed(seed)

    # load data
    (X_train, y_train), (X_test, y_test) = mnist.load_data()

    # flatten each 28x28 image into a 784-element vector
    num_pixels = X_train.shape[1] * X_train.shape[2]
    X_train = X_train.reshape(X_train.shape[0], num_pixels).astype('float32')
    X_test = X_test.reshape(X_test.shape[0], num_pixels).astype('float32')

    # normalize inputs from 0-255 to 0-1
    X_train = X_train / 255
    X_test = X_test / 255

    # one hot encode outputs
    y_train = np_utils.to_categorical(y_train)
    y_test = np_utils.to_categorical(y_test)
    num_classes = y_test.shape[1]

    # MLP model: 784 inputs -> 784 hidden units (ReLU) -> 10 outputs (softmax)
    def baseline_model():
        model = Sequential()
        model.add(Dense(num_pixels, input_dim=num_pixels, init='normal', activation='relu'))
        model.add(Dense(num_classes, init='normal', activation='softmax'))
        model.summary()
        model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
        return model

    # build the model
    model = baseline_model()

    # Fit (the test set is used as validation data)
    model.fit(X_train, y_train, validation_data=(X_test, y_test), nb_epoch=10, batch_size=200, verbose=2)

    # Evaluation
    scores = model.evaluate(X_test, y_test, verbose=0)
    print("Baseline Error: %.2f%%" % (100-scores[1]*100))  # print the error rate

Result:

    Layer (type)                     Output Shape          Param #     Connected to
    ====================================================================================================
    dense_1 (Dense)                  (None, 784)           615440      dense_input_1[0][0]
    ____________________________________________________________________________________________________
    dense_2 (Dense)                  (None, 10)            7850        dense_1[0][0]
    ====================================================================================================
    Total params: 623290
    ____________________________________________________________________________________________________
    Train on 60000 samples, validate on 10000 samples
    Epoch 1/10
    3s - loss: 0.2791 - acc: 0.9203 - val_loss: 0.1420 - val_acc: 0.9579
    Epoch 2/10
    3s - loss: 0.1122 - acc: 0.9679 - val_loss: 0.0992 - val_acc: 0.9699
    Epoch 3/10
    3s - loss: 0.0724 - acc: 0.9790 - val_loss: 0.0784 - val_acc: 0.9745
    Epoch 4/10
    3s - loss: 0.0509 - acc: 0.9853 - val_loss: 0.0774 - val_acc: 0.9773
    Epoch 5/10
    3s - loss: 0.0366 - acc: 0.9898 - val_loss: 0.0626 - val_acc: 0.9794
    Epoch 6/10
    3s - loss: 0.0265 - acc: 0.9930 - val_loss: 0.0639 - val_acc: 0.9797
    Epoch 7/10
    3s - loss: 0.0185 - acc: 0.9956 - val_loss: 0.0611 - val_acc: 0.9811
    Epoch 8/10
    3s - loss: 0.0150 - acc: 0.9967 - val_loss: 0.0616 - val_acc: 0.9816
    Epoch 9/10
    4s - loss: 0.0107 - acc: 0.9980 - val_loss: 0.0604 - val_acc: 0.9821
    Epoch 10/10
    4s - loss: 0.0073 - acc: 0.9988 - val_loss: 0.0611 - val_acc: 0.9819
    Baseline Error: 1.81%
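The parameter counts in the summary above can be checked by hand: a fully connected layer has one weight per input-output pair plus one bias per output unit. The helper name `dense_params` below is made up for this sketch.

```python
def dense_params(n_inputs, n_units):
    """Weights (n_inputs * n_units) plus one bias per unit."""
    return n_inputs * n_units + n_units


num_pixels = 28 * 28                            # 784 inputs per image
hidden = dense_params(num_pixels, num_pixels)   # dense_1: 784 -> 784
output = dense_params(num_pixels, 10)           # dense_2: 784 -> 10
print(hidden, output, hidden + output)          # 615440 7850 623290
```

Almost all of the parameters sit in the 784 x 784 hidden layer, which is part of why this MLP fits the training set so easily.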

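As you can see, the result is quite good: accuracy is 98.19% and the error rate is only 1.81%, after just ten epochs. At this point I still wasn't thinking of a CNN; instead I wondered whether 100 epochs would do better. So I trained for 100 epochs, with the following result: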

Epoch 100/100

8s - loss: 4.6181e-07 - acc: 1.0000 - val_loss: 0.0982 - val_acc: 0.9854

Baseline Error: 1.46%

The result shows that 100 epochs only improved accuracy by 0.35 percentage points and still did not break 99%, so I decided to try a CNN.

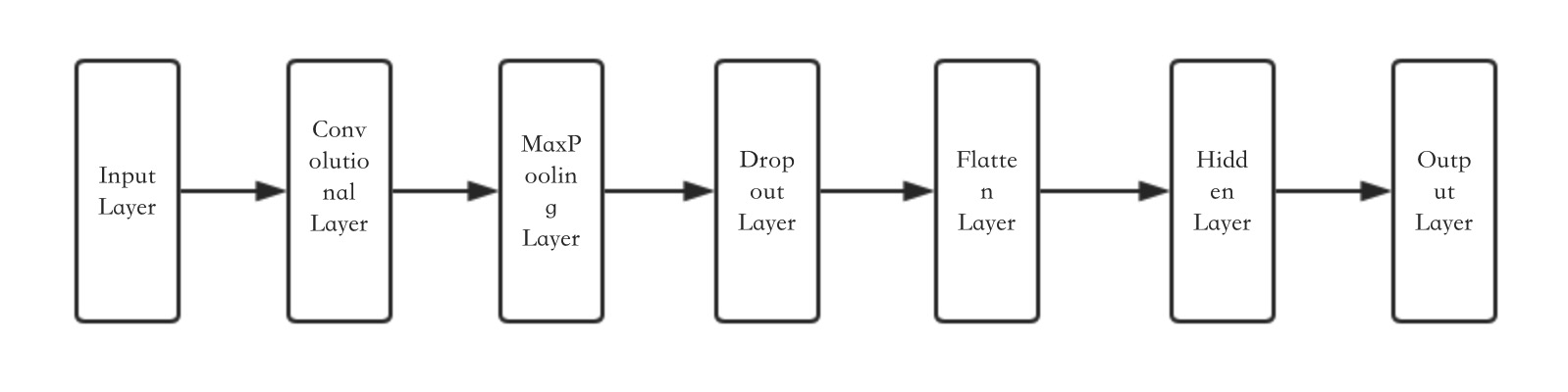

2.Simple CNN

Keras's CNN modules are quite complete. Since this post focuses on the CNN results, I won't go into the basics of CNNs.

Network structure:

Code:

    # coding: utf-8
    # Simple CNN
    # Note: Keras 1.x API with channels-first input, i.e. shape (1, 28, 28).
    import numpy
    from keras.datasets import mnist
    from keras.models import Sequential
    from keras.layers import Dense
    from keras.layers import Dropout
    from keras.layers import Flatten
    from keras.layers.convolutional import Convolution2D
    from keras.layers.convolutional import MaxPooling2D
    from keras.utils import np_utils

    # fix the random seed for reproducibility
    seed = 7
    numpy.random.seed(seed)

    # load data
    (X_train, y_train), (X_test, y_test) = mnist.load_data()
    # reshape to be [samples][channels][width][height]
    X_train = X_train.reshape(X_train.shape[0], 1, 28, 28).astype('float32')
    X_test = X_test.reshape(X_test.shape[0], 1, 28, 28).astype('float32')

    # normalize inputs from 0-255 to 0-1
    X_train = X_train / 255
    X_test = X_test / 255

    # one hot encode outputs
    y_train = np_utils.to_categorical(y_train)
    y_test = np_utils.to_categorical(y_test)
    num_classes = y_test.shape[1]

    # define a simple CNN model
    def baseline_model():
        # create model: one 5x5 convolution, 2x2 max pooling, dropout, two dense layers
        model = Sequential()
        model.add(Convolution2D(32, 5, 5, border_mode='valid', input_shape=(1, 28, 28), activation='relu'))
        model.add(MaxPooling2D(pool_size=(2, 2)))
        model.add(Dropout(0.2))
        model.add(Flatten())
        model.add(Dense(128, activation='relu'))
        model.add(Dense(num_classes, activation='softmax'))
        # Compile model
        model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
        return model

    # build the model
    model = baseline_model()

    # Fit the model
    model.fit(X_train, y_train, validation_data=(X_test, y_test), nb_epoch=10, batch_size=128, verbose=2)

    # Final evaluation of the model
    scores = model.evaluate(X_test, y_test, verbose=0)
    print("CNN Error: %.2f%%" % (100-scores[1]*100))

Result:

    ____________________________________________________________________________________________________
    Layer (type)                     Output Shape          Param #     Connected to
    ====================================================================================================
    convolution2d_1 (Convolution2D)  (None, 32, 24, 24)    832         convolution2d_input_1[0][0]
    ____________________________________________________________________________________________________
    maxpooling2d_1 (MaxPooling2D)    (None, 32, 12, 12)    0           convolution2d_1[0][0]
    ____________________________________________________________________________________________________
    dropout_1 (Dropout)              (None, 32, 12, 12)    0           maxpooling2d_1[0][0]
    ____________________________________________________________________________________________________
    flatten_1 (Flatten)              (None, 4608)          0           dropout_1[0][0]
    ____________________________________________________________________________________________________
    dense_1 (Dense)                  (None, 128)           589952      flatten_1[0][0]
    ____________________________________________________________________________________________________
    dense_2 (Dense)                  (None, 10)            1290        dense_1[0][0]
    ====================================================================================================
    Total params: 592074
    ____________________________________________________________________________________________________
    Train on 60000 samples, validate on 10000 samples
    Epoch 1/10
    32s - loss: 0.2412 - acc: 0.9318 - val_loss: 0.0754 - val_acc: 0.9766
    Epoch 2/10
    32s - loss: 0.0726 - acc: 0.9781 - val_loss: 0.0534 - val_acc: 0.9829
    Epoch 3/10
    32s - loss: 0.0497 - acc: 0.9852 - val_loss: 0.0391 - val_acc: 0.9858
    Epoch 4/10
    32s - loss: 0.0413 - acc: 0.9870 - val_loss: 0.0432 - val_acc: 0.9854
    Epoch 5/10
    34s - loss: 0.0323 - acc: 0.9897 - val_loss: 0.0375 - val_acc: 0.9869
    Epoch 6/10
    36s - loss: 0.0281 - acc: 0.9909 - val_loss: 0.0424 - val_acc: 0.9864
    Epoch 7/10
    36s - loss: 0.0223 - acc: 0.9930 - val_loss: 0.0328 - val_acc: 0.9893
    Epoch 8/10
    36s - loss: 0.0198 - acc: 0.9939 - val_loss: 0.0381 - val_acc: 0.9880
    Epoch 9/10
    36s - loss: 0.0156 - acc: 0.9954 - val_loss: 0.0347 - val_acc: 0.9884
    Epoch 10/10
    36s - loss: 0.0141 - acc: 0.9955 - val_loss: 0.0318 - val_acc: 0.9893
    CNN Error: 1.07%
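The output shapes in the summary above follow from two small formulas: a 'valid' convolution shrinks each side by (kernel - 1), and non-overlapping max pooling divides each side by the pool size. A quick sketch, with helper names (`valid_conv_output`, `max_pool_output`) that are assumptions for this example only:

```python
def valid_conv_output(size, kernel, stride=1):
    """Side length after a 'valid' (no padding) convolution."""
    return (size - kernel) // stride + 1


def max_pool_output(size, pool):
    """Side length after non-overlapping max pooling."""
    return size // pool


side = 28                                    # MNIST images are 28 x 28
after_conv = valid_conv_output(side, 5)      # 5x5 kernel
after_pool = max_pool_output(after_conv, 2)  # 2x2 pooling
flattened = 32 * after_pool * after_pool     # 32 feature maps
print(after_conv, after_pool, flattened)     # 24 12 4608
```

The 4608 flattened values feed the 128-unit dense layer, which is where most of this model's 592074 parameters come from.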

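In the per-epoch output, loss and acc are the results on the training set, while val_loss and val_acc are the results on the validation set (here, the MNIST test set passed as validation_data). The result is good: the CNN's error rate is 1.07%, which is 0.39 percentage points lower than the 100-epoch MLP (1.46%). This CNN's structure is still fairly simple. If we add a few more layers and make it a bit more complex, can the result improve further?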

3.Larger CNN

This time I added more layers: a second convolution and pooling stage and an extra fully connected layer. Code:

    # Larger CNN
    import numpy
    from keras.datasets import mnist
    from keras.models import Sequential
    from keras.layers import Dense
    from keras.layers import Dropout
    from keras.layers import Flatten
    from keras.layers.convolutional import Convolution2D
    from keras.layers.convolutional import MaxPooling2D
    from keras.utils import np_utils

    # fix the random seed for reproducibility
    seed = 7
    numpy.random.seed(seed)
    # load data
    (X_train, y_train), (X_test, y_test) = mnist.load_data()
    # reshape to be [samples][channels][width][height]
    X_train = X_train.reshape(X_train.shape[0], 1, 28, 28).astype('float32')
    X_test = X_test.reshape(X_test.shape[0], 1, 28, 28).astype('float32')
    # normalize inputs from 0-255 to 0-1
    X_train = X_train / 255
    X_test = X_test / 255
    # one hot encode outputs
    y_train = np_utils.to_categorical(y_train)
    y_test = np_utils.to_categorical(y_test)
    num_classes = y_test.shape[1]

    # define the larger model
    def larger_model():
        # create model: two convolution/pooling stages, then three dense layers
        model = Sequential()
        model.add(Convolution2D(30, 5, 5, border_mode='valid', input_shape=(1, 28, 28), activation='relu'))
        model.add(MaxPooling2D(pool_size=(2, 2)))
        model.add(Convolution2D(15, 3, 3, activation='relu'))
        model.add(MaxPooling2D(pool_size=(2, 2)))
        model.add(Dropout(0.2))
        model.add(Flatten())
        model.add(Dense(128, activation='relu'))
        model.add(Dense(50, activation='relu'))
        model.add(Dense(num_classes, activation='softmax'))
        # Compile model
        model.summary()
        model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
        return model

    # build the model
    model = larger_model()
    # Fit the model (10 epochs, matching the "Epoch x/10" lines in the log for this run)
    model.fit(X_train, y_train, validation_data=(X_test, y_test), nb_epoch=10, batch_size=200, verbose=2)
    # Final evaluation of the model
    scores = model.evaluate(X_test, y_test, verbose=0)
    print("Large CNN Error: %.2f%%" % (100-scores[1]*100))

Result:

    ____________________________________________________________________________________________________
    Layer (type)                     Output Shape          Param #     Connected to
    ====================================================================================================
    convolution2d_1 (Convolution2D)  (None, 30, 24, 24)    780         convolution2d_input_1[0][0]
    ____________________________________________________________________________________________________
    maxpooling2d_1 (MaxPooling2D)    (None, 30, 12, 12)    0           convolution2d_1[0][0]
    ____________________________________________________________________________________________________
    convolution2d_2 (Convolution2D)  (None, 15, 10, 10)    4065        maxpooling2d_1[0][0]
    ____________________________________________________________________________________________________
    maxpooling2d_2 (MaxPooling2D)    (None, 15, 5, 5)      0           convolution2d_2[0][0]
    ____________________________________________________________________________________________________
    dropout_1 (Dropout)              (None, 15, 5, 5)      0           maxpooling2d_2[0][0]
    ____________________________________________________________________________________________________
    flatten_1 (Flatten)              (None, 375)           0           dropout_1[0][0]
    ____________________________________________________________________________________________________
    dense_1 (Dense)                  (None, 128)           48128       flatten_1[0][0]
    ____________________________________________________________________________________________________
    dense_2 (Dense)                  (None, 50)            6450        dense_1[0][0]
    ____________________________________________________________________________________________________
    dense_3 (Dense)                  (None, 10)            510         dense_2[0][0]
    ====================================================================================================
    Total params: 59933
    ____________________________________________________________________________________________________
    Train on 60000 samples, validate on 10000 samples
    Epoch 1/10
    34s - loss: 0.3789 - acc: 0.8796 - val_loss: 0.0811 - val_acc: 0.9742
    Epoch 2/10
    34s - loss: 0.0929 - acc: 0.9710 - val_loss: 0.0462 - val_acc: 0.9854
    Epoch 3/10
    35s - loss: 0.0684 - acc: 0.9786 - val_loss: 0.0376 - val_acc: 0.9869
    Epoch 4/10
    35s - loss: 0.0546 - acc: 0.9826 - val_loss: 0.0332 - val_acc: 0.9890
    Epoch 5/10
    35s - loss: 0.0467 - acc: 0.9856 - val_loss: 0.0289 - val_acc: 0.9897
    Epoch 6/10
    35s - loss: 0.0402 - acc: 0.9873 - val_loss: 0.0291 - val_acc: 0.9902
    Epoch 7/10
    34s - loss: 0.0369 - acc: 0.9880 - val_loss: 0.0233 - val_acc: 0.9924
    Epoch 8/10
    36s - loss: 0.0336 - acc: 0.9894 - val_loss: 0.0258 - val_acc: 0.9913
    Epoch 9/10
    39s - loss: 0.0317 - acc: 0.9899 - val_loss: 0.0219 - val_acc: 0.9926
    Epoch 10/10
    40s - loss: 0.0268 - acc: 0.9916 - val_loss: 0.0220 - val_acc: 0.9919
    Large CNN Error: 0.81%
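It may look odd that the "larger" CNN has about ten times fewer parameters than the simple one (59933 vs. 592074). Walking through both layer stacks shows why: the second pooling stage shrinks the flattened vector from 4608 to 375 values, so the first dense layer becomes much smaller. The sketch below is a hand-rolled calculator, not a Keras API; `count_params` and the tuple-based layer description are assumptions for illustration, and dropout layers are left out because they have no parameters.

```python
def count_params(layers, input_shape):
    """Walk a channels-first layer list; return (total_params, final_units)."""
    channels, height, width = input_shape
    units = None  # set once the feature maps are flattened
    total = 0
    for layer in layers:
        kind = layer[0]
        if kind == "conv":      # ("conv", filters, kernel): valid padding, stride 1
            _, filters, kernel = layer
            total += filters * channels * kernel * kernel + filters
            channels = filters
            height, width = height - kernel + 1, width - kernel + 1
        elif kind == "pool":    # ("pool", size): non-overlapping max pooling
            height, width = height // layer[1], width // layer[1]
        elif kind == "flatten":
            units = channels * height * width
        elif kind == "dense":   # ("dense", n_units): weights plus biases
            total += units * layer[1] + layer[1]
            units = layer[1]
    return total, units


simple_cnn = [("conv", 32, 5), ("pool", 2), ("flatten",),
              ("dense", 128), ("dense", 10)]
larger_cnn = [("conv", 30, 5), ("pool", 2), ("conv", 15, 3), ("pool", 2),
              ("flatten",), ("dense", 128), ("dense", 50), ("dense", 10)]

print(count_params(simple_cnn, (1, 28, 28))[0])  # 592074
print(count_params(larger_cnn, (1, 28, 28))[0])  # 59933
```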

The result is good: accuracy is now 99.19%.

4.Final version

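The network structure is unchanged; I only added dropout after each layer, and to my surprise the result improved significantly. At first I trained for 500 epochs, which took forever and ended up overfitting. I then noticed that the result was already very good at epoch 69, so I chose to train for 69 epochs.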
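For readers who have not used dropout before, here is a rough sketch of what a dropout layer does during training. It assumes the common "inverted dropout" formulation, where surviving activations are scaled by 1 / (1 - rate) so their expected value is unchanged and nothing needs to be done at test time. The function name `dropout` and the list-based interface are made up for this example; it is not the Keras implementation.

```python
import random


def dropout(values, rate, training, rng):
    """Zero each value with probability `rate`; rescale the survivors."""
    if not training or rate == 0.0:
        return list(values)  # at test time the layer passes values through
    keep = 1.0 - rate
    return [v / keep if rng.random() < keep else 0.0 for v in values]


rng = random.Random(7)
activations = [1.0] * 10
print(dropout(activations, 0.4, True, rng))   # a mix of 0.0 and 1/0.6 values
print(dropout(activations, 0.4, False, rng))  # unchanged at test time
```

Because a different random subset of units is silenced on every batch, the network cannot rely on any single unit, which tends to reduce overfitting. With a rate of 0.4, roughly 40% of the activations are zeroed on each pass.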

    # Larger CNN for the MNIST Dataset
    import numpy
    from keras.datasets import mnist
    from keras.models import Sequential
    from keras.layers import Dense
    from keras.layers import Dropout
    from keras.layers import Flatten
    from keras.layers.convolutional import Convolution2D
    from keras.layers.convolutional import MaxPooling2D
    from keras.utils import np_utils
    import matplotlib.pyplot as plt
    from keras.constraints import maxnorm
    from keras.optimizers import SGD

    # fix random seed for reproducibility
    seed = 7
    numpy.random.seed(seed)
    # load data
    (X_train, y_train), (X_test, y_test) = mnist.load_data()
    # reshape to be [samples][channels][width][height]
    X_train = X_train.reshape(X_train.shape[0], 1, 28, 28).astype('float32')
    X_test = X_test.reshape(X_test.shape[0], 1, 28, 28).astype('float32')
    # normalize inputs from 0-255 to 0-1
    X_train = X_train / 255
    X_test = X_test / 255
    # one hot encode outputs
    y_train = np_utils.to_categorical(y_train)
    y_test = np_utils.to_categorical(y_test)
    num_classes = y_test.shape[1]

    # define the larger model
    def larger_model():
        # create model: same layers as before, with Dropout(0.4) after each stage
        model = Sequential()
        model.add(Convolution2D(30, 5, 5, border_mode='valid', input_shape=(1, 28, 28), activation='relu'))
        model.add(MaxPooling2D(pool_size=(2, 2)))
        model.add(Dropout(0.4))
        model.add(Convolution2D(15, 3, 3, activation='relu'))
        model.add(MaxPooling2D(pool_size=(2, 2)))
        model.add(Dropout(0.4))
        model.add(Flatten())
        model.add(Dense(128, activation='relu'))
        model.add(Dropout(0.4))
        model.add(Dense(50, activation='relu'))
        model.add(Dropout(0.4))
        model.add(Dense(num_classes, activation='softmax'))
        # Compile model
        model.compile(loss='categorical_crossentropy', optimizer='adam', metrics=['accuracy'])
        return model

    # build the model
    model = larger_model()
    # Fit the model (69 epochs, as chosen in the text above)
    model.fit(X_train, y_train, validation_data=(X_test, y_test), nb_epoch=69, batch_size=200, verbose=2)
    # Final evaluation of the model
    scores = model.evaluate(X_test, y_test, verbose=0)
    print("Large CNN Error: %.2f%%" % (100-scores[1]*100))

Result:

    ____________________________________________________________________________________________________
    Layer (type)                     Output Shape          Param #     Connected to
    ====================================================================================================
    convolution2d_1 (Convolution2D)  (None, 30, 24, 24)    780         convolution2d_input_1[0][0]
    ____________________________________________________________________________________________________
    maxpooling2d_1 (MaxPooling2D)    (None, 30, 12, 12)    0           convolution2d_1[0][0]
    ____________________________________________________________________________________________________
    convolution2d_2 (Convolution2D)  (None, 15, 10, 10)    4065        maxpooling2d_1[0][0]
    ____________________________________________________________________________________________________
    maxpooling2d_2 (MaxPooling2D)    (None, 15, 5, 5)      0           convolution2d_2[0][0]
    ____________________________________________________________________________________________________
    dropout_1 (Dropout)              (None, 15, 5, 5)      0           maxpooling2d_2[0][0]
    ____________________________________________________________________________________________________
    flatten_1 (Flatten)              (None, 375)           0           dropout_1[0][0]
    ____________________________________________________________________________________________________
    dense_1 (Dense)                  (None, 128)           48128       flatten_1[0][0]
    ____________________________________________________________________________________________________
    dense_2 (Dense)                  (None, 50)            6450        dense_1[0][0]
    ____________________________________________________________________________________________________
    dense_3 (Dense)                  (None, 10)            510         dense_2[0][0]
    ====================================================================================================
    Total params: 59933
    ____________________________________________________________________________________________________
    Train on 60000 samples, validate on 10000 samples
    Epoch 1/69
    34s - loss: 0.4248 - acc: 0.8619 - val_loss: 0.0832 - val_acc: 0.9746
    Epoch 2/69
    35s - loss: 0.1147 - acc: 0.9638 - val_loss: 0.0518 - val_acc: 0.9831
    Epoch 3/69
    35s - loss: 0.0887 - acc: 0.9719 - val_loss: 0.0452 - val_acc: 0.9855
    ...
    Epoch 66/69
    38s - loss: 0.0134 - acc: 0.9955 - val_loss: 0.0211 - val_acc: 0.9943
    Epoch 67/69
    38s - loss: 0.0114 - acc: 0.9960 - val_loss: 0.0171 - val_acc: 0.9950
    Epoch 68/69
    38s - loss: 0.0116 - acc: 0.9959 - val_loss: 0.0192 - val_acc: 0.9956
    Epoch 69/69
    38s - loss: 0.0132 - acc: 0.9969 - val_loss: 0.0188 - val_acc: 0.9978
    Large CNN Error: 0.22%

    real    41m47.350s
    user    157m51.145s
    sys     6m5.829s

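This is the best result so far, 99.78%. There is still plenty of room for improvement, though, and I will update this post the next time the accuracy goes up.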

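Summary:

1.CNNs do have an advantage over a traditional MLP for image recognition, and also over traditional machine learning algorithms (although some people have obtained very good results with random forests).

2.Deepening the network, i.e. adding more convolutional layers, helps improve accuracy, but it can also slow training down considerably.

3.Adding dropout in moderation can improve accuracy.

4.As for the activation function, well, let me just say it: pick ReLU. The fact that ReLU helps avoid vanishing gradients is reason enough; its other advantages, such as faster training, I will cover in a dedicated post another time.

5.More epochs is not always better, since the model may well overfit. You can draw a convergence curve yourself: in Keras, plot the History object returned by fit to see whether training is converging or diverging.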
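As a small illustration of point 5, the per-epoch numbers can be inspected without any plotting at all. The lists below are copied from the 10-epoch baseline MLP log earlier in this post; in Keras they would typically come from the `history` dictionary of the History object returned by `fit`. The helper name `best_epoch` is an assumption for this sketch.

```python
def best_epoch(val_loss):
    """Return the 1-based epoch with the lowest validation loss."""
    best_index = min(range(len(val_loss)), key=val_loss.__getitem__)
    return best_index + 1


# Values taken from the baseline MLP training log (10 epochs).
train_loss = [0.2791, 0.1122, 0.0724, 0.0509, 0.0366,
              0.0265, 0.0185, 0.0150, 0.0107, 0.0073]
val_loss = [0.1420, 0.0992, 0.0784, 0.0774, 0.0626,
            0.0639, 0.0611, 0.0616, 0.0604, 0.0611]

# Gap between validation and training loss; a steadily growing gap
# suggests the model is starting to overfit.
gaps = [round(v - t, 4) for t, v in zip(train_loss, val_loss)]

print(best_epoch(val_loss))  # 9
print(gaps[0], gaps[-1])     # -0.1371 0.0538
```

Training loss keeps falling while validation loss flattens out around 0.06, which matches what the 100-epoch run showed: training accuracy reached 1.0000 while validation loss rose to 0.0982. The same two lists can be passed to matplotlib's `plt.plot` to draw the convergence curve.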

