Disaster Recovery | A Hands-On Record of Backing Up and Restoring etcd Snapshots in a K8S Cluster

Author homepage: https://weiyigeek.top

Blog: https://blog.weiyigeek.top

Original article: https://mp.weixin.qq.com/s/eblhCVjEFdvw7B-tlNTi-w

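0x00 Preface

Description: In a Kubernetes cluster, the data for every resource is stored in the etcd database. To recover cluster data quickly when nodes fail, when the cluster is migrated, or when some other anomaly occurs, we need to back up the etcd cluster data on a regular schedule.

In a K8S cluster or a Docker environment, backing up etcd is straightforward: taking a snapshot on a single etcd node is usually enough, because the snapshot file contains all Kubernetes state and critical information. With such a backup in hand, the cluster can be restored quickly even in a disaster scenario (for example, losing all control plane nodes), so the boss no longer has to worry that the system will not come back up.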

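0x01 Environment Preparation

1. Install the etcdctl binary

Description: The etcdctl binary can be downloaded from github.com/etcd-io/etcd/releases (the older github.com/coreos/etcd path redirects there). Pick the version you need, for example by running the following install_etcdctl.sh script after adjusting the version in it.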

install_etcdctl.sh

#!/bin/bash

# Author: WeiyiGeek

# Description: download and install etcd and etcdctl

ETCD_VER=v3.5.5

ETCD_DIR=etcd-download

DOWNLOAD_URL=https://github.com/coreos/etcd/releases/download

# Download

mkdir -p ${ETCD_DIR}

cd ${ETCD_DIR}

wget ${DOWNLOAD_URL}/${ETCD_VER}/etcd-${ETCD_VER}-linux-amd64.tar.gz

tar -xzvf etcd-${ETCD_VER}-linux-amd64.tar.gz

# Install

cd etcd-${ETCD_VER}-linux-amd64

cp etcdctl /usr/local/bin/

Verify the installation:

$ etcdctl version

etcdctl version: 3.5.5

API version: 3.5

2. Pull a Docker image that ships etcdctl

Procedure:

# Pull the image and create a container.

docker run --rm \

-v /data/backup:/backup \

-v /etc/kubernetes/pki/etcd:/etc/kubernetes/pki/etcd \

--env ETCDCTL_API=3 \

registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.1-0 \

/bin/sh -c "etcdctl version"

Verify the installation:

3.5.1-0: Pulling from google_containers/etcd

e8614d09b7be: Pull complete

45b6afb4a92f: Pull complete

.......

Digest: sha256:64b9ea357325d5db9f8a723dcf503b5a449177b17ac87d69481e126bb724c263

Status: Downloaded newer image for registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.1-0

etcdctl version: 3.5.1

API version: 3.5

3. Create a Pod in Kubernetes from the etcdctl image

# Pull the image

crictl pull registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.5-0

# Create the Pod and verify the installation

$ kubectl run etcdctl --image=registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.5-0 --command -- /usr/local/bin/etcdctl version

$ kubectl logs -f etcdctl

etcdctl version: 3.5.5

API version: 3.5

$ kubectl delete pod etcdctl

0x02 Backup Practice

1. Using the etcdctl binary client

Tip: On a single-node Kubernetes cluster, a snapshot of its one etcd database is all we need. On a cluster with multiple masters and workers, back up the etcd instance on each master node in turn, to guard against etcd data changing while the backup runs.

The environment used here is a highly available multi-master cluster with three master nodes and four worker nodes:

$ kubectl get node

NAME STATUS ROLES AGE VERSION

weiyigeek-107 Ready control-plane,master 279d v1.23.1

weiyigeek-108 Ready control-plane,master 278d v1.23.1

weiyigeek-109 Ready control-plane,master 278d v1.23.1

weiyigeek-223 Ready work 278d v1.23.1

weiyigeek-224 Ready work 278d v1.23.1

weiyigeek-225 Ready work 279d v1.23.1

weiyigeek-226 Ready work 118d v1.23.1

Procedure:

# 1. Create the directory for etcd snapshot backups

$ mkdir -pv /backup

# 2. Check the etcd certificates

$ ls /etc/kubernetes/pki/etcd/

ca.crt ca.key healthcheck-client.crt healthcheck-client.key peer.crt peer.key server.crt server.key

# 3. Check the etcd addresses and services

$ kubectl get pod -n kube-system -o wide | grep "etcd"

etcd-weiyigeek-107 1/1 Running 1 279d 192.168.12.107 weiyigeek-107 <none><none>

etcd-weiyigeek-108 1/1 Running 0 278d 192.168.12.108 weiyigeek-108 <none><none>

etcd-weiyigeek-109 1/1 Running 0 278d 192.168.12.109 weiyigeek-109 <none><none>

# 4. Check the listening sockets on master nodes 107, 108 and 109

$ netstat -ano | grep "107:2379"

tcp 0 0 192.168.12.107:2379 0.0.0.0:* LISTEN off (0.00/0/0)

$ netstat -ano | grep "108:2379"

tcp 0 0 192.168.12.108:2379 0.0.0.0:* LISTEN off (0.00/0/0)

$ netstat -ano | grep "109:2379"

tcp 0 0 192.168.12.109:2379 0.0.0.0:* LISTEN off (0.00/0/0)

# 5. Use the etcdctl client to back up the data on each node in turn

$ etcdctl --endpoints=https://10.20.176.212:2379 \

--cacert=/etc/kubernetes/pki/etcd/ca.crt \

--cert=/etc/kubernetes/pki/etcd/healthcheck-client.crt \

--key=/etc/kubernetes/pki/etcd/healthcheck-client.key \

snapshot save /backup/etcd-snapshot.db

{"level":"info","ts":"2022-10-23T16:32:26.020+0800","caller":"snapshot/v3_snapshot.go:65","msg":"created temporary db file","path":"/backup/etcd-snapshot.db.part"}

{"level":"info","ts":"2022-10-23T16:32:26.034+0800","logger":"client","caller":"v3/maintenance.go:211","msg":"opened snapshot stream; downloading"}

{"level":"info","ts":"2022-10-23T16:32:26.034+0800","caller":"snapshot/v3_snapshot.go:73","msg":"fetching snapshot","endpoint":"https://10.20.176.212:2379"}

{"level":"info","ts":"2022-10-23T16:32:26.871+0800","logger":"client","caller":"v3/maintenance.go:219","msg":"completed snapshot read; closing"}

{"level":"info","ts":"2022-10-23T16:32:26.946+0800","caller":"snapshot/v3_snapshot.go:88","msg":"fetched snapshot","endpoint":"https://10.20.176.212:2379","size":"112 MB","took":"now"}

{"level":"info","ts":"2022-10-23T16:32:26.946+0800","caller":"snapshot/v3_snapshot.go:97","msg":"saved","path":"/backup/etcd-snapshot.db"}

Snapshot saved at /backup/etcd-snapshot.db
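A fixed file name such as /backup/etcd-snapshot.db is overwritten by every run. The following is a minimal sketch of a timestamped naming helper plus a sanity check of the saved file. The function names snapshot_path and backup_member, the file name layout and the optional timestamp argument are assumptions made for illustration and are not part of the original procedure; the certificate flags are the ones used in step 5, and etcdctl snapshot status is used to inspect the result.

```shell
#!/bin/sh
# Hypothetical helper: build a path like /backup/etcd-107-20221023-163226-snapshot.db.
# $1 = backup directory, $2 = node label, $3 = optional timestamp (defaults to now).
snapshot_path() {
  printf '%s/etcd-%s-%s-snapshot.db\n' "$1" "$2" "${3:-$(date +%Y%m%d-%H%M%S)}"
}

# Hypothetical wrapper around the save command from step 5.
# $1 = endpoint URL, $2 = node label. Defined only; nothing runs until it is called.
backup_member() {
  target=$(snapshot_path /backup "$2") || return 1
  etcdctl --endpoints="$1" \
    --cacert=/etc/kubernetes/pki/etcd/ca.crt \
    --cert=/etc/kubernetes/pki/etcd/healthcheck-client.crt \
    --key=/etc/kubernetes/pki/etcd/healthcheck-client.key \
    snapshot save "$target" || return 1
  # Print hash, revision, key count and size of the saved file.
  etcdctl snapshot status "$target" -w table
}
```

On a master node this could be called as backup_member https://192.168.12.107:2379 107. A snapshot whose status cannot be read should not be trusted as a backup.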

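To query the Kubernetes data in etcd with etcdctl, set a few environment variables first, because Kubernetes uses the etcd v3 API and the etcd cluster uses TLS authentication by default.

# 1. Environment variables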

export ETCDCTL_API=3

export ETCDCTL_CACERT=/etc/kubernetes/pki/etcd/ca.crt

export ETCDCTL_CERT=/etc/kubernetes/pki/etcd/peer.crt

export ETCDCTL_KEY=/etc/kubernetes/pki/etcd/peer.key

# 2. List the keys stored in the cluster

# --prefix: match every key that starts with /registry

# --keys-only=true: print only keys, not values

etcdctl --endpoints=https://10.20.176.212:2379 get /registry --prefix --keys-only=true | head -n 1

/registry/apiextensions.k8s.io/customresourcedefinitions/bgpconfigurations.crd.projectcalico.org

# 3. Query the value of a key

# --keys-only=false: also print the value; false is the default

# -w=json: write the output as JSON

etcdctl --endpoints=https://10.20.176.212:2379 get /registry/namespaces/default --prefix --keys-only=false -w=json | python3 -m json.tool

{

"header": {

"cluster_id": 11404821125176160774,

"member_id": 7099450421952911102,

"revision": 30240109,

"raft_term": 3

},

"kvs": [

{

"key": "L3JlZ2lzdHJ5L25hbWVzcGFjZXMvZGVmYXVsdA==",

"create_revision": 192,

"mod_revision": 192,

"version": 1,

"value": "azhzAAoPCg.......XNwYWNlEGgAiAA=="

}

],

"count": 1

}

# 4. Keys and values are base64-encoded in the JSON output

echo -e "L3JlZ2lzdHJ5L25hbWVzcGFjZXMvZGVmYXVsdA==" | base64 -d

echo -e "azhzAAoPCgJ2MRIJTmFtZXNwYWNlEogCCu0BCgdkZWZhdWx0EgAaACIAKiQ5ZDQyYmYxMy03OGM0LTQ4NzQtOThiYy05NjNlMDg1MDYyZjYyADgAQggIo8yllQYQAFomChtrdWJlcm5ldGVzLmlvL21ldGFkYXRhLm5hbWUSB2RlZmF1bHR6AIoBfQoOa3ViZS1hcGlzZXJ2ZXISBlVwZGF0ZRoCdjEiCAijzKWVBhAAMghGaWVsZHNWMTpJCkd7ImY6bWV0YWRhdGEiOnsiZjpsYWJlbHMiOnsiLiI6e30sImY6a3ViZXJuZXRlcy5pby9tZXRhZGF0YS5uYW1lIjp7fX19fUIAEgwKCmt1YmVybmV0ZXMaCAoGQWN0aXZlGgAiAA==" | base64 -d

# 5. The decoded value corresponds to the following object:

$ kubectl get ns default -o yaml

apiVersion: v1

kind: Namespace

metadata:

creationTimestamp: "2022-06-15T04:54:59Z"

labels:

kubernetes.io/metadata.name: default

name: default

resourceVersion: "192"

uid: 9d42bf13-78c4-4874-98bc-963e085062f6

spec:

finalizers:

- kubernetes

status:

phase: Active

# 6. Inspect Pod resources

etcdctl --endpoints=https://10.20.176.212:2379 get /registry/pods/default --prefix --keys-only=true

# /registry/pods/default/nfs-dev-nfs-subdir-external-provisioner-cf7684f8b-fzl9h

# /registry/pods/default/nfs-local-nfs-subdir-external-provisioner-6f97d44bb8-424tk

etcdctl --endpoints=https://10.20.176.212:2379 get /registry/pods/default --prefix --keys-only=true -w=json | python3 -m json.tool

{

"header": {

"cluster_id": 11404821125176160774,

"member_id": 7099450421952911102,

"revision": 30442415,

"raft_term": 3

},

"kvs": [

{ # this key decodes to /registry/pods/default/nfs-dev-nfs-subdir-external-provisioner-cf7684f8b-fzl9h

"key": "L3JlZ2lzdHJ5L3BvZHMvZGVmYXVsdC9uZnMtZGV2LW5mcy1zdWJkaXItZXh0ZXJuYWwtcHJvdmlzaW9uZXItY2Y3Njg0ZjhiLWZ6bDlo",

"create_revision": 5510865,

"mod_revision": 5510883,

"version": 5

},

{

"key": "L3JlZ2lzdHJ5L3BvZHMvZGVmYXVsdC9uZnMtbG9jYWwtbmZzLXN1YmRpci1leHRlcm5hbC1wcm92aXNpb25lci02Zjk3ZDQ0YmI4LTQyNHRr",

"create_revision": 5510967,

"mod_revision": 5510987,

"version": 5

}

],

"count": 2

}
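The base64-encoded "key" fields in the JSON output above can be turned back into readable paths with a small helper. This is a minimal sketch; decode_key is a hypothetical name chosen for illustration. Values are a different matter: the sample value starts with the bytes "k8s" followed by a zero byte, so decoding it prints binary data rather than YAML.

```shell
#!/bin/sh
# Decode one base64-encoded etcd key taken from the "key" field of the JSON output.
decode_key() {
  printf '%s' "$1" | base64 -d
  printf '\n'
}

decode_key "L3JlZ2lzdHJ5L25hbWVzcGFjZXMvZGVmYXVsdA=="
# prints /registry/namespaces/default
```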

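2. Using etcdctl from a Docker image

Description: On a machine with Docker installed, backing up the etcd database of a K8s cluster is very convenient. Docker is already installed here; readers who are new to Docker will need a working Docker environment first.

# 1. Docker environment used here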

$ docker version

Client: Docker Engine - Community

Version: 20.10.3

.......

Server: Docker Engine - Community

Engine:

Version: 20.10.3

# 2. Directory that stores the etcd backup files

$ mkdir -vp /backup

# 3. Run a throwaway container with docker; it is removed once the database has been backed up.

$ docker run --rm \

-v /backup:/backup \

-v /etc/kubernetes/pki/etcd:/etc/kubernetes/pki/etcd \

--env ETCDCTL_API=3 \

registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.1-0 \

/bin/sh -c "etcdctl --endpoints=https://192.168.12.107:2379 \

--cacert=/etc/kubernetes/pki/etcd/ca.crt \

--key=/etc/kubernetes/pki/etcd/healthcheck-client.key \

--cert=/etc/kubernetes/pki/etcd/healthcheck-client.crt \

snapshot save /backup/etcd-snapshot.db"

# {"level":"info","ts":1666515535.63076,"caller":"snapshot/v3_snapshot.go:68","msg":"created temporary db file","path":"/backup/etcd-snapshot.db.part"}

# {"level":"info","ts":1666515535.6411893,"logger":"client","caller":"v3/maintenance.go:211","msg":"opened snapshot stream; downloading"}

# {"level":"info","ts":1666515535.6419039,"caller":"snapshot/v3_snapshot.go:76","msg":"fetching snapshot","endpoint":"https://192.168.12.107:2379"}

# {"level":"info","ts":1666515535.9170482,"logger":"client","caller":"v3/maintenance.go:219","msg":"completed snapshot read; closing"}

# {"level":"info","ts":1666515535.931862,"caller":"snapshot/v3_snapshot.go:91","msg":"fetched snapshot","endpoint":"https://192.168.12.107:2379","size":"9.0 MB","took":"now"}

# {"level":"info","ts":1666515535.9322069,"caller":"snapshot/v3_snapshot.go:100","msg":"saved","path":"/backup/etcd-snapshot.db"}

Snapshot saved at /backup/etcd-snapshot.db

# 4. Check the etcd snapshot file

ls -alh /backup/etcd-snapshot.db

-rw------- 1 root root 8.6M Oct 23 16:58 /backup/etcd-snapshot.db

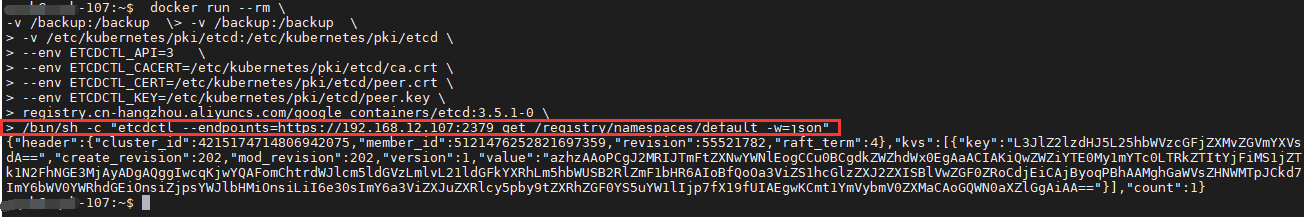

Use a Docker container to read data from the etcd database of the k8s cluster.

$ docker run --rm \

-v /backup:/backup \

-v /etc/kubernetes/pki/etcd:/etc/kubernetes/pki/etcd \

--env ETCDCTL_API=3 \

--env ETCDCTL_CACERT=/etc/kubernetes/pki/etcd/ca.crt \

--env ETCDCTL_CERT=/etc/kubernetes/pki/etcd/peer.crt \

--env ETCDCTL_KEY=/etc/kubernetes/pki/etcd/peer.key \

registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.1-0 \

/bin/sh -c "etcdctl --endpoints=https://192.168.12.107:2379 get /registry/namespaces/default -w=json"

3. Creating a Pod in the Kubernetes cluster for a quick manual backup

Prepare a Pod manifest and deploy it:

cat <<EOF | kubectl apply -f -
apiVersion: v1
kind: Pod
metadata:
  name: etcd-backup
  labels:
    tool: backup
spec:
  containers:
  - name: etcdctl
    image: registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.5-0
    imagePullPolicy: IfNotPresent
    command:
    - sh
    - -c
    - "etcd" # starts a local etcd process only to keep the container alive for kubectl exec
    env:
    - name: ETCDCTL_API
      value: "3"
    - name: ETCDCTL_CACERT
      value: "/etc/kubernetes/pki/etcd/ca.crt"
    - name: ETCDCTL_CERT
      value: "/etc/kubernetes/pki/etcd/healthcheck-client.crt"
    - name: ETCDCTL_KEY
      value: "/etc/kubernetes/pki/etcd/healthcheck-client.key"
    volumeMounts:
    - name: "pki"
      mountPath: "/etc/kubernetes"
    - name: "backup"
      mountPath: "/backup"
  volumes:
  - name: pki
    hostPath:
      path: "/etc/kubernetes" # certificate directory
      type: "DirectoryOrCreate"
  - name: "backup"
    hostPath:
      path: "/storage/dev/backup" # backup directory
      type: "DirectoryOrCreate"
  restartPolicy: Never
  nodeSelector:
    node-role.kubernetes.io/master: "" # schedule onto a master node
EOF

pod/etcd-backup created

Open a shell in the Pod and run the backup commands:

~$ kubectl exec -it etcd-backup -- sh

# Snapshot backup

sh-5.1# export RAND=$RANDOM

sh-5.1# etcdctl --endpoints=https://192.168.12.107:2379 snapshot save /backup/etcd-107-${RAND}-snapshot.db

sh-5.1# etcdctl --endpoints=https://192.168.12.108:2379 snapshot save /backup/etcd-108-${RAND}-snapshot.db

Snapshot saved at /backup/etcd-108-32616-snapshot.db

sh-5.1# etcdctl --endpoints=https://192.168.12.109:2379 snapshot save /backup/etcd-109-${RAND}-snapshot.db

# etcd cluster members

sh-5.1# etcdctl member list --endpoints=https://192.168.12.107:2379 --endpoints=https://192.168.12.108:2379 --endpoints=https://192.168.12.109:2379

2db31a5d67ec1034, started, weiyigeek-108, https://192.168.12.108:2380, https://192.168.12.108:2379, false

42efe7cca897d765, started, weiyigeek-109, https://192.168.12.109:2380, https://192.168.12.109:2379, false

471323846709334f, started, weiyigeek-107, https://192.168.12.107:2380, https://192.168.12.107:2379, false

# etcd member health

sh-5.1# etcdctl endpoint health --endpoints=https://192.168.12.107:2379 --endpoints=https://192.168.12.108:2379 --endpoints=https://192.168.12.109:2379

https://192.168.12.107:2379 is healthy: successfully committed proposal: took = 11.930331ms

https://192.168.12.109:2379 is healthy: successfully committed proposal: took = 11.930993ms

https://192.168.12.108:2379 is healthy: successfully committed proposal: took = 109.515933ms

# etcd member status and storage usage

sh-5.1# etcdctl endpoint status --endpoints=https://192.168.12.107:2379 --endpoints=https://192.168.12.108:2379 --endpoints=https://192.168.12.109:2379

https://192.168.12.107:2379, 471323846709334f, 3.5.1, 9.2 MB, false, false, 4, 71464830, 71464830,

https://192.168.12.108:2379, 2db31a5d67ec1034, 3.5.1, 9.2 MB, false, false, 4, 71464830, 71464830,

https://192.168.12.109:2379, 42efe7cca897d765, 3.5.1, 9.2 MB, true, false, 4, 71464830, 71464830, # this member is the leader

That completes the manual backup of the etcd cluster snapshots.
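The three save commands above differ only in the endpoint, so they can be folded into a loop. The sketch below assumes the ETCDCTL_* variables from the Pod manifest are already set; endpoint_label and backup_all are hypothetical names, and the timestamp replaces $RANDOM purely as an illustration.

```shell
#!/bin/sh
# Hypothetical helper: derive a short node label from an endpoint URL,
# e.g. https://192.168.12.107:2379 -> 107 (last octet of the IP).
endpoint_label() {
  host=${1#*://}     # strip the scheme
  host=${host%%:*}   # strip the port
  printf '%s\n' "${host##*.}"
}

# Back up every endpoint passed as an argument and stop at the first failure.
# Relies on the ETCDCTL_* variables set in the Pod. Defined only, not called here.
backup_all() {
  stamp=$(date +%Y%m%d-%H%M%S)
  for ep in "$@"; do
    etcdctl --endpoints="$ep" snapshot save \
      "/backup/etcd-$(endpoint_label "$ep")-${stamp}-snapshot.db" || return 1
  done
}
```

Inside the Pod it could be invoked as backup_all https://192.168.12.107:2379 https://192.168.12.108:2379 https://192.168.12.109:2379. Returning on the first failure makes a broken member visible instead of silently leaving a gap in the backup set.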

4. Scheduled backups in the Kubernetes cluster with a CronJob

First prepare a CronJob manifest:

cat > etcd-database-backup.yaml <<'EOF'
apiVersion: batch/v1
kind: CronJob
metadata:
  name: etcd-database-backup
  annotations:
    descript: "Scheduled etcd database backup"
spec:
  schedule: "*/5 * * * *" # run every 5 minutes
  jobTemplate:
    spec:
      template:
        spec:
          containers:
          - name: etcdctl
            image: registry.cn-hangzhou.aliyuncs.com/google_containers/etcd:3.5.5-0
            env:
            - name: ETCDCTL_API
              value: "3"
            - name: ETCDCTL_CACERT
              value: "/etc/kubernetes/pki/etcd/ca.crt"
            - name: ETCDCTL_CERT
              value: "/etc/kubernetes/pki/etcd/healthcheck-client.crt"
            - name: ETCDCTL_KEY
              value: "/etc/kubernetes/pki/etcd/healthcheck-client.key"
            command:
            - /bin/sh
            - -c
            - |
              export RAND=$RANDOM
              etcdctl --endpoints=https://192.168.12.107:2379 snapshot save /backup/etcd-107-${RAND}-snapshot.db
              etcdctl --endpoints=https://192.168.12.108:2379 snapshot save /backup/etcd-108-${RAND}-snapshot.db
              etcdctl --endpoints=https://192.168.12.109:2379 snapshot save /backup/etcd-109-${RAND}-snapshot.db
            volumeMounts:
            - name: "pki"
              mountPath: "/etc/kubernetes"
            - name: "backup"
              mountPath: "/backup"
            imagePullPolicy: IfNotPresent
          volumes:
          - name: "pki"
            hostPath:
              path: "/etc/kubernetes"
              type: "DirectoryOrCreate"
          - name: "backup"
            hostPath:
              path: "/storage/dev/backup" # backup directory
              type: "DirectoryOrCreate"
          nodeSelector: # pin the Pod to a master node; otherwise the certificates would have to live on NFS shared storage reachable from every node
            node-role.kubernetes.io/master: ""
          restartPolicy: Never
EOF

Apply the CronJob manifest:

kubectl apply -f etcd-database-backup.yaml

# cronjob.batch/etcd-database-backup created

Check the CronJob that was created and the backup Pod it launched:

NAME SCHEDULE SUSPEND ACTIVE LAST SCHEDULE AGE

cronjob.batch/etcd-database-backup */5 * * * * False 0 21s 14m

NAME READY STATUS RESTARTS AGE

pod/etcd-database-backup-27776740-rhzkk 0/1 Completed 0 21s

Check the logs of the scheduled Pod and the backup files:

kubectl logs -f pod/etcd-database-backup-27776740-rhzkk

Snapshot saved at /backup/etcd-107-25615-snapshot.db

Snapshot saved at /backup/etcd-108-25615-snapshot.db

Snapshot saved at /backup/etcd-109-25615-snapshot.db

$ ls -lt # list the backup files, newest first

total 25M

-rw------- 1 root root 8.6M Oct 24 21:12 etcd-107-25615-snapshot.db

-rw------- 1 root root 7.1M Oct 24 21:12 etcd-108-25615-snapshot.db

-rw------- 1 root root 8.8M Oct 24 21:12 etcd-109-25615-snapshot.db

That completes the backup of the etcd snapshot data in the cluster.
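A CronJob that writes three snapshots every five minutes will eventually fill the backup directory. The following is a minimal retention sketch, assuming the etcd-*-snapshot.db naming used above and file names without spaces or newlines; prune_snapshots and its keep-count parameter are hypothetical and not part of the original setup.

```shell
#!/bin/sh
# Hypothetical helper: keep only the newest N snapshot files in a directory.
# $1 = backup directory, $2 = number of snapshots to keep.
prune_snapshots() {
  dir=$1
  keep=$2
  # ls -1t sorts newest first; tail skips the first $keep entries,
  # and everything after them is deleted.
  ls -1t "$dir"/etcd-*-snapshot.db 2>/dev/null |
    tail -n +"$((keep + 1))" |
    while IFS= read -r f; do
      rm -f -- "$f"
    done
}
```

On the node that hosts /storage/dev/backup, a call such as prune_snapshots /storage/dev/backup 30 would keep the thirty most recent files. Whether to prune by count or by age is a design choice; count-based pruning is shown because it never deletes the last remaining backups when the CronJob itself stops running.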

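0x03 Restore Practice

1. Restoring a single-master cluster

Description: When the resource data of a single-master cluster is lost, it can be restored quickly as follows.

Procedure:

Tip: On a single-node K8S cluster, restore with the commands below. Moving the manifests directory away stops the static Pods (etcd and kube-apiserver included); move it back after the restore so that kubelet starts them again.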

mv /etc/kubernetes/manifests/ /etc/kubernetes/manifests-backup/

mv /var/lib/etcd /var/lib/etcd.bak

ETCDCTL_API=3 etcdctl snapshot restore /backup/etcd-212-32616-snapshot.db --data-dir=/var/lib/etcd/ --endpoints=https://10.20.176.212:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key

2. Restoring a multi-master cluster

1. Note that the etcd database here runs inside the Kubernetes cluster; it is not an external, independently deployed etcd.

$ kubectl get pod -n kube-system etcd-devtest-master-212 etcd-devtest-master-213 etcd-devtest-master-214

NAME READY STATUS RESTARTS AGE

etcd-devtest-master-212 1/1 Running 3 134d

etcd-devtest-master-213 1/1 Running 0 134d

etcd-devtest-master-214 1/1 Running 0 134d

2. As mentioned earlier, check the current members and health of the etcd cluster before restoring.

# List the etcd cluster members

ETCDCTL_API=3 etcdctl member list --endpoints=https://10.20.176.212:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key

6286508b550016fe, started, devtest-master-212, https://10.20.176.212:2380, https://10.20.176.212:2379, false

9dd15f852caf8e05, started, devtest-master-214, https://10.20.176.214:2380, https://10.20.176.214:2379, false

e0f23bd90b7c7c0d, started, devtest-master-213, https://10.20.176.213:2380, https://10.20.176.213:2379, false

# Show etcd endpoint status to tell the leader from the followers

ETCDCTL_API=3 etcdctl endpoint status --endpoints=https://10.20.176.212:2379 --endpoints=https://10.20.176.213:2379 --endpoints=https://10.20.176.214:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key --write-out table

# Show etcd endpoint health to spot unhealthy members

ETCDCTL_API=3 etcdctl endpoint health --endpoints=https://10.20.176.212:2379 --endpoints=https://10.20.176.213:2379 --endpoints=https://10.20.176.214:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key

https://10.20.176.212:2379 is healthy: successfully committed proposal: took = 14.686875ms

https://10.20.176.214:2379 is healthy: successfully committed proposal: took = 16.201187ms

https://10.20.176.213:2379 is healthy: successfully committed proposal: took = 18.962462ms

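3. Stop kube-apiserver and etcd on every master machine, then restore each node's etcd data from the backup.

mv /etc/kubernetes/manifests/ /etc/kubernetes/manifests-backup/

# Move /var/lib/etcd out of the way on this node

mv /var/lib/etcd /var/lib/etcd.bak

# Restore from the snapshot. Every member of the etcd cluster can be restored from the same snapshot file; run the command on each master with that node's own --name and --initial-advertise-peer-urls.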

ETCDCTL_API=3 etcdctl snapshot restore /backup/etcd-212-32616-snapshot.db --data-dir=/var/lib/etcd --name=devtest-master-212 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key --initial-cluster-token=etcd-cluster-0 --initial-cluster=devtest-master-212=https://10.20.176.212:2380,devtest-master-213=https://10.20.176.213:2380,devtest-master-214=https://10.20.176.214:2380 --initial-advertise-peer-urls=https://10.20.176.212:2380

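Tip: When a node joins the control plane, static Pod manifests are generated for the API Server, Controller Manager and Scheduler. The kubelet service on the host watches the manifests in /etc/kubernetes/manifests for creation, changes and deletion, and creates, updates or deletes Pods accordingly. The manifests generated in these phases therefore start the Pods of the master components; moving them out of the directory stops those Pods, and moving them back starts them again.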
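Since only --name and --initial-advertise-peer-urls change from master to master, the per-node restore command can be generated rather than edited by hand. This is a sketch under the assumption that the member names and peer URLs are the ones shown in the member list above; restore_cmd is a hypothetical helper that only prints the command so it can be reviewed before it is run on the matching node.

```shell
#!/bin/sh
# Peer list copied from the restore command above.
INITIAL_CLUSTER="devtest-master-212=https://10.20.176.212:2380,devtest-master-213=https://10.20.176.213:2380,devtest-master-214=https://10.20.176.214:2380"

# Hypothetical helper: print (not run) the restore command for one member.
# $1 = member name, $2 = member IP, $3 = snapshot file.
restore_cmd() {
  printf 'ETCDCTL_API=3 etcdctl snapshot restore %s' "$3"
  printf ' --data-dir=/var/lib/etcd --name=%s' "$1"
  printf ' --initial-cluster-token=etcd-cluster-0'
  printf ' --initial-cluster=%s' "$INITIAL_CLUSTER"
  printf ' --initial-advertise-peer-urls=https://%s:2380\n' "$2"
}

restore_cmd devtest-master-213 10.20.176.213 /backup/etcd-212-32616-snapshot.db
```

The TLS flags are left out of the generated command because a snapshot restore works on local files only; adding them, as the original command does, is harmless.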

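4. Then start etcd and the apiserver again (by moving the manifests back into /etc/kubernetes/manifests) and check whether the Pods are back to normal.

# Check the etcd Pods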

$ kubectl get pod -n kube-system -l component=etcd

# Check component health

$ kubectl get componentstatuses

Warning: v1 ComponentStatus is deprecated in v1.19+

NAME STATUS MESSAGE ERROR

scheduler Healthy ok

controller-manager Healthy ok

etcd-0 Healthy {"health":"true","reason":""}

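3. Resolving inconsistent data between etcd members in a K8S cluster

Description: During a routine inspection we found that etcd data was inconsistent: running kubectl get pod -n xxx returned different resources from one call to the next. Querying the cluster status and data directly with etcdctl showed all 3 members as healthy with the same RaftIndex, and the etcd logs contained no errors. The only suspicious sign was that the dbsize of the 3 members differed considerably, which led to the conclusion that the etcd data was inconsistent.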

$ ETCDCTL_API=3 etcdctl endpoint status --endpoints=https://192.168.12.108:2379 --endpoints=https://192.168.12.107:2379 --endpoints=https://192.168.12.109:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key --write-out table

+-----------------------------+------------------+---------+---------+-----------+-----------+------------+

| ENDPOINT | ID | VERSION | DB SIZE | IS LEADER | RAFT TERM | RAFT INDEX |

+-----------------------------+------------------+---------+---------+-----------+-----------+------------+

| https://192.168.12.108:2379 | 2db31a5d67ec1034 | 3.5.1 | 7.4 MB | false | 4 | 72291872 |

| https://192.168.12.107:2379 | 471323846709334f | 3.5.1 | 9.0 MB | false | 4 | 72291872 |

| https://192.168.12.109:2379 | 42efe7cca897d765 | 3.5.1 | 9.2 MB | true | 4 | 72291872 |

+-----------------------------+------------------+---------+---------+-----------+-----------+------------+

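Because the kube-apiserver logs gave us nothing useful either, at first we could only guess that the kube-apiserver cache was failing to update. Just as we were about to dig in from that angle, the colleague reported something even stranger: a Pod he had just created would suddenly vanish from the Pod list returned by kubectl. After repeated tests we found that when listing Pods with kubectl, the Pod sometimes showed up and sometimes did not. The K8s API list operation is not cached; kube-apiserver pulls the data straight from etcd and returns it to the client. So was etcd itself the problem?

etcd is a strongly consistent KV store: once a write has succeeded, two reads should never return different data. Unwilling to take that on faith, we queried the cluster status and data directly with etcdctl. All 3 members were healthy, the RaftIndex matched, and the etcd logs showed no errors; the only suspicious sign was the large difference in dbsize between the 3 members. We then pointed the client at each member's endpoint in turn and counted the keys on each one. The 3 members returned different key counts, with the gap between two members reaching several thousand keys. Querying the newly created Pod directly with etcdctl, some endpoints returned it and others did not.

At this point it was fairly certain that the data on the etcd cluster members really was inconsistent.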
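The per-endpoint key count described above is easy to script. The sketch below assumes the ETCDCTL_* certificate variables are exported as shown earlier; key_counts and counts_consistent are hypothetical names. grep -c . counts only non-empty lines, because --keys-only prints a blank line after every key.

```shell
#!/bin/sh
# Read "endpoint count" lines on stdin; exit 0 if every count is equal, 1 otherwise.
counts_consistent() {
  awk 'NR == 1 { first = $2 } $2 != first { bad = 1 } END { exit bad }'
}

# Hypothetical collector: print one "endpoint count" line per endpoint.
# Relies on the ETCDCTL_* certificate variables. Defined only, not called here.
key_counts() {
  for ep in "$@"; do
    n=$(etcdctl --endpoints="$ep" get / --prefix --keys-only | grep -c .)
    printf '%s %s\n' "$ep" "$n"
  done
}
```

A check could then read key_counts https://192.168.12.107:2379 https://192.168.12.108:2379 https://192.168.12.109:2379 | counts_consistent || echo "key counts differ". Equal counts do not prove the data is identical, so treat this as a quick smoke test rather than a full comparison.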

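Solution approach

In practice: restore the etcd data of weiyigeek-109 onto the weiyigeek-107 and weiyigeek-108 members.

# 1. Export the data of the etcd member with the largest DB SIZE (always back up every etcd member before you start).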

etcdctl --endpoints=https://192.168.12.107:2379 snapshot save /backup/etcd-107-snapshot.db

etcdctl --endpoints=https://192.168.12.108:2379 snapshot save /backup/etcd-108-snapshot.db

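etcdctl --endpoints=https://192.168.12.109:2379 snapshot save /backup/etcd-109-snapshot.db # the member with the most complete etcd data (it is the leader)

# Optional: if the leader has to be moved, run the following against the current leader's endpoint to hand leadership to weiyigeek-109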

etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key --endpoints=192.168.12.107:2379 move-leader 42efe7cca897d765

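# 2. Back up the etcd data directory on the faulty node

mv /var/lib/etcd /var/lib/etcd.bak

# Or archive it instead

tar -czvf etcd-20230308bak.tar.gz /var/lib/etcd

# 3. Remove the faulty member from the etcd cluster. Note that the endpoint given here is the healthy leader.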

$ etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key --endpoints=192.168.12.109:2379 member list

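# Remove the faulty weiyigeek-107 member (ID 471323846709334f in the member list shown earlier); repeat with 2db31a5d67ec1034 for weiyigeek-108

$ etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key --endpoints=192.168.12.109:2379 member remove 471323846709334f

# 4. Stop the etcd service on weiyigeek-107 and adjust its manifest, then add the node to the etcd cluster again.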

$ mv /etc/kubernetes/manifests/etcd.yaml /etc/kubernetes/manifests-backup/etcd.yaml

$ vim /etc/kubernetes/manifests-backup/etcd.yaml

- --initial-cluster=weiyigeek-109=https://192.168.12.109:2380,weiyigeek-108=https://192.168.12.108:2380,weiyigeek-107=https://192.168.12.107:2380

- --initial-cluster-state=existing

$ kubectl exec -it -n kube-system etcd-weiyigeek-109 -- sh # run from a member that is still up; the etcd Pod on weiyigeek-107 is stopped at this point

$ export ETCDCTL_API=3

$ ETCD_ENDPOINTS=192.168.12.109:2379

$ etcdctl --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key --endpoints=$ETCD_ENDPOINTS member add weiyigeek-107 --peer-urls=https://192.168.12.107:2380

Member 8cecf1f50b91e502 added to cluster 71d4ff56f6fd6e70

# 5. Once the member has been added, start the etcd service on weiyigeek-107

mv /etc/kubernetes/manifests-backup/etcd.yaml /etc/kubernetes/manifests/etcd.yaml

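Verification

After the fix the members start normally and the data is consistent again: every etcd member holds the same number of keys (1976) and reports the same DB size.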

$ for count in {107..109};do ETCDCTL_API=3 etcdctl get / --prefix --keys-only --endpoints=https://192.168.12.${count}:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key | wc -l;done

1976

$ for count in {107..109};do ETCDCTL_API=3 etcdctl endpoint status --endpoints=https://192.168.12.${count}:2379 --cacert=/etc/kubernetes/pki/etcd/ca.crt --cert=/etc/kubernetes/pki/etcd/peer.crt --key=/etc/kubernetes/pki/etcd/peer.key ;done

https://192.168.12.107:2379, 8cecf1f50b91e502, 3.5.1, 9.2 MB, false, 9, 72291872

https://192.168.12.108:2379, 35672f075108e36a, 3.5.1, 9.2 MB, false, 9, 72291872

https://192.168.12.109:2379, 42efe7cca897d765, 3.5.1, 9.2 MB, true, 9, 72291872

That completes the diagnosis and recovery of inconsistent etcd data in a K8S cluster.

Original article: https://blog.weiyigeek.top/2022/10-15-690.html

This article comes from cnblogs (博客園), author: 全棧工程師修煉指南. Please credit the original link when reposting: http://www.rzrgm.cn/WeiyiGeek/p/17192341.html.
