Notes on "Deep Learning with Python" (Part 2): Keras Basics

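Common Keras datasets

See the "Datasets" page of the Keras Chinese documentation.

Downloading from the official site is slow, so I have packaged the already-downloaded data (see link, extraction code: 8j6x). After downloading, use it to replace the datasets folder in C:\Users\Administrator\.keras.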

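Basic concepts

- In machine learning, a category in a classification problem is called a class. Data points are called samples. The class associated with a specific sample is called its label.

- Tensor: a container for data. It generalizes matrices to an arbitrary number of dimensions. In the context of tensors, a dimension is usually called an axis.

Scalars (0D tensors)

- A tensor that contains only one number is called a scalar. It is also called a scalar tensor, zero-dimensional tensor, or 0D tensor.

- The number of axes of a tensor is also called its rank.

- A scalar tensor has 0 axes.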

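Vectors (1D tensors)

- An array of numbers is called a vector, or 1D tensor. A 1D tensor has exactly one axis.

```python
import numpy as np

x = np.array([12, 3, 6, 14, 7])
x.ndim
```

1

This vector has five entries, so it is called a 5-dimensional vector (5D vector).

Difference between a 5D vector and a 5D tensor:

A 5D vector has only one axis, with five dimensions along that axis. A 5D tensor has five axes, and may have any number of dimensions along each axis.

Dimension: the number of entries along a specific axis.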

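Matrices (2D tensors)

- An array of vectors is called a matrix, or 2D tensor. A matrix has two axes (rows and columns).

- The entries along the first axis are called rows, and the entries along the second axis are called columns.

3D tensors and higher-rank tensors

- Packing several matrices into a new array gives a 3D tensor.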

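Key attributes of a tensor:

- Number of axes (rank): the tensor's ndim.

- Shape: a tuple of integers giving the number of dimensions along each axis.

- Data type (usually called dtype in Python libraries).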
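A quick NumPy sketch of these three attributes. It covers the scalar, vector, matrix and 3D tensor cases described so far, plus the "5D vector vs 5D tensor" distinction. The array values are arbitrary, and the printed dtype depends on the platform.

```python
import numpy as np

# 0D tensor (scalar): no axes
x0 = np.array(12)
# 1D tensor (vector) with 5 entries: one axis, i.e. a "5D vector"
x1 = np.array([12, 3, 6, 14, 7])
# 2D tensor (matrix): two axes (rows and columns)
x2 = np.array([[5, 78, 2, 34, 0],
               [6, 79, 3, 35, 1],
               [7, 80, 4, 36, 2]])
# 3D tensor: pack several matrices along a new first axis
x3 = np.stack([x2, x2])

for name, t in [("x0", x0), ("x1", x1), ("x2", x2), ("x3", x3)]:
    print(name, "ndim =", t.ndim, "shape =", t.shape, "dtype =", t.dtype)

# A "5D tensor" has five axes, unlike the 5D vector x1 which has one
x5 = np.zeros((2, 3, 4, 5, 6))
print(x5.ndim)  # 5
```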

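Data batches:

- In general, the first axis (axis 0, since indexing starts at 0) of every data tensor in deep learning is the samples axis (sometimes called the samples dimension).

- Deep learning models process data in small batches rather than all at once. For example:

```python
batch = train_images[:128]
batch = train_images[128:256]
```

For such batch tensors, the first axis (axis 0) is called the batch axis or batch dimension.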
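A minimal sketch of splitting a dataset into batches along axis 0. It assumes an MNIST-like `train_images` array, replaced here by a small all-zero stand-in of 1000 samples. The `get_batch` helper is hypothetical and only illustrates the slicing pattern shown above.

```python
import numpy as np

# Stand-in for train_images (assumption: MNIST-like, samples x 28 x 28)
train_images = np.zeros((1000, 28, 28), dtype="uint8")
batch_size = 128

def get_batch(data, n, batch_size=128):
    # Hypothetical helper: the n-th batch is a slice along axis 0 (the batch axis)
    return data[batch_size * n : batch_size * (n + 1)]

first = get_batch(train_images, 0, batch_size)   # same as train_images[:128]
second = get_batch(train_images, 1, batch_size)  # same as train_images[128:256]
print(first.shape, second.shape)  # (128, 28, 28) (128, 28, 28)

# Iterate over all batches; the last one may be smaller than batch_size
n_batches = int(np.ceil(len(train_images) / batch_size))
sizes = [len(get_batch(train_images, n, batch_size)) for n in range(n_batches)]
print(n_batches, sizes[-1])  # 8 104
```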

Common data tensors:

- Vector data: 2D tensors of shape (samples, features), i.e. a samples axis and a features axis;

- Time-series or sequence data: 3D tensors of shape (samples, timesteps, features);

- Images: 4D tensors of shape (samples, height, width, channels) or (samples, channels, height, width);

- Video: 5D tensors of shape (samples, frames, height, width, channels) or (samples, frames, channels, height, width).
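The two image layouts above (channels-last vs channels-first) hold the same data with the axes in a different order. A small NumPy sketch converts one into the other with `np.transpose`. The random arrays are stand-ins, and the video shape is deliberately tiny.

```python
import numpy as np

# 4D image batch, channels-last: (samples, height, width, channels)
images_last = np.random.rand(16, 32, 32, 3)
# Reorder the axes to channels-first: (samples, channels, height, width)
images_first = np.transpose(images_last, (0, 3, 1, 2))
print(images_first.shape)  # (16, 3, 32, 32)

# The same pixel value is found at permuted indices
print(images_last[0, 5, 7, 1] == images_first[0, 1, 5, 7])  # True

# 5D video batch: (samples, frames, height, width, channels)
videos = np.zeros((2, 10, 64, 64, 3), dtype="float32")
print(videos.ndim)  # 5
```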

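Element-wise operations

- relu and addition are element-wise operations: they are applied independently to each entry of the tensors involved.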
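To make "element-wise" concrete, here are naive loop versions of relu and addition for 2D tensors, checked against NumPy's vectorized operations. The helper names `naive_relu` and `naive_add` are illustrative only. In practice NumPy's built-ins are far faster.

```python
import numpy as np

def naive_relu(x):
    # x must be a 2D NumPy tensor; relu is applied to each entry independently
    assert x.ndim == 2
    x = x.copy()  # avoid overwriting the input tensor
    for i in range(x.shape[0]):
        for j in range(x.shape[1]):
            x[i, j] = max(x[i, j], 0)
    return x

def naive_add(x, y):
    # Element-wise addition requires both tensors to have the same shape
    assert x.ndim == 2 and x.shape == y.shape
    x = x.copy()
    for i in range(x.shape[0]):
        for j in range(x.shape[1]):
            x[i, j] += y[i, j]
    return x

x = np.array([[-1.0, 2.0], [3.0, -4.0]])
y = np.ones((2, 2))
print(naive_relu(x))                           # same as np.maximum(x, 0.)
print(np.array_equal(naive_add(x, y), x + y))  # True
```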

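Broadcasting:

When two tensors of different shapes are combined, the smaller tensor is broadcast to match the shape of the larger one (when this is unambiguous).

Steps:

- Axes (called broadcast axes) are added to the smaller tensor so that its ndim matches that of the larger tensor.

- The smaller tensor is repeated along these new axes so that its shape matches that of the larger tensor.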
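The two broadcasting steps can be spelled out explicitly for a matrix plus a vector. NumPy performs the same computation implicitly and without copying data; the explicit `np.repeat` below exists only to show the steps. `naive_broadcast_add` is an illustrative name.

```python
import numpy as np

def naive_broadcast_add(x, y):
    # x: 2D tensor (rows, cols); y: 1D tensor (cols,)
    assert x.ndim == 2 and y.ndim == 1 and x.shape[1] == y.shape[0]
    # Step 1: add a broadcast axis so y has the same ndim as x -> (1, cols)
    y2 = y[np.newaxis, :]
    # Step 2: repeat y along the new axis so the shapes match -> (rows, cols)
    y2 = np.repeat(y2, x.shape[0], axis=0)
    return x + y2  # now an ordinary element-wise addition

x = np.random.random((32, 10))
y = np.random.random((10,))
print(np.allclose(naive_broadcast_add(x, y), x + y))  # True

# Broadcasting also works with higher-rank tensors
a = np.random.random((64, 3, 32, 10))
b = np.random.random((32, 10))
print(np.maximum(a, b).shape)  # (64, 3, 32, 10)
```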

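Tensor dot

- The dot operation, also called a tensor product (not to be confused with an element-wise product).

- It is available in NumPy, Keras, and TensorFlow.

- In NumPy, `*` performs an element-wise product, while np.dot() performs the dot product.

Condition for a matrix dot product: the number of columns of the first matrix (the dimension of axis 1) must equal the number of rows of the second matrix (the dimension of axis 0).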
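A naive matrix dot product makes the shape condition explicit. Each output entry z[i, j] is the sum of the element-wise product of row i of x and column j of y. The function name `naive_matrix_dot` is illustrative; it is checked against `np.dot`.

```python
import numpy as np

def naive_matrix_dot(x, y):
    # Condition: x.shape[1] (axis 1 of x) must equal y.shape[0] (axis 0 of y)
    assert x.ndim == 2 and y.ndim == 2
    assert x.shape[1] == y.shape[0]
    z = np.zeros((x.shape[0], y.shape[1]))
    for i in range(x.shape[0]):        # rows of x
        for j in range(y.shape[1]):    # columns of y
            z[i, j] = np.sum(x[i, :] * y[:, j])  # element-wise product, then sum
    return z

x = np.random.random((3, 4))
y = np.random.random((4, 2))
print(naive_matrix_dot(x, y).shape)                      # (3, 2)
print(np.allclose(naive_matrix_dot(x, y), np.dot(x, y)))  # True

# By contrast, `*` is element-wise and keeps the operands' (broadcast) shape
print((x * x).shape)  # (3, 4)
```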

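Introduction to Keras

Keras is a deep learning framework for Python.

Key features:

- The same code runs seamlessly on CPU or GPU;

- A user-friendly API that makes it easy to quickly prototype deep learning models;

- Built-in support for convolutional networks (for computer vision), recurrent networks (for sequence processing), and any combination of both;

- Support for arbitrary network architectures: multi-input or multi-output models, layer sharing, model sharing, and so on.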

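Keras is a model-level library that provides high-level building blocks for developing deep learning models. It does not handle low-level operations such as tensor manipulation and differentiation. Instead, it relies on a specialized, well-optimized tensor library to do so, which serves as the backend engine of Keras. Keras does not pick a single tensor library and tie its implementation to it. It handles the problem in a modular way, so several different backend engines can be plugged seamlessly into Keras. At the time of writing, there are three backend implementations: the TensorFlow backend, the Theano backend, and the Microsoft Cognitive Toolkit (CNTK) backend. In the future, Keras may be extended to work with more deep learning engines.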

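Keras workflow:

- Define the training data: input tensors and target tensors;

- Define a network (or model) of layers that maps the inputs to the targets;

- Configure the learning process by choosing a loss function, an optimizer, and the metrics to monitor;

- Iterate over the training data by calling the model's fit() method.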

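Example of the build process:

1. Layers: the building blocks of deep learning

```python
from keras import models
from keras import layers

model = models.Sequential()
model.add(layers.Dense(32, input_shape=(784,)))
# The second layer has no input shape specified; it is inferred automatically
model.add(layers.Dense(32))
```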

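2. Models: networks of layers

A deep learning model is a directed acyclic graph of layers. Common topologies include:

- Two-branch networks

- Multihead networks

- Inception blocks

3. Loss functions and optimizers: the keys to configuring the learning process

- Loss function (objective function): the quantity that is minimized during training. It measures how well the task at hand is being solved.

- Optimizer: determines how the network is updated based on the loss function. It implements a specific variant of stochastic gradient descent (SGD).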

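Case 1: Classifying movie reviews (a binary classification problem)

Dataset: IMDB. It contains 50,000 highly polarized reviews from the Internet Movie Database (IMDB). The reviews are split into 25,000 for training and 25,000 for testing. Both the training set and the test set consist of 50% positive and 50% negative reviews.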

```python
# Load the dataset
from keras.datasets import imdb

# Keep only the 10,000 most frequently occurring words in the training data
(train_data, train_labels), (test_data, test_labels) = imdb.load_data('imdb.npz', num_words=10000)

# Each review is a list of word indices
print(train_data[0])
```

[1, 14, 22, 16, 43, 530, 973, 1622, 1385, 65, 458, 4468, 66, 3941, 4, 173, 36, 256, 5, 25, 100, 43, 838, 112, 50, 670, 2, 9, 35, 480, 284, 5, 150, 4, 172, 112, 167, 2, 336, 385, 39, 4, 172, 4536, 1111, 17, 546, 38, 13, 447, 4, 192, 50, 16, 6, 147, 2025, 19, 14, 22, 4, 1920, 4613, 469, 4, 22, 71, 87, 12, 16, 43, 530, 38, 76, 15, 13, 1247, 4, 22, 17, 515, 17, 12, 16, 626, 18, 2, 5, 62, 386, 12, 8, 316, 8, 106, 5, 4, 2223, 5244, 16, 480, 66, 3785, 33, 4, 130, 12, 16, 38, 619, 5, 25, 124, 51, 36, 135, 48, 25, 1415, 33, 6, 22, 12, 215, 28, 77, 52, 5, 14, 407, 16, 82, 2, 8, 4, 107, 117, 5952, 15, 256, 4, 2, 7, 3766, 5, 723, 36, 71, 43, 530, 476, 26, 400, 317, 46, 7, 4, 2, 1029, 13, 104, 88, 4, 381, 15, 297, 98, 32, 2071, 56, 26, 141, 6, 194, 7486, 18, 4, 226, 22, 21, 134, 476, 26, 480, 5, 144, 30, 5535, 18, 51, 36, 28, 224, 92, 25, 104, 4, 226, 65, 16, 38, 1334, 88, 12, 16, 283, 5, 16, 4472, 113, 103, 32, 15, 16, 5345, 19, 178, 32]

```python
# 0 stands for negative, 1 for positive
print(train_labels[0])
```

1

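```python
# word_index is a dictionary mapping words to integer indices
# (the get_word_index() data file can be downloaded separately and saved to the root directory)
word_index = imdb.get_word_index()

# Reverse it, mapping integer indices to words
reverse_word_index = dict([(value, key) for (key, value) in word_index.items()])

# Decode the review; indices are offset by 3 and unknown indices become "?"
decoded_review = " ".join([reverse_word_index.get(i - 3, "?") for i in train_data[0]])
print(decoded_review)
```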

? this film was just brilliant casting location scenery story direction everyone's really suited the part they played and you could just imagine being there robert ? is an amazing actor and now the same being director ? father came from the same scottish island as myself so i loved the fact there was a real connection with this film the witty remarks throughout the film were great it was just brilliant so much that i bought the film as soon as it was released for ? and would recommend it to everyone to watch and the fly fishing was amazing really cried at the end it was so sad and you know what they say if you cry at a film it must have been good and this definitely was also ? to the two little boy's that played the ? of norman and paul they were just brilliant children are often left out of the ? list i think because the stars that play them all grown up are such a big profile for the whole film but these children are amazing and should be praised for what they have done don't you think the whole story was so lovely because it was true and was someone's life after all that was shared with us all

Note that the indices are offset by 3, because 0, 1, and 2 are reserved indices for "padding", "start of sequence", and "unknown", respectively.
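The offset can be checked on a toy example. The small `word_index` dictionary and the encoded review below are made-up stand-ins, not the real IMDB data. The shift of 3 corresponds, as far as I know, to the `index_from=3` default of Keras's `load_data`.

```python
# Toy stand-in for imdb.get_word_index(): word -> rank (1 = most frequent)
word_index = {"the": 1, "film": 2, "was": 3, "brilliant": 4}
reverse_word_index = {value: key for key, value in word_index.items()}

# Hypothetical encoded review: 1 = start of sequence, 2 = unknown word,
# real words are stored as rank + 3
encoded = [1, 4, 5, 6, 7, 2]

# Reserved indices (i - 3 < 1) are not in the dictionary and decode to "?"
decoded = " ".join(reverse_word_index.get(i - 3, "?") for i in encoded)
print(decoded)  # ? the film was brilliant ?
```

This also explains why the real decoded review above starts with "?": its first index is the start-of-sequence marker.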

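```python
import numpy as np

# Prepare the data: one-hot (multi-hot) encode the integer sequences
def vectorize_sequences(sequences, dimension=10000):
    # All-zero matrix of shape (len(sequences), dimension)
    results = np.zeros((len(sequences), dimension))
    for i, sequence in enumerate(sequences):
        # Set the entries at this sequence's word indices to 1
        results[i, sequence] = 1.
    return results
```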

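There are two ways to turn the lists into tensors:

- Pad the lists so that they all have the same length, and turn them into an integer tensor of shape (samples, word_indices). Then use, as the first layer of the network, a layer capable of handling such integer tensors (the Embedding layer);

- One-hot encode the lists, turning them into vectors of 0s and 1s.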
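Both options can be sketched side by side on two toy sequences of unequal length. `pad_lists` is a hypothetical helper that pads with 0 at the end. Keras also provides a `pad_sequences` utility for this; check its `padding` argument, since I believe it pads at the front by default. The small `vectorize_sequences` mirrors the function above with a tiny dimension.

```python
import numpy as np

sequences = [[3, 7, 2], [5, 1]]  # toy word-index lists of unequal length

def pad_lists(seqs, maxlen):
    # Option 1: integer tensor (samples, word_indices), 0 used as padding,
    # the kind of input an Embedding layer expects
    out = np.zeros((len(seqs), maxlen), dtype="int32")
    for i, s in enumerate(seqs):
        s = s[:maxlen]          # truncate sequences longer than maxlen
        out[i, :len(s)] = s     # pad at the end
    return out

def vectorize_sequences(seqs, dimension=10):
    # Option 2: multi-hot float tensor (samples, dimension)
    results = np.zeros((len(seqs), dimension))
    for i, s in enumerate(seqs):
        results[i, s] = 1.0
    return results

print(pad_lists(sequences, 4))
# [[3 7 2 0]
#  [5 1 0 0]]
print(vectorize_sequences(sequences))
```

Note that multi-hot encoding discards word order and counts, while padding keeps the sequence intact.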

```python
# Vectorize the data
x_train = vectorize_sequences(train_data)
x_test = vectorize_sequences(test_data)
x_train[0]
```

array([0., 1., 1., ..., 0., 0., 0.])

```python
# Vectorize the labels
y_train = np.asarray(train_labels).astype('float32')
y_test = np.asarray(test_labels).astype('float32')
print(y_train)
```

[1. 0. 0. ... 0. 1. 0.]

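Building the network

Each Dense layer with a relu activation implements the following tensor operation: output = relu(dot(W, input) + b)

For such a stack of Dense layers, you need to make two key architecture decisions:

- How many layers to use;

- How many hidden units to use in each layer.

The architecture used in this section:

- Two intermediate layers with 16 hidden units each;

- A third layer that outputs a scalar prediction of the sentiment of the current review.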

```python
# Model definition
from keras import models
from keras import layers

model = models.Sequential()
model.add(layers.Dense(16, activation='relu', input_shape=(10000,)))
model.add(layers.Dense(16, activation='relu'))
model.add(layers.Dense(1, activation='sigmoid'))
```

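What are activation functions, and why are they necessary?

Without an activation function such as relu (also called a non-linearity), a Dense layer consists of only two linear operations, a dot product and an addition:

output = dot(W, input) + b

Such a layer can only learn linear (affine) transformations of the input data. Its hypothesis space is the set of all possible linear transformations of the input data into a 16-dimensional space. This hypothesis space is too restricted to benefit from multiple layers of representations, because a stack of linear layers still implements a linear operation: adding more layers does not extend the hypothesis space. To get a much richer hypothesis space that benefits from deep representations, you need a non-linearity, or activation function. relu is the most popular activation function in deep learning. There are many other candidates, all with similarly odd names, such as prelu and elu.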
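The collapse argument can be verified numerically. Below is a minimal NumPy sketch of a Dense forward pass. It follows the Keras convention, as I understand it, of a kernel shaped (input_dim, units) applied as dot(input, W) + b with samples on axis 0. Two stacked linear layers are shown to equal a single linear layer. The `dense` helper and the random weights are illustrative assumptions.

```python
import numpy as np

rng = np.random.default_rng(0)

def dense(x, W, b, activation=None):
    # x: (batch, in_features), W: (in_features, units), b: (units,)
    out = np.dot(x, W) + b  # b is broadcast over the batch axis
    return np.maximum(out, 0.0) if activation == "relu" else out

x = rng.random((4, 8))
W1, b1 = rng.standard_normal((8, 16)), rng.standard_normal(16)
W2, b2 = rng.standard_normal((16, 16)), rng.standard_normal(16)

# Two stacked linear layers (no activation) ...
two_linear = dense(dense(x, W1, b1), W2, b2)
# ... equal ONE linear layer: (x W1 + b1) W2 + b2 = x (W1 W2) + (b1 W2 + b2)
W, b = np.dot(W1, W2), np.dot(b1, W2) + b2
print(np.allclose(two_linear, dense(x, W, b)))  # True

# With relu in between, no single (W, b) reproduces the mapping in general
two_relu = dense(dense(x, W1, b1, "relu"), W2, b2)
print(two_relu.shape)  # (4, 16)
```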

```python
# Loss function and optimizer
# 1. Compile the model
model.compile(optimizer='rmsprop',
              loss='binary_crossentropy',
              metrics=['accuracy'])
```

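Note:

The code above passes the optimizer, loss function, and metric as strings. This works because rmsprop, binary_crossentropy, and accuracy are built into Keras. Sometimes you may want to configure the parameters of the optimizer, or pass a custom loss function or metric function. For the former, pass an optimizer class instance as the optimizer argument. For the latter, pass function objects as the loss and/or metrics arguments, as shown below.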

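```python
# Configure the optimizer
from keras import optimizers
from keras import metrics

# Newer Keras versions name this argument learning_rate (lr is deprecated/removed there)
model.compile(optimizer=optimizers.RMSprop(lr=0.001),
              loss='binary_crossentropy',
              metrics=[metrics.binary_accuracy])
```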

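```python
# Set aside a validation set of 10,000 samples
x_val = x_train[:10000]
partial_x_train = x_train[10000:]
y_val = y_train[:10000]
partial_y_train = y_train[10000:]

# Train the model for 20 epochs (20 iterations over all training samples)
# in mini-batches of 512 samples
history = model.fit(partial_x_train,
                    partial_y_train,
                    epochs=20,
                    batch_size=512,
                    validation_data=(x_val, y_val))
```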

Train on 15000 samples, validate on 10000 samples

Epoch 1/20

15000/15000 [==============================] - 10s 692us/step - loss: 0.5350 - binary_accuracy: 0.7935 - val_loss: 0.4228 - val_binary_accuracy: 0.8566

Epoch 2/20

15000/15000 [==============================] - 7s 449us/step - loss: 0.3324 - binary_accuracy: 0.9013 - val_loss: 0.3218 - val_binary_accuracy: 0.8852

Epoch 3/20

15000/15000 [==============================] - 5s 335us/step - loss: 0.2418 - binary_accuracy: 0.9261 - val_loss: 0.2933 - val_binary_accuracy: 0.8861

Epoch 4/20

15000/15000 [==============================] - 5s 314us/step - loss: 0.1931 - binary_accuracy: 0.9385 - val_loss: 0.2746 - val_binary_accuracy: 0.8912

Epoch 5/20

15000/15000 [==============================] - 5s 308us/step - loss: 0.1587 - binary_accuracy: 0.9505 - val_loss: 0.2757 - val_binary_accuracy: 0.8897

Epoch 6/20

15000/15000 [==============================] - 5s 320us/step - loss: 0.1308 - binary_accuracy: 0.9597 - val_loss: 0.2837 - val_binary_accuracy: 0.8864

Epoch 7/20

15000/15000 [==============================] - 5s 340us/step - loss: 0.1110 - binary_accuracy: 0.9661 - val_loss: 0.2984 - val_binary_accuracy: 0.8849

Epoch 8/20

15000/15000 [==============================] - 5s 333us/step - loss: 0.0908 - binary_accuracy: 0.9733 - val_loss: 0.3157 - val_binary_accuracy: 0.8849

Epoch 9/20

15000/15000 [==============================] - 5s 332us/step - loss: 0.0754 - binary_accuracy: 0.9790 - val_loss: 0.3344 - val_binary_accuracy: 0.8809

Epoch 10/20

15000/15000 [==============================] - 5s 326us/step - loss: 0.0638 - binary_accuracy: 0.9836 - val_loss: 0.3696 - val_binary_accuracy: 0.8777

Epoch 11/20

15000/15000 [==============================] - 5s 344us/step - loss: 0.0510 - binary_accuracy: 0.9877 - val_loss: 0.3839 - val_binary_accuracy: 0.8770

Epoch 12/20

15000/15000 [==============================] - 5s 300us/step - loss: 0.0424 - binary_accuracy: 0.9907 - val_loss: 0.4301 - val_binary_accuracy: 0.8704

Epoch 13/20

15000/15000 [==============================] - 5s 314us/step - loss: 0.0362 - binary_accuracy: 0.9919 - val_loss: 0.4360 - val_binary_accuracy: 0.8746

Epoch 14/20

15000/15000 [==============================] - 5s 307us/step - loss: 0.0304 - binary_accuracy: 0.9936 - val_loss: 0.5087 - val_binary_accuracy: 0.8675

Epoch 15/20

15000/15000 [==============================] - 5s 313us/step - loss: 0.0212 - binary_accuracy: 0.9971 - val_loss: 0.4931 - val_binary_accuracy: 0.8710

Epoch 16/20

15000/15000 [==============================] - 5s 340us/step - loss: 0.0191 - binary_accuracy: 0.9969 - val_loss: 0.5738 - val_binary_accuracy: 0.8661

Epoch 17/20

15000/15000 [==============================] - 5s 344us/step - loss: 0.0138 - binary_accuracy: 0.9987 - val_loss: 0.5637 - val_binary_accuracy: 0.8697

Epoch 18/20

15000/15000 [==============================] - 6s 395us/step - loss: 0.0129 - binary_accuracy: 0.9983 - val_loss: 0.5944 - val_binary_accuracy: 0.8665

Epoch 19/20

15000/15000 [==============================] - 5s 357us/step - loss: 0.0075 - binary_accuracy: 0.9996 - val_loss: 0.6259 - val_binary_accuracy: 0.8671

Epoch 20/20

15000/15000 [==============================] - 5s 301us/step - loss: 0.0077 - binary_accuracy: 0.9991 - val_loss: 0.6556 - val_binary_accuracy: 0.8670

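```python
history
```

Note that the call to model.fit() returns a History object. This object has a member history, which is a dictionary containing data about everything that happened during training.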

```python
history_dict = history.history
history_dict.keys()
```

dict_keys(['val_loss', 'val_binary_accuracy', 'loss', 'binary_accuracy'])

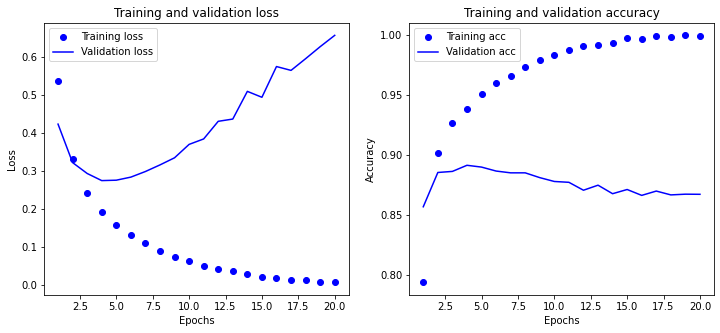

```python
import matplotlib.pyplot as plt

loss_values = history_dict['loss']
val_loss_values = history_dict['val_loss']
epochs = range(1, len(loss_values) + 1)

fig, axes = plt.subplots(nrows=1, ncols=2, figsize=(12, 5))
Axes = axes.flatten()

# Plot training and validation loss ('bo' = blue dots, 'b' = solid blue line)
Axes[0].plot(epochs, loss_values, 'bo', label='Training loss')
Axes[0].plot(epochs, val_loss_values, 'b', label='Validation loss')
Axes[0].set_title('Training and validation loss')
Axes[0].set_xlabel('Epochs')
Axes[0].set_ylabel('Loss')
Axes[0].legend()

# Plot training and validation accuracy
acc = history_dict['binary_accuracy']
val_acc = history_dict['val_binary_accuracy']
Axes[1].plot(epochs, acc, 'bo', label='Training acc')
Axes[1].plot(epochs, val_acc, 'b', label='Validation acc')
Axes[1].set_title('Training and validation accuracy')
Axes[1].set_xlabel('Epochs')
Axes[1].set_ylabel('Accuracy')
Axes[1].legend()

plt.show()
```
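The validation loss in the 20-epoch log above bottoms out early and then rises as the model overfits. This is why the next step retrains for only 4 epochs. A small sketch picks that epoch programmatically. The values are copied from the log; with a live History object you would read `history_dict['val_loss']` instead. Keras also offers an `EarlyStopping` callback in `keras.callbacks` that automates this kind of stopping.

```python
# val_loss values copied from the 20-epoch IMDB training log above
val_loss = [0.4228, 0.3218, 0.2933, 0.2746, 0.2757, 0.2837, 0.2984, 0.3157,
            0.3344, 0.3696, 0.3839, 0.4301, 0.4360, 0.5087, 0.4931, 0.5738,
            0.5637, 0.5944, 0.6259, 0.6556]

# Epochs are numbered from 1, list indices from 0
best_epoch = min(range(len(val_loss)), key=val_loss.__getitem__) + 1
print(best_epoch, val_loss[best_epoch - 1])  # 4 0.2746
```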

```python
# Retrain a new network from scratch, this time for 4 epochs
model = models.Sequential()
model.add(layers.Dense(16, activation='relu', input_shape=(10000,)))
model.add(layers.Dense(16, activation='relu'))
model.add(layers.Dense(1, activation='sigmoid'))

model.compile(optimizer='rmsprop',
              loss='binary_crossentropy',
              metrics=['accuracy'])

model.fit(x_train, y_train, epochs=4, batch_size=512)
results = model.evaluate(x_test, y_test)
```

Epoch 1/4

25000/25000 [==============================] - 6s 252us/step - loss: 0.4470 - acc: 0.8242

Epoch 2/4

25000/25000 [==============================] - 6s 224us/step - loss: 0.2557 - acc: 0.9103

Epoch 3/4

25000/25000 [==============================] - 6s 253us/step - loss: 0.1969 - acc: 0.9303

Epoch 4/4

25000/25000 [==============================] - 6s 233us/step - loss: 0.1645 - acc: 0.9422

25000/25000 [==============================] - 24s 955us/step

```python
# Use the trained network to generate predictions on new data
model.predict(x_test)
```

array([[0.29281223],

[0.99967945],

[0.93715465],

...,

[0.16654661],

[0.15739296],

[0.7685658 ]], dtype=float32)

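Summary

- You usually need to do quite a bit of preprocessing on raw data before you can feed it, as tensors, into a neural network. Sequences of words can be encoded as binary vectors, but there are other encoding options too.

- Stacks of Dense layers with relu activations can solve a wide range of problems (including sentiment classification), and you will likely use them frequently.

- In a binary classification problem (two output classes), the network should end with a Dense layer with one unit and a sigmoid activation. The output of the network should be a scalar between 0 and 1, encoding a probability.

- With such a scalar sigmoid output on a binary classification problem, the loss function to use is binary_crossentropy.

- The rmsprop optimizer is generally a good enough choice, whatever your problem. That is one less thing to worry about.

- As neural networks get better on their training data, they eventually start overfitting and obtain increasingly worse results on data they have never seen before. Always monitor performance on data outside the training set.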
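The sigmoid and binary_crossentropy points can be made concrete with a NumPy sketch of what the last layer and the loss compute. It is not Keras's implementation. The clipping constant `eps` and the example logits are assumptions for illustration.

```python
import numpy as np

def sigmoid(z):
    # Squashes any real number into (0, 1)
    return 1.0 / (1.0 + np.exp(-z))

def binary_crossentropy(y_true, y_pred, eps=1e-7):
    # Clip predictions away from 0 and 1 so log() stays finite (eps is an assumed value)
    y_pred = np.clip(y_pred, eps, 1.0 - eps)
    return float(-np.mean(y_true * np.log(y_pred) + (1.0 - y_true) * np.log(1.0 - y_pred)))

logits = np.array([2.0, -1.0, 0.0])  # hypothetical pre-activation outputs of Dense(1)
probs = sigmoid(logits)              # roughly 0.881, 0.269, 0.5
y_true = np.array([1.0, 0.0, 1.0])

print(probs)
print(binary_crossentropy(y_true, probs))
# Threshold at 0.5 to turn probabilities into class predictions
print((probs > 0.5).astype("int32"))  # [1 0 0]
```

The loss is small when the predicted probability of the true class is close to 1, and it grows without bound as that probability approaches 0.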

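Case 2: Classifying newswires (a multiclass classification problem)

- Task: build a network that classifies Reuters newswires into 46 mutually exclusive topics. Because there are many classes, this is an instance of multiclass classification. Each data point should be classified into only one category, so it is more specifically an instance of single-label, multiclass classification. If each data point could belong to multiple categories (topics), it would be a multilabel, multiclass classification problem.

- Dataset: reuters

This section uses the Reuters dataset, a set of short newswires and their topics published by Reuters in 1986. It is a simple, widely used dataset for text classification. There are 46 different topics. Some topics are more represented than others, but each topic has at least 10 examples in the training set.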

```python
# Load the data
from keras.datasets import reuters

(train_data, train_labels), (test_data, test_labels) = reuters.load_data(num_words=10000)
print(train_data.shape, test_data.shape)
```

(8982,) (2246,)

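```python
# Decode the indices back into newswire text
word_index = reuters.get_word_index()
reverse_word_index = dict([(value, key) for (key, value) in word_index.items()])

# As with IMDB, indices are offset by 3 (0, 1, 2 are reserved)
decoded_newswire = " ".join([reverse_word_index.get(i - 3, "?") for i in train_data[0]])
print(decoded_newswire)
```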

? ? ? said as a result of its december acquisition of space co it expects earnings per share in 1987 of 1 15 to 1 30 dlrs per share up from 70 cts in 1986 the company said pretax net should rise to nine to 10 mln dlrs from six mln dlrs in 1986 and rental operation revenues to 19 to 22 mln dlrs from 12 5 mln dlrs it said cash flow per share this year should be 2 50 to three dlrs reuter 3

```python
# Encode the data, reusing vectorize_sequences from the IMDB example
x_train = vectorize_sequences(train_data)
x_test = vectorize_sequences(test_data)

# There are two ways to vectorize the labels: cast the label list to an integer tensor,
# or use one-hot encoding. One-hot encoding is a widely used format for categorical data,
# also called categorical encoding.
def to_one_hot(labels, dimension=46):
    results = np.zeros((len(labels), dimension))
    for i, label in enumerate(labels):
        results[i, label] = 1.
    return results

one_hot_train_labels = to_one_hot(train_labels)
one_hot_test_labels = to_one_hot(test_labels)

# Built-in equivalent (in newer Keras versions: from keras.utils import to_categorical)
from keras.utils.np_utils import to_categorical

one_hot_train_labels = to_categorical(train_labels)
one_hot_test_labels = to_categorical(test_labels)
```
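A quick cross-check of the one-hot loop against a vectorized NumPy equivalent: indexing rows of an identity matrix. `argmax` inverts the encoding. The toy labels are made up. If the labels are instead kept as an integer tensor, Keras's `sparse_categorical_crossentropy` loss is the usual counterpart of `categorical_crossentropy`.

```python
import numpy as np

def to_one_hot(labels, dimension=46):
    results = np.zeros((len(labels), dimension))
    for i, label in enumerate(labels):
        results[i, label] = 1.0
    return results

labels = np.array([3, 0, 45, 3])  # toy integer topic labels
one_hot = to_one_hot(labels)

# Vectorized equivalent: pick the matching rows of the 46 x 46 identity matrix
print(np.array_equal(one_hot, np.eye(46)[labels]))  # True
# argmax along axis 1 recovers the integer labels [3, 0, 45, 3]
print(np.argmax(one_hot, axis=1))
```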

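Note:

- This topic-classification problem looks similar to the previous movie-review problem: in both cases, we are trying to classify short snippets of text. But there is a new constraint: the number of output classes has gone from 2 to 46, so the dimensionality of the output space is much larger.

- In a stack of Dense layers like the one used so far, each layer can only access the information present in the output of the previous layer. If one layer drops some information relevant to the classification problem, later layers can never recover it. In other words, each layer can potentially become an information bottleneck. The previous example used 16-dimensional intermediate layers, but a 16-dimensional space may be too limited to learn to separate 46 different classes. Such small layers may act as information bottlenecks and permanently drop relevant information.

- For this reason, larger layers with 64 units are used below.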
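One way to quantify layer sizes is to count trainable parameters. A Dense layer has input_dim × units weights plus one bias per unit. The sketch below computes the totals for the architectures tried in this note (64 units followed by 4, 32, 64 or 128 units, or no second hidden layer) and for the IMDB network. It does not measure information loss itself. The helper names are illustrative; Keras's `model.summary()` should report the same counts.

```python
def dense_params(input_dim, units):
    # weights (input_dim x units) plus one bias per unit
    return input_dim * units + units

def count_params(input_dim, hidden_units, output_units=46):
    total, prev = 0, input_dim
    for units in list(hidden_units) + [output_units]:
        total += dense_params(prev, units)
        prev = units
    return total

for hidden in [(64, 4), (64, 32), (64, 64), (64, 128), (64,)]:
    print(hidden, count_params(10000, hidden))

# IMDB network: 16 -> 16 -> 1
print(count_params(10000, (16, 16), output_units=1))  # 160305
```

In the (64, 4) variant, everything the output layer learns about 46 classes must pass through only 4 numbers per sample.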

```python
# Build the network
from keras import models
from keras import layers

model = models.Sequential()
model.add(layers.Dense(64, activation='relu', input_shape=(10000,)))
model.add(layers.Dense(64, activation='relu'))
model.add(layers.Dense(46, activation='softmax'))
```

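Note: model.add(layers.Dense(46, activation='softmax')) raised TypeError: softmax() got an unexpected keyword argument 'axis'. This most likely happens when the Keras version passes an axis argument to a TensorFlow softmax that does not accept it yet.

- Original configuration: tensorflow 1.4.0, keras 2.1.6.

- Following the CSDN blog post by dqefd2e4f1 on solving "TypeError: softmax() got an unexpected keyword argument axis", I changed the configuration to:

- tensorflow=1.5.0, keras=2.0.8, and then restarted the kernel.

- On the softmax function itself, see [Dissecting the Keras source code: the softmax activation function (yuanyuneixin1, CSDN)](https://blog.csdn.net/yuanyuneixin1/article/details/103753264).

After this change, the error no longer occurs.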

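Note:

- The network ends with a Dense layer of size 46. This means that for each input sample, the network outputs a 46-dimensional vector. Each entry (each dimension) in this vector encodes a different output class.

- The last layer uses a softmax activation, as in the MNIST example. The network outputs a probability distribution over the 46 output classes: for every input sample, it produces a 46-dimensional vector where output[i] is the probability that the sample belongs to class i. The 46 probabilities sum to 1.

- The best loss function for this case is categorical_crossentropy. It measures the distance between two probability distributions: here, the distribution output by the network and the true distribution of the labels. Minimizing the distance between these two distributions trains the network to output something as close as possible to the true labels.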
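A NumPy sketch of what softmax and categorical_crossentropy compute for a batch of 46-way outputs. The random logits stand in for the last layer's pre-activations, and the clipping constant is an assumption. With a uniform prediction, the loss equals log(46), which is a handy sanity check.

```python
import numpy as np

def softmax(z):
    # Subtracting the row max avoids overflow and does not change the result
    z = z - np.max(z, axis=-1, keepdims=True)
    e = np.exp(z)
    return e / np.sum(e, axis=-1, keepdims=True)

def categorical_crossentropy(y_true, y_pred, eps=1e-7):
    # y_true: one-hot (batch, classes); y_pred: probabilities (batch, classes)
    y_pred = np.clip(y_pred, eps, 1.0)
    return float(-np.mean(np.sum(y_true * np.log(y_pred), axis=-1)))

rng = np.random.default_rng(0)
logits = rng.standard_normal((2, 46))  # stand-in pre-activations for 2 samples
probs = softmax(logits)
print(probs.shape, probs.sum(axis=1))  # (2, 46), each row sums to 1

y_true = np.zeros((2, 46))
y_true[0, 3] = 1.0
y_true[1, 10] = 1.0
print(categorical_crossentropy(y_true, probs))
```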

```python
model.compile(optimizer='rmsprop',
              loss='categorical_crossentropy',
              metrics=['accuracy'])
```

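```python
# Set aside a validation set
from sklearn.model_selection import train_test_split

# train_test_split returns (X_train, X_test, y_train, y_test) in that order,
# so with test_size=1000 the 1000-sample split is the validation set
partial_x_train, x_val, partial_y_train, y_val = train_test_split(
    x_train, one_hot_train_labels, test_size=1000, random_state=12345)
print(partial_x_train.shape, x_val.shape, partial_y_train.shape, y_val.shape)
```

(7982, 10000) (1000, 10000) (7982, 46) (1000, 46)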

```python
# Train the model
history = model.fit(partial_x_train,
                    partial_y_train,
                    epochs=20,
                    batch_size=512,
                    validation_data=(x_val, y_val))
```

Train on 1000 samples, validate on 7982 samples

Epoch 1/20

1000/1000 [==============================] - 2s - loss: 3.7190 - acc: 0.2570 - val_loss: 3.3810 - val_acc: 0.4119

Epoch 2/20

1000/1000 [==============================] - 1s - loss: 3.1404 - acc: 0.5020 - val_loss: 2.8883 - val_acc: 0.4787

Epoch 3/20

1000/1000 [==============================] - 2s - loss: 2.5471 - acc: 0.5540 - val_loss: 2.5269 - val_acc: 0.5170

Epoch 4/20

1000/1000 [==============================] - 2s - loss: 2.0929 - acc: 0.5890 - val_loss: 2.2767 - val_acc: 0.5345

Epoch 5/20

1000/1000 [==============================] - 2s - loss: 1.7469 - acc: 0.6510 - val_loss: 2.0901 - val_acc: 0.5542

Epoch 6/20

1000/1000 [==============================] - 1s - loss: 1.4851 - acc: 0.6960 - val_loss: 1.9564 - val_acc: 0.5644

Epoch 7/20

1000/1000 [==============================] - 1s - loss: 1.2927 - acc: 0.7230 - val_loss: 1.8765 - val_acc: 0.5645

Epoch 8/20

1000/1000 [==============================] - 1s - loss: 1.1319 - acc: 0.7650 - val_loss: 1.7886 - val_acc: 0.5876

Epoch 9/20

1000/1000 [==============================] - 2s - loss: 0.9924 - acc: 0.8000 - val_loss: 1.7118 - val_acc: 0.6208

Epoch 10/20

1000/1000 [==============================] - 1s - loss: 0.8799 - acc: 0.8300 - val_loss: 1.6660 - val_acc: 0.6353

Epoch 11/20

1000/1000 [==============================] - 2s - loss: 0.7850 - acc: 0.8490 - val_loss: 1.6195 - val_acc: 0.6427

Epoch 12/20

1000/1000 [==============================] - 1s - loss: 0.7013 - acc: 0.8720 - val_loss: 1.5924 - val_acc: 0.6480

Epoch 13/20

1000/1000 [==============================] - 1s - loss: 0.6261 - acc: 0.8920 - val_loss: 1.5671 - val_acc: 0.6507

Epoch 14/20

1000/1000 [==============================] - 2s - loss: 0.5618 - acc: 0.9040 - val_loss: 1.5389 - val_acc: 0.6571

Epoch 15/20

1000/1000 [==============================] - 2s - loss: 0.5050 - acc: 0.9170 - val_loss: 1.5127 - val_acc: 0.6669

Epoch 16/20

1000/1000 [==============================] - 1s - loss: 0.4539 - acc: 0.9290 - val_loss: 1.5007 - val_acc: 0.6681

Epoch 17/20

1000/1000 [==============================] - 1s - loss: 0.4064 - acc: 0.9460 - val_loss: 1.4882 - val_acc: 0.6704

Epoch 18/20

1000/1000 [==============================] - 1s - loss: 0.3644 - acc: 0.9520 - val_loss: 1.4782 - val_acc: 0.6731

Epoch 19/20

1000/1000 [==============================] - 1s - loss: 0.3261 - acc: 0.9580 - val_loss: 1.4771 - val_acc: 0.6744

Epoch 20/20

1000/1000 [==============================] - 2s - loss: 0.2914 - acc: 0.9640 - val_loss: 1.4711 - val_acc: 0.6761

```python
history_dict = history.history
print(history_dict.keys())
```

dict_keys(['val_loss', 'val_acc', 'loss', 'acc'])

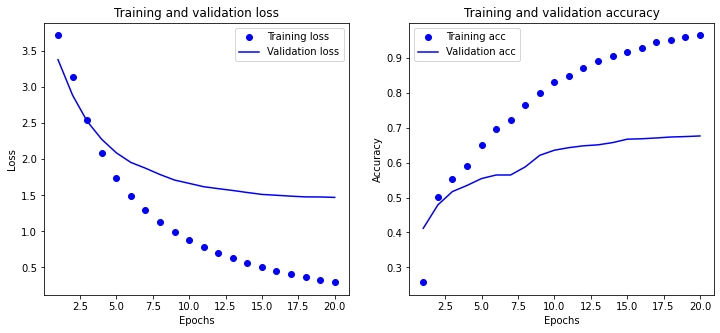

```python
import matplotlib.pyplot as plt

loss_values = history_dict['loss']
val_loss_values = history_dict['val_loss']
epochs = range(1, len(loss_values) + 1)

fig, axes = plt.subplots(nrows=1, ncols=2, figsize=(12, 5))
Axes = axes.flatten()

# Plot training and validation loss
Axes[0].plot(epochs, loss_values, 'bo', label='Training loss')
Axes[0].plot(epochs, val_loss_values, 'b', label='Validation loss')
Axes[0].set_title('Training and validation loss')
Axes[0].set_xlabel('Epochs')
Axes[0].set_ylabel('Loss')
Axes[0].legend()

# Plot training and validation accuracy
# (this Keras version logs 'acc'; newer versions use 'accuracy')
acc = history_dict['acc']
val_acc = history_dict['val_acc']
Axes[1].plot(epochs, acc, 'bo', label='Training acc')
Axes[1].plot(epochs, val_acc, 'b', label='Validation acc')
Axes[1].set_title('Training and validation accuracy')
Axes[1].set_xlabel('Epochs')
Axes[1].set_ylabel('Accuracy')
Axes[1].legend()

plt.show()
```

```python
# Retrain a new network from scratch, this time for 9 epochs
model = models.Sequential()
model.add(layers.Dense(64, activation='relu', input_shape=(10000,)))
model.add(layers.Dense(64, activation='relu'))
model.add(layers.Dense(46, activation='softmax'))

model.compile(optimizer='rmsprop',
              loss='categorical_crossentropy',
              metrics=['accuracy'])

model.fit(partial_x_train,
          partial_y_train,
          epochs=9,
          batch_size=512,
          validation_data=(x_val, y_val))
results = model.evaluate(x_test, one_hot_test_labels)
```

Train on 1000 samples, validate on 7982 samples

Epoch 1/9

1000/1000 [==============================] - 2s - loss: 3.7028 - acc: 0.2430 - val_loss: 3.3254 - val_acc: 0.4801

Epoch 2/9

1000/1000 [==============================] - 1s - loss: 3.0536 - acc: 0.5380 - val_loss: 2.7722 - val_acc: 0.5229

Epoch 3/9

1000/1000 [==============================] - 1s - loss: 2.3973 - acc: 0.5660 - val_loss: 2.3857 - val_acc: 0.5474

Epoch 4/9

1000/1000 [==============================] - 1s - loss: 1.9276 - acc: 0.6510 - val_loss: 2.1499 - val_acc: 0.5484

Epoch 5/9

1000/1000 [==============================] - 1s - loss: 1.6058 - acc: 0.6970 - val_loss: 1.9552 - val_acc: 0.5906

Epoch 6/9

1000/1000 [==============================] - 1s - loss: 1.3476 - acc: 0.7520 - val_loss: 1.8334 - val_acc: 0.6118

Epoch 7/9

1000/1000 [==============================] - 1s - loss: 1.1597 - acc: 0.7830 - val_loss: 1.7383 - val_acc: 0.6318

Epoch 8/9

1000/1000 [==============================] - 1s - loss: 1.0092 - acc: 0.8160 - val_loss: 1.6727 - val_acc: 0.6407

Epoch 9/9

1000/1000 [==============================] - 2s - loss: 0.8877 - acc: 0.8390 - val_loss: 1.6122 - val_acc: 0.6585

2208/2246 [============================>.] - ETA: 0s

```python
print(results)
```

[1.6328595385207518, 0.6607301870521858]

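```python
# Accuracy of a purely random classifier: compare the test labels with a shuffled copy
import copy

test_labels_copy = copy.copy(test_labels)
np.random.shuffle(test_labels_copy)
hits_array = np.array(test_labels) == np.array(test_labels_copy)
float(np.sum(hits_array)) / len(test_labels)
```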

0.17764915405164738

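Note:

The book's version of this approach reaches an accuracy of about 80%, while my run only reached 66%. The logs above show "Train on 1000 samples, validate on 7982 samples": the training and validation subsets were swapped, which likely accounts for much of the gap. For a balanced binary classification problem, a purely random classifier would reach 50% accuracy. In this case, a random classifier reaches about 19% (18% in my run), so the results seem fairly good, at least compared to a random baseline.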
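Why is the random baseline around 18 to 19% rather than 1/46 (about 2%)? Comparing the labels with a randomly shuffled copy matches position i with probability equal to the frequency of its class. The expected accuracy is therefore the sum of the squared class frequencies. When a few topics dominate, as seems to be the case here, that sum is far above 1/46. A sketch on toy labels (not the real test labels) compares the formula with a simulation. Both helper names are illustrative.

```python
import numpy as np

def expected_random_accuracy(labels):
    # Expected accuracy of comparing labels with a random shuffle of themselves:
    # sum over classes of (class frequency) squared
    labels = np.asarray(labels)
    p = np.bincount(labels) / len(labels)
    return float(np.sum(p ** 2))

def simulated_random_accuracy(labels, trials=1000, seed=0):
    # Monte Carlo version of the shuffle experiment above
    rng = np.random.default_rng(seed)
    labels = np.asarray(labels)
    hits = [np.mean(labels == rng.permutation(labels)) for _ in range(trials)]
    return float(np.mean(hits))

print(expected_random_accuracy([0, 0, 1, 1]))   # 0.5
print(expected_random_accuracy([0, 0, 0, 1]))   # 0.625
print(simulated_random_accuracy([0, 0, 1, 1]))  # close to 0.5
```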

```python
predictions = model.predict(x_test)
print(predictions.shape)
```

(2246, 46)

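```python
# The largest entry of each prediction is the predicted class (the class with the highest probability)
y_pred = np.argmax(predictions, axis=1)
y_true = np.argmax(one_hot_test_labels, axis=1)

from sklearn.metrics import confusion_matrix
confusion_matrix(y_true, y_pred)
```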

array([[ 0, 3, 0, ..., 0, 0, 0],

[ 0, 74, 0, ..., 0, 0, 0],

[ 0, 9, 0, ..., 0, 0, 0],

...,

[ 0, 2, 0, ..., 2, 0, 0],

[ 0, 1, 0, ..., 0, 0, 0],

[ 0, 0, 0, ..., 0, 0, 0]], dtype=int64)
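In the confusion matrix above, rows are true classes and columns are predicted classes; this is the convention of sklearn's `confusion_matrix`. A small hand-rolled version on toy labels shows how overall accuracy and per-class recall can be read from it. The `confusion` helper is hypothetical. Classes with no test samples get a recall of 0 here instead of a division by zero.

```python
import numpy as np

def confusion(y_true, y_pred, num_classes):
    # cm[i, j] counts samples whose true class is i and predicted class is j
    cm = np.zeros((num_classes, num_classes), dtype="int64")
    for t, p in zip(y_true, y_pred):
        cm[t, p] += 1
    return cm

y_true = np.array([0, 1, 1, 2, 2, 2])
y_pred = np.array([0, 1, 2, 2, 2, 1])
cm = confusion(y_true, y_pred, num_classes=3)
print(cm)

# Overall accuracy: correct predictions lie on the diagonal
accuracy = np.trace(cm) / cm.sum()
# Per-class recall: diagonal divided by row sums (0 for classes absent from y_true)
row_sums = cm.sum(axis=1)
recall = np.divide(np.diag(cm), row_sums, out=np.zeros(len(row_sums)), where=row_sums > 0)
print(accuracy, recall)
```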

```python
# The importance of sufficiently large intermediate layers: 4 hidden units -> about 58% accuracy
# Retrain a new network
model = models.Sequential()
model.add(layers.Dense(64, activation='relu', input_shape=(10000,)))
model.add(layers.Dense(4, activation='relu'))
model.add(layers.Dense(46, activation='softmax'))

model.compile(optimizer='rmsprop',
              loss='categorical_crossentropy',
              metrics=['accuracy'])

model.fit(partial_x_train,
          partial_y_train,
          epochs=20,
          batch_size=128,
          validation_data=(x_val, y_val))
results = model.evaluate(x_test, one_hot_test_labels)
print(results)
```

Train on 1000 samples, validate on 7982 samples

Epoch 1/20

1000/1000 [==============================] - 2s - loss: 3.7911 - acc: 0.0310 - val_loss: 3.7182 - val_acc: 0.0392

Epoch 2/20

1000/1000 [==============================] - 2s - loss: 3.6316 - acc: 0.0500 - val_loss: 3.6050 - val_acc: 0.0353

Epoch 3/20

1000/1000 [==============================] - 2s - loss: 3.4741 - acc: 0.0730 - val_loss: 3.4998 - val_acc: 0.0492

Epoch 4/20

1000/1000 [==============================] - 2s - loss: 3.3161 - acc: 0.0850 - val_loss: 3.3881 - val_acc: 0.0510

Epoch 5/20

1000/1000 [==============================] - 2s - loss: 3.1592 - acc: 0.0970 - val_loss: 3.2817 - val_acc: 0.0573

Epoch 6/20

1000/1000 [==============================] - 2s - loss: 2.9879 - acc: 0.1220 - val_loss: 3.1765 - val_acc: 0.1793

Epoch 7/20

1000/1000 [==============================] - 2s - loss: 2.8128 - acc: 0.2730 - val_loss: 3.0404 - val_acc: 0.2483

Epoch 8/20

1000/1000 [==============================] - 2s - loss: 2.6299 - acc: 0.2970 - val_loss: 2.9262 - val_acc: 0.2541

Epoch 9/20

1000/1000 [==============================] - 1s - loss: 2.4480 - acc: 0.3000 - val_loss: 2.8121 - val_acc: 0.2675

Epoch 10/20

1000/1000 [==============================] - 2s - loss: 2.2736 - acc: 0.3020 - val_loss: 2.6856 - val_acc: 0.2640

Epoch 11/20

1000/1000 [==============================] - 2s - loss: 2.1041 - acc: 0.3040 - val_loss: 2.5970 - val_acc: 0.2684

Epoch 12/20

1000/1000 [==============================] - 2s - loss: 1.9482 - acc: 0.3040 - val_loss: 2.4678 - val_acc: 0.2642

Epoch 13/20

1000/1000 [==============================] - 2s - loss: 1.7972 - acc: 0.3040 - val_loss: 2.4264 - val_acc: 0.2689

Epoch 14/20

1000/1000 [==============================] - 2s - loss: 1.6472 - acc: 0.4030 - val_loss: 2.3173 - val_acc: 0.5169

Epoch 15/20

1000/1000 [==============================] - 2s - loss: 1.5000 - acc: 0.6720 - val_loss: 2.2494 - val_acc: 0.5416

Epoch 16/20

1000/1000 [==============================] - 2s - loss: 1.3657 - acc: 0.6830 - val_loss: 2.1867 - val_acc: 0.5490

Epoch 17/20

1000/1000 [==============================] - 2s - loss: 1.2502 - acc: 0.6880 - val_loss: 2.0991 - val_acc: 0.5695

Epoch 18/20

1000/1000 [==============================] - 2s - loss: 1.1553 - acc: 0.6990 - val_loss: 2.0940 - val_acc: 0.5723

Epoch 19/20

1000/1000 [==============================] - 2s - loss: 1.0724 - acc: 0.7260 - val_loss: 2.0683 - val_acc: 0.5811

Epoch 20/20

1000/1000 [==============================] - 2s - loss: 1.0063 - acc: 0.7470 - val_loss: 2.0576 - val_acc: 0.5862

2176/2246 [============================>.] - ETA: 0s

[2.1026238074604144, 0.5819234194122885]

```python
# The importance of sufficiently large intermediate layers: 32 hidden units -> about 71% accuracy
# Retrain a new network
model = models.Sequential()
model.add(layers.Dense(64, activation='relu', input_shape=(10000,)))
model.add(layers.Dense(32, activation='relu'))
model.add(layers.Dense(46, activation='softmax'))

model.compile(optimizer='rmsprop',
              loss='categorical_crossentropy',
              metrics=['accuracy'])

model.fit(partial_x_train,
          partial_y_train,
          epochs=20,
          batch_size=128,
          validation_data=(x_val, y_val))
results = model.evaluate(x_test, one_hot_test_labels)
print(results)
```

Train on 1000 samples, validate on 7982 samples

Epoch 1/20

1000/1000 [==============================] - 2s - loss: 3.4260 - acc: 0.3100 - val_loss: 2.8872 - val_acc: 0.5085

Epoch 2/20

1000/1000 [==============================] - 2s - loss: 2.3377 - acc: 0.5830 - val_loss: 2.2268 - val_acc: 0.5501

Epoch 3/20

1000/1000 [==============================] - 2s - loss: 1.6347 - acc: 0.6930 - val_loss: 1.8780 - val_acc: 0.6317

Epoch 4/20

1000/1000 [==============================] - 2s - loss: 1.2201 - acc: 0.7790 - val_loss: 1.6869 - val_acc: 0.65750

Epoch 5/20

1000/1000 [==============================] - 2s - loss: 0.9387 - acc: 0.8220 - val_loss: 1.5694 - val_acc: 0.6792

Epoch 6/20

1000/1000 [==============================] - 2s - loss: 0.7306 - acc: 0.8670 - val_loss: 1.5090 - val_acc: 0.6718

Epoch 7/20

1000/1000 [==============================] - 2s - loss: 0.5763 - acc: 0.8940 - val_loss: 1.4532 - val_acc: 0.6802

Epoch 8/20

1000/1000 [==============================] - 2s - loss: 0.4569 - acc: 0.9260 - val_loss: 1.4229 - val_acc: 0.6873

Epoch 9/20

1000/1000 [==============================] - 2s - loss: 0.3643 - acc: 0.9460 - val_loss: 1.3973 - val_acc: 0.6977

Epoch 10/20

1000/1000 [==============================] - 2s - loss: 0.2897 - acc: 0.9570 - val_loss: 1.3989 - val_acc: 0.6957

Epoch 11/20

1000/1000 [==============================] - 2s - loss: 0.2319 - acc: 0.9670 - val_loss: 1.4184 - val_acc: 0.6941

Epoch 12/20

1000/1000 [==============================] - 2s - loss: 0.1817 - acc: 0.9760 - val_loss: 1.4184 - val_acc: 0.6993

Epoch 13/20

1000/1000 [==============================] - 1s - loss: 0.1427 - acc: 0.9830 - val_loss: 1.4141 - val_acc: 0.7060

Epoch 14/20

1000/1000 [==============================] - 2s - loss: 0.1132 - acc: 0.9870 - val_loss: 1.4141 - val_acc: 0.7075

Epoch 15/20

1000/1000 [==============================] - 2s - loss: 0.0877 - acc: 0.9900 - val_loss: 1.4143 - val_acc: 0.7156

Epoch 16/20

1000/1000 [==============================] - 2s - loss: 0.0673 - acc: 0.9910 - val_loss: 1.4332 - val_acc: 0.7127

Epoch 17/20

1000/1000 [==============================] - 2s - loss: 0.0551 - acc: 0.9910 - val_loss: 1.4719 - val_acc: 0.7126

Epoch 18/20

1000/1000 [==============================] - 2s - loss: 0.0434 - acc: 0.9930 - val_loss: 1.4835 - val_acc: 0.7130

Epoch 19/20

1000/1000 [==============================] - 2s - loss: 0.0354 - acc: 0.9960 - val_loss: 1.5006 - val_acc: 0.7161

Epoch 20/20

1000/1000 [==============================] - 2s - loss: 0.0295 - acc: 0.9930 - val_loss: 1.5302 - val_acc: 0.7156

2176/2246 [============================>.] - ETA: 0s

[1.581864387141947, 0.7052537845588643]

```python
# The importance of intermediate layer size: 128 hidden units -> about 71.6% accuracy,
# hardly better than with 32 units
# Retrain a new network
model = models.Sequential()
model.add(layers.Dense(64, activation='relu', input_shape=(10000,)))
model.add(layers.Dense(128, activation='relu'))
model.add(layers.Dense(46, activation='softmax'))

model.compile(optimizer='rmsprop',
              loss='categorical_crossentropy',
              metrics=['accuracy'])

model.fit(partial_x_train,
          partial_y_train,
          epochs=20,
          batch_size=128,
          validation_data=(x_val, y_val))
results = model.evaluate(x_test, one_hot_test_labels)
print(results)
```

Train on 1000 samples, validate on 7982 samples

Epoch 1/20

1000/1000 [==============================] - 2s - loss: 3.0798 - acc: 0.4140 - val_loss: 2.2990 - val_acc: 0.4930

Epoch 2/20

1000/1000 [==============================] - 2s - loss: 1.7348 - acc: 0.6320 - val_loss: 1.7799 - val_acc: 0.5948

Epoch 3/20

1000/1000 [==============================] - 2s - loss: 1.2176 - acc: 0.7360 - val_loss: 1.5994 - val_acc: 0.6490

Epoch 4/20

1000/1000 [==============================] - 2s - loss: 0.9145 - acc: 0.8090 - val_loss: 1.4957 - val_acc: 0.6783

Epoch 5/20

1000/1000 [==============================] - 2s - loss: 0.6999 - acc: 0.8580 - val_loss: 1.4199 - val_acc: 0.6887

Epoch 6/20

1000/1000 [==============================] - 2s - loss: 0.5349 - acc: 0.9080 - val_loss: 1.3807 - val_acc: 0.6999

Epoch 7/20

1000/1000 [==============================] - 2s - loss: 0.4050 - acc: 0.9320 - val_loss: 1.3455 - val_acc: 0.7050

Epoch 8/20

1000/1000 [==============================] - 2s - loss: 0.3031 - acc: 0.9630 - val_loss: 1.3325 - val_acc: 0.7124

Epoch 9/20

1000/1000 [==============================] - 2s - loss: 0.2237 - acc: 0.9750 - val_loss: 1.3138 - val_acc: 0.7226

Epoch 10/20

1000/1000 [==============================] - 2s - loss: 0.1591 - acc: 0.9820 - val_loss: 1.3621 - val_acc: 0.7115

Epoch 11/20

1000/1000 [==============================] - 2s - loss: 0.1145 - acc: 0.9840 - val_loss: 1.3353 - val_acc: 0.7273

Epoch 12/20

1000/1000 [==============================] - 2s - loss: 0.0801 - acc: 0.9910 - val_loss: 1.3492 - val_acc: 0.7221

Epoch 13/20

1000/1000 [==============================] - 2s - loss: 0.0603 - acc: 0.9930 - val_loss: 1.3493 - val_acc: 0.7334

Epoch 14/20

1000/1000 [==============================] - 2s - loss: 0.0460 - acc: 0.9940 - val_loss: 1.3759 - val_acc: 0.7259

Epoch 15/20

1000/1000 [==============================] - 2s - loss: 0.0335 - acc: 0.9950 - val_loss: 1.3890 - val_acc: 0.7331

Epoch 16/20

1000/1000 [==============================] - 2s - loss: 0.0267 - acc: 0.9950 - val_loss: 1.4839 - val_acc: 0.7211

Epoch 17/20

1000/1000 [==============================] - 2s - loss: 0.0217 - acc: 0.9940 - val_loss: 1.4429 - val_acc: 0.7296

Epoch 18/20

1000/1000 [==============================] - 2s - loss: 0.0169 - acc: 0.9950 - val_loss: 1.4571 - val_acc: 0.7365

Epoch 19/20

1000/1000 [==============================] - 2s - loss: 0.0154 - acc: 0.9950 - val_loss: 1.4853 - val_acc: 0.7280

Epoch 20/20

1000/1000 [==============================] - 2s - loss: 0.0112 - acc: 0.9960 - val_loss: 1.5115 - val_acc: 0.7313

2112/2246 [===========================>..] - ETA: 0s

[1.5476579676752948, 0.7159394479604672]

# Testing how much the intermediate layer size matters: a single hidden layer gives 72% accuracy

# Retrain a new network from scratch

model=models.Sequential()

model.add(layers.Dense(64,activation='relu',input_shape=(10000,)))

#model.add(layers.Dense(32,activation='relu'))

model.add(layers.Dense(46,activation='softmax'))

model.compile(

optimizer='rmsprop',

loss='categorical_crossentropy',

metrics=['accuracy'])

model.fit(partial_x_train,

partial_y_train,

epochs=20,

batch_size=128,

validation_data=(x_val,y_val))

results=model.evaluate(x_test,one_hot_test_labels)

print(results)

Train on 1000 samples, validate on 7982 samples

Epoch 1/20

1000/1000 [==============================] - 2s - loss: 3.3507 - acc: 0.4410 - val_loss: 2.7851 - val_acc: 0.5682

Epoch 2/20

1000/1000 [==============================] - 1s - loss: 2.2370 - acc: 0.6660 - val_loss: 2.1916 - val_acc: 0.6245

Epoch 3/20

1000/1000 [==============================] - 2s - loss: 1.5662 - acc: 0.7600 - val_loss: 1.8646 - val_acc: 0.6506

Epoch 4/20

1000/1000 [==============================] - 2s - loss: 1.1541 - acc: 0.8100 - val_loss: 1.6695 - val_acc: 0.6706

Epoch 5/20

1000/1000 [==============================] - 2s - loss: 0.8744 - acc: 0.8590 - val_loss: 1.5402 - val_acc: 0.6867

Epoch 6/20

1000/1000 [==============================] - 2s - loss: 0.6714 - acc: 0.8980 - val_loss: 1.4544 - val_acc: 0.6971

Epoch 7/20

1000/1000 [==============================] - 2s - loss: 0.5207 - acc: 0.9270 - val_loss: 1.3934 - val_acc: 0.7037

Epoch 8/20

1000/1000 [==============================] - 2s - loss: 0.4029 - acc: 0.9510 - val_loss: 1.3484 - val_acc: 0.7110

Epoch 9/20

1000/1000 [==============================] - 2s - loss: 0.3116 - acc: 0.9620 - val_loss: 1.3183 - val_acc: 0.7141

Epoch 10/20

1000/1000 [==============================] - 2s - loss: 0.2415 - acc: 0.9720 - val_loss: 1.3024 - val_acc: 0.7176

Epoch 11/20

1000/1000 [==============================] - 2s - loss: 0.1882 - acc: 0.9820 - val_loss: 1.2922 - val_acc: 0.7202

Epoch 12/20

1000/1000 [==============================] - 2s - loss: 0.1451 - acc: 0.9850 - val_loss: 1.2912 - val_acc: 0.7216

Epoch 13/20

1000/1000 [==============================] - 2s - loss: 0.1145 - acc: 0.9900 - val_loss: 1.2879 - val_acc: 0.7241

Epoch 14/20

1000/1000 [==============================] - 2s - loss: 0.0894 - acc: 0.9910 - val_loss: 1.2864 - val_acc: 0.7276

Epoch 15/20

1000/1000 [==============================] - 2s - loss: 0.0712 - acc: 0.9920 - val_loss: 1.2991 - val_acc: 0.7274

Epoch 16/20

1000/1000 [==============================] - 2s - loss: 0.0564 - acc: 0.9920 - val_loss: 1.3104 - val_acc: 0.7279

Epoch 17/20

1000/1000 [==============================] - 2s - loss: 0.0457 - acc: 0.9910 - val_loss: 1.3191 - val_acc: 0.7301

Epoch 18/20

1000/1000 [==============================] - 2s - loss: 0.0385 - acc: 0.9910 - val_loss: 1.3317 - val_acc: 0.7296

Epoch 19/20

1000/1000 [==============================] - 2s - loss: 0.0290 - acc: 0.9940 - val_loss: 1.3557 - val_acc: 0.7283

Epoch 20/20

1000/1000 [==============================] - 2s - loss: 0.0275 - acc: 0.9940 - val_loss: 1.3766 - val_acc: 0.7288

2240/2246 [============================>.] - ETA: 0s

[1.4121944055107165, 0.716384683935534]

# Testing how much the intermediate layer size matters: three hidden layers give 64% accuracy

# Retrain a new network from scratch

model=models.Sequential()

model.add(layers.Dense(64,activation='relu',input_shape=(10000,)))

model.add(layers.Dense(128,activation='relu'))

model.add(layers.Dense(32,activation='relu'))

model.add(layers.Dense(46,activation='softmax'))

model.compile(

optimizer='rmsprop',

loss='categorical_crossentropy',

metrics=['accuracy'])

model.fit(partial_x_train,

partial_y_train,

epochs=20,

batch_size=128,

validation_data=(x_val,y_val))

results=model.evaluate(x_test,one_hot_test_labels)

print(results)

Train on 1000 samples, validate on 7982 samples

Epoch 1/20

1000/1000 [==============================] - 2s - loss: 3.2393 - acc: 0.3370 - val_loss: 2.4314 - val_acc: 0.4326

Epoch 2/20

1000/1000 [==============================] - 2s - loss: 1.9808 - acc: 0.5450 - val_loss: 1.9284 - val_acc: 0.5823

Epoch 3/20

1000/1000 [==============================] - 2s - loss: 1.4943 - acc: 0.6850 - val_loss: 1.7668 - val_acc: 0.6082

Epoch 4/20

1000/1000 [==============================] - 2s - loss: 1.1805 - acc: 0.7450 - val_loss: 1.6545 - val_acc: 0.6267

Epoch 5/20

1000/1000 [==============================] - 2s - loss: 0.9341 - acc: 0.7920 - val_loss: 1.5798 - val_acc: 0.6635

Epoch 6/20

1000/1000 [==============================] - 2s - loss: 0.7301 - acc: 0.8450 - val_loss: 1.5412 - val_acc: 0.6773

Epoch 7/20

1000/1000 [==============================] - 2s - loss: 0.5585 - acc: 0.8890 - val_loss: 1.4969 - val_acc: 0.6868

Epoch 8/20

1000/1000 [==============================] - 2s - loss: 0.4301 - acc: 0.9070 - val_loss: 1.5158 - val_acc: 0.6839

Epoch 9/20

1000/1000 [==============================] - 2s - loss: 0.3289 - acc: 0.9290 - val_loss: 1.5401 - val_acc: 0.6721

Epoch 10/20

1000/1000 [==============================] - 2s - loss: 0.2564 - acc: 0.9500 - val_loss: 1.5659 - val_acc: 0.6892

Epoch 11/20

1000/1000 [==============================] - 2s - loss: 0.1939 - acc: 0.9610 - val_loss: 1.5217 - val_acc: 0.7023

Epoch 12/20

1000/1000 [==============================] - 2s - loss: 0.1388 - acc: 0.9840 - val_loss: 1.5936 - val_acc: 0.6944

Epoch 13/20

1000/1000 [==============================] - 2s - loss: 0.1036 - acc: 0.9880 - val_loss: 1.5633 - val_acc: 0.7061

Epoch 14/20

1000/1000 [==============================] - 2s - loss: 0.0720 - acc: 0.9910 - val_loss: 1.7625 - val_acc: 0.6729

Epoch 15/20

1000/1000 [==============================] - 2s - loss: 0.0537 - acc: 0.9920 - val_loss: 1.6561 - val_acc: 0.6944

Epoch 16/20

1000/1000 [==============================] - 2s - loss: 0.0421 - acc: 0.9930 - val_loss: 1.7160 - val_acc: 0.6992

Epoch 17/20

1000/1000 [==============================] - 2s - loss: 0.0298 - acc: 0.9950 - val_loss: 1.8821 - val_acc: 0.6776

Epoch 18/20

1000/1000 [==============================] - 2s - loss: 0.0261 - acc: 0.9940 - val_loss: 1.7448 - val_acc: 0.6994

Epoch 19/20

1000/1000 [==============================] - 2s - loss: 0.0220 - acc: 0.9940 - val_loss: 1.8322 - val_acc: 0.7021

Epoch 20/20

1000/1000 [==============================] - 2s - loss: 0.0146 - acc: 0.9960 - val_loss: 2.0921 - val_acc: 0.6634

2208/2246 [============================>.] - ETA: 0s

[2.1851936431198595, 0.6447016919232433]
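The three experiments above differ only in their hidden layers. As a rough check on model capacity, the sketch below counts the trainable parameters of each stack of Dense layers (an in_dim × units weight matrix plus one bias per unit, which is how Keras' model.summary() counts a Dense layer). The helper name dense_param_counts is hypothetical. The totals come out almost the same because the first 10000→64 layer dominates; what changes is the narrowest hidden layer, which is 32 units in the three-hidden-layer setup, fewer than the 46 output classes. One plausible reading of that setup's lower 64% accuracy is the information-bottleneck point made in the summary that follows, though single runs like these do not prove it.

```python
def dense_param_counts(input_dim, units):
    """Return the trainable parameter count of each Dense layer in a stack.

    A Dense layer with u units fed by in_dim inputs has an in_dim x u weight
    matrix plus u biases.
    """
    counts = []
    in_dim = input_dim
    for u in units:
        counts.append(in_dim * u + u)  # weights + biases
        in_dim = u  # this layer's output is the next layer's input
    return counts


# Layer sizes of the three setups tried above (10000-dim input, 46 classes)
architectures = {
    "64-128-46": [64, 128, 46],
    "64-46": [64, 46],
    "64-128-32-46": [64, 128, 32, 46],
}

for name, units in architectures.items():
    counts = dense_param_counts(10000, units)
    # min over hidden layers only (the last entry is the 46-way output layer)
    print(name, counts, "total:", sum(counts), "narrowest hidden layer:", min(units[:-1]))
```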

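Summary:

- If you are classifying data points among N classes, the network's last layer should be a Dense layer of size N.

- In a single-label, multiclass classification problem, the network's last layer should use a softmax activation, so that it outputs a probability distribution over the N output classes.

- For this kind of problem the loss function should almost always be categorical crossentropy. It minimizes the distance between the probability distribution output by the network and the true distribution of the targets.

- There are two ways to handle labels in multiclass classification:

  - Encode the labels with categorical encoding (also called one-hot encoding) and use categorical_crossentropy as the loss function.

  - Encode the labels as integers and use the sparse_categorical_crossentropy loss function.

- If you need to classify data into a large number of categories, avoid intermediate layers that are too small, so as not to create information bottlenecks in the network.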
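The softmax output and the two label encodings in this summary can be illustrated with a small NumPy sketch: softmax turns raw scores into a probability distribution, and categorical crossentropy on one-hot labels gives the same value as sparse categorical crossentropy on integer labels. The functions below are hypothetical re-implementations for illustration only, not Keras' own code (Keras additionally guards against log(0), which this sketch omits).

```python
import numpy as np


def softmax(logits):
    # Subtract each row's max for numerical stability; every row then sums to 1
    z = logits - logits.max(axis=-1, keepdims=True)
    e = np.exp(z)
    return e / e.sum(axis=-1, keepdims=True)


def categorical_crossentropy(one_hot, probs):
    # Mean over samples of -sum(y_true * log(y_pred))
    return float(np.mean(-np.sum(one_hot * np.log(probs), axis=-1)))


def sparse_categorical_crossentropy(int_labels, probs):
    # Same loss, but picks the probability of the true class by its integer index
    return float(np.mean(-np.log(probs[np.arange(len(int_labels)), int_labels])))


logits = np.array([[2.0, 1.0, 0.1],
                   [0.5, 2.5, 0.3]])
probs = softmax(logits)
int_labels = np.array([0, 1])
one_hot = np.eye(3)[int_labels]  # one-hot encoding of the integer labels

print(probs.sum(axis=1))  # each row sums to 1
print(categorical_crossentropy(one_hot, probs))
print(sparse_categorical_crossentropy(int_labels, probs))
```

Both losses reduce to the negative log of the probability assigned to the true class, so the choice between them is only about how the labels are stored.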

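Case 3: Predicting house prices (a regression problem)

Dataset: the Boston Housing Price dataset

Task: predict the median price of homes in Boston suburbs in the mid-1970s, given data points about the suburbs at the time, such as the crime rate and the local property tax rate. This dataset differs from the two previous examples in an interesting way: it has relatively few data points, only 506, split into 404 training samples and 102 test samples. In addition, each feature of the input data (for example, the crime rate) has a different scale. Some features are proportions, with values between 0 and 1; others take values between 1 and 12, others between 0 and 100, and so on.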

# Load the data

from keras.datasets import boston_housing

(train_data,train_targets),(test_data,test_targets)=boston_housing.load_data()

print(train_data.shape,test_data.shape)

(404, 13) (102, 13)

test_targets

# Prices are in thousands of dollars (unit: ×1000)

array([ 7.2, 18.8, 19. , 27. , 22.2, 24.5, 31.2, 22.9, 20.5, 23.2, 18.6,

14.5, 17.8, 50. , 20.8, 24.3, 24.2, 19.8, 19.1, 22.7, 12. , 10.2,

20. , 18.5, 20.9, 23. , 27.5, 30.1, 9.5, 22. , 21.2, 14.1, 33.1,

23.4, 20.1, 7.4, 15.4, 23.8, 20.1, 24.5, 33. , 28.4, 14.1, 46.7,

32.5, 29.6, 28.4, 19.8, 20.2, 25. , 35.4, 20.3, 9.7, 14.5, 34.9,

26.6, 7.2, 50. , 32.4, 21.6, 29.8, 13.1, 27.5, 21.2, 23.1, 21.9,

13. , 23.2, 8.1, 5.6, 21.7, 29.6, 19.6, 7. , 26.4, 18.9, 20.9,

28.1, 35.4, 10.2, 24.3, 43.1, 17.6, 15.4, 16.2, 27.1, 21.4, 21.5,

22.4, 25. , 16.6, 18.6, 22. , 42.8, 35.1, 21.5, 36. , 21.9, 24.1,

50. , 26.7, 25. ])

np.mean(test_targets)

23.07843137254902

# Standardize the features and the targets

from sklearn.preprocessing import StandardScaler

data_scaler=StandardScaler().fit(train_data)

train_data=data_scaler.transform(train_data)

test_data=data_scaler.transform(test_data)

target_scaler=StandardScaler().fit(train_targets.reshape((-1,1)))

train_targets=target_scaler.transform(train_targets.reshape((-1,1)))

train_targets=train_targets.flatten()

test_targets=target_scaler.transform(test_targets.reshape((-1,1)))

test_targets=test_targets.flatten()
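StandardScaler standardizes each feature (column) as (x − mean) / std, with mean and std fitted on the training data only; the test data is then transformed with those same training statistics, which is what the code above does. A NumPy-only sketch of the same idea follows; the function names and toy values are illustrative assumptions, not taken from the dataset.

```python
import numpy as np


def fit_standardizer(train):
    # Feature-wise statistics computed from the training data only (axis 0 = samples)
    mean = train.mean(axis=0)
    std = train.std(axis=0)  # population std (ddof=0), as StandardScaler uses
    return mean, std


def standardize(x, mean, std):
    return (x - mean) / std


# Toy data: a proportion-like feature (0 to 1) and a score-like feature (0 to 100)
train = np.array([[0.1, 20.0],
                  [0.5, 60.0],
                  [0.9, 100.0]])
test = np.array([[0.3, 40.0]])

mean, std = fit_standardizer(train)
train_std = standardize(train, mean, std)
test_std = standardize(test, mean, std)  # reuse the training mean/std for the test set

print(train_std.mean(axis=0), train_std.std(axis=0))  # about 0 and 1 for each feature
print(test_std)
```

Fitting the statistics on the test set as well would leak information about it into preprocessing, which is why only the training statistics are used.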

# Define the model

from keras import models
from keras import layers

def build_model():
    # Returns a freshly compiled model, so it can be rebuilt for every fold
    model=models.Sequential()
    model.add(layers.Dense(64,activation='relu',
                           input_shape=(train_data.shape[1],)))
    model.add(layers.Dense(64,activation='relu'))
    model.add(layers.Dense(1))
    model.compile(optimizer='rmsprop',loss='mse',metrics=['mae'])
    return model

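Notes:

- The network ends with a single unit and no activation, i.e. a linear layer. This is the typical setup for scalar regression (regression that predicts a single continuous value). Applying an activation function would constrain the range of the output: for example, with a sigmoid activation on the last layer, the network could only learn to predict values between 0 and 1. Because the last layer here is purely linear, the network is free to learn to predict values in any range.

- The network is compiled with the mse loss function, the mean squared error: the mean of the squared differences between the predictions and the targets. This is a widely used loss function for regression problems.

- A new metric is also monitored during training: the mean absolute error (MAE), the mean of the absolute differences between the predictions and the targets. For example, with targets in their original units (thousands of dollars), an MAE of 0.5 on this problem would mean the predictions are off by $500 on average. Note that the code above also standardizes the targets, so the MAE values it reports are in units of the target standard deviation rather than thousands of dollars.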
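Both quantities in these notes are simple averages over the samples. A minimal NumPy sketch, with hypothetical function names and made-up prices in thousands of dollars, shows how they are computed and that an MAE of 0.5 corresponds to being off by $500 on average:

```python
import numpy as np


def mse(y_true, y_pred):
    # Mean of the squared differences
    return float(np.mean((y_true - y_pred) ** 2))


def mae(y_true, y_pred):
    # Mean of the absolute differences
    return float(np.mean(np.abs(y_true - y_pred)))


# Made-up prices in thousands of dollars (not taken from the dataset)
y_true = np.array([20.0, 30.0, 25.0])
y_pred = np.array([20.5, 29.0, 25.0])

print(mse(y_true, y_pred))
print(mae(y_true, y_pred))  # 0.5, i.e. off by $500 on average
```

Squaring makes MSE penalize the single 1.0 miss much more than the 0.5 miss, whereas MAE weighs errors linearly, which is one reason MAE is easier to read as "dollars off on average".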

# K-fold cross-validation

import numpy as np

k=4
num_val_samples=len(train_data)//k
num_epochs=500
all_mae_histories=[]

for i in range(k):
    print('processing fold #',i)
    # Validation data: the samples of fold i
    val_data=train_data[i*num_val_samples:(i+1)*num_val_samples]
    val_targets=train_targets[i*num_val_samples:(i+1)*num_val_samples]
    # Training data: the samples of all the other folds
    partial_train_data=np.concatenate(
        [train_data[:i*num_val_samples],train_data[(i+1)*num_val_samples:]],
        axis=0)
    #print(partial_train_data.shape)
    partial_train_targets=np.concatenate(
        [train_targets[:i*num_val_samples],train_targets[(i+1)*num_val_samples:]],
        axis=0)
    # Build a compiled Keras model
    model=build_model()
    # Train the model (silent mode, verbose=0)
    history=model.fit(partial_train_data,partial_train_targets,epochs=num_epochs,
                      batch_size=1,verbose=0,validation_data=(val_data,val_targets))
    #val_mse,val_mae=model.evaluate(val_data,val_targets,verbose=0)
    history_dict=history.history
    print(history_dict.keys())
    # In newer Keras versions this key is named 'val_mae'
    mae_history=history_dict['val_mean_absolute_error']
    all_mae_histories.append(mae_history)

processing fold # 0

dict_keys(['val_loss', 'val_mean_absolute_error', 'loss', 'mean_absolute_error'])

processing fold # 1

dict_keys(['val_loss', 'val_mean_absolute_error', 'loss', 'mean_absolute_error'])

processing fold # 2

dict_keys(['val_loss', 'val_mean_absolute_error', 'loss', 'mean_absolute_error'])

processing fold # 3

dict_keys(['val_loss', 'val_mean_absolute_error', 'loss', 'mean_absolute_error'])
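The loop above splits the training set into k contiguous folds by slicing. A NumPy sketch of just the index bookkeeping, under the hypothetical name kfold_indices, makes the split easy to check in isolation; like the loop, it does not shuffle. (scikit-learn's sklearn.model_selection.KFold provides a similar, optionally shuffled, split.)

```python
import numpy as np


def kfold_indices(num_samples, k):
    """Yield (train_idx, val_idx) for k contiguous folds, mirroring the slicing above.

    If num_samples is not divisible by k, the leftover samples at the end only
    ever appear in the training folds, exactly as in the loop above.
    """
    fold_size = num_samples // k
    all_idx = np.arange(num_samples)
    for i in range(k):
        val_idx = all_idx[i * fold_size:(i + 1) * fold_size]
        train_idx = np.concatenate([all_idx[:i * fold_size], all_idx[(i + 1) * fold_size:]])
        yield train_idx, val_idx


folds = list(kfold_indices(404, 4))  # 404 training samples, as in this dataset
for train_idx, val_idx in folds:
    print(len(train_idx), len(val_idx))  # 303 training / 101 validation samples per fold
```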

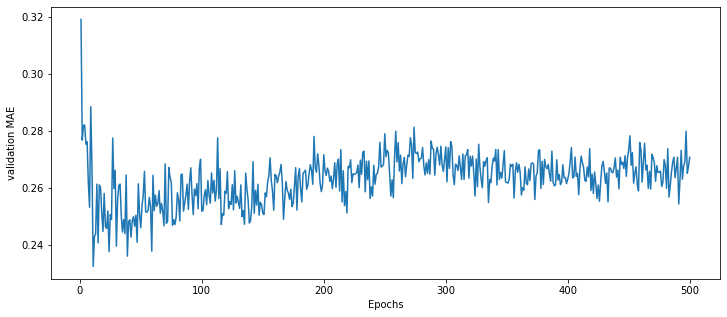

# For each epoch, average the validation MAE across the K folds

average_mae_history=[
    np.mean([x[i] for x in all_mae_histories]) for i in range(num_epochs)]

# Plot the validation scores

import matplotlib.pyplot as plt

fig=plt.figure(figsize=(12,5))

plt.plot(range(1,len(average_mae_history)+1),average_mae_history)

plt.xlabel('Epochs')

plt.ylabel('validation MAE')

plt.show()
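The list comprehension above averages, for each epoch i, the i-th validation MAE across the k folds. Since all_mae_histories is a k × num_epochs nested list, the same result can be obtained with a single vectorized call, np.mean(..., axis=0). The sketch below checks the equivalence on made-up numbers standing in for the real histories.

```python
import numpy as np

# Made-up stand-in for all_mae_histories: k=3 folds x 4 epochs
all_mae_histories = [
    [3.0, 2.5, 2.2, 2.1],
    [3.2, 2.7, 2.4, 2.3],
    [2.8, 2.3, 2.0, 1.9],
]
num_epochs = 4

# Form used above: for epoch i, average the i-th value of every fold
average_loop = [np.mean([x[i] for x in all_mae_histories]) for i in range(num_epochs)]

# Vectorized form: rows are folds, columns are epochs, so average over axis 0
average_vec = np.mean(all_mae_histories, axis=0)

print(average_vec)
```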

The MAE is still on the high side; increasing num_epochs may allow the model to be optimized further.

# Train the final model on all of the training data

model=build_model()

history=model.fit(train_data,train_targets,

epochs=80,batch_size=16,verbose=0)

test_mse_score,test_mae_score=model.evaluate(test_data,test_targets)

32/102 [========>.....................] - ETA: 1s

test_mse_score

0.17438595084583058

test_mae_score

0.26517656153323604

target_scaler.inverse_transform([test_mae_score])

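# Read at face value, this says the predictions are off by 24.83*1000 dollars, far from the book's figure of 2,550 dollars. However, inverse_transform computes value*std + mean, which is meant for converting predictions, not errors: for an MAE the mean cancels out and only the std applies, so the MAE in thousands of dollars is test_mae_score*target_scaler.scale_[0].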

array([24.83441809])
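The following NumPy sketch illustrates why inverse_transform is not the right way to convert an error back to the original units. A standardized value is z = (y − mean) / std, so the difference of two standardized values is (y_true − y_pred) / std and the mean cancels out. An MAE computed on standardized targets therefore only needs to be multiplied by std, which for the scaler above would be test_mae_score * target_scaler.scale_[0]. The mean and std below are assumed values standing in for the fitted scaler's mean_ and scale_, and the prices are made up.

```python
import numpy as np

# Assumed scaler statistics (stand-ins for target_scaler.mean_[0] and target_scaler.scale_[0])
mean, std = 25.0, 10.0

# Made-up targets and predictions in thousands of dollars
y_true = np.array([10.0, 20.0, 30.0, 40.0])
y_pred = np.array([12.0, 19.0, 33.0, 38.0])

# What evaluate() reports when targets and predictions live in standardized units
z_true = (y_true - mean) / std
z_pred = (y_pred - mean) / std
mae_scaled = np.mean(np.abs(z_true - z_pred))

mae_correct = mae_scaled * std       # rescale the error only: the mean cancels in a difference
mae_wrong = mae_scaled * std + mean  # what inverse_transform computes: it also adds the mean back
mae_direct = np.mean(np.abs(y_true - y_pred))  # reference: MAE computed in the original units

print(mae_direct, mae_correct, mae_wrong)
```

By the same reasoning, the 24.83 above is most likely the training-target mean plus the real MAE, so the actual test MAE is probably far below $24,830 and may well be close to the book's figure; re-evaluating with scale_[0] would confirm it.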

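Summary:

- Regression uses different loss functions than classification. A common loss function for regression is mean squared error (MSE).

- Similarly, the evaluation metrics used for regression differ from those used for classification; naturally, the concept of accuracy does not apply to regression. A common regression metric is mean absolute error (MAE).

- When the features of the input data have values in different ranges, each feature should be scaled independently as a preprocessing step.

- When little data is available, K-fold validation is a reliable way to evaluate a model.

- When little training data is available, it is preferable to use a small network with few hidden layers (typically only one or two) to avoid severe overfitting.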

浙公網(wǎng)安備 33010602011771號
